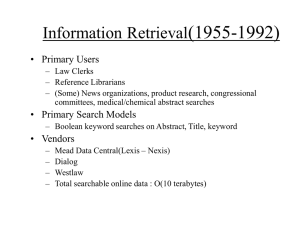



Information Retrieval (1955-1992)

- Primary users
  - Law clerks
  - Reference librarians
  - (Some) news organizations, product research, congressional committees, medical/chemical abstract searches
- Primary search modes
  - Boolean keyword searches on abstract, title and keywords
- Vendors
  - Mead Data Central (Lexis-Nexis)
  - Dialog
  - Westlaw
- Total searchable online data: O(10 terabytes)

Information Retrieval (1993+)

- Primary users
  - First-time computer users
  - Novices
- Primary search modes
  - Still Boolean keyword searches, with limited probabilistic models
  - But FULL-TEXT retrieval
- Vendors
  - Lycos, Infoseek, Yahoo, Excite, AltaVista, Google
- Total online data: ???

Growth of the Web

[Figure: number of web sites (or volume of web traffic) from 1992 to 1998, growing exponentially, with Mosaic and Netscape marked on the timeline.]

- Volume doubling every 6 months

Observation

- Early IR systems essentially extended library catalog systems, allowing
  - keyword searches,
  - limited abstract searches in addition to author/title/subject searches, with Boolean combinations.
- IR was seen as reference retrieval (full documents still had to be ordered and delivered by hand).

In contrast

Today, IR has a much wider role in the age of digital libraries:

- Full-document retrieval (hypertext, PostScript, or optical-image (TIFF) representations)
- Question answering

Old view

Queries of the form "… AND … OR …". Function of IR: map queries to relevant documents.

New view

Function of IR: satisfy the user's information need. Infer goals and the information need from:

- the query itself
- the user's past query history
- user profiling (aol.com vs. CS dept.)
- collective analysis of other users' feedback on similar queries

In addition, return information in a format useful and intelligible to the user:

- weighted orderings
- clusterings of documents by different attributes
- visualization tools
- text-understanding techniques that extract the answer to a question, or at least the relevant subregion of text

Example: "Who is the current mayor of Columbus, Ohio?"
We don't need the full AP/CNN article on city scandals, just the answer (and an available source as proof).

Boolean systems

Function #1: provide a fast, compact index into the database (of documents or references).

- Data structure: inverted file, mapping each term (e.g., Chihuahua, Nanny) to the places where it occurs
- Index options (granularity):
  - document number
  - page number within the document
  - actual word offset

Boolean operations

- Chihuahua AND Nanny → join (intersection) of the two postings lists
- Chihuahua OR Nanny → union of the two postings lists
- Proximity searches: Chihuahua W/3 Nanny (Chihuahua within 3 words of Nanny); these require word-offset granularity in the index

Vector IR model

Find an optimal function $f(\cdot)$ that maps each document $d_i$ to a vector $V_i = f(d_i)$, so that similarity between vectors mirrors similarity between the underlying documents:

- $\mathrm{Sim}(V_1, V_2) = \mathrm{Sim}'(d_1, d_2)$
- $\mathrm{Sim}(V_i, V_Q) = \mathrm{Sim}'(d_i, Q)$, where $V_Q = f(Q)$ is the query vector

Sim is typically the cosine measure between the two vectors.

Query vector models

Each document $D_i$ is mapped to a (bit) vector $V_i$ capturing its essence or meaning, and the query $Q_1$ is mapped into the same space. Retrieval finds the $V_i$ that maximizes $\mathrm{Sim}(V_i, Q_1)$.

Dimensionality reduction

$V_1 = f(d_1)$ (initial term-vector representation) → dimensionality reduction (SVD/LSI) → $\hat{V}_1$, a more compact, reduced-dimensionality model of $d_1$.

For example, a document D1 that mentions Japanese, Japan, Nippon and Nihon spreads one concept over several term dimensions; a reduced model can collapse them into one.
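The inverted file and the three Boolean operations described earlier can be sketched as follows. This is a minimal illustration, assuming word-offset granularity (document id plus word position), whitespace tokenization, and that W/k means "the two word offsets differ by at most k"; the function names `build_index`, `and_query`, `or_query` and `within` are hypothetical, not taken from any particular system.

```python
from collections import defaultdict

def build_index(docs):
    """Inverted file: term -> {doc_id: [word offsets]} (word-offset granularity)."""
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in docs.items():
        for offset, word in enumerate(text.lower().split()):
            index[word][doc_id].append(offset)
    return index

def and_query(index, a, b):
    """a AND b: join (intersection) of the documents containing each term."""
    return sorted(set(index.get(a, {})) & set(index.get(b, {})))

def or_query(index, a, b):
    """a OR b: union of the documents containing either term."""
    return sorted(set(index.get(a, {})) | set(index.get(b, {})))

def within(index, a, b, k):
    """a W/k b: documents where some occurrences of a and b are at most k words apart."""
    hits = []
    for doc_id in and_query(index, a, b):
        if any(abs(i - j) <= k for i in index[a][doc_id] for j in index[b][doc_id]):
            hits.append(doc_id)
    return hits

docs = {
    1: "the chihuahua bit the nanny",
    2: "a nanny for hire",
    3: "chihuahua breeding tips from a nanny who trains dogs daily",
}
idx = build_index(docs)
print(and_query(idx, "chihuahua", "nanny"))  # [1, 3]
print(or_query(idx, "chihuahua", "nanny"))   # [1, 2, 3]
print(within(idx, "chihuahua", "nanny", 3))  # [1]
```

Document 3 contains both terms but five words apart, so it satisfies AND but not W/3; with only document-number granularity the proximity query could not be answered at all.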
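The vector model leaves the mapping $f(\cdot)$ open. The sketch below assumes the simplest choice, a raw term-frequency vector over whitespace tokens, and uses cosine similarity as Sim; `term_vector`, `cosine` and `best_match` are hypothetical names used only for illustration.

```python
import math
from collections import Counter

def term_vector(text):
    """f(d): raw term-frequency vector, stored sparsely as term -> count."""
    return Counter(text.lower().split())

def cosine(v1, v2):
    """Sim(V1, V2): cosine of the angle between two sparse vectors."""
    dot = sum(c * v2.get(t, 0) for t, c in v1.items())
    norm1 = math.sqrt(sum(c * c for c in v1.values()))
    norm2 = math.sqrt(sum(c * c for c in v2.values()))
    if norm1 == 0 or norm2 == 0:
        return 0.0
    return dot / (norm1 * norm2)

def best_match(docs, query):
    """Return the id of the document whose vector V_i maximizes Sim(V_i, Q)."""
    q = term_vector(query)
    return max(docs, key=lambda doc_id: cosine(term_vector(docs[doc_id]), q))

docs = {
    "D1": "chihuahua breeding and chihuahua care",
    "D2": "scuba diving club schedule",
}
print(best_match(docs, "chihuahua care"))  # D1
```

Because cosine normalizes by vector length, a long document does not outrank a short one merely by repeating query terms more often.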
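One standard way to carry out the SVD/LSI step is sketched below; it is a common textbook formulation, not necessarily the exact one intended in the slides. Assume the initial term vectors $V_1, \dots, V_n$ of $n$ documents are the columns of a $t \times n$ term-document matrix $A$, and keep only the $k$ largest singular values. The right singular vectors are written $W$ (instead of the usual $V$) to avoid a clash with the document vectors $V_i$.

$$
\begin{aligned}
A &= U \Sigma W^{\top} \approx U_k \Sigma_k W_k^{\top}, \qquad U_k \in \mathbb{R}^{t \times k},\ \Sigma_k \in \mathbb{R}^{k \times k},\ W_k \in \mathbb{R}^{n \times k} \\
\hat{V}_i &= U_k^{\top} V_i \in \mathbb{R}^{k}, \qquad \hat{V}_Q = U_k^{\top} V_Q \\
U_k^{\top} A &= \Sigma_k W_k^{\top}
\end{aligned}
$$

The last line shows that the reduced vectors $\hat{V}_i$ of the indexed documents are simply the columns of $\Sigma_k W_k^{\top}$, so documents and queries live in the same $k$-dimensional space and can be ranked by $\mathrm{Sim}(\hat{V}_i, \hat{V}_Q)$, for example with the cosine measure. Terms that occur in similar documents can end up close together in this reduced space.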
Clustering words

The offset K of a word w in the term vector can be computed in increasingly aggressive ways:

- K = hash(w)
- K = hash(cluster(w))
- K = hash(cluster(stem(w)))

In the raw term vector of D1, Japanese, Japan, Nippon and Nihon each get their own entry; in the condensed vector they share a single entry, Japan*.

Stemming maps word variants to a common stem: books → book; computer, computation → comput.

Collocation (phrasal term)

- d1: "The soap opera" → vector 0 0 1 over the dimensions (soap, opera, soap opera): only the phrasal term is set.
- d2, which mentions "the soap residue" and "an opera by Verdi" → vector 1 1 0: soap and opera are set separately, but the phrasal term is not.

Abstractly, a vector is a compressed (meaning-preserving) document: $m_1 = f(d_1)$ and $m_2 = f(d_2)$ are meaning or context vector representations of documents $d_1$ and $d_2$.

- Compression: $m_1 = m_2$ iff $d_1 = d_2$, so $f(\cdot)$ must be invertible.
- Summarization: $m_1 = m_2$ iff $d_1$ and $d_2$ are about (mean) the same thing.

What is the optimal method for meaning-preserving compression?

Issues

- Size of the representation: ideally $\mathrm{size}(V_i) \ll \mathrm{size}(D_i)$.
- Cost of computing the vectors: a one-time cost at model creation.
- Cost of the similarity function: it must be computed for each query, so minimizing it is crucial to speed.
- Header processing: retain/model cross-references to cited (Xref) articles or mail, e.g., by extending $V_i$ with components for the referenced items, $\mathrm{ref}_1(V_i)$ and $\mathrm{ref}_2(V_i)$.
- Body processing/term weighting:
  1. Remove (most) function words, but treat words such as "NOT" specially.
  2. Downweight terms by frequency.
  3. Use text analysis to decide which function words carry meaning: in "Mr. Dong Ving The of the Hanoi branch", "The" is part of a name, not an article.
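The offset scheme K = hash(cluster(stem(w))) and the first two body-processing steps can be combined into one small pipeline. This is a minimal sketch under loudly stated assumptions: the tiny `FUNCTION_WORDS` list, the crude suffix-stripping `stem` (not a real stemmer such as Porter's), the hand-written `CLUSTERS` table, CRC32 as the hash, the vector size `DIM`, and idf (log of N over document frequency) as the frequency downweighting are all hypothetical choices. Treating NOT specially is reduced here to its simplest form: it is kept rather than dropped.

```python
import math
import zlib
from collections import Counter

DIM = 64  # hypothetical vector size; offsets are reduced modulo DIM

# Hypothetical function-word list; "not" is deliberately left out so negation survives.
FUNCTION_WORDS = {"the", "of", "a", "an", "and", "by", "to", "in", "on"}

# Hypothetical word clusters: several surface forms share one cluster label.
CLUSTERS = {"japanese": "japan*", "japan": "japan*", "nippon": "japan*", "nihon": "japan*"}

def stem(word):
    """Crude suffix stripping for illustration only (not the Porter stemmer)."""
    for suffix in ("ation", "er", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word

def key(word):
    """cluster(stem(w)) on the lowercased word."""
    stemmed = stem(word.lower())
    return CLUSTERS.get(stemmed, stemmed)

def offset(word):
    """Offset K = hash(cluster(stem(w))); CRC32 keeps it stable across runs."""
    return zlib.crc32(key(word).encode("utf-8")) % DIM

def tokens(text):
    """Step 1: drop (most) function words."""
    return [w for w in text.lower().split() if w not in FUNCTION_WORDS]

def doc_freq(collection):
    """Number of documents in the collection containing each key."""
    df = Counter()
    for doc in collection:
        df.update({key(w) for w in tokens(doc)})
    return df

def weighted_vector(text, collection):
    """Step 2: weight each term by tf * idf, where idf = log(N / df)."""
    n, df = len(collection), doc_freq(collection)
    vec = [0.0] * DIM
    for w, tf in Counter(tokens(text)).items():
        k = key(w)
        idf = math.log(n / df[k]) if df[k] else 0.0
        vec[offset(w)] += tf * idf
    return vec

collection = [
    "Japanese exports to Japan and Nippon Steel",
    "the soap opera",
    "books on computation",
]
print(offset("Japan") == offset("Nihon"))           # True: same cluster, same offset
print(offset("computer") == offset("computation"))  # True: same stem
print(weighted_vector("the of a", collection) == [0.0] * DIM)  # True: only function words
```

Taking the hash modulo a fixed `DIM` keeps vectors small, at the price that unrelated keys can occasionally collide on the same offset; a larger `DIM` makes such collisions rarer.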
Names such as "The Who" pose the same problem for function-word removal: use named-entity analysis and phrasal dictionaries to recognize them.

Supervised learning/training: collective discrimination

[Figure: during training, one recognizer is built for each category (Chihuahua Breeding Club, Project #1, Personal, Junk mail); in real time (ongoing), the input data stream passes through the recognizers and comes out as labeled (routed) output.]

Other related problems: mail/news routing and filtering

[Figure: an incoming data stream of numbered messages routed into inboxes: Project #1 at work, Project #2 at work, Chihuahua breeding, Scuba club, Personal, Junk mail.]

- Inboxes prioritize and partition mail reading.
- Routing and filtering typically model long-term information needs: people put effort into training and user feedback that they aren't willing to invest for single query-based IR.

Features for classification

- Subject line
- Source/sender
- X-annotations
- Date/time
- Length
- Other recipients
- Message content (regions weighted differently)

Probabilistic IR models

- Intermediate topic models/detectors: each document $d_i$ is passed through a bank of topic detectors ($TD_A$, $TD_B$, …, $TD_E$), and their outputs form a topic vector $V_i = f(d_i)$. The query Q is mapped the same way; in the example, Q = 000100, V1 = 100000 and V2 = 010100, so V2 (but not V1) shares a topic with Q.
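The slides do not fix a learning algorithm for the recognizers. One simple stand-in, swapped in here for illustration, is a multinomial naive Bayes classifier with add-one smoothing and one class per inbox. The region prefixes (`subj:`, `from:`, `body:`), the names `features` and `Router`, and the toy training messages are all hypothetical, and only three of the listed features (sender, subject line, message content) are used.

```python
import math
from collections import Counter, defaultdict

def features(msg):
    """Turn a message (dict with sender, subject, body) into region-tagged tokens."""
    feats = ["from:" + msg["sender"].lower()]
    feats += ["subj:" + w for w in msg["subject"].lower().split()]
    feats += ["body:" + w for w in msg["body"].lower().split()]
    return feats

class Router:
    """Multinomial naive Bayes with add-one (Laplace) smoothing, one class per inbox."""

    def __init__(self):
        self.class_counts = Counter()
        self.feat_counts = defaultdict(Counter)
        self.vocab = set()

    def train(self, msg, inbox):
        self.class_counts[inbox] += 1
        for f in features(msg):
            self.feat_counts[inbox][f] += 1
            self.vocab.add(f)

    def route(self, msg):
        """Return the inbox with the highest log-posterior for this message."""
        total = sum(self.class_counts.values())
        best, best_score = None, -math.inf
        for inbox, n in self.class_counts.items():
            denom = sum(self.feat_counts[inbox].values()) + len(self.vocab)
            score = math.log(n / total)
            for f in features(msg):
                score += math.log((self.feat_counts[inbox][f] + 1) / denom)
            if score > best_score:
                best, best_score = inbox, score
        return best

router = Router()
router.train({"sender": "club@dogs.org", "subject": "Chihuahua show", "body": "breeding tips"},
             "Chihuahua breeding")
router.train({"sender": "boss@work.com", "subject": "Project 1 status", "body": "deadline friday"},
             "Project #1 at work")
router.train({"sender": "spam@ads.biz", "subject": "Win cash now", "body": "click here"},
             "Junk mail")
print(router.route({"sender": "spam@ads.biz", "subject": "cash prize", "body": "click now"}))  # Junk mail
```

Tagging each token with its region gives "cash" in a subject line separate statistics from "cash" in the body, which is one way for different regions of a message to carry different weight.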
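The intermediate-topic-model idea can be sketched as a bank of detectors that maps any text to a bit vector with one bit per topic, after which queries and documents are compared in that topic space. The keyword-set detectors and topic names below are hypothetical placeholders for trained (probabilistic) topic models, and counting shared topic bits is just one simple similarity choice.

```python
# Hypothetical detectors: each fires if the text contains any of its cue words.
TOPIC_DETECTORS = {
    "dogs": {"chihuahua", "puppy", "kennel"},
    "diving": {"scuba", "reef", "dive"},
    "finance": {"stock", "bond", "dividend"},
}

def topic_vector(text):
    """f(d): one bit per topic detector, in a fixed topic order."""
    words = set(text.lower().split())
    return tuple(int(bool(words & cues)) for cues in TOPIC_DETECTORS.values())

def topic_sim(v1, v2):
    """Number of topics two bit vectors share (their dot product)."""
    return sum(a & b for a, b in zip(v1, v2))

q = topic_vector("best scuba reef trips")
docs = {"V1": "stock and bond markets", "V2": "dive the reef with our scuba club"}
vectors = {name: topic_vector(text) for name, text in docs.items()}
print(vectors)  # {'V1': (0, 0, 1), 'V2': (0, 1, 0)}
print(max(vectors, key=lambda name: topic_sim(vectors[name], q)))  # V2
```

The query and V2 match through the shared "diving" bit even though V2 uses "dive" rather than any of the query's exact words, which is the point of routing both through the same topic detectors.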