Performance Evaluation and Comparison of Middle-Ware based QoS support for Real-

advertisement

Performance Evaluation and Comparison of

Middle-Ware based QoS support for RealTime/Multimedia applications versus using

Modified OS Kernels

By

Saif Raza Hasan

University of Massachusetts, Amherst, MA, USA 2001

1

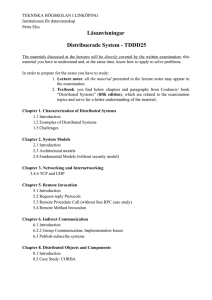

Table of Contents

1. Introduction ..................................................................................................................... 3

2. System Description ......................................................................................................... 3

2.1 QLinux Features ........................................................................................................ 3

2.2 TAO / Corba Features................................................................................................ 4

3. Experimental Setup ......................................................................................................... 6

3.1 Echo / Null RPC ........................................................................................................ 6

3.2 Simple HTTP Client-Server ...................................................................................... 9

3.3 Concurrent HTTP Client-Server .............................................................................. 10

3.4 Compute Intensive HTTP Client-Server ................................................................. 10

3.5 Long File/ MPEG Download ................................................................................... 11

3.6 Video Streaming Without QoS Specification .......................................................... 13

3.7 Video Streaming With QoS Specification ............................................................... 16

3.8 Video Streaming performance in presence of another application.......................... 19

3.9 Industrial Control System Simulation ..................................................................... 20

4. Summary & Discussion of Qualitative Comparisons ................................................... 21

4.1 QLinux ..................................................................................................................... 22

4.2 TAO / CORBA ........................................................................................................ 22

References ......................................................................................................................... 24

2

1. Introduction

Most common off-the-shelf operating systems (COTS) such as Windows-NT, Linux and most

Unises are not designed to provide applications with QoS guarantees for access to system

resources such as network bandwidth or CPU time. This leads to poor performance of Real-Time

/ Multimedia applications which have deadlines that must be met in a guaranteed fashion on

these systems.

In trying to address this problem, two approaches are common. One is to modify the OS itself

and incorporate mechanisms for guaranteeing QoS and modifying applications to use these

mechanisms. The other alternative is to use a middle-ware which runs on top of the unmodified

operating and provides an API for application programmers that allows them to specify QoS

requirements, and the middleware attempts to meet those requirements.

In this project we have compare the performance and evaluated some of the trade-offs involved

in these two approaches. We have used the QLinux kernel which supports HSFQ for process and

packet scheduling as an example of a modified COTS system and the TAO ORB as the example

for a Real Time Middleware. TAO is an open-source implementation of the Corba specification

and contains optimizations and built in support for providing QoS to it’s applications.

We’ve developed various applications and performed experimental evaluation of their

performance to obtain a quantitative and qualitative comparison of the two systems.

2. System Description

The systems that we are evaluating and comparing in this project are QLinux and TAO (a Real

Time CORBA implementation). The two systems represent two alternative approaches to

providing QoS guarantees to Soft Real Time / Multimedia applications. The mechanisms and

features provided by the two systems are different and in this section describe them in detail.

2.1 QLinux Features

QLinux is a Linux kernel that can provide quality of service guarantees. QLinux, based on the

Linux 2.2.x kernel, combines some of the latest innovations in operating systems research. It

includes the following features:

Hierarchical Start Time Fair Queuing (H-SFQ) CPU scheduler

Hierarchical Start Time Fair Queuing (H-SFQ) network packet scheduler

The H-SFQ CPU scheduler enables hierarchical scheduling of applications by fairly allocating

cpu bandwidth to individual applications and application classes. The H-SFQ packet scheduler

provides rate guarantees and fair allocation of bandwidth to packets from individual flows as

well as flow aggregates (classes). The kernel also supports Lazy Receiver Processing (LRP) of

packets, however, this feature was turned off in the kernel we used for our experiments.

3

In the QLinux framework for CPU scheduling[1], the hierarchical partitioning requirements are

specified by a tree structure. Each thread in the system belongs to exactly one leaf node. Each

non-leaf node represents an aggregation of threads and hence an application class in the system.

Each node in the tree has a weight that determines the percentage of its parent node’s bandwidth

that should be allocated to it. Also, each node has a scheduler: whereas the scheduler of a leaf

node schedules all the threads that belong to the leaf node, the scheduler of an intermediate node

schedules it’s child nodes. Given such a scheduling structure, the scheduling of threads occurs

hierarchically. The weights associated with the nodes determine the proportion of the CPU

bandwidth available to the threads/application class represented by that node.

Similarly for the case of the network packet scheduler[2], it is possible to create a hierarchical

structure that represents the distribution of available network bandwidth to the various flows in

the system. This is done by attaching queues to leaf nodes in the scheduling hierarchy and

associating sockets with the respective queues.

These manipulations are all performed through special system calls made by an application

running as root. This application can be the same or different as the one requiring the QoS

guarantees.

2.2 TAO / Corba Features

TAO[5] is a freely available, open-source, and standards-compliant real-time implementation of

CORBA that provides efficient, predictable, and scalable quality of service (QoS) end-to-end.

Unlike conventional implementations of CORBA, which are inefficient, unpredictable, nonscalable, and often non-portable, TAO applies the best software practices and patterns to

automate the delivery of high-performance and real-time QoS to distributed applications.

Many types of real-time applications can benefit from the flexibility of the features provided by

the TAO ORB and its CORBA services. In general, these applications require predictable timing

characteristics and robustness since they are used in mission-critical real-time systems. Other

real-time applications require low development cost and fast time to market.

Traditionally, the barrier to viable real-time CORBA has been that many real-time challenges are

associated with end-to-end system design aspects that transcend the layering boundaries

traditionally associated with CORBA. That's why TAO integrates the network interfaces, OS I/O

subsystem, ORB, and middleware services in order to provide an end-to-end solution.

For instance, consider the CORBA Event Service[6], which simplifies application software by

supporting decoupled suppliers and consumers, asynchronous event delivery, and distributed

group communication. TAO enhances the standard CORBA Event Service to provide important

features, such as real-time event dispatching and scheduling, periodic event processing, efficient

event filtering and correlation mechanisms, and multicast protocols required by real-time

applications.

4

TAO is carefully designed using optimization principle patterns that substantially improve the

efficiency, predictability, and scalability of the following characteristics that are crucial to highperformance and real-time applications:

TAO uses active demultiplexing and perfect hashing optimizations to dispatch requests to

objects in constant, i.e., O(1), time regardless of the number of objects, IDL interface

operations, or nested POAs.

TAO's IDL compiler generate either compiled and/or interpreted stubs and skeletons,

which enables applications to make fine-grained time/space tradeoffs in the presentation

layer.

TAO's concurrency model is carefully design to minimize context switching,

synchronization, dynamic memory allocation, and data movement. In particular, TAO's

concurrency model can be configured to incur only 1 context switch, 0 memory

allocations, and 0 locks in the ``fast path'' through the ORB.

TAO uses a non-multiplexed connection model that avoids priority inversion and behaves

predictably when used with multi-rate real-time applications.

TAO's I/O subsystem is designed to minimize priority inversion interrupt overhead over

high-speed ATM networks and real-time interconnects, such as VME. It also runs very

efficiently over standard TCP/IP protocols, as well.

TAO provides all these optimizations within the standard CORBA 2.x reference model.

5

3. Experimental Setup

To evaluate the two systems, we developed and tested a set of applications for them and

performed various performance measurements. For this purpose we used a fire-walled network

of identical Linux workstations. The machines were all 300 MHz Pentium – II’s with 192MB

RAM connected to each other through a 10Mbps Ethernet link and running Redhat Linux 6.1.

Applications were client-server type and were tested in two configurations – client running on

the same host as the server and client running on another host in the network (remote client

case).

The following applications were developed for both systems - (QLinux as well as Corba) to

compare various aspects of performance of these two systems. Since the two systems support

slightly different application design frameworks, care was taken to keep the corresponding

applications on both systems comparable.

3.1 Echo / Null RPC

This application was designed to measure the response time and the corresponding over head for

a single unit of synchronous communication. In this application, the client would send a small

(usually empty string) string to the server which would then respond by sending the same string

back. The metric in this application was the response time of a single request as observed from

the client side.

In the case of the application QLinux the client and server communicate through a simple,

connection oriented (TCP) socket. The server launches a new thread whenever a client connects

to it, to service the request. The server then reads the string sent by the client from the socket and

writes it back to the socket immediately. The client sends 100 sequential requests to the server,

records the response times for each of these and then exits. As this application does not involve

and QoS specific services, it was run on a plain Linux kernel.

In the Corba based application, we used the concept of a distributed object to implement the

Echo server. The server implements the interface of the Echo object (the interface consists of a

single function that takes in a string argument and returns the same string).

The server creates an instance of the Echo object, writes it's unique identification string (IOR) to

a disk file and then waits for client requests. The client reads the Server IOR string from the disk

file to obtain a reference to the Echo object. It then performs the Echo function by means of an

RPC type mechanism. The actual sending and receiving of the data between the client and the

server takes place through the ORB (Object Request Broker) and the details of this are

completely transparent to the programmer.

For a fair evaluation, we configured the server to operate in a 'thread per request' mode. Other

modes such as reactive, 'thread per connection' or pool of threads are also available without

needing any special coding effort from the developer. Also, this application does not use any

6

QoS related features of the TAO ORB, but it does benefit from any special optimizations built

into the ORB.

The following table indicates the average response times for the Echo application for the two

systems:

Local Client

Non Corba

Corba with

first request

Corba

without first

request

513.55 7.01

Remote Client

767.45 4.97

648.99 119.42

897.93 119.65

588.101 4.31

836.899 3.04

As can be seen from the table, on the average, the response time for the Corba based echo

application was larger than for the simple socket based ones. This is because of the extra

overhead which the middle-ware involves. An strace of the Corba application revealed that

although it too uses TCP based socket for it's communication, the marshalling, demarshalling of

arguments, extra header information that needs to be sent and the allocation and deallocation of

the paramters at the server-side was the cause of the larger response time for the CORBA

application.

Figures 1 and 2 also show that in the Corba case, the response time for the very first request

made on the remote object takes an order of magnitude time more than subsequent requests to

the same object. This difference can become significant in case the application design is such

that only one request is made per remote object (i.e. a remote object reference is never reused. In

this case, every request would experience the extra overhead of the very first request.

7

Non Corba Null RPC Response Time

Corba Null RPC Response Times

1000

8000

900

7000

800

6000

700

600

Local

Remote

4000

usecs

usecs

5000

Local

Remote

500

400

3000

300

2000

200

1000

100

Figure 1: Corba Null RPC Response Time

97

93

89

85

81

77

73

69

65

61

57

53

49

45

41

37

33

29

25

21

9

17

5

13

0

1

97

93

89

85

81

77

73

69

65

61

57

53

49

45

41

37

33

29

25

21

9

17

13

5

1

0

Figure 2: Socket Null RPC Response Times

The explanation for this can be seen from the following ‘strace’ of the client application

for the first two requests sent to the server:

gettimeofday({989986519, 979772}, NULL) = 0

brk(0x80e7000)

= 0x80e7000

socket(PF_INET, SOCK_STREAM, IPPROTO_IP) = 7

connect(7, {sin_family=AF_INET, sin_port=htons(4937),

sin_addr=inet_addr("192.168.42.3")}, 16) = 0

fcntl(7, F_GETFL)

= 0x2 (flags O_RDWR)

fcntl(7, F_SETFL, O_RDWR)

= 0

fcntl(7, F_GETFL)

= 0x2 (flags O_RDWR)

fcntl(7, F_SETFL, O_RDWR)

= 0

setsockopt(7, SOL_SOCKET, SO_SNDBUF, [65535], 4) = 0

setsockopt(7, SOL_SOCKET, SO_RCVBUF, [65535], 4) = 0

setsockopt(7, IPPROTO_TCP1, [1], 4)

= 0

getpid()

= 20221

fcntl(7, F_SETFD, FD_CLOEXEC)

= 0

getpeername(7, {sin_family=AF_INET, sin_port=htons(4937),

sin_addr=inet_addr("192.168.42.3")}, [16]) = 0

rt_sigprocmask(SIG_BLOCK, ~[RT_1], [RT_0], 8) = 0

rt_sigprocmask(SIG_SETMASK, [RT_0], NULL, 8) = 0

writev(7,

[{"GIOP\1\1\1\0F\0\0\0\0\0\0\0\0\0\0\0\1\237\0@\33\0\0\0\24"..., 82}],

1) = 82

rt_sigprocmask(SIG_BLOCK, ~[RT_1], [RT_0], 8) = 0

rt_sigprocmask(SIG_SETMASK, [RT_0], NULL, 8) = 0

select(8, [5 7], NULL, NULL, NULL)

= 1 (in [7])

read(7, "GIOP\1\1\1\1\26\0\0\0", 12)

= 12

read(7, "\0\0\0\0\0\0\0\0\0\0\0\0\6\0\0\0Hello\0", 22) = 22

gettimeofday({989986519, 987282}, NULL) = 0

fstat(1, {st_mode=S_IFREG|0640, st_size=20519, ...}) = 0

mmap(0, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) =

0x40923000

write(1, "7510\n", 57510

)

= 5

gettimeofday({989986519, 988551}, NULL) = 0

writev(7,

[{"GIOP\1\1\1\0F\0\0\0\0\0\0\0\1\0\0\0\1\237\0@\33\0\0\0\24"..., 82}],

1) = 82

rt_sigprocmask(SIG_BLOCK, ~[RT_1], [RT_0], 8) = 0

rt_sigprocmask(SIG_SETMASK, [RT_0], NULL, 8) = 0

8

select(8, [5 7], NULL, NULL, NULL)

= 1 (in [7])

read(7, "GIOP\1\1\1\1\26\0\0\0", 12)

= 12

read(7, "\0\0\0\0\1\0\0\0\0\0\0\0\6\0\0\0Hello\0", 22) = 22

gettimeofday({989986519, 990551}, NULL) = 0

The code between the 2 pairs of gettimeofday system calls shows the system calls made

by the client for the first and the second requests to the same object. As can be seen, the

client creates a socket and connects to the server only after the first request is made, for

subsequent calls the connection is kept open and reused. Hence the first request involves

a greater over head. We expect a similar behavior at the server side contributing to the

patter, when requests are received, however, this could not be confirmed as strace failed

to work correctly on the server (which is a multithreaded application).

3.2 Simple HTTP Client-Server

This application models a simple HTTP server that only receives requests for static

HTML pages or objects. The client sends a properly formatted HTTP request to the

server requesting a file. In our system, the client can request one of 8 possible files

varying in size from 20kB to 1.29MB. The server receives the HTTP request, parses it,

reads the requested file from it’s local disk, includes it in an HTTP response and sends it

back to the client. For this experiment, there is only one client thread that sequentially

makes 100 requests for a file of the same size. To avoid disk-caching effects, the server

has 100 copies of files of each possible size and reads a different one every time.

The QLinux application again uses simple TCP based server that creates a new thread for

every request. The new thread services the HTTP request and also logs the time taken for

disk I/O and for processing the HTTP request. This can be used in conjunction with the

response time measured at the client to determine the time spent by the request and

response in the network.

The Corba version of the server uses a remote object with ‘thread per request’ policy to

perform the same operation as the QLinux server.

The following plots indicate that the performance of the two systems in terms of response

time are mostly comparable, though the QLinux application performs marginally better.

Local HTTP Response Times

Remote HTTP Response Time

700000

2500000

600000

2000000

500000

400000

300000

(usecs)

(usecs)

1500000

Corba

Non Corba

Corba

Non Corba

1000000

200000

500000

100000

0

0

0

0

200

400

600

800

1000

1200

1400

200

400

600

800

1000

1200

1400

File Size (kB)

Filesize (kB)

Figure 3: Local HTTP Response Times

Figure 4: Remote HTTP Response Times

9

3.3 Concurrent HTTP Client-Server

This experiment differs from the previous one in that, instead of having a single client

thread, the client launches a number of threads (1-4), each of which make 100 requests to

the same HTTP server concurrently. This forces the server to service 1-4 requests

simultaneously. In both applications, the server remains unchanged from the previous

experiment, and the clients were modified so that the client launches a certain no of

threads on startup each of which would make requests to the server. These experiments

were done for varying no. of client threads (1-4), varying file sizes (20 kB to 1.28Mb)

and with the client on a host local or remote to the server.

The figures5-8 summarize these results for the following representative cases.

No of Client Threads = 4 and varying the filesize

Filesize=640kB, varying the no. of client threads.

Local Concurrent HTTP Response Time, Filesize=640kB

600000

1000000

500000

800000

400000

Corba

Non Corba

600000

(usecs)

(usecs)

Local HTTP Response Times, Concurrency=4

1200000

Corba

Non Corba

300000

400000

200000

200000

100000

0

0

0

200

400

600

800

1000

1200

0

1400

0.5

1

1.5

2

2.5

3

3.5

4

4.5

No of concurrent clients

Filesize (kB)

Figure 6: Remote HTTP Response Times

Figure 5: Local HTTP Response Times

Remote Concurrent HTTP Response Times, Filesize=640kB

Remote HTTP Response Times,Concurrency = 4

3500000

7000000

3000000

6000000

2500000

5000000

(usecs)

Corba

Non Corba

(usecs)

2000000

4000000

Corba

Non Corba

3000000

1500000

2000000

1000000

1000000

500000

0

0

0

200

400

600

800

1000

1200

1400

File size (kB)

Figure 7: Local HTTP Response Times

0

0.5

1

1.5

2

2.5

3

3.5

4

4.5

No of concurrent clients

Figure 8: Remote HTTP Response Times

3.4 Compute Intensive HTTP Client-Server

This again is a variation on the HTTP theme. This is a simulation of cgi-bin processing

request. In this case the client sends an HTTP request to the server. In response, the

server reads a small file from disk (40kB), performs some computation (a complicated

loop) and then sends the file it read from disk to the client. The server is designed so that

the time taken for the computation constitutes most of the request processing time. In this

10

case as well the applications were created by simple modifications to HTTP client-server

described in the preceding experiments. The figures 9,10 demonstrate the variation of the

average response time for a single request as the no. of clients making concurrent

requests to the server is increased from 1 – 15. As can be seen from the figures, the

response time for these computation-intensive requests increases almost linearly as the

number of simultaneous clients is increased. The Corba application because of the extra

overheads mentioned earlier performs slightly poorer than the simple socket based one.

Also since this application does not involve transfer of large amounts data over the

network, the difference in the response times for the local client and the remote client was

not too substantial.

Remote Comp Intensive Response Time

Local Computation Response Times

1400000

1200000

1200000

1000000

1000000

800000

(usecs)

(usecs)

800000

Corba

Non Corba

600000

Corba

Non Corba

600000

400000

400000

200000

200000

0

0

0

2

4

6

8

10

12

14

16

No of Clients

Figure 9: Local HTTP Response Times, with concurrency

0

2

4

6

8

10

12

14

16

No of Clients

Figure 10: Remote HTTP Response Times, with concurrency

To simulate the computation at the server, we initially used a simple counting for-loop

which counted to a large no. However since the TAO/ORB by default uses –O3 level of

compiler optimization ( g++ compiler) , this simple loop got optmized out. So we had to

make the loop more complicated and make it manipulate more dummy variables to

prevent the compiler from optimizing it out.

3.5 Long File/ MPEG Download

One of the models for serving a video stream is to assume that the client has sufficient

buffers to accommodate the entire video file. In this model the server simply sends the

video file to the client as fast as possible and letting the client buffer it and play it back

whenever it wants. This model isn’t fundamentally different from the simple download of

a large file. Since the video file is not being streamed at a real time rate and there are no

deadlines associated with the arrival of data, the only parameter of interest is the time

taken to download the entire file (basically throughput).

In this experiment, we’ve used this model and measured the time taken to download an

MPEG-1 video file, with the server sending the video frames as fast as possible. We used

an MPEG-1 video file consisting of 5962 frames. Instead of actually using the video data,

we generated a profile of the MPEG sequence that listed the size of each frame in the

video. During initialization, the server reads this profile from disk and stores the data

about frame sizes in an array. When transmitting, the server simply sends a blank block

of data of the same size as the corresponding frame to the client. This approach

11

eliminates the disk from the service loop and allows us to measure the pure network and

application overhead of transmitting data.

In the QLinux application, the server listens for client connections on a TCP socket.

When a client connects to the server, the server spawns a new thread which starts sending

the client data equivalent to the video frame sizes as soon as possible. The stream is

preceded by a 4 byte header indicating the number of frames in the stream. Each frame in

the stream is preceded by a 4 byte header specifying the size of the following frame in

bytes. These headers are necessary so that client knows how much data to receive and

when to stop receiving the data. The client logs the arrival time of each frame when all

the data for that frame is arrived.

When using Corba, for this kind of application, the synchronous RPC mechanism of the

basic Corba server is not suitable because of the amount of data sent from the server to

the client and the manner in which it is sent. TAO includes an Audio/Video Streaming

Service[3], which supports the semantics for setting up and tearing down Streaming

connections, however, the service focuses itself in the control/signaling involved during

streaming and does not deal with the actual transport/QoS related issues. The Real-Time

Event Channel Service provided in the TAO implementation of CORBA is an ideal

choice for the kind of communication required for this application.

The Event Channel service needs to be started before either client or the server. The

server starts up and creates an instance of a video supplier object and listens for requests

from clients through an RPC call. When a client wants to receive a video file from a

server, it first launches a separate thread that connects to the Event Channel as a

consumer. Since the RTES supports filtering of events at the consumer by event types,

the consumer thread specifies to the event channel the ID of events it wishes to receive.

The consumer thread then waits for events to arrive. Meanwhile the other client thread

obtains a reference to the video supplier object and performance an RPC on it, providing

it with the event ID it is interested in receiving and telling the supplier to start sending the

video frames.

The supplier on receiving the RPC from the client, connects to the event channel as a

supplier of events with the ID specified by the client. The first event it sends to the client

contains the number of frames it will be sending. Next the supplier pushes events into the

Event Channel containing one frame each.

In the general case, the Event Channel can run on any host on the distributed system and

still be used as a communication channel between a supplier and consumer on any other

hosts. However to allow a fairer comparison with the QLinux application, for our

experiments, the event channel was run on the same host as the supplier.

The following plots show the frame arrival times at the consumer for the QLinux and

Corba applications. It can be seen that the performance of Corba is poorer than the

QLinux application because of the extra overhead involved in doing the data transfer via

an event channel. The difference in the two is much more for the case when the client and

12

server are on running on the same machine. The reason for this is that in the case with the

remote client, the delay incurred in the network (which is approximately the same for

both applications) is much more substantial than the overhead due to the event channel.

Remote MPEG Download

Local MPEG Download

450000000

140000000

400000000

120000000

350000000

80000000

Corba

Non Corba

60000000

Frame arrival times (usec)

Frame arrival times (usecs)

100000000

300000000

250000000

Corba

NonCorba

200000000

150000000

40000000

100000000

20000000

50000000

5911

5714

5517

5320

5123

4926

4729

4532

4335

4138

3941

3744

3547

3350

3153

2956

2759

2562

2365

2168

1971

1774

1577

986

1380

789

592

1183

1

395

5941

5743

5545

5347

5149

4951

4753

4555

4357

4159

3961

3763

3565

3367

3169

2971

2773

2575

2377

2179

1981

1783

991

1585

1387

793

1189

1

595

397

199

Figure 11: Frame Arrival Times, Local Download

198

0

0

Figure 12: Frame Arrival Times, Remote Download

To estimate the overhead of the event channel, we measured transmission delays for 1000

byte data blocks through direct socket transfers vs through an event channel. In the case

of supplier and receiver on the same machine, we get the following numbers:

Corba (via Event Channel) - 3134.22 17.10 usecs

Socket based direct - 1279.32 203.33 usecs

These numbers clearly indicate the extra overhead that the Event Channel adds to the data

transmission.

3.6 Video Streaming Without QoS Specification

This experiment is a baseline evaluation of the performance of the two systems for

streaming a video sequence at a Real-Time rate. The application are mostly similar to the

case of the long MPEG download, except that instead of transmitting all frames as soon

as possible, the server transmits frames in rounds of 30 frames every second. Since the

video sequence is a 30fps one, this transmission corresponds to the frame rate. Although

both systems allow specification of QoS parameters (in different ways), these were not

enabled for this set of experiments. We experimented by increasing the number of

concurrent streams till the server was unable to meet the frame rate.

In the QLinux application apart from the rate at which the server sends the frames, there

were no other changes to the application from the previous experiment .

The Corba Real Time Event Service also needs the TAO Scheduling Service to be

running for it to work. Also the supplier and consumer need to locate the Event Channel

to be able to connect to it. In the previous experiments, this was done by using each

service’s unique string which can be used to locate it (the IOR). However in a real-life

Corba based distributed system, the system runs a “Naming Service” (similar to a DNS

kind of look up). Each CORBA object (i.e. service providing entity) registers itself with

13

the Naming Service and which binds a name to it. Since the Naming Service runs in a

well-known location, a client wishing to access a particular object can perform a lookup

with the naming service and get a reference to the object.

So for this and subsequent applications, we ran a Naming Service on the server machine.

Then the Scheduling Service is launched which registers itself as “SchedulingService”.

Then the Event Channel Service launches itself, try to locate the Scheduling Service by

doing a lookup on the Naming Service for “SchedulingService” and on success register

itself as “EventService”. Once this is done, the Supplier and the Consumer can connect to

the event channel by first locating it by doing a lookup for “EventService”. This means

that server machine is running at least 4 Corba applications at any time (Naming Service,

Scheduling Service, Event Service & the Supplier). Since these are large applications,

they do put a significant load on the CPU.

Another important difference between this application and the MPEG download is that

the supplier instead of pushing a single event for each frame to be transmitted, creates an

event set consisting of 30 events corresponding to the 30 frames of that round, and pushes

the whole event set at once. It is worth noting that although the application framework

allows for a consumer to receive multiple events (an event set) at a time, we found that

even though we were pushing an event set of 30 events at supplier end, the consumer was

still getting one event at a time.

We also ran these applications for the case of multiple concurrent streams being served to

different clients through the same event channel. As described earlier, the event channel

has support for filtering event delivery to consumers.

Since the supplier is run in ‘thread per request’ mode, a new supplier thread is created for

each client that wants to receive a video. These supplier threads connect to the same

event channel and push events into it separately. Since each client (consumer) provides

the supplier with a different event ID, the events being pushed into the channel by the

various supplier threads are tagged with their respective Ids and are filtered by the event

channel so that each consumer only gets the events belonging to its own stream.

The event channel uses a pool of threads to do the dispatching of the events and the size

of the pool can be configured when the Event Service is started. For our experiments, we

set the number of threads to be the same as the no of concurrent clients we would be

using for each experiment.

The Metric: For comparing the performance of the two applications we used a metric

based on the time taken to receive a round. When transmitting a stream, the server sends

a round of 30 frames every second. If it completes transmitting the round before it’s time

to send the next round, the sending thread sleeps for that duration. If, however, it is

unable to send a round in one second, it starts sending the next round as soon it finishes

with the current one. The client, who is logging the frame arrival time of each frame, is

interested in receiving a complete round (30 frames) every second to be able to play the

video stream continuously. Therefore, at the client side we measure the amount of time

14

taken to receive an entire round. This is the time from the arrival of the first frame to the

arrival of the arrival of the last (30th) frame of the round. If this time is less that 1 second,

then we can say that this round was received in-time for playback. If the time is greater

than one second, this corresponds to a missed deadline. One of the effects that this metric

does not measure is that if one of the earlier rounds is delayed and misses its deadline, it

is likely going to make the subsequent rounds also miss their deadlines. By using the time

to receive each frame separately, we factor out the cumulative effect of deadline misses.

Therefore the two metrics we are interested in are:

1. Average Round Duration (as measured from Client) with a increasing number of

Streams

2. Distribution of Round Duration (around deadline) and effect of increasing number

of Streams

Local Streaming No QoS

Remote Streaming No QoS

70000

1200000

1000000

50000

40000

Corba

Non Corba

30000

20000

Average Round duration (usecs)

Average Round Duration (usecs)

60000

800000

Corba

Non Corba

600000

400000

200000

10000

0

0

0

1

2

3

4

5

6

No of Streams

Figure 13: Average Round Durations, Local Client, No QoS

0

1

2

3

4

5

6

No of streams

Figure 14: Average Round Durations, Remote Client No QoS

As can be seen from figure 12, when the client and server are running on the same host,

the QLinux application not only outperforms the Corba application, but also is not

affected significantly by increasing the number of simultaneous streams. The reason for

this is that for the local streaming, the overhead due to the network is minimal, but since

the QLinux applications are simpler and less memory and CPU intensive, they are not

affected as much as Corba applications when a large no. are running on the same system.

However in figure 13, for the remote client, the performance of the two systems is almost

comparable. Both applications are unable to meet the frame rate (corresponding to an

average round duration of 1 second) for 5 concurrent streams.

The following figures 15,16,17,18 show the distribution of Round Durations for the

remote client and the cases of 4 and 5 concurrent streams.

We also experimented by changing the round size from 30 frames to 15 frames. In this

case the server is required to send rounds of 15 frames every 500 msecs. We found that

because of the tighter deadline and possibly the 10msec granularity of the Linux kernel

clock, the server was unable to support even 4 concurrent streams for remote clients. The

round durations for these cases are shown in figures 19 and 20

15

Corba Remote 5, No QoS

0.16

0.16

0.14

0.14

0.12

0.12

0.1

Frequency

Round Duration

More

1400000

1300000

1200000

1100000

900000

1000000

800000

700000

600000

500000

400000

0

More

1400000

1300000

1200000

1100000

900000

1000000

800000

700000

600000

500000

0

400000

0.02

0

300000

0.04

0.02

200000

0.06

0.04

0

0.06

300000

0.08

200000

Frequency

0.08

100000

0.1

Fraction

0.18

100000

Fraction

Corba Remote 4, No QoS

0.18

Round Durations

Figure 15: Corba Round Durations 4 Streams

Figure 16: Corba Round Durations 5 Streams

Non Corba Remote 4, No QoS

Non Corba Remote 5, No QoS

0.2

0.14

0.18

0.12

0.16

0.1

0.14

0.1

Frequency

Fraction

Fraction

0.12

0.08

Frequency

0.06

0.08

0.06

0.04

0.04

0.02

0.02

Round Durations

More

1400000

1300000

1200000

1100000

1000000

900000

800000

700000

600000

500000

400000

300000

200000

0

100000

More

1400000

1300000

1200000

1100000

1000000

900000

800000

700000

600000

500000

400000

300000

200000

0

0

100000

0

Round Durations

Figure 17: Non Corba Round Durations 4 Streams

Figure 18: Non Corba Round Durations 5 Streams

RoundSize=15 Corba Remote No QoS

RoundSize=15 Non Corba Remote No QoS

0.16

0.2

0.18

0.14

0.16

0.12

0.14

0.12

0.08

Frequency

Fraction

Fraction

0.1

0.1

Frequency

0.08

0.06

0.06

0.04

0.04

0.02

0.02

Round Durations

Figure 19: Corba Round Durations 4 Streams,

Round size=15 frames

More

700000

650000

600000

550000

500000

450000

400000

350000

300000

250000

200000

150000

100000

0

50000

More

700000

650000

600000

550000

500000

450000

400000

350000

300000

250000

200000

150000

100000

0

0

50000

0

Round Durations

Figure 20: Non Corba Round Durations 4 Streams,

Round size=15 frames

3.7 Video Streaming With QoS Specification

After testing the raw performance of streaming applications on both systems features, we

repeated the experiments of the previous sections, this time with the QoS features

enabled. This also involved modifying the application to specify the QoS guarantees it

needs from the system.

16

In the case of the QLinux application, the server modifies the process scheduling

hierarchy to create an SFQ scheduling class with weight 1. A default best effort class

with weight 1 is automatically created when the kernel is booted. For each thread that the

server creates to send a stream, it creates a new leaf node with weight 1 in the SFQ class

and attaches the service thread to that node. Thus, in the server application, the thread

that listens for client connections, runs in the best effort class and the threads responsible

for streaming the video run in the SFQ class, all with the same weight.

Similarly, the server also creates an SFQ class in the packet scheduling hierarchy and

attaches the new socket created for a client connection to a newly created queue with

weight 1 in the SFQ class.

The mechanism for providing QoS specification is much simpler and more intuitive in

the case of TAO. All the server program needs to do is to associate the following QoS

parameters with the a certain type of event when connecting to an Event Channel as a

supplier (or consumer):

Worst Case Time (to perform task): set to 1 sec

Typical Time (to perform task): set to 1 sec

Cached Time: set to 1 sec

Time Period of task: set to 1 sec

No of threads (of event channel to use for this task): set to 1

The Real Time Event Service uses these parameters to get a schedule from the

Scheduling Service and schedules the Event Channel’s pool of threads using that

schedule.

The following figures show the variation in the Average Round Duration as the number

of concurrent clients increases. This is for the round size of 30 frames with a 1 second

period.

Remote Streaming with QoS

1200000

50000

1000000

40000

800000

Corba

No Corba

30000

Avg Round Durations

Avg Round Durations

Local Streaming with QoS

60000

20000

400000

10000

200000

0

Corba

Non Corba

600000

0

0

1

2

3

4

5

6

No of Streams

Figure 21: Average Round Durations, Local Client with QoS

0

1

2

3

4

5

6

No of Streams

Figure 22: Average Round Duration Remote Client with QoS

As can be seen from the above figures, the relative behavior of the two applications in

terms of the average Round Duration does not change too much from the case when the

QoS was not enabled. For local performance, QLinux still out-performs CORBA and in

the case of remote client, although CORBA does better when the number of clients is

low, the QLinux application scales better.

17

The following plots show the distributions of the Round Durations for these applications

with 4 and 5 remote clients. It can be seen that QLinux with QoS enabled does manage to

shorten the tail of the distribution noticeably, thus ensuring that a greater percentage of

rounds are able to arrive within the deadline.

Corba Remote 4 with QoS

Corba Remote 5 with QoS

0.18

0.2

0.16

0.18

0.16

0.14

0.14

0.12

Frequency

Fraction

Fractions

0.12

0.1

0.08

0.1

Frequency

0.08

0.06

0.06

0.04

Round Durations

More

1400000

1300000

1200000

1100000

900000

1000000

800000

Round Durations

Figure 23: Corba RoundDurations,4 Streams with QoS

Figure 24: Corba RoundDurations,5 Streams with QoS

Non Corba Remote 4 with QoS

Non Corba Remote 5 with QoS

0.2

0.2

0.18

0.18

0.16

0.16

0.14

0.14

0.12

0.1

Round Durations

Figure 25: Non Corba RoundDurations,4 Streams with QoS

More

1400000

1300000

1200000

1100000

1000000

900000

800000

700000

600000

500000

400000

300000

Frequency

0

More

1400000

1300000

1200000

1100000

1000000

900000

800000

700000

600000

0

500000

0.02

0

400000

0.04

0.02

300000

0.06

0.04

200000

0.06

0

0.08

100000

0.08

200000

Frequency

100000

0.1

Fraction

0.12

Fraction

700000

600000

500000

400000

300000

200000

0

More

1400000

1300000

1200000

1100000

900000

1000000

800000

700000

600000

500000

400000

300000

200000

0

0

100000

0.02

0

100000

0.04

0.02

Round Durations

Figure 26: Non Corba RoundDurations,5 Streams with QoS

We also observed the effect of decreasing the round size to 15 frames transmitted every

500 msecs for 4 concurrent remote clients. Again we see that with QoS, the QLinux

application does a better job of ensuring that a greater number rounds are received within

their deadlines. The improvement from the case without QoS is also more noticeable with

QLinux.

18

RoundSize=15 Non Corba Remote QoS

0.18

0.16

0.16

0.14

0.14

0.12

0.12

0.1

Frequency

0.08

Fraction

0.1

Frequency

0.08

0.06

0.06

0.04

0.04

0.02

0.02

0

Figure 27: Corba Round Durations 4 Streams,

Round size=15 frames

More

700000

650000

600000

550000

500000

450000

400000

350000

300000

250000

200000

150000

100000

0

More

700000

650000

600000

550000

500000

450000

400000

350000

300000

250000

200000

150000

100000

0

50000

0

Round Duration

50000

Fractions

RoundSize=15 Corba Remote QoS

0.18

Round Duration

Figure 28: Non Corba Round Durations 4 Streams,

Round size=15 frames

3.8 Video Streaming performance in presence of another application

In this experiment, we’ve attempted to measure how the performance of a time critical

application is affected by the presence of another non-time critical application. This was

done by running the Streaming Server and the HTTP server on the same host. 4 streaming

clients and a certain number of HTTP clients make concurrent requests to their respective

servers. We observe the effect of increasing the number of HTTP clients on the Average

Round Duration measured by the streaming clients.

The results of some preliminary experiments are presented below in figures 29 and 30

19

Application Mix, 4 Streams + HTTP, with QoS

1400000

1200000

1200000

1000000

1000000

800000

Corba

Non Corba

600000

Avg Round Durations

Avg Round Durations

Application Mix, 4 Streams + HTTP No QoS

1400000

800000

Corba

Non Corba

600000

400000

400000

200000

200000

0

0

0

2

4

6

8

10

12

No of HTTP Clients

Figure 29: Average Round Durations, 4 Client

No QoS, increasing no of HTTP Clients

0

2

4

6

8

10

12

No of HTTP Clients

Figure 30: Average Round Durations, 4 Client

with QoS, increasing no of HTTP Clients

The experiments involving these applications are being continued by others and will be

included in a later document.

3.9 Industrial Control System Simulation

This application simulates an industrial control console system[4]. The application

consists of 2 distinct components. The first one models a supervisor’s command console

in which a client program from time to time issues a command to a remote actuator

device (simulated by a remote server). The device performs the operation requested by

the client (simulated by a brief busy-wait) and then sends an acknowledgement back to

the client. The operator is simulated by automatically generated requests at random time

intervals (100 – 300 msecs).

The second component is a remote-sensing kind of application, in which a sensor

(simulated by a supplier) periodically (every 20 msecs) sends an update of a

measurement to a remote monitoring system (simulated by a consumer).

The QLinux version of this application consists of two client-server pairs, one for the

Command Console and the other for the remote sensor. Both applications are nonconcurrent and use a TCP based socket for communication. In the command control

application, the server on receiving a connection waits for the client to send an operation

on the open socket, performs a brief busy wait and sends back a single byte

acknowledgement. The client issues commands at random time intervals and records the

response time for requests. In the remote-sensing application, the server on getting a

connection periodically sends a byte of data to the client, which measures the arrival

times of these updates.

For TAO, the Command Console application uses the RPC-like mechanism to send a

request and receive a response. The remote-sensing application uses the Real Time event

channel for communication between the sensor and the monitoring system.

20

The metrics of interest in this application are the jitter in the arrival of sensor updates and

the response times for the Command-Console requests.

These experiments were only performed for the remote client and QoS was enabled for

Remote Sensing Application.

The average response times for the Command-Console application requests are

summarized below:

Response Time (usecs)

CORBA

QLinux

Remote Sensor NOT Running

67974.33 96.09

67617.63 12.97

Remote Sensor Running

70960.69 443.86

67853.9 11.53

As can be seen from the above table, the performance of the Command-Console

application in the absence of the Remote-Sensing application for both QLinux and

CORBA is comparable, however the degradation in performance when the Remote

Sensing Application is added to the server load, is more significant in the case of

CORBA than QLinux because of the extra overhead associated with CORBA

applications.

In the case of the Remote Sensing applications, we measure the Update sending times at

the sender and the Update receiving time at the client. The differences between

successive update sending times gives an indication of how well the threads of the

sending application are scheduled. Ideally these differences should be constant. These

experiments were performed with the Command-Console application also running

simultaneously on the same hosts.

The differences between update arrival times at the client also incorporate the jitter

introduced by the communication medium between the two applications. These

measurements are summarized in the following table:

Avg. Update Differences

(usecs)

Sending Side

CORBA

QLinux

29996.37 9.21

29997.65 4.63

Receiving Side

29992.54 7.80

29997.63 5.70

As can be seen, there is really not much to choose between the performance of the two

systems. They both manage to give an almost identical level of performance.

4. Summary & Discussion of Qualitative Comparisons

One of the objectives of this work was to provide, apart from the quantitative comparison

of the two systems presented above, also give a qualitative evaluation of the relative ease

of use of the two systems and identify any potential stumbling blocks.

21

In our experience with developing corresponding applications for both QLinux and TAO,

we observed the following pros and cons of the two systems.

4.1 QLinux

One of the biggest advantages that QLinux offers it’s users is the ability to use the same,

API which most systems programmers are already familiar with (basic socket based

programming). Using the additional QoS features available, does not add significantly to

the application complexity. This makes the task of porting already existing applications

to use these features reasonably straightforward. The developer has to learn only a few

new system calls, however, the documentation (man pages), for these leaves some room

for improvement. This is a significant advantage in the usability of the system.

On the other hand, in the simplicity of it’s API, lies also a slight drawback, in that it does

not provide the user with any high-level interfaces to simplify the task of developing

networked/distributed applications. Also since QLinux deals with QoS only in terms of

HSFQ scheduling parameters (i.e. bandwidth share), the task of actually specifying the

QoS for a given applications is not straightforward. This specially so since it is common

to describe QoS parameters in terms of deadlines, end-end delays and periodicity which

do not fit directly into the QLinux framework.

The other noticeable drawbacks of the QLinux API is that it requires that all QoS related

system calls have to be executed in super-user mode. Thus making large scale in a simple

way impractical. A solution to this problem would be to develop run-time services which

run as the super-user and provide an interface for other user’s applications to request QoS

for themselves. It would also be possible to do some admission control in this way. Also,

the error reporting mechanisms for the system calls for CPU HSFQ and Link HSFQ are

neither consistent nor convenient. Link HSFQ system calls use system messages to report

erroneous or exceptional conditions which makes it hard to write clean and robust

applications.

4.2 TAO / CORBA

Since TAO is first a Distributed Object Computing (DOC) middle-ware and then a RealTime system, it suffers from the problem of being a large, complicated and a very

general-purpose application development framework. This implies that any person

wishing to use TAO for a Real-Time application has to first overcome the significant

hurdle of understanding the various concepts related to DOC and the large amount of

details associated with a specific DOC system (such as CORBA). For small, simple

applications, this overhead can be prohibitive.

Also since the use of CORBA requires the developer to follow a certain application

development framework. It makes the task of porting existing applications, which use

conventional socket based communication, to use TAO a very complicated and often

impractical task.

22

However, once this initial hurdle is overcome, the middle-ware provides many features to

make the task of developing distributed applications simpler and faster. Since the middleware frees the developer of the lower level implementation details (the socket

programming), it substantially reduces development time for simple applications because

of the time saved in writing hard to debug, error prone networking code.

This freedom, however, comes at the cost of flexibility. Thus while it is extremely easy

and quick to develop applications which conform well to the models commonly used in

DOC. Developing an application that has a unique/uncommon communication model

might involve a lot of unnecessary complications.

Building TAO and TAO based applications is surprisingly memory and CPU intensive. A

minimal build of ACE, TAO and TAO OrbServices took close to 4 hours on an otherwise

lightly loaded Pentium-II, 300 Mhz machine with 192MB RAM. It takes 5-10 minutes to

build from scratch the simplest TAO based application when a normal socket based one

takes only a few seconds.

For the purpose of specifying QoS guarantees, TAO provides a rather intuitive and easy

to understand interface which involves directly specifying deadlines, periodicity of

events, etc. Also the ORB supports various kinds of concurrency models in servers and

the services that can be used by simply providing command line parameters or

configuration files during startup.

23

References

[1] P. Goyal and X. Guo and H.M. Vin, A Hierarchical CPU Scheduler for Multimedia Operating Systems,

Proceedings of 2nd Symposium on Operating System Design and Implementation (OSDI'96), Seattle, WA,

pages 107-122, October 1996.

[2] P. Goyal and H. M. Vin and H. Cheng, Start-time Fair Queuing: A Scheduling Algorithm for Integrated

Services Packet Switching Networks, Proceedings of ACM SIGCOMM'96, pages 157-168, August 1996

[3] Sumedh Mungee, Nagarajan Surendran, Douglas C. Schmidt, The Design and Performance of a

CORBA Audio/Video Streaming Service

[4] Krithi Ramamritham, Chia Shen, Oscar Gonzalez, Shubo Sen and Shreedar Shirgurkar, Using Windows

NT for Real-Time Applications: Experimental Observations and Recommendations, Proceedings of IEEE

RTAS'98

[5] Douglas C. Schmidt, TAO Overview http://www.cs.wustl.edu/~schmidt/TAO-intro.html

[6] Douglas C. Schmidt, Using the Real Time Event Service

http://www.cs.wustl.edu/~schmidt/events_tutorial.html

24