Hybrid PC architecture Jeremy Sugerman Kayvon Fatahalian


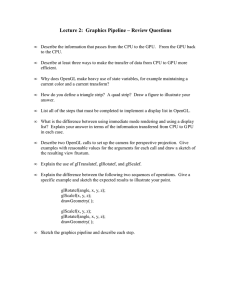

Trends
Multi-core CPUs
Generalized GPUs
  – Brook, CTM, CUDA
Tighter CPU-GPU coupling
  – PS3
  – Xbox 360
  – AMD “Fusion” (faster bus, but GPU still treated as batch coprocessor)

CPU-GPU coupling
Important apps (game engines) exhibit workloads suitable for both CPU-style and GPU-style cores
CPU friendly: IO, AI/planning, collisions, adaptive algorithms
GPU friendly: geometry processing, shading, physics (fluids/particles)

CPU-GPU coupling
Current: coarse-granularity interaction
  – Control: CPU launches batch of work, waits for results before sending more commands (multi-pass)
  – Necessitates algorithmic changes
GPU is slave coprocessor
  – Limited mechanisms to create new work
  – CPU must deliver LARGE batches
  – CPU sends GPU commands via “driver” model

Fundamentally different cores
“CPU” cores
  – Small number (tens) of HW threads
  – Software (OS) thread scheduling
  – Memory system prioritizes minimizing latency
“GPU” cores
  – Many HW threads (>1000), hardware scheduled
  – Minimize per-thread state (state kept on-chip)
    • Shared PC, wide SIMD execution, small register file
    • No thread stack
  – Memory system prioritizes throughput
(Not clear: sync, SW-managed memory, isolation, resource constraints)

GPU as a giant scheduler
[Figure: pipeline cmd buffer → IA → VS (1-to-1) → GS (1-to-N, bounded) → RS (1-to-N, unbounded) → PS (1-to-(0 or X), X static) → OM → output stream; stages connected by on-chip queues, with data buffers held off-chip.]

GPU as a giant scheduler
[Figure: hardware scheduler (IA, RS) with a thread scoreboard dispatching VS/GS/PS programs to the processing cores; on-chip command, vertex, primitive, and fragment queues; off-chip data buffers; OM performs read-modify-write.]

GPU as a giant scheduler
Rasterizer (+ input cmd processor) is a domain-specific HW work scheduler
  – Millions of work items/frame
  – On-chip queues of work
  – Thousands of HW threads active at once
  – CPU threads (via API commands), GS programs, and fixed-function logic generate work
  – Pipeline describes dependencies
What is the work here?
  – Vertices
  – Geometric primitives
  – Fragments
  – In the future: rays?
Well-defined resource requirements for each category

The project
Investigate making “GPU” cores first-class execution engines in a multi-core system
Add:
  1. Fine-granularity interaction between cores
  2. Processing work on any core can create new work (for any other core)
Hypothesis: scheduling work (actions) is the key problem
  – Keeping state on-chip
Drive architecture simulation with an interactive graphics pipeline augmented with raytracing

Our architecture
Multi-core processor = some “CPU” + some “GPU” style cores
Unified system address space
“Good” interconnect between cores
Actions (work) on any core can create new work
Potentially…
  – Software-managed configurable L2
  – Synchronization/signaling primitives across actions

Need new scheduler
GPU HW scheduler leverages highly domain-specific information
  – Knows dependencies
  – Knows resources used by threads
Need to move to a more general-purpose HW/SW scheduler, yet still do okay
Questions
  – What scheduling algorithms?
  – What information is needed to make decisions?

Programming model = queues
Model system as a collection of work queues
  – Create work = enqueue
  – SW-driven dispatch of “CPU” core work
  – HW-driven dispatch of “GPU” core work
  – Application code does not dequeue

Benefits of queues
Describe classes of work
  – Associate queues with environments
    • GPU (no gather)
    • GPU + gather
    • GPU + create work (bounded)
    • CPU
    • CPU + SW-managed L2
Opportunity to coalesce/reorder work
  – Fine-grained creation, bulk execution
Describe dependencies

Decisions
Granularity of work
  – Enqueue elements or batches?
“Coherence” of work (batching state changes)
  – Associate kernels/resources with queues (part of env)?
Constraints on enqueue
  – Fail gracefully in case of explosion
Scheduling policy
  – Minimize state (size of queues)
  – How to understand dependencies

First steps
Coarse architecture simulation
  – Hello world = run CPU + GPU threads, GPU threads create other threads
    • Identify GPU ISA additions
Establish what information the scheduler needs
  – What are the “environments”?
Eventually drive simulation with hybrid renderer

Evaluation
Compare against architectural alternatives
  1. Multi-pass rendering (very coarse-grain) with domain-specific scheduler
     – Paper: “GPU” microarchitecture comparison with our design
     – Scheduling resources
     – On-chip state / performance tradeoff
     – On-chip bandwidth
  2. Many-core homogeneous CPU

Summary
Hypothesis: elevating “GPU” cores to first-class execution engines is a better way to build a hybrid system
  – Apps with dynamic/irregular components
  – Performance
  – Ease of programming
Allow all cores to generate new work by adding to system queues
Scheduling work in these queues is the key issue (goal: keep queues on chip)

Three fronts
GPU micro-architecture
  – GPU work creating GPU work
  – Generalization of DirectX 10 GS
CPU-GPU integration
  – GPU cores as first-class execution environments (dump the driver model)
  – Unified view of work throughout machine
  – Any core creates work for other cores
GPU resource management
  – Ability to correctly manage/virtualize GPU resources
  – Window manager
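The queue-based programming model from the slides can be illustrated with a minimal, single-threaded sketch. It is written for illustration only: every name in it (WorkQueue, Scheduler, emit, vertex_kernel, primitive_kernel) is hypothetical and is not the authors' design. Kernels create work only by enqueuing through emit, and only the scheduler dequeues. Each queue has a fixed capacity and refuses work past it, which is one assumed reading of "fail gracefully in case of explosion". The dispatch policy, which always services the most downstream non-empty queue, is also an assumption. It is one simple heuristic for keeping queue sizes, and therefore on-chip state, small.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class WorkQueue:
    """Bounded queue for one class of work (hypothetical)."""
    name: str
    capacity: int
    items: deque = field(default_factory=deque)
    max_occupancy: int = 0  # high-water mark, a proxy for on-chip state

    def enqueue(self, item) -> bool:
        # Assumed "fail gracefully" behavior: refuse work past capacity.
        if len(self.items) >= self.capacity:
            return False
        self.items.append(item)
        self.max_occupancy = max(self.max_occupancy, len(self.items))
        return True


class Scheduler:
    """Owns all dequeues; kernels may only enqueue via emit()."""

    def __init__(self, stages: List[str], capacity: int):
        self.order = stages  # pipeline order, upstream first
        self.queues = {s: WorkQueue(s, capacity) for s in stages}
        self.kernels: Dict[str, Callable] = {}
        self.dropped = 0  # work refused because a queue was full

    def emit(self, stage: str, item) -> None:
        if not self.queues[stage].enqueue(item):
            self.dropped += 1

    def run(self) -> None:
        while True:
            # Assumed policy: service the most downstream non-empty queue,
            # so existing work is consumed before more is created.
            stage = next((s for s in reversed(self.order) if self.queues[s].items), None)
            if stage is None:
                return
            item = self.queues[stage].items.popleft()
            self.kernels[stage](item, self.emit)


def vertex_kernel(v, emit):
    emit("primitive", v)  # 1-to-1, like VS


def primitive_kernel(p, emit):
    for k in range(3):  # bounded 1-to-N, like GS
        emit("fragment", (p, k))


out = []
sched = Scheduler(["vertex", "primitive", "fragment"], capacity=8)
sched.kernels["vertex"] = vertex_kernel
sched.kernels["primitive"] = primitive_kernel
sched.kernels["fragment"] = lambda f, emit: out.append(f)  # sink, like OM
for v in range(3):
    sched.emit("vertex", v)  # the "CPU" seeds the initial work
sched.run()
print(len(out), sched.dropped, sched.queues["fragment"].max_occupancy)  # 9 0 3
```

With a capacity of 8, the three vertices yield nine fragments and no drops. The fragment queue never holds more than three items, which is the fan-out of a single primitive. Servicing upstream queues first would instead push all nine fragments into the fragment queue before any are consumed, overflowing the capacity of 8. That is the kind of queue growth the scheduling-policy question is about.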