Processor Opportunities Jeremy Sugerman Kayvon Fatahalian

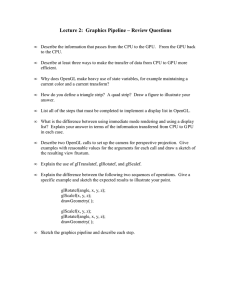

Outline
Background
Introduction and Overview
Phase One / “The First Paper”

Background
Evolved from the GPU, Cell, and x86 ray tracing work with Tim (Foley).
Grew out of the FLASHG talk Jeremy gave in February 2006 and Kayvon’s experiences with Sequoia.
Daniel, Mike, and Jeremy pursued related short term manifestations in our I3D 2007 paper.

GPU K-D Tree Ray Tracing
k-D construction really hard. Especially lazy.
Ray – k-D Tree intersection painful
  – Entirely data dependent control flow and access patterns
  – With SSE packets, extremely CPU efficient
Local shading runs great on GPUs, though
  – Highly coherent data access (textures)
  – Highly coherent execution (materials)
Insult to injury: Rasterization dominates tracing eye rays

“Fixing” the GPU
Ray segments are a lot like fragments
  – Add frame buffer (x,y) and weight
  – Otherwise independent, but highly coherent
But: Rays can generate more rays
What if:
  – Fragments could create fragments?
  – “Shading” and “Intersecting” fragments could both be runnable at once?
But:
  – SIMD still inefficient and (lazy) k-D build still hard

Moving to the Present…

Applications are FLOP Hungry
“Important” workloads want lots of FLOPS
  – Video processing, Rendering
  – Physics / Game “Physics”
  – Computational Biology, Finance
  – Even OS compositing managers!
All can soak vastly more compute than current CPUs deliver
All can utilize thread or data parallelism.

Compute-Maximizing Processors
Or “throughput oriented”
Packed with ALUs / FPUs
Trade single-thread ILP for higher level parallelism
Offer an order of magnitude potential performance boost
Available in many flavours: SIMD, Massively threaded, Hordes of tiny cores, …

Compute-Maximizing Processors
Generally offered as off-board “accelerators”
Performance is only achieved when utilization stays high. Which is hard.
Mapping / porting algorithms is a labour intensive and complex effort.
This is intrinsic.
  – Within any given area / power / transistor budget, an order of magnitude advantage over CPU performance comes at a cost…
  – If it didn’t, the CPU designers would steal it.

Real Applications are Complicated
Complete applications have aspects both well suited to and pathological for compute-maximizing processors.
  – Often co-mingled.
Porting is often primarily disentangling into large enough chunks to be worth offloading.
Difficulty in partitioning and cost of transfer disqualify some likely seeming applications.

Enter Multi-Core
Single threaded CPU scaling is very hard.
Multi-core and multi-threaded cores are already mainstream
  – 2-, 4-way x86es, 9-way Cell, 16+ way* GPU
Multi-core allows heterogeneous cores per chip
  – Qualitatively “easier” acceptance than multiple single core packages.
  – Qualitatively “better” than an accelerator model

Heterogeneous Multi-Core
Balance the mix of conventional and compute cores based upon target market.
  – Area / Power budget can be maximized for e.g. Consumer / Laptop versus Server
Always worth having at least one throughput core per chip.
  – Order of magnitude advantage when it works
  – Video processing and window system effects
  – A small compute core is not a huge trade off.

Heterogeneous Multi-Core
Three significant advantages:
  – (Obvious) Inter-core communication and coordination become lighter weight.
  – (Subtle) Compute-maximizing cores become ubiquitous CPU elements and thus create a unified architectural model predicated on their availability. Not just a CPU plus accelerator!
  – The CPU-Compute interconnect and software interface have a single owner and can thus be extended in key ways.

Changing the Rules
AMD (+ ATI) already rumbling about “Fusion”
Just gluing a CPU to a GPU misses out, though.
  – (Still CPU + Accelerator, with a fat pipe)
A few changes break the most onerous flexibility limitations AND ease the CPU-Compute communication and scheduling model.
  – Without being impractical (i.e. dropping down to CPU level performance)

Changing the Rules
Work queues / Write buffers as first class items
Simple, but useful building block already pervasive for coordination / scheduling in parallel apps.
Plus: Unified address space, simple sync/atomicity, …

Queue / Buffer Details
Conventional or Compute threads can enqueue for queues associated with any core.
Dequeue / Dispatch mechanisms vary by core
  – HW Dispatched for a GPU-like compute core
  – TBD (Likely SW) for thin multi-threaded cores
  – SW Dispatched on CPU cores
Queues can be entirely application defined or reflect hardware resource needs of entries.

CPU+GPU hybrid

What should change?
Accelerator model of computing
  – Today: work created by CPU, in batches
  – Batch processing not a prerequisite for efficient coherent execution
Paper 1: GPU threads create new GPU threads (fragments generate fragments)

What should change?
GPU threads to create new GPU threads
GPU threads to create new CPU work (paper 2)
Efficiently run data parallel algorithms on a GPU where per-element processing:
  – Goes through an unpredictable number of stages
  – Spends an unpredictable amount of time in each stage
  – May dynamically create new data elements
  – Is still coherent, but unpredictably so (have to dynamically find coherence to run fast)

Queues
Model GPU as collection of work queues
Applications consist of many small tasks
  – Task is either running or in a queue
  – Software enqueue = create new task
  – Hardware decides when to dequeue and start running task
All the work in a queue is in similar “stage”

Queues
GPUs today have similar queuing mechanisms
They are implicit/fixed function (invisible)

GPU as a giant scheduler
[Figure: the GPU pipeline drawn as stages joined by on-chip queues: cmd buffer, IA, VS, GS, RS, PS, OM, with data buffers, stream out, and off-chip buffers (data) reached through the MC. Amplification labels in the figure: 1-to-1, 1-to-N (bounded), 1-to-N (unbounded), 1-to-(0 or X) (X static).]

GPU as a giant scheduler
[Figure: a “Hardware scheduler” with a thread scoreboard, processing cores running VS/GS/PS, and the IA, RS, and OM units. On-chip queues: command queue, vertex queue, geometry queue, fragment queue, memory queues. Off-chip buffers (data) are reached through the MC. Additional label: (read-modify-write).]

GPU as a giant scheduler
Rasterizer (+ input cmd processor) is a domain specific work scheduler
  – Millions of work items/frame
  – On-chip queues of work
  – Thousands of HW threads active at once
  – CPU threads (via DirectX commands), GS programs, fixed function logic generate work
  – Pipeline describes dependencies
What is the work here?
  – Vertices
  – Geometric primitives
  – Fragments
Well defined resource requirements for each category.

GPU Delta
Allow application to define queues
  – Just like other GPU state management
  – No longer hard-wired into chip
Make enqueue visible to software
  – Make it a “shader” instruction
Preserve “shader” execution
  – Wide SIMD execution
  – Stackless lightweight threads
  – Isolation

Research Challenges
Make create queue & enqueue operation feasible in HW
  – Constrained global operations
Key challenge: scheduling work in all the queues without domain specific knowledge
  – Keep queue lengths small to fit on chip
What is a good scheduling algorithm?
  – Define metrics
What information does scheduler need?

Role of queues
Recall GPU has queues for commands, vertices, fragments, etc.
  – Well-defined processing/resource requirements associated with queues
Now: Software associates properties with queues during queue instantiation
  – A.k.a. queues are typed

Role of queues
Associate execution properties with queues during queue instantiation
  – Simple: 1 kernel per queue
  – Tasks using no more than X regs
  – Tasks that do not perform gathers
  – Tasks that do not create new tasks
  – Future: Tasks to execute on CPU
Notice: COHERENCE HINTS!

Role of queues
Denote coherence groupings (where HW finds coherent work)
Describe dependencies: connecting kernels
Enqueue = async. add new work into system
Enqueue & terminate:
  – Point where coherence groupings change
  – Point where resource/environment changes

Design space
Queue setup commands / enqueue instructions
Scheduling algorithm (what are inputs?)
What properties associated with queues
Ordering guarantees
Determinism
Failure handling (kill or spill when queues full?)
Inter-task synch (or maintain isolation)
Resource cleanup

Implementation
GPU shader interpreter (SM4 + extensions)
  – “Hello world” = run CPU thread + GPU threads
    • GPU threads create other threads
    • Identify GPU ISA additions
  – GPU raytracer formulation
  – May investigate DX10 geometry shader
Establish what information scheduler needs
Compare scheduling strategies

Alternatives
Multi-pass rendering
  – Compare: scheduling resources
  – Compare: bandwidth savings
  – On chip state / performance tradeoff
Large monolithic kernel (branching)
  – CUDA/CTM
Multi-core x86

Three interesting fronts
Paper 1: GPU micro-architecture
  – GPU work creating new GPU work
  – Software defined queues
  – Generalization of DirectX 10 GS?
GPU resource management
  – Ability to correctly manage/virtualize GPU resources
CPU/compute-maximized integration
  – Compute cores? GPU/Niagara/Larrabee
  – Compute cores as first-class execution environments (dump the accelerator model)
  – Unified view of work throughout machine
  – Any core creates work for other cores
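
Sketch: the queue model in software
The “Queues” and “Role of queues” slides describe typed queues (one kernel per queue, optional “does not create new tasks” property), a software-visible enqueue, and a scheduler that decides when to dequeue. The Python below is only a toy simulation of that idea, not the proposed hardware or any real GPU API. Every name in it (WorkQueue, Machine, create_queue, enqueue, trace) is hypothetical, the “fullest queue first” policy is an arbitrary stand-in for the open scheduling question, and the ray tracer pretends every ray hits so that “shade” fragments can create new “intersect” fragments.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List, Optional, Tuple


@dataclass
class WorkQueue:
    """A typed queue (hypothetical): one kernel per queue, so all entries share a stage."""
    name: str
    kernel: Callable[["Machine", object], None]
    may_enqueue: bool = True  # models the "tasks that do not create new tasks" property
    entries: Deque[object] = field(default_factory=deque)


class Machine:
    """Toy model of a processor exposed as a collection of work queues."""

    def __init__(self, simd_width: int = 4) -> None:
        self.simd_width = simd_width
        self.queues: Dict[str, WorkQueue] = {}
        self.batches: List[Tuple[str, int]] = []  # log of (queue name, batch size)
        self._running: Optional[WorkQueue] = None

    def create_queue(self, name, kernel, may_enqueue=True) -> None:
        self.queues[name] = WorkQueue(name, kernel, may_enqueue)

    def enqueue(self, name: str, item: object) -> None:
        """Software-visible enqueue: asynchronously add a new task to a queue."""
        if self._running is not None and not self._running.may_enqueue:
            raise RuntimeError(f"queue {self._running.name!r} may not create tasks")
        self.queues[name].entries.append(item)

    def run(self) -> None:
        """Assumed policy: repeatedly take up to simd_width tasks from the fullest queue."""
        while True:
            q = max(self.queues.values(), key=lambda wq: len(wq.entries))
            if not q.entries:
                break
            n = min(self.simd_width, len(q.entries))
            batch = [q.entries.popleft() for _ in range(n)]
            self.batches.append((q.name, n))
            self._running = q
            try:
                for item in batch:  # stands in for one coherent SIMD launch
                    q.kernel(self, item)
            finally:
                self._running = None


def trace(num_pixels: int = 8, max_depth: int = 2):
    """Fragments generating fragments: shading spawns secondary rays."""
    framebuffer = [0.0] * num_pixels
    machine = Machine(simd_width=4)

    def intersect(m: Machine, ray) -> None:
        pixel, weight, depth = ray
        m.enqueue("shade", (pixel, weight, depth))  # pretend every ray hits

    def shade(m: Machine, hit) -> None:
        pixel, weight, depth = hit
        framebuffer[pixel] += weight  # accumulate at frame buffer (x,y) by weight
        if depth < max_depth:
            m.enqueue("intersect", (pixel, weight * 0.5, depth + 1))

    machine.create_queue("intersect", intersect)
    machine.create_queue("shade", shade)
    for p in range(num_pixels):  # the "CPU" seeds the eye rays
        machine.enqueue("intersect", (p, 1.0, 0))
    machine.run()
    return framebuffer, machine.batches
```

Calling trace() returns the accumulated frame buffer and the log of batches the toy scheduler launched. Swapping the policy inside run() is one way to experiment with the “what is a good scheduling algorithm?” question, and the per-queue property check in enqueue() shows how queue types could act as hints the scheduler can rely on.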