An Assessment Guide to Educational Effectiveness

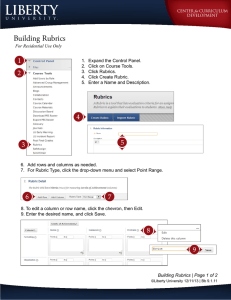
