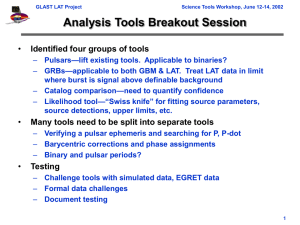

THE GLAST SCIENCE ANALYSIS TOOLS
