GLAST Large Area Telescope Instrument Science Operations Center CDR Section 6


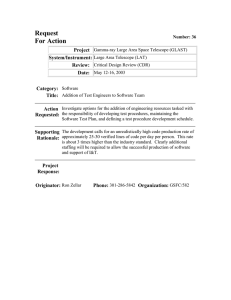

GLAST LAT Project, ISOC CDR, 4 August 2004
Gamma-ray Large Area Space Telescope (GLAST) Large Area Telescope
Instrument Science Operations Center CDR, Section 6: Network and Hardware Architecture
Richard Dubois, SAS System Manager
Document: LAT-PR-04500

Outline
• SAS Summary Requirements
• Pipeline
  – Requirements
  – Processing database
  – Prototype status
• Data Storage and Archive
• Networking
  – Proposed Network Topology
  – Network Monitoring
  – File exchange
  – Security

Level III Requirements Summary
Ref: LAT-SS-00020
Function: Flight Ground Processing

| Requirement | Expected Performance (if applicable) | Verification |
|---|---|---|
| perform prompt processing from Level 0 through Level 1 | keep pace with up to 10 GB Level 0 per day and deliver to SSC within 24 hrs | demonstration |
| provide near-real time monitoring to IOC | within 6 hrs | demonstration |
| maintain state and performance tracking | | demonstration |
| facilitate monitoring and updating of instrument calibrations | | demonstration |
| archive all data passing through | > 50 TB on disk and tape backup | demonstration |

• Basic requirements are to routinely handle ~10 GB/day in multiple passes coming from the MOC
  – @ 150 Mb/s, 2 GB should take < 2 mins
• Outgoing volume to SSC is << 1 GB/day
• NASA 2810 Security Regs: normal security levels for IOCs, as already practiced by computing centers

Pipeline Spec
• Function: the Pipeline facility has five major functions
  – automatically process Level 0 data through reconstruction (Level 1)
  – provide near real-time feedback to IOC
  – facilitate the verification and generation of new calibration constants
  – produce bulk Monte Carlo simulations
  – back up all data that passes through
• Must be able to perform these functions in parallel
• Fully configurable, parallel task chains allow great flexibility for use online as well as offline
  – Will test the online capabilities during Flight Integration
• The pipeline database and server, and diagnostics database have been specified (will need revision after prototype experience!)
  – database: LAT-TD-00553
  – server: LAT-TD-00773
  – diagnostics: LAT-TD-00876

ISOC Network and Hardware Architecture
[Figure: ISOC network and hardware architecture diagram. Labeled elements: SLAC Internet; LAT ISOC Web Server; Firewalls; PVO Workstations; FSW Workstations; CHS Workstations; Linux PC (Hkpg Replay ITOS); Linux PC (Realtime connection ITOS); Gateway System (Oracle, GINO, FastCopy/DTS); SCS CPU Farm; SCS Storage Farm; SAS/SP Workstations; Abilene Network; MOC; GSSC; Anomaly Tracking & Notification System; LAT Test Bed Lab containing Solaris Workstation (VxWorks tools), 1553, LAT Test Bed, SIIS, LVDS (S/C Sim), Linux PC (Test Bed ITOS).]

Expected Capacity
• We routinely made use of 100-300 processors on the SLAC farm for repeated Monte Carlo simulations, lasting weeks
  – Expanding farm net to France and Italy
  – Unknown yet what our MC needs will be
  – We are very small compared to our SLAC neighbour BABAR; the computing center is sized for them
    • 2000-3000 CPUs; 300 TB of disk; 6 robotic silos holding ~30000 200 GB tapes total
  – SLAC computing center has guaranteed our needs for CPU and disk, including maintenance for the life of the mission.
  – Data rate expanded to ~300 Hz with fatter pipe and compression
    • ~75 CPUs to handle 5 hrs of data in 1 hour @ 0.15 sec/event

Straw Budget Profile
• Dominated by disk/tape costs
• Upper limit on needs; approved

| Item | FY05 | FY06 | FY07 | FY08 |
|---|---|---|---|---|
| farm CPU total | 20 | 40 | 75 | 95 |
| farm CPU increment | 20 | 20 | 35 | 20 |
| farm CPU cost | 25 | 35 | 43.75 | 25 |
| compute servers total | 4 | 6 | 8 | 12 |
| compute servers incr | 2 | 2 | 2 | 4 |
| compute srv cost | 3.5 | 3.5 | 3.5 | 7 |
| user servers total | 3 | 5 | 7 | 9 |
| user servers incr | 2 | 2 | 2 | 2 |
| user srv cost | 2.5 | 2.5 | 2.5 | 2.5 |
| pipeline servers total | 6 | 6 | 8 | 8 |
| pipeline servers incr | 4 | 0 | 2 | |
| pipeline srv cost | 5 | 0 | 2.5 | 0 |
| database server cost | | 10 | | |
| disk (TB) total | 25 | 50 | 200 | 400 |
| disk (TB) incr | 25 | 25 | 150 | 200 |
| disk cost | 200 | 125 | 600 | 800 |
| tapes needed total | 250 | 500 | 2000 | 4000 |
| tapes needed incr | 250 | 250 | 1500 | 2000 |
| tape cost | 20 | 20 | 120 | 160 |
| Total cost (k$) | 256 | 196 | 772.25 | 994.5 |

A Possible 10% Solution

| Item | FY05 | FY06 | FY07 | FY08 |
|---|---|---|---|---|
| CPU (increment) | 20 | 20 | 35 | 20 |
| CPU cost | 25k | 25k | 44k | 25k |
| disk (TB) | 25 | 25 | 40 | 40 |
| disk cost | 200k | 150k | 160k | 160k |
| tape cost | 20k | 20k | 24k | 32k |
| Total | 245k | 195k | 228k | 217k |

• base per flight year of L0 + all digi = ~25 TB
• then 10% of 300 Hz recon
• disk in 05-06 is for Flight Int, DC2 and DC3 (WAG)

Pipeline in Pictures
[Figure: pipeline screenshots, annotated as follows.]
• State machine + complete processing record
• Expandable and configurable set of processing nodes
• Configurable linked list of applications to run

Processing Dataset Catalogue
[Figure: dataset catalogue screenshots, annotated as follows.]
• Processing records
• Datasets grouped by task
• Dataset info is here

First Prototype - OPUS
• Open source project from STScI
• In use by several missions
• Now outfitted to run DC1 dataset
• OPUS Java managers for pipelines
• Replaced by GINO
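The pipeline slides above describe a state machine with a complete processing record, driving a configurable list of applications. A minimal sketch of that idea follows. It is not the Gino or OPUS implementation: the names `State`, `Step` and `TaskChain` are hypothetical, the record is kept in memory rather than in the Oracle processing database, and steps run in-process rather than through LSF batch.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class State(Enum):
    """Hypothetical processing states for one step of a task chain."""
    RUNNING = "running"
    DONE = "done"
    FAILED = "failed"


@dataclass
class Step:
    """One application in the chain: maps an input dataset name to an output name."""
    name: str
    run: Callable[[str], str]


@dataclass
class TaskChain:
    """Configurable, ordered list of steps plus a record of every step attempted."""
    name: str
    steps: List[Step]
    records: List[dict] = field(default_factory=list)

    def process(self, dataset: str) -> State:
        current = dataset
        for step in self.steps:
            # Record the attempt before running, so failures are tracked too.
            record = {"task": self.name, "step": step.name,
                      "input": current, "state": State.RUNNING}
            self.records.append(record)
            try:
                current = step.run(current)
            except Exception as exc:
                record["state"] = State.FAILED
                record["error"] = str(exc)
                return State.FAILED
            record["state"] = State.DONE
            record["output"] = current
        return State.DONE


# Illustrative chain: the step names and dataset naming scheme are made up.
chain = TaskChain("level1", [
    Step("digi", lambda d: d + ".digi"),
    Step("recon", lambda d: d + ".recon"),
])
final_state = chain.process("run0001")


def broken(dataset: str) -> str:
    raise RuntimeError("calibration file missing")


bad_chain = TaskChain("level1-bad", [Step("digi", broken),
                                     Step("recon", lambda d: d + ".recon")])
bad_state = bad_chain.process("run0002")
```

Because the chain is plain data (a list of steps), reconfiguring it for online or offline use only means building a different list. A failed step stops the chain and leaves its error in the record.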
Gino - Pipeline View
[Figure: Gino pipeline view screenshot.]
• Once we had inserted Oracle DB and LSF batch, there was only a small piece of OPUS left. Gone now!

Disk and Archives
• We expect ~10 GB raw data per day and assume comparable volume of events for MC
  – Leads to ~40 TB/year for all data types
• No longer frightening: keep it all on disk
  – Have funding approval for up to 200 TB/yr
• Use SLAC's mstore archiving system to keep a copy in the silo
  – Already practicing with it and will hook it up to Gino
• Archive all data we touch; track in dataset catalogue
  – Not an issue

Network Path: SLAC-Goddard
[Figure: map of the network path. Labeled points: SLAC, Stanford, Oakland (CENIC), LA, UC-AID (Abilene), Houston, Atlanta, Washington, GSFC (77 ms ping).]

ISOC Stanford/SLAC Network
• SLAC Computing Center
  – OC48 connection to outside world
  – provides data connections to MOC and SSC
  – hosts the data and processing pipeline
  – Transfers MUCH larger datasets around the world for BABAR
  – World renowned for network monitoring expertise
    • Will leverage this to understand our open internet model
  – Sadly, a great deal of expertise with enterprise security as well
• Part of ISOC expected to be in new Kavli Institute building on campus
  – Connected by fiber (~2 ms ping)
  – Mostly monitoring and communicating with processes/data at SLAC

Network Monitoring
[Figure: network monitoring plots.]
• Need to understand failover reliability, capacity and latency

LAT Monitoring
[Figure: LAT monitoring display.]
• Keep track of connections to collaboration sites
• Alerts if they go down
• Fodder for complaints if poor connectivity
• Monitoring nodes at most LAT collaborating institutions

File Exchange: DTS & FastCopy
• Secure
  – No passwords in plain text etc
• Reliable
  – Has to work > 99% of the time (say)
• Handle the (small) data volume
  – order 10 GB/day from Goddard (MOC); 0.3 GB/day back to Goddard (SSC)
• Keep records of transfers
  – database records of files sent and received
  – handshakes: both ends agree on what happened
  – some kind of clean error recovery
  – notification sent out on failures
• Web interface to track performance
• GOWG investigating DTS & FastCopy now
  – Either will work

Security
• Network security: application vs network
  – ssh/vpn among all sites: MOC, SSC and internal ISOC
  – A possible avenue is to make all applications secure (i.e. encrypted), using SSL.
• File and Database security
  – Controlled membership in disk ACLs
  – Controlled access to databases
  – Depend on SLAC security otherwise

Summary
• We are testing out the Gino pipeline as our first prototype
  – Getting its first test in Flight Integration support
  – Interfaces to processing database and SLAC batch done
  – Additional practice with DC2, 3
• We expect to need O(50 TB)/year of disk and ~2-3x that in tape archive
  – Not an issue, even if we go up to 200 TB/yr
• We expect to use Internet2 connectivity for reliable and fast transfer of data between SLAC and Goddard
  – Transfer rates of > 150 Mb/s already demonstrated
  – < 2 min transfer for standard downlink. More than adequate.
  – Starting a program of routine network monitoring to practice
• Network security is an ongoing, but largely solved, problem
  – There are well-known mechanisms to protect sites
  – We will leverage considerable expertise from the SLAC and Stanford networking/security folks
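The transfer-time figures quoted in this section (a 2 GB downlink in under 2 minutes at 150 Mb/s) follow from simple arithmetic, sketched below. The function name `transfer_seconds` is hypothetical. The sketch assumes 1 GB = 10^9 bytes and a sustained rate with no protocol overhead, neither of which the slides state.

```python
def transfer_seconds(volume_gb: float, rate_mbps: float) -> float:
    """Seconds needed to move volume_gb gigabytes at rate_mbps megabits per second.

    Assumes 1 GB = 1e9 bytes and 1 Mb = 1e6 bits, with no protocol overhead.
    """
    bits = volume_gb * 1e9 * 8
    return bits / (rate_mbps * 1e6)


# Figures taken from the slides: a 2 GB downlink and ~10 GB/day, at 150 Mb/s.
downlink_s = transfer_seconds(2, 150)
daily_s = transfer_seconds(10, 150)

print(f"2 GB downlink: {downlink_s:.0f} s")
print(f"10 GB daily volume: {daily_s / 60:.1f} min")
```

Under these assumptions a 2 GB downlink takes about 107 s, consistent with the "< 2 min" figure, and a full day's ~10 GB takes roughly 9 minutes of link time.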