A Performance Study of BDD-Based Model Checking Bwolen Yang

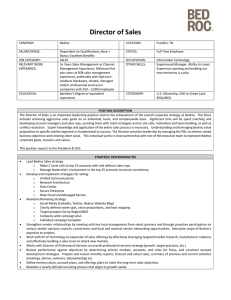


Randal E. Bryant, David R. O’Hallaron, Armin Biere, Olivier Coudert, Geert Janssen, Rajeev K. Ranjan, Fabio Somenzi

Motivation for Studying Model Checking (MC)
  MC is an important part of formal verification
    digital circuits and other finite state systems
  BDD is an enabling technology for MC
  Not well studied
    Packages are tuned using combinational circuits (CC)
  Qualitative differences between CC and MC computations
    CC: build outputs, constant time equivalence checking
    MC: build model, many fixed-points to verify the specs
    CC: BDD algorithms are polynomial
    MC: key BDD algorithms are exponential

Outline
  BDD Overview
  Organization of this Study
    participants, benchmarks, evaluation process
  Experimental Results
    performance improvements
    characterizations of MC computations
  BDD Evaluation Methodology
    evaluation platform
      » various BDD packages
      » real workload
    metrics

BDD Overview
  BDD
    DAG representation for Boolean functions
    fixed order on Boolean variables
  Set Representation
    represent set as Boolean function
      » an element’s value is true <==> it is in the set
  Transition Relation Representation
    set of pairs (current to next state transition)
    each state variable is split into two copies:
      » current state and next state

BDD Overview (Cont’d)
  BDD Algorithms
    dynamic programming
    sub-problems (operations)
      » recursively apply Shannon decomposition
    memoization: computed cache
  Garbage Collection
    recycle unreachable (dead) nodes
  Dynamic Variable Reordering
    BDD graph size depends on the variable order
    sifting based
      » nodes in adjacent levels are swapped

Organization of this Study: Participants
  Armin Biere: ABCD (Carnegie Mellon / Universität Karlsruhe)
  Olivier Coudert: TiGeR (Synopsys / Monterey Design Systems)
  Geert Janssen: EHV (Eindhoven University of Technology)
  Rajeev K. Ranjan: CAL (Synopsys)
  Fabio Somenzi: CUDD (University of Colorado)
  Bwolen Yang: PBF (Carnegie Mellon)

Organization of this Study: Setup
  Metrics: 17 statistics
  Benchmark: 16 SMV execution traces
    traces of BDD-calls from verification of
      » cache coherence, Tomasulo, phone, reactor, TCAS…
    size
      » 6 million - 10 billion sub-operations
      » 1 - 600 MB of memory
  Evaluation platform: trace driver
    “drives” BDD packages based on execution trace

Organization of this Study: Evaluation Process
  Phase 1: no dynamic variable reordering
  Phase 2: with dynamic variable reordering
  Iterative Process
    collect stats
    identify issues
    hypothesize
    suggest improvements
    design experiments
    validate

Phase 1 Results: Initial / Final
  [Scatter plot: initial time (sec) vs. final time (sec), log scales, with 1x, 10x, and 100x speedup lines]
  Number of cases by speedup
    > 100: 6
    10 - 100: 16
    5 - 10: 11
    2 - 5: 28
  Conclusion: collaborative efforts have led to significant performance improvements

Phase 1: Hypotheses / Experiments
  Computed Cache
    effects of computed cache size
    amounts of repeated sub-problems across time
  Garbage Collection
    reachable / unreachable
  Complement Edge Representation
    work
    space
  Memory Locality for Breadth-First Algorithms

Phase 1: Hypotheses / Experiments (Cont’d)
  For Comparison
    ISCAS85 combinational circuits (> 5 sec, < 1 GB)
      » c2670, c3540
      » 13-bit, 14-bit multipliers based on c6288
  Metrics
    depend only on the trace and BDD algorithms
    machine-independent
    implementation-independent

Computed Cache: Repeated Sub-problems Across Time
  Source of Speedup
    increase computed cache size
  Possible Cause
    many repeated sub-problems are far apart in time
  Validation
    study the number of repeated sub-problems across user-issued operations (top-level operations)

Hypothesis: Top-Level Sharing
  Hypothesis
    MC computations have a large number of repeated sub-problems across the top-level operations.
  Experiment
    measure the minimum number of operations with GC disabled and a complete cache.
    compare this with the same setup, but with the cache flushed between top-level operations.

Results on Top-Level Sharing
  [Bar chart: # of ops (flush / min), log scale, for Model Checking traces and ISCAS85 circuits]
  flush: cache flushed between top-level operations
  Conclusion: large cache is more important for MC

Garbage Collection: Rebirth Rate
  Source of Speedup
    reduce GC frequency
  Possible Cause
    many dead nodes become reachable again (rebirth)
      » GC is delayed until the number of dead nodes reaches a threshold
      » dead nodes are reborn when they are part of the result of new sub-problems

Hypothesis: Rebirth Rate
  Hypothesis
    MC computations have a very high rebirth rate.
  Experiment
    measure the number of deaths and the number of rebirths

Results on Rebirth Rate
  [Bar chart: rebirths / deaths (0 to 1) for Model Checking traces and ISCAS85 circuits]
  Conclusions
    delay garbage collection
    triggering GC should not be based only on # of dead nodes
    delay updating reference counts

BF BDD Construction
  On MC traces, breadth-first (BF) based BDD construction has no demonstrated advantage over traditional depth-first based techniques.
  Two packages (CAL and PBF) are BF based.

BF BDD Construction Overview
  Level-by-Level Access
    operations on the same level (variable) are processed together
    one queue per level
  Locality
    group nodes of the same level together in memory
  Good memory locality due to BF ==> # of ops processed per queue visit must be high

Average BF Locality
  [Bar chart: # of ops processed per queue visit for Model Checking traces and ISCAS85 circuits]
  Conclusion: MC traces generally have less BF locality

Average BF Locality / Work
  [Bar chart: avg. locality / # of ops (x 1e-6) for Model Checking traces and ISCAS85 circuits]
  Conclusion: For comparable BF locality, MC computations do much more work.
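
The top-level sharing experiment from the Phase 1 slides (count sub-operations with a complete computed cache, then again with the cache flushed between top-level operations) can be illustrated with a toy example. This is a minimal sketch, not code from any of the packages in the study: `TinyBDD`, `run_workload`, and the repeated-parity workload are hypothetical names and choices made for illustration, and the "fixed-point-like" repetition of top-level calls is an assumption about why MC traces share sub-problems.

```python
import operator


class TinyBDD:
    """Toy BDD manager: unique table + computed cache (no GC, no reordering)."""

    def __init__(self):
        self.nodes = [None, None]  # ids 0 and 1 are the FALSE / TRUE terminals
        self.unique = {}           # (var, low, high) -> node id
        self.cache = {}            # computed cache: (op, u, v) -> result id
        self.ops = 0               # sub-problems actually computed (cache misses)

    def mk(self, var, low, high):
        if low == high:            # both branches equal: the test is redundant
            return low
        key = (var, low, high)
        if key not in self.unique:
            self.nodes.append(key)
            self.unique[key] = len(self.nodes) - 1
        return self.unique[key]

    def var(self, i):
        return self.mk(i, 0, 1)

    def _cofactors(self, u, var):
        # Shannon cofactors of u with respect to var.
        if u > 1 and self.nodes[u][0] == var:
            return self.nodes[u][1], self.nodes[u][2]
        return u, u

    def apply(self, op, u, v):
        if u <= 1 and v <= 1:                      # both operands are terminals
            return int(op(bool(u), bool(v)))
        key = (op, u, v)
        if key in self.cache:                      # memoized sub-problem
            return self.cache[key]
        self.ops += 1
        top = min(self.nodes[w][0] for w in (u, v) if w > 1)
        u0, u1 = self._cofactors(u, top)
        v0, v1 = self._cofactors(v, top)
        result = self.mk(top, self.apply(op, u0, v0), self.apply(op, u1, v1))
        self.cache[key] = result
        return result


def run_workload(flush, n=8, rounds=3):
    """Issue the same top-level calls several times (assumed MC-like pattern)."""
    bdd = TinyBDD()
    xs = [bdd.var(i) for i in range(n)]
    results = []
    for _ in range(rounds):
        acc = xs[0]
        for x in xs[1:]:
            acc = bdd.apply(operator.xor, acc, x)  # one top-level operation
            if flush:
                bdd.cache.clear()                  # flush between top-level ops
        results.append(acc)
    return bdd.ops, results


min_ops, min_res = run_workload(flush=False)   # complete cache, no GC
flush_ops, flush_res = run_workload(flush=True)
print(f"# of ops (flush / min) = {flush_ops} / {min_ops}")
```

Both runs build the same BDDs; only the amount of work differs, which is the quantity the flush / min ratio on the results slide reports.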

Phase 1: Some Issues / Open Questions
  Memory Management
    space-time tradeoff
      » computed cache size / GC frequency
    resource awareness
      » available physical memory, memory limit, page fault rate
  Top-Level Sharing
    possibly the main cause for
      » strong cache dependency
      » high rebirth rate
    better understanding may lead to
      » better memory management
      » higher level algorithms to exploit the pattern

Phase 2: Dynamic Variable Reordering
  BDD Packages Used
    CAL, CUDD, EHV, TiGeR
    improvements from phase 1 incorporated

Why is Variable Reordering Hard to Study
  Time-space tradeoff
    how much time to spend to reduce graph sizes
  Chaotic behavior
    e.g., small changes to triggering / termination criteria can have significant performance impact
  Resource intensive
    reordering is expensive
    space of possible orderings is combinatorial
  Different variable order ==> different computation
    e.g., many “don’t-care space” optimization algorithms

Phase 2: Experiments
  Quality of Variable Order Generated
  Variable Grouping Heuristic
    keep strongly related variables adjacent
  Reorder Transition Relation
    BDDs for the transition relation are used repeatedly
  Effects of Initial Variable Order
    with and without variable reordering
  Only CUDD is used

Variable Grouping Heuristic: Group Current / Next Variables
  Current / Next State Variables
    for the transition relation, each state variable is split into two:
      » current state and next state
  Hypothesis
    Grouping the corresponding current- and next-state variables is a good heuristic.
  Experiment
    for both with and without grouping, measure
      » work (# of operations)
      » space (max # of live BDD nodes)
      » reorder cost (# of nodes swapped with their children)

Results on Grouping Current / Next Variables
  [Three bar charts over the MC traces: Work (# of ops), Space (max live nodes), Reorder Cost (nodes swapped)]
  All results are normalized against no variable grouping.
  Conclusion: grouping is generally effective

Effects of Initial Variable Order: Experimental Setup
  For each trace,
    find a good variable ordering O
    perturb O to generate new variable orderings
      » fraction of variables perturbed
      » distance moved
    measure the effects of these new orderings with and without dynamic variable reordering

Effects of Initial Variable Order: Perturbation Algorithm
  Perturbation Parameters (p, d)
    p: probability that a variable will be perturbed
    d: perturbation distance
  Properties
    on average, a fraction p of the variables is perturbed
    max distance moved is 2d
    (p = 1, d = infinity) ==> completely random variable order
  For each perturbation level (p, d)
    generate a number (sample size) of variable orders

Effects of Initial Variable Order: Parameters
  Parameter Values
    p: (0.1, 0.2, …, 1.0)
    d: (10, 20, …, 100, infinity)
    sample size: 10
  ==> for each trace,
    1100 orderings
    2200 runs (w/ and w/o dynamic reordering)

Effects of Initial Variable Order: Smallest Test Case
  Base Case (best ordering)
    time: 13 sec
    memory: 127 MB
  Resource Limits on Generated Orders
    time: 128x base case
    memory: 500 MB

Effects of Initial Variable Order: Result
  [Chart: # of unfinished cases (y-axis: # of cases) vs. time limit (>1x, >2x, >4x, >8x, >16x, >32x, >64x, >128x), for “no reorder” and “reorder”]
  At the 128x / 500 MB limit, “no reorder” finished 33%, “reorder” finished 90%.
  Conclusion: dynamic reordering is effective

Phase 2: Some Issues / Open Questions
  Computed Cache Flushing
    cost
  Effects of Initial Variable Order
    determine sample size
  Need New Better Experimental Design

BDD Evaluation Methodology
  Trace-Driven Evaluation Platform
    real workload (BDD-call traces)
    study various BDD packages
    focus on key (expensive) operations
  Evaluation Metrics
    more rigorous quantitative analysis

BDD Evaluation Methodology Metrics: Time
  [Diagram relating the following metrics]
    elapsed time (performance)
    page fault rate
    CPU time
    GC time
    BDD Alg time
    reordering time
    memory usage
    # of ops (work)
    # of GCs
    computed cache size
    # of reorderings
    # of node swaps (reorder cost)

BDD Evaluation Methodology Metrics: Space
  [Diagram relating the following metrics]
    memory usage
    computed cache size
    max # of BDD nodes
    # of GCs
    reordering time

Importance of Better Metrics
  Example: Memory Locality
  [Diagram relating the following metrics]
    elapsed time (performance)
    CPU time
    cache miss rate
    page fault rate
    TLB miss rate
    memory locality
    # of GCs
    # of ops (work)
    computed cache size
    # of reorderings
  without accounting for work, may lead to false conclusions

Summary
  Collaboration + Evaluation Methodology
    significant performance improvements
      » up to 2 orders of magnitude
    characterization of MC computation
      » computed cache size
      » garbage collection frequency
      » effects of complement edge
      » BF locality
      » reordering heuristic
          – current / next state variable grouping
      » effects of reordering the transition relation
      » effects of initial variable orderings
    other general results (not mentioned in this talk)
    issues and open questions for future research

Conclusions
  Rigorous quantitative analysis can lead to:
    dramatic performance improvements
    better understanding of computational characteristics
  Adopt the evaluation methodology by:
    building more benchmark traces
      » for IP issues, BDD-call traces are hard to understand
    using / improving the proposed metrics for future evaluation
  For data and BDD traces used in this study, http://www.cs.cmu.edu/~bwolen/fmcad98/

Phase 1: Hypotheses / Experiments
  Computed Cache
    effects of computed cache size
    amounts of repeated sub-problems across time
  Garbage Collection
    reachable / unreachable
  Complement Edge Representation
    work
    space
  Memory Locality for Breadth-First Algorithms

Phase 2: Experiments
  Quality of Variable Order Generated
  Variable Grouping Heuristic
    keep strongly related variables adjacent
  Reorder Transition Relation
    BDDs for the transition relation are used repeatedly
  Effects of Initial Variable Order
    for both with and without variable reordering
  Only CUDD is used

Benchmark “Sizes”
                               Work                 Space
             # of BDD Vars     Min Ops (millions)   Max Live Nodes (thousands)
  MC         86 - 600          6 - ~10000           53 - 26944
  ISCAS85    26 - 233          15 - 178             4363 - 9662
  Min Ops: minimum number of sub-problems/operations (no GC and complete cache)
  Max Live Nodes: maximum number of live BDD nodes

Phase 1: Before/After Cumulative Speedup Histogram
  [Histogram: # of cases per category (bad, failed, new, and cumulative speedups >0, >0.95, >1, >2, >5, >10, >100)]
  Cumulative speedups: >0: 76, >0.95: 76, >1: 75, >2: 61, >5: 33, >10: 22, >100: 6
  6 packages * 16 traces = 96 cases

Computed Cache Size Dependency
  Hypothesis
    The computed cache is more important for MC than for CC.
  Experiment
    Vary the cache size and measure its effects on work.
      » size as a percentage of BDD nodes
      » normalize the result to the minimum amount of work necessary; i.e., no GC and complete cache.

Effects of Computed Cache Size
  [Two plots, MC Traces and ISCAS85 Circuits: # of ops (log scale) vs. cache size (10%, 20%, 40%, 80%)]
  # of ops: normalized to the minimum number of operations
  cache size: % of BDD nodes
  Conclusion: large cache is important for MC

Death Rate
  [Bar chart: deaths / operations for Model Checking traces and ISCAS85 circuits]
  Conclusion: death rate for MC can be very high

Effects of Complement Edge: Work
  [Bar chart: # of ops, no C.E. / with C.E., for Model Checking traces and ISCAS85 circuits]
  Conclusion: complement edge only affects work for CC

Effects of Complement Edge: Space
  [Bar chart: sum of BDD sizes, no C.E. / with C.E., for Model Checking traces and ISCAS85 circuits]
  Conclusion: complement edge does not affect space
  Note: maximum # of live BDD nodes would be a better measure

Phase 2 Results: With / Without Reorder
  [Scatter plot: no reorder (sec) vs. with reorder (sec), log scales, with .01x, .1x, 1x, 10x, and 100x lines]
  4 packages * 16 traces = 64 cases

Variable Reordering: Effects of Reordering Transition Relation Only
  [Three bar charts over the MC traces: Work (# of ops), Space (max live nodes), Reorder Cost (nodes swapped)]
  All results are normalized against variable reordering w/ grouping.
  nodes swapped: number of nodes swapped with their children

Quality of the New Variable Order
  Experiment
    use the final variable ordering as the new initial order.
    compare the results using the new initial order with the results using the original variable order.

Results on Quality of the New Variable Order
  [Scatter plot: original order (sec) vs. new initial order (sec), log scales, with .01x, .1x, 1x, 10x, and 100x lines]
  Conclusion: the quality of the new variable orders is generally good.

No Reorder (> 4x or > 500 MB)
  [3-D bar chart: # of cases (0 to 10) by probability (0.1 to 1.0) and distance (10 to 100, inf)]

> 4x or > 500 MB
  [Two 3-D bar charts, Reorder and No Reorder: # of cases (0 to 10) by prob (0.1 to 1.0) and distance (10 to 90, inf)]
  Conclusions:
    For very low perturbation, reordering does not work well.
    Overall, very few cases get finished.

> 32x or > 500 MB
  [Two 3-D bar charts, Reorder and No Reorder: # of cases (0 to 10) by prob (0.1 to 1.0) and distance (10 to 90, inf)]
  Conclusion: variable reordering worked rather well

Memory Out (> 512 MB)
  [Two 3-D bar charts, Reorder and No Reorder: # of cases (0 to 10) by prob (0.1 to 1.0) and distance (10 to 90, inf)]
  Conclusion: memory intensive in the highly perturbed region.

Timed Out (> 128x)
  [Two 3-D bar charts, Reorder and No Reorder: # of cases (0 to 10) by prob (0.1 to 1.0) and distance (10 to 90, inf)]
  diagonal band from lower-left to upper-right

Issues and Open Questions: Cross Top-Level Sharing
  Why are there so many repeated sub-problems across top-level operations?
  Potential experiment
    identify how far apart these repetitions are

Issues and Open Questions: Inconsistent Cross-Platform Results
  For some BDD packages, results on machine A are 2x faster than on machine B
  For other BDD packages, the difference is not as significant
  Probable Cause
    memory hierarchy
  This may shed some light on the memory locality issues.
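
The perturbation parameters (p, d) used in the initial-variable-order experiment can be sketched as follows. The slides give only the parameters and their properties, so the mechanism here is an assumption chosen to match them: each variable gets its position as a weight, with probability p that weight is shifted by a random amount in [-d, d], and the new order is obtained by sorting on the weights. `perturb_order` and the constant names are hypothetical. The last lines reproduce the run-count arithmetic from the parameters slide.

```python
import math
import random


def perturb_order(order, p, d, rng):
    """Return a perturbed copy of `order` (assumed weight-and-sort scheme)."""
    n = len(order)
    weighted = []
    for pos, var in enumerate(order):
        weight = float(pos)
        if rng.random() < p:                  # variable is perturbed with probability p
            if math.isinf(d):
                weight = rng.uniform(0, n)    # d = infinity: anywhere in the order
            else:
                weight = pos + rng.uniform(-d, d)
        weighted.append((weight, pos, var))   # pos breaks ties deterministically
    weighted.sort()
    return [var for _, _, var in weighted]


# Parameter grid from the slides.
P_VALUES = [i / 10 for i in range(1, 11)]                # 0.1, 0.2, ..., 1.0
D_VALUES = [10 * i for i in range(1, 11)] + [math.inf]   # 10, 20, ..., 100, infinity
SAMPLE_SIZE = 10

orderings_per_trace = len(P_VALUES) * len(D_VALUES) * SAMPLE_SIZE  # 10 * 11 * 10 = 1100
runs_per_trace = 2 * orderings_per_trace                           # w/ and w/o reordering = 2200

rng = random.Random(0)
base_order = list(range(50))
example = perturb_order(base_order, p=0.5, d=10, rng=rng)
```

Under this scheme a perturbed variable shifts by at most d in weight while its neighbors can shift by up to d in the opposite direction, which is consistent with the stated maximum move of 2d; with p = 1 and d = infinity every weight is drawn uniformly, giving a completely random order.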