Robust Nonparametric Regression by Controlling Sparsity Gonzalo Mateos and Georgios B. Giannakis

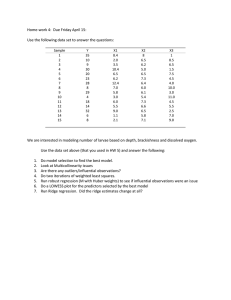

ECE Department, University of Minnesota
Acknowledgments: NSF grants no. CCF-0830480, 1016605; EECS-0824007, 1002180
May 24, 2011

Nonparametric regression
- Function estimation allows predicting the response at a new input
- Estimate the unknown function from a training data set
- If one trusts data more than any parametric model, then go nonparametric
- Nonparametric regression: the unknown function lives in a (possibly infinite-dimensional) space of "smooth" functions
- Ill-posed problem. Workaround: regularization [Tikhonov’77], [Wahba’90]
- The function space is an RKHS with a reproducing kernel and an associated norm
- Our focus: nonparametric regression robust against outliers; robustness by controlling sparsity

Our work in context
- Noteworthy applications
  - Load curve data cleansing [Chen et al’10]
  - Spline-based PSD cartography [Bazerque et al’09]
- Robust nonparametric regression
  - Huber’s function [Zhu et al’08]
  - No systematic way to select thresholds
- Robustness and sparsity in linear (parametric) regression
  - Huber’s M-type estimator as Lasso [Fuchs’99]; contamination model
  - Bayesian framework [Jin-Rao’10], [Mitra et al’10]; rigid choice of [symbol missing in source]

Variational LTS
- Least-trimmed squares (LTS) regression [Rousseeuw’87]
- Variational (V)LTS counterpart: (VLTS) [equation missing in source]
- The (VLTS) cost is built from the order statistics of the residuals; the largest residuals are discarded
- Q: How should we go about minimizing the (VLTS) cost? (VLTS) is nonconvex; existence of minimizer(s)?
- A: Try all subsamples of the retained size, solve, and pick the best. Simple, but intractable beyond small problems

Modeling outliers
- Outlier variables, one per training datum: nonzero if the datum is an outlier, zero otherwise
- Nominal data obey the regression model; outliers obey something else
- Remarks
  - Both the function and the outlier vector are unknown
  - If outliers are sporadic, then the outlier vector is sparse!
- Natural (but intractable) nonconvex estimator

VLTS as sparse regression
- Lagrangian form: (P0) [equation missing in source]
- The tuning parameter controls sparsity in the outlier vector, i.e., the number of outliers
- Proposition 1: If a solution of (P0) is obtained with the tuning parameter chosen such that the number of nonzero outlier variables equals the number of residuals discarded in (VLTS), then it solves (VLTS) too.
- The equivalence
  - Formally justifies the regression model and its estimator (P0)
  - Ties sparse regression with robust estimation

Just relax!
- (P0) is NP-hard; relax it to (P1) [equation missing in source]
- (P1) is convex, and thus efficiently solved
- The role of the sparsity-controlling parameter is central
- Q: Does (P1) yield robust estimates?
- A: Yes! The Huber estimator is a special case

Alternating minimization
- (P1) is jointly convex in the function and the outlier vector, which suggests an alternating-minimization (AM) solver
- Remarks
  - Requires a single Cholesky factorization
  - Outlier update by soft-thresholding
  - Reveals the intertwining between outlier identification and function estimation with outlier-compensated data

Lassoing outliers
- Alternative to AM
- Proposition 2: Minimizers of (P1) are fully determined by the outlier vector estimate, which solves a Lasso [Tibshirani’94]
- Enables effective methods to select the tuning parameters
- Lasso solvers return the entire robustification path (RP)
- Cross-validation (CV) fails with multiple outliers [Hampel’86]

Robustification paths
- LARS returns the whole RP [Efron’03], at the same cost as a single LS fit
- The Lasso path of solutions is piecewise linear
- Coordinate descent is fast! [Friedman’07]
  - Lasso is simple in the scalar case
  - Exploits warm starts, sparsity
- Other solvers: SpaRSA [Wright et al’09], SPAMS [Mairal et al’10]
- Leverage these solvers over a 2-D grid: a set of values of one tuning parameter and, for each of them, a set of values of the other
- [Figure: robustification path; axis label: Coeffs.]

Selecting the tuning parameters
- Relies on the RP and knowledge on the data model
- Number of outliers known: from the RP, obtain the range of the sparsity-controlling parameter that yields this number of outliers. Discard the (now known) outliers, and use CV to determine the remaining parameter
- Variance of the nominal noise known: from the RP, for each point on the grid, obtain an entry of the sample variance matrix. The best parameters are those whose entry best matches the known variance
- Variance of the nominal noise unknown: replace the variance above with a robust estimate, e.g., median absolute deviation (MAD)

Nonconvex regularization
- Nonconvex penalty terms approximate the ℓ0-(pseudo)norm in (P0) better
- Options: SCAD [Fan-Li’01], or sum-of-logs [Candes et al’08]
- Iterative linearization-minimization of the nonconvex penalty around the current iterate
- Remarks
  - Initialize with the Lasso solution, and use the tuning parameters already selected
  - Bias reduction (cf. adaptive Lasso [Zou’06])

Robust thin-plate splines
- Specialize to thin-plate splines [Duchon’77], [Wahba’80]
- The smoothing penalty is only a seminorm in the function space
- Solution: radial basis function expansion, augmented with a member of the nullspace of the penalty
- Given the outlier vector, the unknowns are found in closed form
- Still, Proposition 2 holds for appropriately defined Lasso data

Simulation setup
- Training set: noisy samples of a Gaussian mixture; examples are i.i.d.
- Nominal data: true function plus i.i.d. noise (known variance)
- Outliers: i.i.d. [distribution missing in source]
- [Figure panels: True function; Data]

Robustification paths
- Grid parameters: [values missing in source]
- Paths obtained using SpaRSA [Wright et al’09]
- [Figure: robustification paths; curves labeled Outlier and Inlier]

Results
- [Figure panels: True function; Nonrobust predictions; Robust predictions; Refined predictions]
- Effectiveness in rejecting outliers is apparent

Generalization capability
- In all cases, 100% outlier identification success rate
- Figures of merit: training error and test error
- Nonconvex refinement leads to consistently lower error

Load curve data cleansing
- Load curve: electric power consumption recorded periodically
- Reliable data: key to realizing the smart grid vision
- B-splines for load curve prediction and denoising [Chen et al’10]
- Deviation from nominal models (outliers)
  - Faulty meters, communication errors
  - Unscheduled maintenance, strikes, sporting events
- [Figure: Uruguay’s aggregate power consumption (MW)]

Real data tests
- [Figure panels: Nonrobust predictions; Robust predictions; Refined predictions]

Concluding summary
- Robust nonparametric regression
  - VLTS as ℓ0-(pseudo)norm regularized regression (NP-hard)
  - Convex relaxation: variational M-type estimator; Lasso
- Controlling sparsity amounts to controlling the number of outliers
  - The sparsity-controlling role of the tuning parameter is central
  - Selection of the tuning parameters using the Lasso robustification paths
  - Different options dictated by available knowledge on the data model
- Refinement via nonconvex penalty terms
  - Bias reduction and improved generalization capability
- Real data tests for load curve cleansing
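
The slide text refers to (VLTS), (P0) and (P1), but the displayed formulas did not survive extraction. One plausible reading, assuming the usual regularized least-squares setup the slides describe (squared residuals, an RKHS norm penalty, one outlier variable per training datum), is sketched below. The symbols $N$, $s$, $\mu$, $\lambda_0$, $\lambda_1$, $o_i$, $\varepsilon_i$ and $\mathcal{H}$ are notational assumptions, not taken from the source.

```latex
% Assumed notation: N training pairs (x_i, y_i), RKHS \mathcal{H},
% residuals r_i(f) = y_i - f(x_i), and s retained (non-trimmed) residuals.
\begin{align*}
\text{(model)}\quad & y_i = f(x_i) + o_i + \varepsilon_i, \qquad i = 1,\dots,N \\
\text{(VLTS)}\quad & \min_{f \in \mathcal{H}} \; \sum_{i=1}^{s} r^2_{[i]}(f) + \mu \|f\|_{\mathcal{H}}^2,
  \qquad r^2_{[1]}(f) \le \dots \le r^2_{[N]}(f) \\
\text{(P0)}\quad & \min_{f \in \mathcal{H},\, \mathbf{o}} \; \sum_{i=1}^{N} \bigl(y_i - f(x_i) - o_i\bigr)^2
  + \mu \|f\|_{\mathcal{H}}^2 + \lambda_0 \|\mathbf{o}\|_0 \\
\text{(P1)}\quad & \min_{f \in \mathcal{H},\, \mathbf{o}} \; \sum_{i=1}^{N} \bigl(y_i - f(x_i) - o_i\bigr)^2
  + \mu \|f\|_{\mathcal{H}}^2 + \lambda_1 \|\mathbf{o}\|_1
\end{align*}
```

Under this reading, the condition in Proposition 1 would amount to choosing $\lambda_0$ so that $\|\hat{\mathbf{o}}\|_0 = N - s$, i.e., as many nonzero outlier variables as trimmed residuals.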
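
The alternating-minimization slide mentions a single Cholesky factorization and soft-thresholding. A minimal sketch of such a solver follows, assuming (P1) is a squared loss on outlier-compensated data plus an RKHS norm penalty (weight `mu`) plus an ℓ1 penalty on the outlier vector (weight `lam1`), and that the function estimate is a kernel expansion with coefficients `c`. The Gaussian kernel, the function names and the parameter names are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def gaussian_kernel(X, Z, bandwidth):
    """Gaussian kernel matrix between rows of X and rows of Z (kernel choice is an assumption)."""
    d2 = ((X[:, None, :] - Z[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * bandwidth ** 2))


def soft_threshold(r, t):
    """Elementwise soft-thresholding operator with threshold t >= 0."""
    return np.sign(r) * np.maximum(np.abs(r) - t, 0.0)


def am_robust_fit(K, y, mu, lam1, n_iter=500):
    """Alternating minimization for the assumed form of (P1).

    Minimizes ||y - K c - o||^2 + mu * c^T K c + lam1 * ||o||_1 over (c, o),
    where K is the kernel matrix on the training inputs.
    """
    n = y.shape[0]
    # Factor K + mu*I once; the factor is reused in every iteration.
    L = np.linalg.cholesky(K + mu * np.eye(n))
    o = np.zeros(n)
    c = np.zeros(n)
    for _ in range(n_iter):
        # Function step: kernel ridge fit to the outlier-compensated data y - o.
        # (A dedicated triangular solver would exploit the structure of L.)
        c = np.linalg.solve(L.T, np.linalg.solve(L, y - o))
        # Outlier step: soft-threshold the residuals of the current fit.
        o = soft_threshold(y - K @ c, lam1 / 2.0)
    return c, o
```

With `K = gaussian_kernel(X, X, bandwidth)`, the fitted values on the training inputs are `K @ c`, and the nonzero entries of `o` flag the data identified as outliers. A very large `lam1` forces `o` to zero and recovers the nonrobust kernel ridge fit.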
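
The "Lassoing outliers" and "Robustification paths" slides describe solving a Lasso in the outlier vector by coordinate descent with warm starts. The sketch below illustrates the idea under the same assumed form of (P1): eliminating the kernel coefficients leaves the problem of minimizing `(y - o)^T G (y - o) + lam1 * ||o||_1` with `G = mu * inv(K + mu*I)`. This reduction, together with all function and parameter names, is an assumption made for illustration; the slides' own Lasso data did not survive extraction.

```python
import numpy as np


def soft_threshold(r, t):
    """Soft-thresholding operator with threshold t >= 0."""
    return np.sign(r) * np.maximum(np.abs(r) - t, 0.0)


def lasso_outliers_cd(G, y, lam1, o_init=None, n_sweeps=200):
    """Coordinate descent for min_o (y - o)^T G (y - o) + lam1 * ||o||_1.

    G is assumed symmetric positive definite. Each coordinate update is a
    scalar Lasso, solved in closed form by soft-thresholding.
    """
    n = y.shape[0]
    o = np.zeros(n) if o_init is None else np.array(o_init, dtype=float)
    g = G @ (y - o)  # running value of G (y - o)
    for _ in range(n_sweeps):
        for j in range(n):
            rho = g[j] + G[j, j] * o[j]  # correlation with coordinate j removed
            o_new = soft_threshold(rho, lam1 / 2.0) / G[j, j]
            if o_new != o[j]:
                g -= G[:, j] * (o_new - o[j])  # keep g consistent with the new o
                o[j] = o_new
    return o


def robustification_path(K, y, mu, lam_grid):
    """Outlier estimates along a decreasing grid of lam1 values, using warm starts."""
    n = y.shape[0]
    G = mu * np.linalg.inv(K + mu * np.eye(n))
    path = []
    o = np.zeros(n)
    for lam1 in sorted(lam_grid, reverse=True):
        o = lasso_outliers_cd(G, y, lam1, o_init=o)  # warm start from previous lam1
        path.append((lam1, o.copy()))
    return path
```

Sweeping the grid from large to small `lam1` lets each solve start from the previous solution, so entries of the outlier vector enter the path one after another; repeating the sweep for several values of `mu` gives the 2-D grid mentioned on the slide.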
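
For the case where the variance of the nominal noise is unknown, the slides suggest a robust estimate such as the median absolute deviation (MAD). A minimal sketch of a MAD-based scale estimate is given below; the helper name `mad_sigma` is hypothetical, and the factor 1.4826 is the usual constant that makes the MAD consistent with the standard deviation under Gaussian noise.

```python
import statistics


def mad_sigma(residuals):
    """Robust estimate of the noise standard deviation via the MAD.

    The scaling constant 1.4826 assumes Gaussian nominal noise.
    """
    med = statistics.median(residuals)
    mad = statistics.median(abs(r - med) for r in residuals)
    return 1.4826 * mad
```

Squaring the returned value gives a robust variance estimate that can stand in for the known variance when choosing a point on the robustification path.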