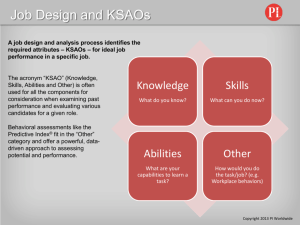

DEVELOPMENT OF A WRITTEN SELECTION EXAM FOR THREE ENTRY-
