Efficient Content Location Using Interest-based Locality in Peer-to-Peer Systems


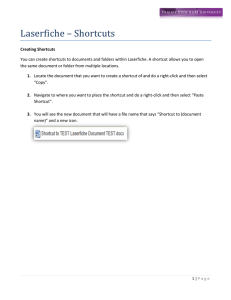

Presented by: Lin Wing Kai

Outline
- Background
- Design of Interest-based Locality
- Simulation of Interest-based Locality
- Enhancement of Interest-based Locality
- Understanding the scheme

Background: three types of P2P systems
- Centralized P2P: Napster
- Decentralized unstructured: Gnutella
- Decentralized structured: Distributed Hash Table (DHT)

Background: decentralized unstructured (Gnutella)
- Peers are connected to each other randomly, and searching is done by flooding.
- Keyword search is supported.
- Figure: searching for an mp3 file in a Gnutella network. The query is flooded across the network.

Background: DHT (Chord)
- Figure: a Chord ring with about 50 nodes. The black lines point to adjacent nodes, while the red lines are “finger” pointers that allow a node to find a key in O(log N) time.
- Given a key, Chord maps the key to a node.
- Each node needs to maintain O(log N) routing information.
- Each query uses O(log N) messages.
- Key search means searching by exact name, so keyword search is not supported directly.

Interest-based Locality
- Peers that have similar interests tend to share similar content.

Architecture
- Shortcuts are modular: they are layered on top of the underlying topology.
- Shortcuts are performance-enhancement hints.

Creation of shortcuts
- A peer uses the underlying topology (e.g. Gnutella) for its first few searches.
- One of the peers returned by a search is selected at random and added to the shortcut list.
- The shortcut list is ordered by a metric, e.g. success rate or path latency.
- Subsequent queries go through the shortcut list first.
- If that fails, the lookup falls back to the underlying topology.
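The shortcut creation and lookup steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the presenter's implementation: the class name `ShortcutPeer`, the callables `flood_search` and `has_item`, the cap `max_shortcuts=10`, and the use of a raw hit count as a stand-in for the success-rate metric are all hypothetical.

```python
import random


class ShortcutPeer:
    """One peer's shortcut list (illustrative sketch only)."""

    def __init__(self, flood_search, max_shortcuts=10, rng=None):
        # flood_search(item) -> list of peer ids holding the item.
        # It stands in for the underlying topology (e.g. Gnutella flooding).
        self.flood_search = flood_search
        self.max_shortcuts = max_shortcuts  # assumed cap, not from the slides
        self.rng = rng or random.Random()
        self.hits = {}  # peer id -> successful lookups served by that shortcut

    def ranked_shortcuts(self):
        # Order shortcuts by the metric; hit count is used here as a
        # simple proxy for success rate (an assumption).
        return sorted(self.hits, key=lambda p: self.hits[p], reverse=True)

    def lookup(self, item, has_item):
        # 1. Try the shortcuts first, best-ranked first.
        for peer in self.ranked_shortcuts():
            if has_item(peer, item):
                self.hits[peer] += 1
                return peer, "shortcut"
        # 2. Fall back to the underlying topology.
        providers = self.flood_search(item)
        if not providers:
            return None, "miss"
        # 3. Pick one returned peer at random and remember it as a shortcut.
        chosen = self.rng.choice(providers)
        self._add_shortcut(chosen)
        return chosen, "flood"

    def _add_shortcut(self, peer):
        if peer in self.hits:
            return
        if len(self.hits) >= self.max_shortcuts:
            # Evict the lowest-ranked shortcut to make room.
            worst = min(self.hits, key=self.hits.get)
            del self.hits[worst]
        self.hits[peer] = 0
```

A peer would call `lookup(item, has_item)` for every query. Only queries that no shortcut can answer reach the flooding layer, which is where the reduction in load and scope comes from.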
Performance Evaluation
Performance metrics:
- Success rate
- Load characteristics (the number of query packets each peer in the system processes)
- Query scope (the fraction of peers in the system involved in each query)
- Minimum reply path length
- Additional state kept at each node

Methodology: query workload
Traffic traces are created from real application traffic:
- Boeing firewall proxies
- Microsoft firewall proxies
- Web traffic between CMU and the Internet, collected passively
- Typical P2P traffic (KaZaA, Gnutella), collected passively

The simulation uses exact matching rather than keyword matching: “song.mp3” and “my artist – song.mp3” are treated as different items.

Methodology: underlying peer topology
- Based on the Gnutella connectivity graph from 2001, in which 95% of nodes are within about 7 hops of one another.
- The search TTL is set to 7.
- For each kind of traffic (Boeing, Microsoft, etc.), 8 simulations are run, each covering 1 hour.

Methodology: storage and replication modeling (web)
- The first peer to request a web page is modeled as the first node containing that page.
- Subsequent searches from other peers find the page at this peer and replicate it.
- Figure: node a is the first peer to search for a.html, so it is modeled as the first node containing a.html. Node b retrieves a.html from node a. Node c can then retrieve a.html from node a or node b.

Methodology: storage and replication modeling (P2P)
- Figure: timeline from tS to the simulation end (tE), with the file downloaded at t = t0.
- If the collected trace shows a file being downloaded at t0, the file must also have been available for download before t0.
- However, if a file is never downloaded during the sampled trace, there is no information to indicate that the file exists.
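The web storage and replication model described above (first requester holds the page, later requesters fetch and replicate it) can be sketched as a replay over a request trace. This is a minimal sketch under assumptions: the function name `replay_web_trace`, the `(peer, url)` trace format, and the `"local"` case for a peer re-requesting a page it already holds are hypothetical and not taken from the slides.

```python
def replay_web_trace(requests):
    """Replay (peer, url) requests in trace order.

    Returns (holders, events), where holders maps each url to the peers
    that store it, and each event is (peer, url, kind, sources).
    """
    holders = {}
    events = []
    for peer, url in requests:
        current = holders.setdefault(url, [])
        if not current:
            # First request for this page: model the requester as the
            # first node containing it.
            current.append(peer)
            events.append((peer, url, "origin", []))
        elif peer in current:
            # Assumption: a peer that already holds the page serves itself.
            events.append((peer, url, "local", []))
        else:
            # Later requesters search the existing holders, then replicate.
            sources = list(current)
            current.append(peer)
            events.append((peer, url, "search", sources))
    return holders, events
```

Replaying the trace `[("a", "a.html"), ("b", "a.html"), ("c", "a.html")]` reproduces the figure's scenario: node a becomes the origin, node b can fetch only from a, and node c can fetch from either a or b.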
Simulation Results: success rate
- [figure]

Simulation Results: load, scope and path length
- Query load for the Boeing and Microsoft traffic: [figure]
- Query scope for the shortcut scheme is about 0.3%, whereas in Gnutella it is about 100%.
- Average path length for the traces: [figure]

Increase Number of Shortcuts
- Diminishing returns: 7% to 12% performance gain.
- Variant 1: add all shortcuts at a time, with no limit on the shortcut list size.
- Variant 2: add k shortcuts at a time, with only 100 shortcuts used.

Using Shortcuts’ Shortcuts
- Idea: also add the shortcuts of a peer's shortcuts.
- Performance gain of 7% on average.

Interest-based Structures
When the shortcuts are viewed as an undirected graph:
- In the first 10 minutes, there are many connected components, each containing only a few peers.
- At the end of the simulation, there are few connected components, each containing several hundred peers.
- Each component is well connected. The clustering coefficient is about 0.6 to 0.7, which is higher than that of the Web graph.

Web Objects Locality
- A web page contains several web objects, so locality should exist among these objects.
- Performance drops by 10% when web objects are retrieved rather than whole web pages.
- The performance is regained when all the shortcuts are exhausted.

Locality Across Publishers
- Same-publisher shortcuts exhibit low interest locality; a peer may actually be interested in content from different publishers.
- A same-publisher shortcut is one that was originally created by accessing content from the same publisher as the current request.

Sensitivity of Shortcuts
- Interest-based shortcuts are run over a DHT (Chord) instead of Gnutella.
- Query load is reduced by a factor of 2 to 4.
- Query scope is reduced from 7/N to 1.5/N.

Conclusion
- Interest-based shortcuts are modular, performance-enhancing hints layered over an existing P2P topology.
- The simulations show that shortcuts improve search efficiency.
- Shortcuts form clusters within a P2P topology, and the clusters are well connected.
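As a supplement to the Interest-based Structures slide, the two graph measurements it quotes (connected components and clustering coefficient) can be computed as sketched below. The slides do not say which definition of the clustering coefficient was used; this sketch assumes the average local clustering coefficient and excludes nodes with fewer than two neighbours. The function names and the input format (a mapping from each peer to its shortcut list) are hypothetical.

```python
from itertools import combinations


def build_undirected(shortcuts):
    """Turn {peer: [shortcut peers]} into an undirected adjacency map."""
    adj = {}
    for peer, targets in shortcuts.items():
        adj.setdefault(peer, set())
        for target in targets:
            if target == peer:
                continue  # ignore self-loops
            adj[peer].add(target)
            adj.setdefault(target, set()).add(peer)
    return adj


def connected_components(adj):
    """Return the list of connected components, each as a set of peers."""
    seen, components = set(), []
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            component.add(node)
            for neighbour in adj[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
        components.append(component)
    return components


def average_clustering(adj):
    """Average local clustering coefficient over nodes with degree >= 2."""
    coefficients = []
    for node, neighbours in adj.items():
        k = len(neighbours)
        if k < 2:
            continue  # assumption: such nodes are excluded from the average
        # Count pairs of neighbours that are themselves connected.
        links = sum(1 for u, v in combinations(neighbours, 2) if v in adj[u])
        coefficients.append(2 * links / (k * (k - 1)))
    return sum(coefficients) / len(coefficients) if coefficients else 0.0
```

Taking snapshots of every peer's shortcut list at different simulation times and feeding them to these functions would give the component counts and clustering values of the kind the slide reports.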