Chapter 5: Computer System Organization

advertisement

Chapter 5: Computer System

Organization

Chapter 4 treated the very low level of hardware

We still not have the big picture of how a computer

really work

How can we abstract from gates dealing with binary

values (1 = high, 0 = low)?

What are the higher-level components of a

computer?

Compare with biology:

Chapter 4: cells, DNA …

Chapter 5: Heart, lung, veins …

1

Outline of Chapter 5

Abstraction

Von-Neumann Architecture

Memory

Input/Output

ALU

Control Unit

Historical Overview of Computers

2

Abstraction

Ignore details and concentrate on the basic building

blocks of a computer

Computer = collection of functional units or

subsystems

Tasks of any subsystem include:

Instruction processing

Information storage

Data transfer

Input/Output

Computer organization: branch of computer

science studying computers on this abstraction level

3

Abstraction

All of our subsystems are built using gates

But gates are now invisible

different levels of abstractions

Abstraction is essential in order to reduce complexity

Methodical approach for dealing with abstraction:

System S (e.g. computer) includes a high number of primitive

components S = {c1…..cN}

Grouping components based on their functionality produces a

system S = {A, B, C, …} with less components

The highest level of abstraction is to treat the system as a black

box

Hierarchy of abstractions

4

Abstraction

Already known (chapter 4) forms of abstractions

AND Gate = 2 Transistors in series

1-ADD Circuit = 25 Gates including NOTs, ANDs,

and Ors

Transistor: primitive component

Gate: next level of abstraction

Circuit: highest level abstraction (so far)

Hierarchy

5

Von-Neumann Architecture

Variety of computers:

PCs

Mainframes

Minicomputers

Laptops

However: All of them based on the same design principles

Differences are only in speed, capacity, in-/ and output and so

on

Compare: automotive technology: many makes but the same

principles

Principles of today’s computer systems are based on a

theoretical model of computer design called Von-Neumann

architecture (1946)

6

Characteristics of a VonNeumann Architecture

1. The computer consists of 4 major subsystems

Memory

Input-output

Arithmetic-logic unit (ALU)

Control unit

2. Stored program concept

1.1

1.2

1.3

1.4

Instructions to be executed and data are represented in

binary values and stored in memory

3. Sequential execution of instruction

Instructions are fetched one at a time from memory to the

control unit, where they are decoded and executed

7

Memory

The memory is the unit of a computer that stores data and

instructions and make them accessible (to the processor)

Binary numbering scheme is used for data and instructions

Random access memory (RAM)

Memory is divided into fixed-sized units called cells and each cell is

associated with a unique identifier called address, any a address is

an unsigned integer 0, 1, 2, …

Accesses to memory are always to a specified address, and the

complete cell must always be stored/retrieved. The cell is the

minimum unit of access

The time it takes to store/fetch contents of a cell is the same for all

cells in memory

Read-only memory (ROM) is also usually used in addition to RAM:

A ROM is a RAM for retrieving information (data and instructions)

only (no store operations are allowed)

8

Structure of a RAM

0

bit

1

cell

2

Addresses

2N-1

Memory width (W)

9

RAM

Cell size = memory width W

Standard: W = 8 Byte

Maximum value that can be stored in a byte is:

11111111 = 255

Too low greater number are stored in several bytes e.g. 2, 4, 8

bytes (16, 32, 64 bits)

Problem with higher numbers: more store/retrieve operations

are needed (byte-by-byte)

Addresses: if N bits available, maximum is 2N-1

Maximum memory size is: 2N, typical abbreviations in use:

1K = 210 (1024)

1M= 220 (1, 048, 576)

1G = 230 (1, 073, 741, 824)

10

Address vs content

There is a difference between address and the

content of that address

Example

Content = 1

Instructions may operate on

Address = 42

Addresses or

Contents

Example

Add 1 to Content: Address remains unchanged but value

becomes 2

11

Memory operations

Fetch(address)

Fetch a copy of the contents of the address and

return as a result the contents. The contents

remain unchanged.

Example: Fetch(42) would return 1

Store(address, value)

Store the specified value into the cell associated

with address. Previous contents are lost.

Example: Store(42, 344) now 1 is lost

Time for fetch/store: ca. 100 nsec

12

Implementation of fetch and

store

Register:

On-processor memory cells - typically few

bytes (e.g. 2, 4 or 8 bytes)

Fetch and store need two operands:

Fetch(address)

Address (held in MAR)

Value (held in MDR)

Load the address into MAR

Decode the address

Copy the contents of the memory location

into MDR

Memory address

register

MAR

Memory data

register

RAM

Memory

MDR

Store(address, value)

Load address into MAR

Load value into MDR

Decode address

Store the contents of MDR into the

memory location

13

Decoding addresses

Role of decoder is to select one memory location (among many)

given the corresponding address.

Decoder usage:

MAR

0

N-to-2N

Decoder

High number of output lines for decoder !

Example: N = 16 65,536 output lines

2N -1

14

Organization of memory with

Two 2-dimensional Decoders

MAR

left N/2 bits: rows

right N/2 bits: columns

Memory

Column

Decoder

Row

Decoder

MDR

Multiple of bytes

e.g. 1: store, 0: fetch

Store/Fetch

Controller

15

Input/Output and Mass

Storage

Examples:

Keyboard (input)

Screen (output)

Printer (input)

Disk (input/output)

Floppies (input/output)

Tape (input/output)

CD-Rom (input)

Modem (input/output)

Very slow compared with memory access

Memory: e.g. 300 nsec

Disk: e.g. 30 msec (= 100000*300 nsec)

16

Input/Output and Mass

Storage

Direct access storage (like disks)

Every cell has an address (addressable)

Unlike RAM access time is variable

Disk organization (hard disks, floppy)

R/W head

Track e.g.

10 sectors

Sector e.g.

512 bytes

Rotating

axis

17

Input/Output and Mass

Storage

Seek time: time to find the right track

Latency: right sector

E.g. Average: 2.5 msec

Sequential access storage devices (tapes)

E.g. Average: 12.5 msec

Transfer time: time to read/write sector

E.g. Average: 15 msec

No addresses

Content-based sequential access (like cassette audio tape)

Difference between memory/processor speed and I/O devices is

enormous

Controllers are used to do the slow work

18

Controllers for Input/Output

Controllers avoid having the processor being idle most of the time.

Input/Output operations (I/O operations)

Compare: You talk at normal speed 240 words/min to somebody who could only

respond back to you at the rate of 1 word/8 hours !!!

You will be most of the time waiting (idle)

1. Processor instructs the appropriate controller to do the (slow)

job of input/output

2. Processor continues instruction processing (possibly of other programs)

3. When I/O operation is done, controller interrupts the processor and signalizes

that the task has finished (e.g. data are ready to read)

Decoupling the two units (processor and controller) is done using a buffer

(small RAM memory) within the controller

Accessing the buffer is fast

The processor only needs to put/get data from the buffer and not from the I/O

device itself.

19

Structure of I/O Controllers

Processor

Memory

Data path

Control path (interrupts)

I/O

controller

I/O devices

buffer

buffer

Control

logic

Control

logic

e.g.

Screen

e.g.

Printer

20

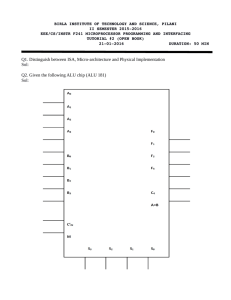

The Arithmetic Logic Unit (ALU)

Task of ALU

ALU is one part of the processor

Processor also includes control unit (later)

The ALU consists of:

Mathematical operations (+, -, *, …)

Logical operations (Equality, Inequality, AND, OR, …)

Registers

ALU Circuits

Interconnection (bus)

Registers

For holding operands and results of an operation

Example:

Prior to an addition the values to add are brought to two registers, then

the circuit for the adder is started (e.g. full-adder), and the result is

stored in a third register.

21

ALU

Registers behave like a very tiny on-processor RAM, but

without addresses

faster (10 times faster than memory)

used only for operands

Typically more than 10 registers are available

More registers means operations become faster

Example:

(a+b)*(c-d)

may involve:

Load register R0 with value a

Load register R1 with value b

Store result of a+b to R2

Load register R0 with value c

Load register R1 with value d

Store result of c-d to R3

Store result of R2*R3 to R0

22

ALU

Example: ALU with 16 registers

All registers can be used to hold either operands or results of operations

R0

R1

R15

left operand

ALU

circuitry

right operand

result

bus

23

ALU Circuitry

It implements the operations themselves

Examples:

a+b

a-b

a*b

a/b

a=b

a<b

a>b

a . B (AND)

Chapter 4 showed how to build these circuits based on gates

A decoder is to select the appropriate operation (see Chapter 4)

In order to get the correct result a multiplexer is needed

Suppose we only have 4 operation:

a+b

a-b

a.b

a/b

24

ALU Circuitry

R0

left operand

R1

Selector lines

R15

+

-

Multiplexer

right operand

/

.

result

ALU circuitry

25

Control Unit

Task of control unit

1. Fetch next instruction from memory

2. Decode it (activate the appropriate lines)

3. Execute it by issuing commands to the ALU, memory, or I/O

controller

Machine language instructions

Binary instructions (can be understood by the control unit)

Instructions have the following structure (here 2 addresses):

Op code

Binary value specifying the operation

E.g. 00001001 (decimal 9) for ADD, 00000001 (decimal 1) for COMPARE and so on

Addresses:

address2

Op code:

address1

Binary values specifying the addresses of the operands needed by the operation

E.g. 01100011 for address 99 (100th byte in memory)

Example:

00001001

ADD

01100011

99

01100100

100

26

Control Unit

Instruction Set: Set of executable instructions

Different processor makes have different instruction sets

E.g. Motorola 68040 cannot execute programs written for an Intel

Pentium (and vice versa), simply because their control units

understand different machine languages (native language of the

computer)

Two philosophies in use:

Reduced instruction set computers: RISC (e.g. Sun Sparc)

Less circuits (less operations)

More is done in software

Complex instruction set computers: CISC (e.g. Intel Pentium)

More circuits (more operations)

Less software

27

Control Unit

Types of instructions (R: register, X and Y: memory cells, means “copy”)

Data transfer

instruction

LOAD X

STORE X

MOVE X,Y

Arithmetic (here addition)

instruction

ADD X, Y, Z

ADD X, Y

ADD X

meaning

X R

RX

XY

meaning

X+YZ

X+YY

X+RR

Compare

Uses condition codes (bits within the processor), for example,GT-bit, EQ-bit, and LT-bit

Instruction

meaning

COMPARE X, Y

set condition bits accordingly

28

Control Unit

Example: COMPARE X, Y

Condition

Values of Condition Bits

GT

EQ

LT

X>Y

1

0

0

X<Y

0

0

1

X=Y

0

1

0

Branch instruction

Normally: the next instruction is fetched from the following address in

memory

Branch instruction allows for fetching any other instruction

JUMP X

next instruction is in cell X

JUMPGT X

if GT-bit is 1, next instruction is fetched from cell X

JUMPEQ, JUMPLT

similar

HALT

stop execution, don’t go to next instruction

29

Control Unit

Registers and circuits of the Control Unit

Program Counter (PC)

Instruction Register (IR)

Special register that always holds the address of the next instruction

To get that instruction from memory, the control unit copies the value

of PC to MAR and initiates a Fetch operation

Holds the instruction just fetched from memory (current instruction)

This includes the op-code and the operand addresses

Instruction decoder

What instruction is in the IR

Activate appropriate lines

30

Control Unit

Bus

PC

Op-code

[Address(es)]

IR

+1

Instruction decoder

Signals to memory,

ALU, I/O controllers,

and other units

31

The Whole Picture

Bus

ALU

Control

Unit

Memory

I/O Controller

Processor

(CPU: central processing unit)

Computer

I/O Devices (printers,

screens, keyboards, modems,

and so on)

32

History of Computers

The early age (up to 1940)

1642: Blaise Pascal

1694: Gottfried Leibnitz

Pascaline: mechanical calculator (using cogs and gears)

Addition and subtraction

Leibnitz’s Wheel

Addition, subtraction, multiplication, and division (mechanical)

both of them not computers (no memory, not programmable)

1822: Charles Babbage

Improved earlier work (still mechanical)

Difference Engine

6 significant digits of accuracy

Solved polynomial equations

Later (in 1830) he designed (without developing) the Analytic Engine (AE)

AE uses punched cards (that include the instructions)

AE design is very similar to a Von-Neumann computer:

Mill (ALU) Store (memory) operator (control unit) output (I/O)

AE is considered (by many) to be the first (design) of a computer

33

History of Computers

1890: Herman Hollerith

Enumeration in US would take 10-12 years

Based on punched cards, Hollerith designed programmable machines for sorting

and counting

First real machine based on Babbage design

Was very successful: built a company to sell his machines (later IBM)

Birth of computers (1940-1950)

Event: World War II (unfortunately)

1931: H. Aiken

Developed Mark I

Based on relays, magnets, and gears

First use of binary numbers in computers!

0 and 1 were represented using vacuum tubes and electric current

It is the first general purpose computer (Babbage dream)

Mark I was completed in 1944

Memory Capacity: 72 numbers

23-digit of accuracy

4 sec of “lightning” time

34

History of Computers

1943: J.P. Eckert and J. Mauchly

At almost the same time

ENIAC computer (Electronic Numerical Integrator And Calculator)

18,000 vacuum tubes, 100 feet long, 10 feet high, 30 tons of weight

Fully electronic: 10-digit number addition in about 1/5000th sec

Multiplication took about 1/300th sec

ABC system constructed by J. Atanasoff

Colossus built by A. Turing (England)

Z1 of Zuse (Germany) this was a full general purpose computer

1946: J. Von Neumann

He worked in the ENIAC project

After that: proposed a radically different computer design (stored program

concept)

Until then: program were developed manually using wires

Invention of programming (which replaced wiring of machines)

1950: His team built the EDVAC computer

EDSAC was built at the same time in England by M. Wilkes

Commercial model: UNIVAC I (first computer sold)

35

History of Computers

Modern Era: 1950-Present

Everything is still based on Von-Neumann ideas

Rather evolution and improvement took place but not a revolution

First Generation: 1950-1959

UNIVAC I

IBM 701

Both

Similar to EDVAC

Slow and expensive

Needed clean rooms and special personnel

Speed around 10000 instructions/sec

Second Generation: 1959-1965

Vacuum tubes were replaced by transistors and magnetic cores were used for

memory

This reduced size and increased reliability of computers

First high-level programming languages: Fortran and Cobol

Speed around 1 MIPS (Million Instructions Per Second)

36

History of Computers

Third Generation: 1965-1975

Integrated circuits (no more discrete transistors, resistors and so on, rather pieces of

silicon)

More reduction of size and cost

Desk-sized computers could be built: PDP11 of DEC in 1965

Birth of software industry (statistical packages, accounting software, etc.)

Computer were no longer a rarity by the mid-1970s

Speed around 10 MIPS

Fourth Generation: 1975-1985

First microcomputer

Desk-top machines (size of typewriter)

Software industry provided increasingly new packages (spreadsheets, databases, …)

First computer networks: Email systems

GUI: user-friendly systems with graphical interfaces

Embedded systems: computers in cars, microwave ovens, …

Speed 10-100 MIPS

37

History of Computers

Fifth Generation: since 1985

Change is constantly, only some aspects can be mentioned:

Parallel processors and supercomputers

Artificial intelligence and robotics

Laptops and notebooks

Virtual reality

Multimedia user interfaces

Integrated communication: TV, radio, Fax, Data

Speed 100-200 MIPS

Future:

More speed limiting factor is speed of light

More parallelism and scalability (Non-Von-Neumann machines)

38