Cognitive Diagnosis as Evidentiary Argument Robert J. Mislevy


Department of Measurement, Statistics, & Evaluation

University of Maryland, College Park, MD

October 21, 2004

Presented at the Fourth Spearman Conference, Philadelphia, PA, Oct. 21-23, 2004.

Thanks to Russell Almond, Charles Davis, Chun-Wei Huang, Sandip Sinharay, Linda Steinberg, Kikumi Tatsuoka, David Williamson, and Duanli Yan.

October 21, 2004

Inference & Culture

Slide 1

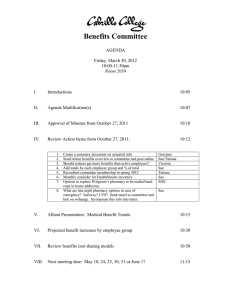

Introduction

An assessment is a particular kind of evidentiary argument.

Parsing a particular assessment in terms of the elements of an argument provides insights into more visible features such as tasks and statistical models.

We will look at cognitive diagnosis from this perspective.


Toulmin's (1958) structure for arguments

[Figure: Toulmin's argument diagram. D so C; since W; W on account of B; unless A; R supports A.]

Reasoning flows from data (D) to claim (C) by justification of a warrant (W), which in turn is supported by backing (B). The inference may need to be qualified by alternative explanations (A), which may have rebuttal evidence (R) to support them.
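One way to make the six elements concrete is a small record type. The Python sketch below is illustrative only: the class `ToulminArgument`, its field names, and the `summary` method are hypothetical, and the example argument paraphrases the behaviorist subtraction example used later in this talk.

```python
from dataclasses import dataclass, field


@dataclass
class ToulminArgument:
    """Hypothetical record holding the elements of Toulmin's (1958) structure."""

    claim: str                # C: what we want to conclude
    data: list[str]           # D: what we reason from
    warrant: str              # W: why D supports C
    backing: str = ""         # B: support for the warrant
    alternatives: list[str] = field(default_factory=list)  # A: "unless" explanations
    rebuttals: dict[str, list[str]] = field(default_factory=dict)  # R: evidence per alternative

    def summary(self) -> str:
        """Render the argument as a single 'D, so C, since W ...' sentence."""
        text = f"{'; '.join(self.data)}, so {self.claim}, since {self.warrant}"
        if self.backing:
            text += f", on account of {self.backing}"
        if self.alternatives:
            text += f", unless {' or '.join(self.alternatives)}"
        return text


arg = ToulminArgument(
    claim="Sue's probability of correctly answering a 2-digit subtraction problem with borrowing is p",
    data=["Sue's answers to n such items"],
    warrant="sampling theory links the true proportion correct to observed counts",
    alternatives=["observational errors", "distractions"],
)
print(arg.summary())
```

The rebuttal field is kept separate from the alternatives so that each alternative explanation can carry its own supporting evidence.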


Specialization to assessment

The role of psychological theory:

» Nature of claims & data

» Warrant connecting claims and data:

“If student were x, would probably do y”

The role of probability-based inference:

“Student does y; what is support for x’s?”

We will look first at assessment under a behavioral perspective, then see how cognitive diagnosis extends the ideas.


Behaviorist Perspective

The evaluation of the success of instruction and of the student’s learning becomes a matter of placing the student in a sample of situations in which the different learned behaviors may appropriately occur and noting the frequency and accuracy with which they do occur.

D.R. Krathwohl & D.A. Payne, 1971, pp. 17-18.


Behaviorist assessment argument (Toulmin structure):

D1j: Sue's answer to Item j, and D2j: structure and contents of Item j

so C: Sue's probability of correctly answering a 2-digit subtraction problem with borrowing is p,

since W: sampling-theory machinery for reasoning from the true proportion of correct responses in n targeted situations to observed counts,

unless A: [e.g., observational errors, data errors, misclassification of responses or performance situations, distractions, etc.]

The claim addresses the expected value of performance of the targeted kind in the targeted situations.

The student data address the salient features of the responses.

The task data address the salient features of the stimulus situations (i.e., tasks).

The warrant encompasses definitions of the class of stimulus situations, response classifications, and sampling theory.
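The warrant's sampling-theory machinery can be sketched with the binomial model: if Sue answers r of n targeted items correctly, the usual estimate of p is r/n, with standard error √(p̂(1 − p̂)/n). This is a minimal sketch assuming independent, exchangeable items; the Wilson score interval is a swapped-in choice rather than anything specified on the slide, and the counts are hypothetical.

```python
import math


def estimate_proportion(correct: int, n: int, z: float = 1.96):
    """Estimate a true proportion p from `correct` successes in `n` sampled tasks.

    Returns the sample proportion, its binomial standard error, and a Wilson
    score interval (approximately 95% coverage for z = 1.96).
    """
    if n <= 0 or not 0 <= correct <= n:
        raise ValueError("need n > 0 and 0 <= correct <= n")
    p_hat = correct / n
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    # Wilson score interval: better behaved than p_hat +/- z*se when n is small.
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return p_hat, se, (center - half, center + half)


# Hypothetical data: Sue answers 7 of 10 targeted items correctly.
p_hat, se, (lo, hi) = estimate_proportion(7, 10)
print(f"p_hat = {p_hat:.2f}, SE = {se:.3f}, interval = ({lo:.2f}, {hi:.2f})")
```

The claim concerns p, the expected performance over the targeted class of situations; the interval expresses how far the observed count could plausibly sit from it.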

Statistical Modeling of Assessment Data

Claims are expressed in terms of values of unobservable variables in the student model (SM) that characterize student knowledge.

Data are modeled as depending probabilistically on SM variables.

Estimate conditional distributions of data given SM variables.

Use Bayes theorem to infer SM variables given data.

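[Figure: student-model variable $\theta$ with prior $p(\theta)$; observables $X_1$, $X_2$, $X_3$ with conditional distributions $p(X_1 \mid \theta)$, $p(X_2 \mid \theta)$, $p(X_3 \mid \theta)$.]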
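A minimal sketch of the last step, Bayes theorem over a discrete student-model variable. The two-level θ, the conditional independence of X1 to X3 given θ, and all probabilities are assumptions for illustration.

```python
# Hypothetical student model: theta in {"low", "high"} with prior p(theta).
prior = {"low": 0.5, "high": 0.5}
# p(X_j = 1 | theta) for observables X1..X3 (illustrative values only).
p_correct = {
    "low": [0.3, 0.2, 0.4],
    "high": [0.8, 0.7, 0.9],
}


def posterior(x: list[int]) -> dict[str, float]:
    """Bayes theorem: p(theta | x) is proportional to p(theta) * prod_j p(x_j | theta)."""
    unnorm = {}
    for theta, p_theta in prior.items():
        like = 1.0
        for x_j, p_j in zip(x, p_correct[theta]):
            like *= p_j if x_j == 1 else 1 - p_j
        unnorm[theta] = p_theta * like
    total = sum(unnorm.values())
    return {theta: value / total for theta, value in unnorm.items()}


print(posterior([1, 1, 0]))
```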

Specialization to cognitive diagnosis

Information-processing perspective

foregrounded in cognitive diagnosis

Student model contains variables in

terms of, e.g.,

» Production rules at some grain-size

» Components / organization of knowledge

» Possibly strategy availability / usage

Importance of purpose


Responses consistent with the "subtract smaller from larger" bug:

821 - 285: response 664

885 - 221: response 664

63 - 15: response 52

17 - 9: response 12

“Buggy arithmetic”: Brown & Burton (1978); VanLehn (1990)
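The bug can be simulated column by column. This sketch is a hypothetical reconstruction of the bug as its name describes it, not Brown and Burton's production-rule implementation. Note that 885 - 221 needs no borrowing, so the bug and correct subtraction give the same answer there; such items cannot tell the two apart.

```python
def smaller_from_larger(top: int, bottom: int) -> int:
    """Simulate the "subtract smaller from larger" bug in column subtraction.

    In every column the smaller digit is subtracted from the larger one,
    whichever row it is in, so borrowing never happens. Assumes non-negative
    integers where `bottom` has no more digits than `top`.
    """
    top_digits = str(top)
    if len(str(bottom)) > len(top_digits):
        raise ValueError("bottom must not have more digits than top")
    bottom_digits = str(bottom).rjust(len(top_digits), "0")  # pad, e.g. 9 -> "09"
    columns = [abs(int(t) - int(b)) for t, b in zip(top_digits, bottom_digits)]
    return int("".join(str(d) for d in columns))


for top, bottom in [(821, 285), (885, 221), (63, 15), (17, 9)]:
    buggy = smaller_from_larger(top, bottom)
    print(f"{top} - {bottom}: bug gives {buggy}, correct answer is {top - bottom}")
```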


Some Illustrative Student Models in

Cognitive Diagnosis

Whole number subtraction:

» ~ 200 production rules (VanLehn, 1990)

» Can model at level of bugs (Brown & Burton) or at

the level of impasses (VanLehn)

John Anderson’s ITSs in algebra, LISP

» ~ 1000 production rules

» 1-10 in play at a given time

Reverse-engineered large-scale tests

» ~10-15 skills

Mixed number subtraction (Tatsuoka)

» ~5-15 production rules / skills


Mixed number subtraction

Based on example from Prof. Kikumi

Tatsuoka (1982).

» Cognitive analysis & task design

» Methods A & B

» Overlapping sets of skills under methods

Bayes nets described in Mislevy (1994):

» Five “skills” required under Method B.

» Conjunctive combination of skills

» DINA stochastic model


Skill 1: Basic fraction subtraction

Skill 2: Simplify/Reduce

Skill 3: Separate whole number from fraction

Skill 4: Borrow from whole number

Skill 5: Convert whole number to fractions


Cognitive-diagnosis argument (Toulmin structure):

For each class k = 1, ..., n of structurally similar items:

D1kj: Sue's answer to Item j, Class k, and D2kj: structure and contents of Item j, Class k

so Ck: Sue's probability of answering a Class k subtraction problem with borrowing is pk,

since Wk: sampling theory for items with the feature set defining Class k.

The class-level claims C1, ..., Cn

so C: Sue's configuration of production rules for operating in the domain (knowledge and skill) is K,

since W0: theory about how persons with configurations {K1, ..., Km} would be likely to respond to items with different salient features.

The class-level inferences are like behaviorist inference, at the level of behavior in classes of structurally similar tasks.

Structural patterns among the behaviorist claims are data for inferences about the unobservable production rules that govern behavior.

A typical (but not necessary) difference is that cognitive diagnosis has a many-to-many relationship between observable variables and student-model variables.

This distinguishes cognitive diagnosis from subscores: as partitions, subscores have 1-1 relationships between scores and inferential targets.

Structural and stochastic

aspects of inferential models

Structural model relates student-model variables ($\theta$s) to observable variables ($x$s)

» Conjunctive, disjunctive, mixture

» Complete vs incomplete (e.g., fusion model)

» The Q matrix (next slide)

Stochastic model addresses uncertainty

» Rule based; logical with noise

» Probability-based inference (discrete Bayes nets,

extended IRT models)

» Hybrid (e.g., Rule Space)


The Q-matrix (Fischer, Tatsuoka)

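| Features | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 |
|---|---|---|---|---|---|
| Feature 1 | 1 | 0 | 1 | 0 | 0 |
| Feature 2 | 1 | 1 | 0 | 0 | 0 |
| Feature 3 | 0 | 0 | 0 | 1 | 1 |
| Feature 4 | 0 | 0 | 1 | 1 | 1 |

$q_{jk}$ is the extent to which Feature $k$ pertains to Item $j$.

Special case: 0/1 entries and a 1-1 relationship between features and student-model variables.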


Conjunctive structural relationship

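Person $i$: $\boldsymbol{\theta}_i = (\theta_{i1}, \theta_{i2}, \ldots, \theta_{iK})$

» Each $\theta_{ik} = 1$ if the person possesses “skill” $k$, 0 if not.

Task $j$: $\mathbf{q}_j = (q_{j1}, q_{j2}, \ldots, q_{jK})$

» $q_{jk} = 1$ if item $j$ “requires skill $k$”, 0 if not.

$I_{ij} = 1$ if ($q_{jk} = 1 \Rightarrow \theta_{ik} = 1$) for all $k$;

$I_{ij} = 0$ if ($q_{jk} = 1$ but $\theta_{ik} = 0$) for any $k$.

Equivalently, $I_{ij} = \prod_{k=1}^{K} \theta_{ik}^{\,q_{jk}}$ (with $0^0 = 1$).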
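The conjunctive rule can be computed directly from the two 0/1 vectors. A minimal sketch; the function name and the K = 3 example are hypothetical.

```python
def conjunctive_indicator(theta: list[int], q: list[int]) -> int:
    """I_ij = 1 if the person has every skill the item requires, else 0.

    theta: 0/1 skill-possession vector for person i (length K).
    q:     0/1 skill-requirement vector for item j (length K).
    Equivalent to prod_k theta_k ** q_k, with 0 ** 0 == 1.
    """
    if len(theta) != len(q):
        raise ValueError("theta and q must have the same length K")
    return int(all(t == 1 for t, req in zip(theta, q) if req == 1))


# Hypothetical K = 3 example: the person has skills 1 and 3 but lacks skill 2.
theta_i = [1, 0, 1]
items = {"needs 1 & 3": [1, 0, 1], "needs 1 & 2": [1, 1, 0], "needs 3": [0, 0, 1]}
for name, q_j in items.items():
    print(name, conjunctive_indicator(theta_i, q_j))
```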


Conjunctive structural relationship:

No stochastic model

$\Pr(x_{ij} = 1 \mid \boldsymbol{\theta}_i, \mathbf{q}_j) = I_{ij}$

No uncertainty about $x$ given $\theta$.

There is uncertainty about $\theta$ given $x$, even with no stochastic part, due to competing explanations (Falmagne):

$x_{ij} \in \{0, 1\}$ just gives you a partition into all $\theta$s that cover $\mathbf{q}_j$ vs. those that miss it with respect to at least one skill.
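Enumerating all 2^K skill patterns shows how little a single response pins down when there are competing explanations. The sketch assumes the noiseless conjunctive rule described here and a hypothetical item requiring skills 1 and 2 out of K = 3.

```python
from itertools import product


def partition_by_item(q: list[int]):
    """Split all 2**K skill patterns by the response a noiseless conjunctive item predicts.

    Returns (covers, misses): patterns consistent with x_ij = 1, and patterns
    consistent with x_ij = 0 (missing at least one required skill).
    """
    covers, misses = [], []
    for theta in product((0, 1), repeat=len(q)):
        if all(t == 1 for t, req in zip(theta, q) if req == 1):
            covers.append(theta)
        else:
            misses.append(theta)
    return covers, misses


covers, misses = partition_by_item([1, 1, 0])
print(len(covers), len(misses))  # 2 6: six different patterns explain an error equally well
```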


Conjunctive structural relationship:

DINA stochastic model

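Now there is uncertainty about $x$ given $\theta$:

$\Pr(x_{ij} = 1 \mid I_{ij} = 0) = p_{j0}$ (false positive)

$\Pr(x_{ij} = 1 \mid I_{ij} = 1) = p_{j1}$ (true positive)

Likelihood over $n$ items:

$$p(\mathbf{x}_i \mid \boldsymbol{\theta}_i, \mathbf{q}) = \prod_{j=1}^{n} p_{j,I_{ij}}^{\,x_{ij}} \left(1 - p_{j,I_{ij}}\right)^{1 - x_{ij}}$$

Posterior:

$$p(\boldsymbol{\theta}_i \mid \mathbf{x}_i, \mathbf{q}) \propto p(\mathbf{x}_i \mid \boldsymbol{\theta}_i, \mathbf{q})\, p(\boldsymbol{\theta}_i)$$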
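The DINA likelihood and posterior can be computed by brute-force enumeration when K is small. In this sketch, `p0` and `p1` hold each item's false-positive rate p_j0 and true-positive rate p_j1; the uniform prior over patterns, the two-skill Q-matrix, and the rates 0.2 and 0.9 are assumptions for illustration.

```python
from itertools import product


def dina_posterior(x, Q, p0, p1, prior=None):
    """Posterior over all 2**K skill patterns under a DINA model.

    x:     observed 0/1 responses, one per item
    Q:     list of 0/1 q_j vectors (one row per item, one column per skill)
    p0:    p_j0 = Pr(x_j = 1 | I_ij = 0), false-positive rate per item
    p1:    p_j1 = Pr(x_j = 1 | I_ij = 1), true-positive rate per item
    prior: dict mapping pattern tuples to probabilities; uniform if None
    """
    K = len(Q[0])
    patterns = list(product((0, 1), repeat=K))
    if prior is None:
        prior = {theta: 1 / len(patterns) for theta in patterns}
    unnorm = {}
    for theta in patterns:
        like = 1.0
        for x_j, q_j, p_false, p_true in zip(x, Q, p0, p1):
            covered = all(t == 1 for t, req in zip(theta, q_j) if req == 1)  # I_ij
            p = p_true if covered else p_false  # p_{j, I_ij}
            like *= p if x_j == 1 else 1 - p
        unnorm[theta] = like * prior[theta]
    total = sum(unnorm.values())
    return {theta: v / total for theta, v in unnorm.items()}


# Hypothetical two-skill test: items need skill 1, skill 2, and both.
post = dina_posterior([1, 0, 0], Q=[[1, 0], [0, 1], [1, 1]], p0=[0.2] * 3, p1=[0.9] * 3)
for k in range(2):
    marginal = sum(v for theta, v in post.items() if theta[k] == 1)
    print(f"Pr(skill {k + 1} = 1 | x) = {marginal:.3f}")
```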

The particular challenge of

competing explanations

Triangulation

» Different combinations of data fail to support some alternative explanations of responses, and reinforce others.

» Why was an item requiring Skills 1 & 2 wrong?

– Missing Skill 1? Missing Skill 2? A slip?

– Try items requiring 1 & 3, 2 & 4, 1 & 2 again.

Degree to which the design supports inferences

» Test design as experimental design
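Triangulation can be illustrated by intersecting the sets of skill patterns consistent with each response. This sketch assumes a noiseless conjunctive model with four hypothetical skills, so it cannot represent the "slip" explanation; weighing that alternative requires a stochastic model such as DINA.

```python
from itertools import product

K = 4  # hypothetical skills 1..4


def consistent(responses):
    """Skill patterns consistent with every (required_skills, correct) pair,
    assuming a noiseless conjunctive model (no slips or guesses)."""
    return [
        theta
        for theta in product((0, 1), repeat=K)
        if all(
            all(theta[k - 1] == 1 for k in required) == correct
            for required, correct in responses
        )
    ]


evidence = [({1, 2}, False)]       # item requiring Skills 1 & 2 answered wrong
print(len(consistent(evidence)))   # 12 patterns remain
evidence.append(({1, 3}, True))    # item requiring Skills 1 & 3 answered right
print(consistent(evidence))        # [(1, 0, 1, 0), (1, 0, 1, 1)]: Skill 2 must be missing
```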


Bayes net for mixed number subtraction (Method B)

[Figure: Bayes net with skill nodes Basic fraction subtraction (Skill 1), Simplify/reduce (Skill 2), Separate whole number from fraction (Skill 3), Borrow from whole number (Skill 4), Convert whole number to fraction (Skill 5), and Mixed number skills; conjunctive nodes for Skills 1 & 2, Skills 1 & 3, Skills 1, 3, & 4, Skills 1, 2, 3, & 4, Skills 1, 3, 4, & 5, and Skills 1, 2, 3, 4, & 5; and item nodes, each attached to the skill combination it requires (e.g., Item 6, 6/7 - 4/7, and Item 8, 2/3 - 2/3, require only Skill 1).]

Structural aspects: The logical conjunctive relationships among skills, and which sets of skills an item requires. The latter is determined by the item's $\mathbf{q}_j$ vector.

Stochastic aspects, Part 1: Empirical relationships among skills in the population (red).

Stochastic aspects, Part 2: Measurement errors for each item (yellow).

Bayes net for mixed number subtraction

[Figure 10. Inference Network for Method B, Initial Status: probabilities before observations for the skill nodes (Skill1 to Skill5, MixedNumbers), the skill-combination nodes, and the item nodes.]

Note: Bars represent probabilities, summing to one for all the possible values of a variable.

Bayes net for mixed number subtraction

[Figure 11. Inference Network for Method B, After Observing Item Responses: probabilities after observations.]

Bars represent probabilities, summing to one for all the possible values of a variable. A shaded bar extending the full width of a node represents certainty, due to having observed the value of that variable.
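The before-and-after contrast between Figures 10 and 11 comes from propagating evidence through the network. A tiny enumeration sketch shows the mechanics, including how an empirical relationship among skills in the population lets evidence about one skill shift belief about another. The two skills (A and B), the item, and all probabilities are hypothetical; they are not the parameters of the Method B network.

```python
from itertools import product

# Hypothetical two-skill network (illustrative numbers, not fitted values).
P_A = 0.6                       # Pr(skill A = 1) in the population
P_B_GIVEN_A = {1: 0.7, 0: 0.2}  # Pr(skill B = 1 | skill A): the skills are related
P_RIGHT = {1: 0.9, 0: 0.15}     # Pr(correct | skill B), for an item requiring only B


def prior(a, b):
    """Joint population probability of the skill pattern (a, b)."""
    pa = P_A if a else 1 - P_A
    pb = P_B_GIVEN_A[a] if b else 1 - P_B_GIVEN_A[a]
    return pa * pb


def prob_skill_a(response=None):
    """Pr(skill A = 1) before observations (response=None) or after observing
    a response (1 = correct, 0 = wrong) to the item that requires only skill B."""
    num = den = 0.0
    for a, b in product((0, 1), repeat=2):
        w = prior(a, b)
        if response is not None:
            w *= P_RIGHT[b] if response == 1 else 1 - P_RIGHT[b]
        den += w
        num += w if a == 1 else 0.0
    return num / den


print(f"before: {prob_skill_a():.3f}")
print(f"after a correct answer: {prob_skill_a(1):.3f}")
print(f"after a wrong answer: {prob_skill_a(0):.3f}")
```

The item never mentions skill A, yet its response moves belief about A, because the population model ties A to B.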

Bayes net for mixed number subtraction

[Figure 12. Directed Acyclic Graph for Both Methods: a Method node; Skills 1 to 7; skill-combination nodes; and Items 4, 6-12, and 14-20. The Method node accommodates a mixture of strategies across people.]
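With a mixture of strategies across people, each student is assumed to use one method throughout, and the response pattern is evidence about which. A minimal sketch: for each method, average a noisy conjunctive likelihood over all skill patterns, then apply Bayes theorem to the method. The two Q-matrices, the equal method priors, the uniform skill prior, and the error rates are hypothetical.

```python
from itertools import product


def likelihood(x, Q, p0=0.2, p1=0.9):
    """Pr(x | Q): a conjunctive model with false-positive rate p0 and
    true-positive rate p1, averaged over equally likely skill patterns."""
    patterns = list(product((0, 1), repeat=len(Q[0])))
    total = 0.0
    for theta in patterns:
        like = 1.0
        for x_j, q_j in zip(x, Q):
            covered = all(t == 1 for t, req in zip(theta, q_j) if req == 1)
            p = p1 if covered else p0
            like *= p if x_j == 1 else 1 - p
        total += like / len(patterns)
    return total


# Hypothetical: the same three items require different skills under each method.
Q_BY_METHOD = {
    "A": [[1, 0, 0], [1, 1, 0], [1, 1, 1]],
    "B": [[1, 0, 0], [1, 0, 1], [1, 0, 1]],
}
PRIOR_METHOD = {"A": 0.5, "B": 0.5}


def method_posterior(x):
    """Pr(method | x) by Bayes theorem over the methods."""
    unnorm = {m: PRIOR_METHOD[m] * likelihood(x, Q) for m, Q in Q_BY_METHOD.items()}
    total = sum(unnorm.values())
    return {m: v / total for m, v in unnorm.items()}


print(method_posterior([1, 0, 1]))
```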

Extensions (1)

More general …

» Student models (continuous vars, uses)

» Observable variables (richer, times, multiple)

» Structural relationships (e.g., disjuncts)

» Stochastic relationships (e.g., NIDA, fusion)

» Model-tracing temporary structures (VanLehn)


Extensions (2)

Strategy use

» Single strategy (as discussed above)

» Mixture across people (Rost, Mislevy)

» Mixtures within people (Huang: MV Rasch)

Huang’s example of the last of these follows.


What are the forces at the instant of impact?

[Figure: car and truck; speeds shown: 20 mph and 20 mph]

A. The truck exerts the same amount of force on the

car as the car exerts on the truck.

B. The car exerts more force on the truck than the

truck exerts on the car.

C. The truck exerts more force on the car than the car

exerts on the truck.

D. There’s no force because they both stop.

What are the forces at the instant of impact?

[Figure: car and truck; speeds shown: 10 mph and 20 mph]

A. The truck exerts the same amount of force on the

car as the car exerts on the truck.

B. The car exerts more force on the truck than the

truck exerts on the car.

C. The truck exerts more force on the car than the car

exerts on the truck.

D. There’s no force because they both stop.

What are the forces at the instant of impact?

[Figure: truck and fly; speeds shown: 10 mph and 1 mph]

A. The truck exerts the same amount of force on the

fly as the fly exerts on the truck.

B. The fly exerts more force on the truck than the

truck exerts on the fly.

C. The truck exerts more force on the fly than the fly

exerts on the truck.

D. There’s no force because they both stop.

The Andersen/Rasch Multidimensional

Model for m strategy categories

$$P(X_{ij} = p) = \frac{\exp(\theta_{ip} + \beta_{jp})}{\sum_{q=1}^{m} \exp(\theta_{iq} + \beta_{jq})}$$

$p$ is an integer between 1 and $m$;

$X_{ij}$ is the strategy person $i$ uses for item $j$;

$\theta_{ip}$ is the $p$th element in person $i$’s vector-valued parameter;

$\beta_{jp}$ is the $p$th element in item $j$’s vector-valued parameter.
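The model assigns each of the m strategy categories a probability proportional to the exponential of a person term plus an item term. This sketch computes those probabilities as exp(θ_ip + β_jp) / Σ_q exp(θ_iq + β_jq); the additive form, the names `theta_i` and `beta_j`, and the parameter values are assumptions following a reconstruction of the slide's formula, not Huang's estimates.

```python
import math


def strategy_probabilities(theta_i: list[float], beta_j: list[float]) -> list[float]:
    """P(X_ij = p) = exp(theta_ip + beta_jp) / sum_q exp(theta_iq + beta_jq), p = 1..m.

    theta_i: person i's m-vector; beta_j: item j's m-vector (same length m).
    Subtracting the maximum before exponentiating avoids overflow without
    changing the result.
    """
    if len(theta_i) != len(beta_j):
        raise ValueError("theta_i and beta_j must both have length m")
    z = [t + b for t, b in zip(theta_i, beta_j)]
    z_max = max(z)
    e = [math.exp(v - z_max) for v in z]
    total = sum(e)
    return [v / total for v in e]


# Hypothetical m = 3 strategy categories (values illustrative only).
probs = strategy_probabilities([0.5, 0.0, -0.5], [1.0, 0.2, -1.0])
print([round(p, 3) for p in probs])
```

Because only differences between categories matter, adding the same constant to every element of θ_i (or of β_j) leaves the probabilities unchanged.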


Conclusion:

The Importance of Coordination…

Among psychological model, task design,

and analytic model

» (KWSK “assessment triangle”)

» Tatsuoka’s work is exemplary in this respect:

– Grounded in psychological analyses

– Grain size & character tuned to learning model

– Test design tuned to instructional options


Conclusion:

The Importance of Coordination…

With purpose, constraints, resources

» Lower expectations for retrofitting existing

tests designed for different purposes, under

different perspectives & warrants.

» Information & Communication Technology

(ICT) project at ETS

– Simulation-based tasks

– Large scale

– Forward design
