The Neuroscience of Language

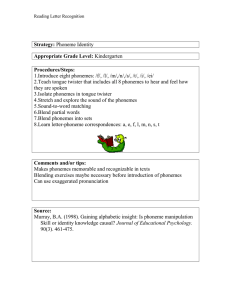

What is language? What is it for?
• Rapid, efficient communication
  – (As such, other kinds of communication might be called language for our purposes and might share underlying neural mechanisms)
• Two broad but interacting domains:
  – Comprehension
  – Production

Speech comprehension
• Is an auditory task
  – (but stay tuned for the McGurk Effect!)
• Is also a selective attention task
  – Auditory scene analysis
    • Which streams of sound constitute speech?
    • Which one stream constitutes the to-be-comprehended speech?
    • Not a trivial problem, because sound waves combine before reaching the ear
• Is a temporal task
  – Speech is a time-varying signal
  – It is meaningless to freeze a word in time (as you can with an image)
  – We need a way to represent both frequency (pitch) and time when talking about language -> the speech spectrogram

What forms the basis of spoken language?
• Phonemes = smallest perceptual unit of sound (e.g. the /b/ vs. /p/ that distinguishes "bat" from "pat")
• Phonemes strung together over time with prosody = the variation of pitch and loudness over the time scale of a whole sentence
To visualize these we need slick acoustic analysis software…which I've got
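The slides call for a representation of both frequency (pitch) and time, i.e. the speech spectrogram. Below is a minimal sketch of how one can be computed, assuming Python with NumPy: a short-time Fourier transform slides a Hann window along the waveform and takes the magnitude of the FFT of each frame. No speech recording is assumed to be available, so the input is a synthetic stand-in: a steady 300 Hz tone plus a tone gliding from 1000 to 2000 Hz, added sample by sample to mimic two sound sources combining before they reach the ear. The function name `spectrogram`, the 16 kHz sample rate, and the frame and hop sizes are illustrative assumptions, not settings from any particular analysis package.

```python
import numpy as np

def spectrogram(x, fs, frame_len=512, hop=128):
    """Magnitude spectrogram via a short-time Fourier transform (STFT).

    Hypothetical helper. Returns (freqs, times, S), where S[k, m] is the
    magnitude of frequency bin k in analysis frame m.
    """
    window = np.hanning(frame_len)                  # taper each frame to reduce spectral leakage
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[m * hop : m * hop + frame_len] * window
                       for m in range(n_frames)])
    S = np.abs(np.fft.rfft(frames, axis=1)).T       # shape: (frequency bins, frames)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)  # bin centre frequencies in Hz
    times = (np.arange(n_frames) * hop + frame_len / 2) / fs  # frame centres in s
    return freqs, times, S

fs = 16000                                          # assumed sample rate (Hz)
t = np.arange(int(0.5 * fs)) / fs                   # 0.5 s of samples
steady = np.sin(2 * np.pi * 300 * t)                # source 1: steady 300 Hz tone
glide_hz = 1000 + 1000 * t / t[-1]                  # source 2: frequency rises 1000 -> 2000 Hz
glide = np.sin(2 * np.pi * np.cumsum(glide_hz) / fs)
mixture = steady + glide                            # pressure waves simply add before reaching the ear

freqs, times, S = spectrogram(mixture, fs)

# Track each source via the strongest bin on either side of an arbitrary 600 Hz split.
low = freqs < 600
high = ~low
steady_track = freqs[low][S[low].argmax(axis=0)]
glide_track = freqs[high][S[high].argmax(axis=0)]
print(f"steady source peak: {steady_track[0]:.1f} Hz (bin spacing {freqs[1]:.2f} Hz)")
print(f"gliding source: {glide_track[0]:.0f} Hz at {times[0]:.3f} s, "
      f"{glide_track[-1]:.0f} Hz at {times[-1]:.3f} s")
```

Because the two synthetic sources occupy separate frequency bands, a fixed 600 Hz split is enough to pull them apart in this toy case; real speech streams overlap in both frequency and time, which is part of why auditory scene analysis is hard.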
Is speech comprehension therefore an image matching problem?
• The auditory system is inherently tonotopic
• If your brain could just match the picture on the basilar membrane with a lexical object in memory, speech would be comprehended

Problems facing the brain
• Lack of acoustic-phonetic invariance
  – Invariance would mean that each phoneme matches one and only one pattern in the spectrogram
  – This is not the case! For example, /d/ followed by different vowels (e.g. /di/ vs. /du/) produces different spectrogram patterns
• The segmentation problem
  – The stream of acoustic input is not physically segmented into discrete phonemes, words, phrases, etc.
  – Silent gaps don't always indicate (and aren't always perceived as) interruptions in speech
  – Conversely, a continuous speech stream is sometimes perceived as having gaps

How (where) does the brain solve these problems?
• Note the catch: the brain can't know that incoming sound is speech until it has already analyzed it, so all sound must first pass through general-purpose auditory processing
• The signal chain goes from non-specific -> specific
• Neuroimaging has to take the same approach to track down speech-specific regions

Functional Anatomy of Speech Comprehension
• The low-level auditory pathway is not specialized for speech sounds
• Both speech and non-speech sounds activate primary auditory cortex (bilateral Heschl's gyrus), on the upper surface of the superior temporal gyrus

Functional Anatomy of Speech Comprehension
• Which parts of the auditory pathway are specialized for speech?
• Binder et al. (2000)
  – fMRI
  – Presented several kinds of stimuli:
    • White noise and pure tones: non-word-like acoustical properties
    • Non-words and reversed words: word-like acoustical properties but no lexical associations
    • Real words: word-like acoustical properties and lexical associations

Functional Anatomy of Speech Comprehension
• Relative to "baseline" scanner noise
  – Widespread auditory cortex activation (bilaterally) for all stimuli
  – Why isn't this surprising?

Functional Anatomy of Speech Comprehension
• Statistical contrasts reveal specialization for speech-like sounds
  – Superior temporal gyrus
  – Somewhat more prominent on the left side

Functional Anatomy of Speech Comprehension
• Further, highly sensitive contrasts designed to identify specialization for words relative to other speech-like sounds revealed only a few small clusters of voxels
• Brodmann areas
  – Area 39 (angular gyrus)
  – 20, 21 and 37 (inferior and middle temporal gyri, fusiform/occipitotemporal cortex)
  – 46 and 10 (dorsolateral and anterior prefrontal cortex)

Next time we'll discuss
• Speech production
• Aphasia
• Lateralization
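The "statistical contrasts" in the Binder et al. (2000) slides can be illustrated with a general linear model (GLM) fit to a single simulated voxel. Below is a minimal sketch, assuming Python with NumPy: each of the five stimulus types gets an indicator regressor, the fitted betas are compared through contrast vectors, and a t statistic is computed for each contrast. The block design, effect sizes, and noise level are made up to mimic the qualitative pattern described on the slides (a strong speech-like vs. non-speech effect, a much weaker word-specific one); they are not data from the study. The helper `t_stat` is hypothetical, and a real fMRI analysis would also convolve the regressors with a hemodynamic response function and correct for multiple comparisons across voxels.

```python
import numpy as np

rng = np.random.default_rng(0)

conditions = ["white noise", "pure tones", "non-words", "reversed words", "real words"]
n_scans_per_block = 10
n_reps = 4
# Hypothetical block design: the five conditions cycle n_reps times.
order = np.tile(np.arange(len(conditions)), n_reps)
labels = np.repeat(order, n_scans_per_block)        # condition index for every scan

# Design matrix: one indicator regressor per condition (no HRF convolution here).
X = np.zeros((labels.size, len(conditions)))
X[np.arange(labels.size), labels] = 1.0

# Simulated voxel with made-up effect sizes: larger responses to speech-like sounds.
true_beta = np.array([1.0, 1.0, 2.0, 2.0, 2.2])
y = X @ true_beta + rng.normal(scale=0.5, size=labels.size)

# Ordinary least squares fit of y = X b + e
beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
resid = y - X @ beta
dof = labels.size - rank
sigma2 = resid @ resid / dof                        # residual variance estimate
XtX_inv = np.linalg.inv(X.T @ X)

def t_stat(c):
    """t statistic for the contrast c^T beta (hypothetical helper)."""
    c = np.asarray(c, dtype=float)
    return (c @ beta) / np.sqrt(sigma2 * c @ XtX_inv @ c)

# Speech-like (non-words, reversed words, real words) vs. non-speech-like (noise, tones)
speech_vs_nonspeech = [-1/2, -1/2, 1/3, 1/3, 1/3]
# Real words vs. the other speech-like sounds
words_vs_speechlike = [0, 0, -1/2, -1/2, 1]

print(f"speech-like > non-speech: t = {t_stat(speech_vs_nonspeech):.1f}")
print(f"words > other speech-like: t = {t_stat(words_vs_speechlike):.1f}")
```

Each contrast vector sums to zero, so it asks whether one group of conditions differs from another rather than whether the voxel responds at all; the latter is the "relative to baseline" comparison, which the slides note lights up auditory cortex for every stimulus type.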