Logic 3003 Assignment 1.

1.

Complete the following proofs. 10 points for a; 20 for b (10 each direction); 5 extra for c

(I know, it’s pretty long for just 5 points… but they’re BONUS points…); 5 for d (a very

simple point to make).

Total: 35 plus 5 bonus.

(a)

(A ∧ B) → (P ∨ Q), ¬P ∨ S, Q → R ⊢ (A ∧ B) → (S ∨ R)

1          (1)   (A ∧ B) → (P ∨ Q)        A
2          (2)   ¬P ∨ S                   A
3          (3)   Q → R                    A
4          (4)   A ∧ B                    A (for CP)
1,4        (5)   P ∨ Q                    1,4 MPP
6          (6)   P                        A (for ∨E)
7          (7)   ¬P                       A (for ∨E)
8          (8)   ¬S                       A (for RAA)
6,7        (9)   P ∧ ¬P                   6,7 ∧I
6,7        (10)  ¬¬S                      8,9 RAA
6,7        (11)  S                        10 DN
12         (12)  S                        A (for ∨E)
2,6        (13)  S                        2,7,11,12,12 ∨E
2,6        (14)  S ∨ R                    13 ∨I
15         (15)  Q                        A (for ∨E)
3,15       (16)  R                        3,15 MPP
3,15       (17)  S ∨ R                    16 ∨I
1,2,3,4    (18)  S ∨ R                    5,6,14,15,17 ∨E
1,2,3      (19)  (A ∧ B) → (S ∨ R)        4,18 CP

QED

(b)

¬(A ↔ B) ⊣⊢ ¬((A ∧ B) ∨ ¬(A ∨ B))

1          (1)   ¬(A ↔ B)                    A
2          (2)   (A ∧ B) ∨ ¬(A ∨ B)          A (for RAA)
3          (3)   A ∧ B                       A (for ∨E)
4          (4)   A                           A (for CP)
3          (5)   B                           3 ∧E
3          (6)   A → B                       4,5 CP
7          (7)   B                           A (for CP)
3          (8)   A                           3 ∧E
3          (9)   B → A                       7,8 CP
3          (10)  (A → B) ∧ (B → A)           6,9 ∧I
3          (11)  A ↔ B                       10 df↔
12         (12)  ¬(A ∨ B)                    A (for ∨E)
13         (13)  A                           A (for CP)
14         (14)  ¬B                          A (for RAA)
13         (15)  A ∨ B                       13 ∨I
12,13      (16)  (A ∨ B) ∧ ¬(A ∨ B)          12,15 ∧I
12,13      (17)  ¬¬B                         14,16 RAA
12,13      (18)  B                           17 DN
12         (19)  A → B                       13,18 CP
20         (20)  B                           A (for CP)
21         (21)  ¬A                          A (for RAA)
20         (22)  A ∨ B                       20 ∨I
12,20      (23)  (A ∨ B) ∧ ¬(A ∨ B)          12,22 ∧I
12,20      (24)  ¬¬A                         21,23 RAA
12,20      (25)  A                           24 DN
12         (26)  B → A                       20,25 CP
12         (27)  (A → B) ∧ (B → A)           19,26 ∧I
12         (28)  A ↔ B                       27 df↔
2          (29)  A ↔ B                       2,3,11,12,28 ∨E
1,2        (30)  (A ↔ B) ∧ ¬(A ↔ B)          1,29 ∧I
1          (31)  ¬((A ∧ B) ∨ ¬(A ∨ B))       2,30 RAA

QED

Note: The other direction is straightforward. Assume the RHS (right-hand side) above. Then assume the LHS without its negation, A ↔ B, for RAA. All you need to do next is extract the disjunction (A ∧ B) ∨ ¬(A ∨ B) from A ↔ B. Conjoin it with the first assumption to get a contradiction, and apply RAA.
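A proof establishes these sequents syntactically. As an independent sanity check, brute-force truth tables can confirm that the sequents are semantically valid. Below is a minimal Python sketch of that check. The helper names (`imp`, `iff`, `entails`) are our own. The sequents are read as (A ∧ B) → (P ∨ Q), ¬P ∨ S, Q → R ⊢ (A ∧ B) → (S ∨ R) and ¬(A ↔ B) ⊣⊢ ¬((A ∧ B) ∨ ¬(A ∨ B)). That reading is an assumption about the lost symbols; in particular, the conjunction in A ∧ B in (a) could be some other connective. The argument in (a) does not depend on which one it is, since A ∧ B appears only as an antecedent.

```python
from itertools import product

def imp(x, y):
    """Material conditional."""
    return (not x) or y

def iff(x, y):
    """Material biconditional."""
    return x == y

def entails(premises, conclusion, n_atoms):
    """True iff no valuation makes every premise true and the conclusion false."""
    for vals in product([True, False], repeat=n_atoms):
        if all(p(*vals) for p in premises) and not conclusion(*vals):
            return False
    return True

# (a), with the atoms in the order A, B, P, Q, R, S
a_premises = [
    lambda A, B, P, Q, R, S: imp(A and B, P or Q),
    lambda A, B, P, Q, R, S: (not P) or S,
    lambda A, B, P, Q, R, S: imp(Q, R),
]
a_conclusion = lambda A, B, P, Q, R, S: imp(A and B, S or R)
a_valid = entails(a_premises, a_conclusion, 6)

# (b), checked in both directions
b_lhs = lambda A, B: not iff(A, B)
b_rhs = lambda A, B: not ((A and B) or not (A or B))
b_valid = entails([b_lhs], b_rhs, 2) and entails([b_rhs], b_lhs, 2)

print(a_valid, b_valid)  # prints: True True
```

A truth-table check like this only confirms that a derivation exists in a sound and complete system. It does not replace the derivation the exercise asks for.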

(c)

(A ↔ B) ↔ C ⊣⊢ ((A ∧ B) ∧ C) ∨ (((A ∧ ¬(B ∨ C)) ∨ (B ∧ ¬(A ∨ C))) ∨ (C ∧ ¬(A ∨ B)))

As noted in email from last week, this is very long (one direction around 50 steps; the other more

than double that in my version). It is, therefore, NOT REQUIRED. But the strategy is worth thinking

through.
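Before planning the derivation, it may help to see semantically why the two sides match. Both are true exactly when an odd number of A, B, C are true: either all three are true, or exactly one is. The RHS lists those four cases as disjuncts. The following minimal Python sketch checks this by truth table. The function names are hypothetical, and the RHS is read with the connectives shown in the sequent above.

```python
from itertools import product

def iff(x, y):
    return x == y

def lhs(a, b, c):
    # (A ↔ B) ↔ C
    return iff(iff(a, b), c)

def rhs(a, b, c):
    # ((A ∧ B) ∧ C) ∨ (((A ∧ ¬(B ∨ C)) ∨ (B ∧ ¬(A ∨ C))) ∨ (C ∧ ¬(A ∨ B)))
    return ((a and b) and c) or (
        ((a and not (b or c)) or (b and not (a or c))) or (c and not (a or b))
    )

rows = list(product([True, False], repeat=3))
equivalent = all(lhs(*r) == rhs(*r) for r in rows)            # same truth table
odd_parity = all(lhs(*r) == (sum(r) % 2 == 1) for r in rows)  # true iff an odd number of atoms are true
print(equivalent, odd_parity)  # prints: True True
```

The parity reading also explains the shape of the strategy below. Each RAA rules out one of the even cases (two atoms true, or none).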

First, assume the LHS and try to prove the RHS. The LHS is a nested biconditional, so the first thing we need to do is unpack it into two conditionals. One, (A ↔ B) → C, has a biconditional antecedent; the other, C → (A ↔ B), has a biconditional consequent. (The unpacking of the nested biconditional A ↔ B happens later.) Next, assume the negation of the RHS for RAA. The RHS is a disjunction, so from its negation we can quickly derive the negation of each disjunct. Then assume C for RAA, to get things started. This gets us A ↔ B by MPP.

Assume A for RAA. This allows us to get B from our biconditional (after unpacking it to get A → B). So we now have A, B and C. Conjoin to get (A ∧ B) ∧ C, and then conjoin with the negation of this wff (derived above) to get a contradiction. So we have ¬A by RAA. Now we use B → A and MTT to get ¬B. So we have ¬A ∧ ¬B. Apply DeM to get ¬(A ∨ B). So we now have C ∧ ¬(A ∨ B), which gives us a contradiction again. So we have ¬C by RAA.

This, combined with (A ↔ B) → C from our premise, gives us ¬(A ↔ B) by MTT. A contradiction isn’t hard to find here. Assume A for RAA, then assume B for RAA. From A and B we get A → B and B → A using CP, giving us A ↔ B. Conjoin with ¬(A ↔ B) to get our contradiction, and conclude ¬B. So we now have A, ¬B and ¬C. Conjunction, DeM and a further conjunction give us A ∧ ¬(B ∨ C). We already have the negation of this, so we get our contradiction and conclude ¬A by RAA. Next, assume ¬B for RAA. After a little fooling around with RAA and CP, we get A → B and B → A. So we get A ↔ B, which again gives us a contradiction. So we conclude ¬¬B, and get B by DN. This time, conjoining and DeM give us B ∧ ¬(A ∨ C). But this contradicts ¬(B ∧ ¬(A ∨ C)), proved earlier. So our main RAA (which began when we assumed the negation of the RHS) is done. We get the negation of the negation of the RHS, and apply DN to get the RHS.

For the other direction, we of course begin by assuming the RHS. Since this is a disjunction, the obvious move is to use ∨E. So, beginning with the first disjunct:

Assume (A ∧ B) ∧ C for ∨E, and apply ∧E to get each atom. Assume C for CP. Then assume A for CP and write A → B by CP, drawing on the line where B was written. Assume B for CP, and write B → A by CP. Conjoin, apply df ↔, and apply CP to get C → (A ↔ B). The conditional (A ↔ B) → C is immediate, since C is already available: assume A ↔ B for CP and discharge it at the line where C was written. Conjoin and apply df ↔ to get (A ↔ B) ↔ C.

Now assume ((A ∧ ¬(B ∨ C)) ∨ (B ∧ ¬(A ∨ C))) ∨ (C ∧ ¬(A ∨ B)) for ∨E. Again we have a disjunction, so we assume its first disjunct, (A ∧ ¬(B ∨ C)) ∨ (B ∧ ¬(A ∨ C)), for ∨E. This is a disjunction too, so we assume its first disjunct for ∨E again. A ∧ ¬(B ∨ C) allows us to prove our nested biconditional again:

- Assume C for CP. Assume ¬(A ↔ B) for RAA. Extract ¬C from A ∧ ¬(B ∨ C) using DeM and ∧E, conjoin it with C to get our contradiction, and conclude ¬¬(A ↔ B). DN then gives A ↔ B, and CP gives C → (A ↔ B).
- Now assume A ↔ B. Assume ¬C for RAA. Get A from A ∧ ¬(B ∨ C). Apply df ↔, ∧E and MPP to get B. Extract ¬B from ¬(B ∨ C) using DeM and ∧E, conjoin to get the contradiction, and conclude ¬¬C, so C by DN. Apply CP to get (A ↔ B) → C.

Conjoin and use df ↔ to get (A ↔ B) ↔ C again. Keep doing this (with slight variations), one disjunct at a time, applying ∨E to get (A ↔ B) ↔ C at every stage. In the end, a final ∨E gives us the result.

(d)

Let A and B be any wffs. Prove that ⊢ A → B iff A ⊢ B, and that ⊢ A ↔ B iff A ⊣⊢ B.

Note for d: Here the key is to show how to transform each proof into the other, as required. The second is really just a simple extension of the first.

1. Suppose that we have a proof of the sequent ⊢ A → B. We can convert this into a proof of the sequent A ⊢ B simply by adding A as an assumption and using MPP to obtain B. The assumption list for our conclusion will include only the line where A was assumed. This is because, ex hypothesi, A → B is a theorem, so the line where A → B was written has no assumptions listed for it. So the extended proof constitutes a proof of the required sequent.

2. Suppose we have a proof of the sequent A ⊢ B. We can turn this into a proof of ⊢ A → B simply by adding a CP step. Ex hypothesi, the line where B is written lists no assumption other than A. A CP step removes the assumption of the antecedent from the assumptions listed next to B. So A → B is a theorem, as required.

The biconditional case is an obvious extension of this. Use ⊢ A → B to get A ⊢ B and vice versa, use ⊢ B → A to get B ⊢ A and vice versa, and use df ↔ to finish the job.
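The two transformations amount to simple bookkeeping on numbered lines and their assumption lists. Here is a toy Python sketch of that bookkeeping. The `Line` class and both function names are hypothetical. The sketch only manages line numbers, citations and assumption sets; it does not check that the proof it is given is correct.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Line:
    assumptions: frozenset  # numbers of the assumption lines this line rests on
    wff: str                # the formula, as plain text
    rule: str               # the justification, e.g. "A" or "2,3 MPP"

def theorem_to_sequent(proof, a, b):
    """Part 1: extend a proof of ⊢ a → b into a proof of a ⊢ b (assume a, then MPP)."""
    last = proof[-1]
    assert last.wff == f"{a} → {b}" and not last.assumptions, "last line must be the theorem a → b"
    n = len(proof)  # lines are numbered from 1, so the new lines are n+1 and n+2
    return proof + [
        Line(frozenset({n + 1}), a, "A"),
        Line(frozenset({n + 1}), b, f"{n},{n + 1} MPP"),  # {} ∪ {n+1}
    ]

def sequent_to_theorem(proof, a, b):
    """Part 2: extend a proof of a ⊢ b into a proof of ⊢ a → b (one CP step)."""
    last = proof[-1]
    k = next(i for i, ln in enumerate(proof, start=1) if ln.wff == a and ln.rule == "A")
    assert last.wff == b and last.assumptions <= {k}, "b may rest on the assumption a only"
    n = len(proof)
    return proof + [Line(last.assumptions - {k}, f"{a} → {b}", f"{k},{n} CP")]

# Hypothetical usage: the two-line proof of ⊢ P → P, extended into a proof of P ⊢ P
p_to_p = [Line(frozenset({1}), "P", "A"), Line(frozenset(), "P → P", "1,1 CP")]
for ln in theorem_to_sequent(p_to_p, "P", "P"):
    print(sorted(ln.assumptions), ln.wff, ln.rule)
```

The sketch assumes that A occurs as an assumption line in the proof of A ⊢ B, which is how such sequents are normally proved.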

2.

Translations: Translate the following sentences into L. 5 each; 4 for structure; 1 for the

basic (atomic) sentences. Total: 20 points

(a)

Once Fred has got the goods, only Alice will be able to stop him.

Let F:: Fred has got the goods. A:: Alice is able to stop Fred. S:: Someone else is able to stop Fred.

F → (A ∧ ¬S)

(b)

Alice won’t stop Fred unless neither of Ann and George steps in.

Let A:: Alice stops Fred. S:: Ann steps in. G:: George steps in

¬A ∨ ¬(S ∨ G)

(c)

Whether Fred gets the goods or gives up, Alice won’t bother him unless he tries

to use them.

Let F:: Fred gets the goods. G:: Fred gives up. A:: Alice bothers Fred. U:: Fred tries to use the goods.

¬A ∨ U (I also think ¬A ∨ (F ∧ U) is OK, since trying to use the goods probably goes along with, or requires, having got them.)

(d)

If George continues to play the fool, neither Alice nor Fred will have patience

for him, unless he’s awfully amusing.

Let G:: George continues to play the fool. A:: Alice has patience for George. F:: Fred has patience for George. H:: George is awfully amusing.

G → (¬(A ∨ F) ∨ H)

3.

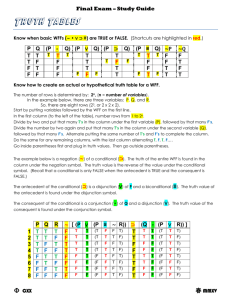

Uniform Substitutions: Identify which sentences in the lists are substitution instances of the

starting wff. (Be careful about the binding power rules!) 5 each; total 15.

The key here is to identify which wffs match the given wff at the ‘final’ levels of structure specified by the given wff. In (a), then, all we need to do is figure out whether → is the main connective. If it is, substituting the antecedent wff for P and the consequent wff for Q produces the wff. This shows that the wff is a substitution instance of ‘P → Q’.

(a)

P → Q: (i) PRS (ii) PRS (iii) (P Q) Q R (iv) (RS)

PQRS

i., iii. (iv is the toughest call. It fails because ↔, as the weakest-binding connective, ends up as the main connective, with the → buried one level deeper in the wff’s structure.)

(b)

¬R ∨ S: (i) ( R S) (Q P) (ii) A B C D (iii) (A B C)

(B C)

iii. The binding rules imply that the others aren’t disjunctions with a negated left-hand disjunct.
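Once the binding rules have been used to parse each wff into a tree, checking for a substitution instance is a recursive match. The main connectives must agree level by level, down to the letters of the given wff, and each letter must be matched to the same wff every time it occurs. The following Python sketch assumes the parsing has already been done (it is not shown). Wffs are nested tuples, and the function name and example wffs are hypothetical; the examples are not the assignment's items.

```python
def match(schema, wff, subst=None):
    """Return a substitution turning `schema` into `wff`, or None if wff is not an instance.

    Wffs are trees: a sentence letter is a str, a compound is (connective, *parts).
    """
    subst = {} if subst is None else subst
    if isinstance(schema, str):                 # a letter of the schema
        if schema in subst:                     # uniformity: same letter, same wff
            return subst if subst[schema] == wff else None
        return {**subst, schema: wff}
    if isinstance(wff, str) or wff[0] != schema[0] or len(wff) != len(schema):
        return None                             # main connectives differ
    for s_part, w_part in zip(schema[1:], wff[1:]):
        subst = match(s_part, w_part, subst)
        if subst is None:
            return None
    return subst

P_IMP_Q = ("→", "P", "Q")
NOT_R_OR_S = ("∨", ("¬", "R"), "S")

# Hypothetical examples, already parsed by the binding rules
print(match(P_IMP_Q, ("→", ("¬", "P"), ("∨", "R", "S"))))  # ¬P → R ∨ S: an instance
print(match(P_IMP_Q, ("↔", ("→", "P", "Q"), "R")))         # P → Q ↔ R: ↔ is main, so None
print(match(NOT_R_OR_S, ("¬", ("∨", "A", "B"))))           # ¬(A ∨ B): a negation, so None
```

The hard part of the exercise, applying the binding rules correctly, happens before this step. Once the tree is right, the match itself is mechanical.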

(c)

Prove that the rule TI(S) is a conservative extension of our base rules, i.e. that

anything we can prove with its help, we can prove using just the base rules.

All we need to do is perform the same uniform substitution on every line of the original proof of the theorem. The rules are unaffected by such substitutions. For MPP, for instance, if we already have something of the form A → B and something of the form A, we can write B by MPP. This holds whether A and B are atoms or wffs of any degree of complexity you like. The rules are formal in this very strong sense: they care only about the structure of the wffs they operate on, and ignore the details that a uniform substitution alters. The assumption lists and line citations are untouched as well, so the last line still rests on no assumptions. The result of such a uniform substitution is itself a proof of the required new theorem. (Here a uniform substitution replaces each sentence letter in the original proof with one and the same wff at every occurrence throughout the proof.)
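The argument can be made concrete with a tiny proof checker. The Python sketch below checks a proof line by line, applies one uniform substitution to every formula, and checks the result again. To keep it short, the checker is a hypothetical stand-in that knows only the rules A, MPP and CP, not the full set of base rules. Its line format, (assumptions, wff, rule, cited lines), is also our own invention. Note that the substitution touches only the formulas and leaves the assumption sets and citations alone.

```python
def substitute(wff, sigma):
    """Simultaneously replace each sentence letter by the wff sigma assigns it (if any)."""
    if isinstance(wff, str):
        return sigma.get(wff, wff)
    return (wff[0],) + tuple(substitute(part, sigma) for part in wff[1:])

def check(proof):
    """Check a proof using only the rules A, MPP and CP.

    Each line is (assumptions, wff, rule, cited), with line numbers starting at 1.
    """
    for n, (assum, wff, rule, cited) in enumerate(proof, start=1):
        if rule == "A":
            ok = assum == {n}
        elif rule == "MPP":                      # cited = (line of X → Y, line of X)
            (a1, w1, _, _), (a2, w2, _, _) = proof[cited[0] - 1], proof[cited[1] - 1]
            ok = w1 == ("→", w2, wff) and assum == a1 | a2
        elif rule == "CP":                       # cited = (assumption discharged, line derived)
            (_, w1, r1, _), (a2, w2, _, _) = proof[cited[0] - 1], proof[cited[1] - 1]
            ok = r1 == "A" and wff == ("→", w1, w2) and assum == a2 - {cited[0]}
        else:
            ok = False
        if not ok:
            return False
    return True

def substitute_proof(proof, sigma):
    """Apply one uniform substitution to every line; assumptions and citations stay put."""
    return [(assum, substitute(wff, sigma), rule, cited) for assum, wff, rule, cited in proof]

# A proof of ⊢ P → ((P → Q) → Q)
proof = [
    ({1}, "P", "A", ()),
    ({2}, ("→", "P", "Q"), "A", ()),
    ({1, 2}, "Q", "MPP", (2, 1)),
    ({1}, ("→", ("→", "P", "Q"), "Q"), "CP", (2, 3)),
    (set(), ("→", "P", ("→", ("→", "P", "Q"), "Q")), "CP", (1, 4)),
]
sigma = {"P": ("∧", "A", "B"), "Q": ("¬", "C")}   # hypothetical substitution
new_proof = substitute_proof(proof, sigma)
print(check(proof), check(new_proof))   # prints: True True
```

The design choice mirrors the argument. Every rule test compares formulas only by shape, so a substitution applied everywhere at once cannot turn a passing line into a failing one.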

Note for c: It’s trivial for TI, since we could always just interpose the proof to get the theorem

instead. But for TI(S) we need to show how that proof could be ‘transformed’ into a proof of the

substitution instance of the theorem.

4.

Structural Rules: 5 each; total 15.

(a)

Let A be a theorem. What structural rule requires that A follow from every

ensemble? Explain by appeal to the definition of a theorem.

Monotonicity. Monotonicity says that whatever we can prove from an ensemble, we can

prove from any larger ensemble including all the original ensemble’s members.

Since a theorem is (by definition) provable from the empty ensemble, monotonicity

requires that it be provable from any ensemble extending the empty ensemble—that is,

from any ensemble whatsoever.

(b)

Prove that our formal system (L plus the base rules) respects the structural rule

of transitivity.

Note for b: Recall that transitivity just says the following. If there is a proof of B from Γ, A (i.e. Γ together with A) and a proof of A from Γ, then there is a proof of B from Γ.

Trans: Γ, A ⊢ B,  Γ ⊢ A / Γ ⊢ B

Suppose we have a proof of Γ, A ⊢ B and a proof of Γ ⊢ A. Using CP, we can transform the proof of Γ, A ⊢ B into a proof of Γ ⊢ A → B. We now have a proof of Γ ⊢ A and a proof of Γ ⊢ A → B. Put these two together by tacking the second onto the end of the first and renumbering its lines, then apply MPP. The result is a proof of Γ ⊢ B.

(c)

What rule in our formal system ensures the structural rule of reflexivity holds?

Explain what the structural rule of reflexivity requires.

The rule of assumption. In general, reflexivity requires that any ensemble including a wff

A must prove A. The rule of assumption plus monotonicity ensures that this general form

of reflexivity holds for our system.

5.

Truth Functions: 16 points for a; 5 for b and c. Total: 26.

(a)

Show that the Sheffer stroke allows us to construct expressions for all binary

truth functions as well as for negation. (Use parentheses to help indicate how

groupings go.)

Note for a: See page 70 for the list of all binary truth functions. All you need is an expression using the stroke ‘|’ that takes the required values on each line. Note also that the expressions for P, Q, ¬P and ¬Q give us 4 of the 16 right off the bat.

Negation:

P    P|P
1    0
0    1

Binaries (values listed for the rows PQ = 11, 10, 01, 00, in that order):

Expression                                  Function        Values
P                                           P               1 1 0 0
Q                                           Q               1 0 1 0
P|P                                         ¬P              0 0 1 1
Q|Q                                         ¬Q              0 1 0 1
P|Q                                         ¬(P ∧ Q)        0 1 1 1
(P|Q) | (P|Q)                               P ∧ Q           1 0 0 0
(P|P) | (Q|Q)                               P ∨ Q           1 1 1 0
((P|P)|(Q|Q)) | ((P|P)|(Q|Q))               ¬(P ∨ Q)        0 0 0 1
P | (P|Q)                                   P → Q           1 0 1 1
Q | (P|Q)                                   Q → P           1 1 0 1
P | (P|P)                                   tautology       1 1 1 1
(P|(P|P)) | (P|(P|P))                       contradiction   0 0 0 0
(P|(P|P)) | (P|(P|Q))                       P ∧ ¬Q          0 1 0 0
(P|(P|P)) | (Q|(P|Q))                       ¬P ∧ Q          0 0 1 0
(P|Q) | ((P|P) | (Q|Q))                     P ↔ Q           1 0 0 1
((P|Q) | ((P|P) | (Q|Q))) | (P|(P|P))       ¬(P ↔ Q)        0 1 1 0
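Each entry in the table can be checked mechanically. The following Python sketch evaluates every stroke expression on the four rows and compares the result with the intended column. It also confirms that the 16 columns are all different, so every binary truth function is covered. The exclusive-or entry is checked in the form ((P|Q) | ((P|P) | (Q|Q))) | (P|(P|P)), i.e. the negation of the ↔ entry. The helper names `s`, `ROWS`, `EXPRESSIONS` and `column` are our own.

```python
def s(x, y):
    """The Sheffer stroke on 0/1 values: false only when both inputs are true."""
    return 1 - (x & y)

ROWS = [(1, 1), (1, 0), (0, 1), (0, 0)]   # (P, Q), in the order used in the table

# expression text -> (evaluator, expected column on ROWS)
EXPRESSIONS = {
    "P": (lambda p, q: p, (1, 1, 0, 0)),
    "Q": (lambda p, q: q, (1, 0, 1, 0)),
    "P|P": (lambda p, q: s(p, p), (0, 0, 1, 1)),
    "Q|Q": (lambda p, q: s(q, q), (0, 1, 0, 1)),
    "P|Q": (lambda p, q: s(p, q), (0, 1, 1, 1)),
    "(P|Q) | (P|Q)": (lambda p, q: s(s(p, q), s(p, q)), (1, 0, 0, 0)),
    "(P|P) | (Q|Q)": (lambda p, q: s(s(p, p), s(q, q)), (1, 1, 1, 0)),
    "((P|P)|(Q|Q)) | ((P|P)|(Q|Q))":
        (lambda p, q: s(s(s(p, p), s(q, q)), s(s(p, p), s(q, q))), (0, 0, 0, 1)),
    "P | (P|Q)": (lambda p, q: s(p, s(p, q)), (1, 0, 1, 1)),
    "Q | (P|Q)": (lambda p, q: s(q, s(p, q)), (1, 1, 0, 1)),
    "P | (P|P)": (lambda p, q: s(p, s(p, p)), (1, 1, 1, 1)),
    "(P|(P|P)) | (P|(P|P))": (lambda p, q: s(s(p, s(p, p)), s(p, s(p, p))), (0, 0, 0, 0)),
    "(P|(P|P)) | (P|(P|Q))": (lambda p, q: s(s(p, s(p, p)), s(p, s(p, q))), (0, 1, 0, 0)),
    "(P|(P|P)) | (Q|(P|Q))": (lambda p, q: s(s(p, s(p, p)), s(q, s(p, q))), (0, 0, 1, 0)),
    "(P|Q) | ((P|P) | (Q|Q))": (lambda p, q: s(s(p, q), s(s(p, p), s(q, q))), (1, 0, 0, 1)),
    "((P|Q) | ((P|P) | (Q|Q))) | (P|(P|P))":
        (lambda p, q: s(s(s(p, q), s(s(p, p), s(q, q))), s(p, s(p, p))), (0, 1, 1, 0)),
}

def column(f):
    """The truth-table column of f on ROWS."""
    return tuple(f(p, q) for p, q in ROWS)

mismatches = [text for text, (f, expected) in EXPRESSIONS.items() if column(f) != expected]
distinct = len({column(f) for f, _ in EXPRESSIONS.values()})
print(mismatches, distinct)   # prints: [] 16
```

The pattern X | (P|(P|P)), a stroke against a tautology, is a handy way to negate any expression X. Three of the entries use it.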

(b) Using the result of (a), show constructively that the Sheffer stroke is expressively complete, i.e. give a recipe for constructing an expression with an arbitrary truth table using just the Sheffer stroke. (Hint: begin with the familiar construction using ¬, ∧ and ∨, and translate it into Sheffer strokes.)

Our usual construction is a disjunction of conjunctions of literals, with each conjunction

corresponding to one of the lines where the wff in question takes the value ‘1’.

So first we need a Sheffer stroke version of the literals, which is easy:

1. For an atom P use P; for a negated atom ¬P use P|P.

Next we need to know how to conjoin collections of literals.

2. Our conjunction equivalent above is (P|Q) | (P|Q). So we apply this pattern one step at a time to the literals on the list. Conjoin the first with the second, then conjoin the result of that with the third, and so on. This is ugly, but straightforward.

3. Our disjunction equivalent is (P|P) | (Q|Q). So again, we take the results of step 2 and apply this pattern to them. Begin with the first pair, then apply the pattern to that result and the third, and so on. This is really ugly, but again straightforward. Since we have equivalence at every step, it’s evident that the result will give the same truth function as our original disjunction of conjunctions of literals.

(If the wff takes the value ‘0’ on every line, there are no conjunctions to disjoin. In that case we can simply use the contradiction (P|(P|P)) | (P|(P|P)) from (a).)
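The recipe is mechanical enough to run. The Python sketch below builds a stroke-only expression tree from an arbitrary truth table using steps 1 to 3, with the contradiction as a fallback for an all-‘0’ table. It then evaluates the tree to confirm that the truth table is reproduced. The function names and the tuple representation of expressions are hypothetical choices made for this sketch.

```python
from itertools import product

def stroke(a, b):
    return ("|", a, b)

def literal(atom, value):
    """Step 1: P for a true atom, P|P for a false (negated) one."""
    return atom if value else stroke(atom, atom)

def conj(a, b):
    """Step 2 pattern: (A|B) | (A|B)."""
    return stroke(stroke(a, b), stroke(a, b))

def disj(a, b):
    """Step 3 pattern: (A|A) | (B|B)."""
    return stroke(stroke(a, a), stroke(b, b))

def sheffer_dnf(atoms, table):
    """Build a stroke-only expression for `table`, a dict from 0/1 rows to 0/1 values."""
    terms = []
    for row in product([1, 0], repeat=len(atoms)):
        if table[row]:                           # one conjunction per line valued 1
            term = literal(atoms[0], row[0])
            for atom, value in zip(atoms[1:], row[1:]):
                term = conj(term, literal(atom, value))
            terms.append(term)
    if not terms:                                # no line valued 1: use a contradiction
        taut = stroke(atoms[0], stroke(atoms[0], atoms[0]))
        return stroke(taut, taut)
    expr = terms[0]
    for term in terms[1:]:
        expr = disj(expr, term)
    return expr

def evaluate(expr, valuation):
    """Compute the 0/1 value of a stroke-only expression under a valuation."""
    if isinstance(expr, str):
        return valuation[expr]
    _, a, b = expr
    return 1 - (evaluate(a, valuation) & evaluate(b, valuation))

def to_text(expr):
    """Write an expression tree with full parentheses."""
    if isinstance(expr, str):
        return expr
    return f"({to_text(expr[1])}|{to_text(expr[2])})"

def agrees(atoms, table, expr):
    return all(evaluate(expr, dict(zip(atoms, row))) == value for row, value in table.items())

# Usage: check the recipe on all 16 truth functions of P and Q, then print one result
atoms = ["P", "Q"]
rows = list(product([1, 0], repeat=2))
all_ok = all(
    agrees(atoms, table, sheffer_dnf(atoms, table))
    for table in (dict(zip(rows, outs)) for outs in product([0, 1], repeat=4))
)
print(all_ok)
xor = {(1, 1): 0, (1, 0): 1, (0, 1): 1, (0, 0): 0}
print(to_text(sheffer_dnf(atoms, xor)))
```

As the text warns, the output is ugly. Each application of the conjunction or disjunction pattern writes its inputs twice, so the expressions grow quickly with the number of atoms.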

(c) Let A be a wff containing any number of atoms but with ∨ as its only connective. Show that A must be contingent. (Hint: Try an induction!)

We need to think just a little about what such disjunctions do. Suppose at least one atom is true: then the disjunction will be true. Suppose all atoms are false: then the disjunction will be false. Can we prove this by an induction on the length of the wff?

Base: A pure wff (one with ∨ as its only connective) of length 1 is an atom. If that atom is true, the wff is true. If all the atoms in the wff (i.e. that one atom) are false, the wff is false.

Hyp of Ind: Assume this holds of all pure wffs of length less than k.

Ind step: Consider a pure wff A of length k > 1. A is of the form B ∨ C, where B and C are shorter than A. By the hypothesis of induction, B is false if all the atoms in B are false, and true if one or more atoms in B are true; likewise for C. A is true iff one of B or C is true. That holds iff at least one atom in either of those wffs is true, i.e. iff one or more of A’s atoms are true. Otherwise A is false, i.e. A is false iff all of A’s atoms are false. So A is contingent: it’s false on the last line of its truth table (where every atom is false), and true on every other line. QED
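The induction proves the claim for every pure wff. A brute-force check of small cases is a useful sanity test of the statement being proved. The Python sketch below generates every wff built with ∨ alone over three atoms, up to four atom occurrences. It then confirms that each one is true exactly when at least one of its atoms is true, which makes it contingent. Measuring size by atom occurrences is an assumption standing in for ‘length’; the induction works for any measure on which B and C are shorter than B ∨ C. The function names are hypothetical.

```python
from itertools import product

def disjunction_wffs(atoms, size):
    """All wffs built from `atoms` with ∨ alone, containing exactly `size` atom occurrences."""
    if size == 1:
        return list(atoms)
    wffs = []
    for left in range(1, size):
        for b in disjunction_wffs(atoms, left):
            for c in disjunction_wffs(atoms, size - left):
                wffs.append(("∨", b, c))
    return wffs

def value(wff, valuation):
    """Truth value of a pure-∨ wff under a valuation."""
    if isinstance(wff, str):
        return valuation[wff]
    return value(wff[1], valuation) or value(wff[2], valuation)

def atoms_of(wff):
    return {wff} if isinstance(wff, str) else atoms_of(wff[1]) | atoms_of(wff[2])

def behaves_as_claimed(wff):
    """True iff wff is true exactly when at least one of its atoms is true."""
    letters = sorted(atoms_of(wff))
    for vals in product([True, False], repeat=len(letters)):
        if value(wff, dict(zip(letters, vals))) != any(vals):
            return False
    return True

small = [w for n in range(1, 5) for w in disjunction_wffs("PQR", n)]
print(len(small), all(behaves_as_claimed(w) for w in small))
```

A wff with this behaviour is true when all its atoms are true and false when they are all false, so it is neither valid nor unsatisfiable.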

6.

Extra Credit: 5 bonus.

Show that any connective of any ‘arity’ is expressively complete if its first line

gives the value false, its last line gives the value true, and two other

enantiomorphic lines have the same value. (Enantiomorphic lines are mirror

images of each other; the first line is enantiomorphic to the last; the second to

the second to last, and so on.)

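The key here is that we quickly obtain ‘not’ from such a connective just by writing the same wff in every ‘slot’. Let * be our connective, so that * needs n arguments (n ≥ 2). Then ¬A = *(A, A, …, A), where A occurs n times in the list. When A is true we are on the first line, which gives false; when A is false we are on the last line, which gives true.

We obtain either ‘and’ or ‘or’ from the two other enantiomorphic lines. Pick one of them. Put the negation of one of the wffs being joined in every slot assigned ‘1’ on that line, and the negation of the other wff in every slot assigned ‘0’. There are four cases to check:
- If both wffs are true, every slot gets 0, so we are on the last line (value 1).
- If both wffs are false, every slot gets 1, so we are on the first line (value 0).
- The two mixed cases land on the chosen pair of mirror-image lines, which share a value.

So if that shared value is 0, we have a conjunction; if it is 1, we have a disjunction.

But we already know that {¬, ∧} and {¬, ∨} are expressively complete. QED.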
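The construction can be tested exhaustively for a small arity. The Python sketch below takes n = 3, which is an assumption made to keep the search small; the argument itself covers any n ≥ 2. It enumerates all 256 ternary truth tables and keeps the ones that meet the stated condition. For each of them, it checks two things: that *(A, A, A) acts as ¬A, and that the negated-input construction on a shared-value mirror pair yields ∧ or ∨ as claimed. All names are hypothetical.

```python
from itertools import product

N = 3                                      # arity used for the demonstration
LINES = list(product([1, 0], repeat=N))    # standard order: first line all 1s, last all 0s

def mirror(row):
    """The enantiomorphic (mirror-image) line."""
    return tuple(1 - x for x in row)

def qualifies(table):
    """First line false, last line true, and some other mirror pair shares a value."""
    if table[LINES[0]] != 0 or table[LINES[-1]] != 1:
        return False
    return any(table[row] == table[mirror(row)] for row in LINES[1:-1])

def construction_works(table):
    neg_ok = table[(1,) * N] == 0 and table[(0,) * N] == 1    # *(A,...,A) acts as ¬A
    s = next(row for row in LINES[1:-1] if table[row] == table[mirror(row)])
    v = table[s]

    def joined(x, y):
        # ¬x in every slot where s has 1, ¬y in every slot where s has 0
        return table[tuple(1 - x if bit else 1 - y for bit in s)]

    target = (lambda x, y: x & y) if v == 0 else (lambda x, y: x | y)
    bin_ok = all(joined(x, y) == target(x, y) for x in (0, 1) for y in (0, 1))
    return neg_ok and bin_ok

tables = [dict(zip(LINES, outs)) for outs in product([0, 1], repeat=len(LINES))]
candidates = [t for t in tables if qualifies(t)]
all_ok = all(construction_works(t) for t in candidates)
print(len(candidates), all_ok)
```

Checking one arity is not a proof, but it does confirm the case analysis, including the need to feed negated wffs into the slots.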

Maximum score: 111 plus 10 bonus.