Fault Tolerance Chapter 7

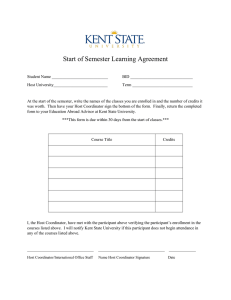

advertisement

Fault Tolerance

Chapter 7

Basic Concepts

• System:

A collection of components (incl. interconnections) that achieve a common task.

• Component:

A software or hardware component or a set thereof (a subsystem).

• Failure:

A deviation from the specified behavior of a component.

Examples:

1) The program has crashed.

2) The disk is becoming slower.

• Fault:

The cause of a failure.

Examples:

1) The program crashed because of a bug in the software.

2) The disk is slower because the R/W head is overheated.

Basic Concepts

• The difference between fault and failure is subtle.

• It helps to introduce the notion of an observation unit.

[Figure: a system made up of components 1 to 4, with observers 1 and 2 and the events F1 and F2]

• Observer 1 treats F1 as a failure and speaks of F2 as a fault (since it was the cause of F1). The unit of observation of observer 1 is the whole system.

• Observer 2, however, sees F2 as a failure and looks for the fault that caused it. The unit of observation of observer 2 is component 1.

• In other words: observer 1 sees the system as a black box, whereas observer 2 sees it as a glass box.

Types of Failure in a Distributed System

| Type of failure | Description |
|---|---|
| Crash failure | A server halts, but is working correctly until it halts |
| Omission failure | A server fails to respond to incoming requests |
| - Receive omission | A server fails to receive incoming messages (e.g. no listener) |
| - Send omission | A server fails to send messages (e.g. buffer overflow) |
| Timing failure | A server's response lies outside the specified time interval (e.g. too early, when no buffer is available, or too late) |
| Response failure | The server's response is incorrect |
| - Value failure | The value of the response is wrong (e.g. the server recognizes the request but delivers a wrong answer because of a bug) |
| - State transition failure | The server deviates from the correct flow of control (e.g. the server does not recognize the request and is not prepared for it, for example because exception handling is missing) |
| Arbitrary failure (Byzantine failure) | A server may produce arbitrary responses at arbitrary times |

Different types of failures.

Basic Concepts

• Failures may be:

- Permanent: they need recovery in order to be removed (e.g. an OS crash requires a reboot).

- Transient: they disappear after some (short) period without recovery (e.g. dust on a disk).

• Fault tolerance:

A system is said to be fault tolerant if it is able to perform its function even in the presence of failures.

Examples:

1) Two-processor system.

2) 747s have 4 engines but can fly with 3.

• Redundancy:

Fault tolerance can be achieved using redundancy.

Different types of redundancy:

- Information redundancy: additional information that helps detect and correct failures; e.g. CRC bits in packets.

- Time redundancy: repetition of operations in order to overcome (transient) failures; e.g. retry access to a disk, redo a transaction.

- Functional redundancy: additional functions (e.g. fault handling functions, monitors etc.) in order to detect or mask failures.

- Structural redundancy: additional units (e.g. 2 WWW servers, 2 processors etc.) in order to mask failures of any one of them.
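Time redundancy can be illustrated with a small retry helper. This is only a sketch: the names `with_retries` and `make_flaky_read` are made up for illustration, and the "disk" is a hypothetical function that fails a fixed number of times before succeeding, standing in for a transient failure.

```python
def with_retries(operation, attempts=3):
    """Call operation() up to `attempts` times (attempts >= 1).

    Returns the first successful result; re-raises the last error
    if every attempt fails.
    """
    last_error = None
    for _ in range(attempts):
        try:
            return operation()
        except IOError as err:  # treated here as a transient failure
            last_error = err
    raise last_error


def make_flaky_read(failures_before_success):
    """Hypothetical disk read that fails a fixed number of times, then succeeds."""
    state = {"remaining": failures_before_success}

    def read():
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise IOError("transient read error")
        return b"block contents"

    return read


data = with_retries(make_flaky_read(2), attempts=3)  # succeeds on the third try
```

Retrying only masks transient failures; a permanent failure exhausts the attempts and the error is still reported to the caller.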

Quantitative Measures for Dependability

• Dependability includes:

- Reliability
- Availability
- Robustness
- Trustworthiness
- Security (see next chapter)
- Safety
- Maintainability
- And perhaps more

• Reliability:

Informally: The ability of a system to perform its functions according to its specification during a specified (long) time interval. Thus, the continuity of functioning is the main issue.

Examples:

reliable: An automobile that needs a repair every two years.

unreliable: An automobile that needs a repair every month.

Quantitative Measures for Dependability

• Reliability:

Formally:

$L$: random variable for the lifetime of a component ($L \ge 0$).

$F(t) = p(L \le t)$ is the distribution function of $L$ (failure probability), with density $f(t) = dF(t)/dt$.

The reliability of the component is then:

$$R(t) = 1 - F(t) = p(L > t) \quad \text{(survivability)}$$

Characteristic measures for reliability:

- Mean Time To Failure (MTTF): $E[L] = \int_0^\infty R(t)\,dt$ ($E[L]$ is also the lifetime expectation)

- Failure rate: $\alpha(t)\,\Delta t = p(L \le t+\Delta t \mid L > t)$

Thus:

$$\alpha(t) = \frac{1}{\Delta t}\cdot\frac{p(t < L \le t+\Delta t)}{p(L > t)} = \frac{1}{\Delta t}\cdot\frac{F(t+\Delta t) - F(t)}{1 - F(t)} \;\to\; \frac{f(t)}{R(t)} \quad (\text{for } \Delta t \to 0)$$

Special case: $\alpha(t) = \alpha$ (constant)

Then:

$$\alpha = \frac{f(t)}{R(t)} \;\Rightarrow\; -\alpha = \frac{-f(t)}{1 - F(t)} \;\Rightarrow\; -\alpha t + c = \ln(1 - F(t)) \;\Rightarrow\; F(t) = 1 - e^{-\alpha t + c}$$

Since $F(0) = 0$ gives $c = 0$, $F(t) = 1 - e^{-\alpha t}$ is the exponential distribution!

Thus the failure rate is constant iff the lifetime is exponentially distributed.
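As a numerical sanity check of the MTTF formula, the integral of R(t) can be approximated for an exponentially distributed lifetime and compared with 1/rate. This is a rough sketch: the function names, the trapezoidal rule, and the truncation of the integral at a finite horizon are all choices made for this illustration.

```python
import math


def reliability(t, rate):
    """R(t) for an exponentially distributed lifetime with constant failure rate."""
    return math.exp(-rate * t)


def mttf_numeric(rate, horizon_factor=50.0, steps=200_000):
    """Approximate E[L] = integral of R(t) dt over [0, inf).

    Uses the trapezoidal rule on [0, horizon_factor / rate]; the tail
    beyond that point is negligible for the exponential case.
    """
    upper = horizon_factor / rate
    h = upper / steps
    total = 0.5 * (reliability(0.0, rate) + reliability(upper, rate))
    for k in range(1, steps):
        total += reliability(k * h, rate)
    return total * h


estimate = mttf_numeric(0.5)  # should be close to 1 / 0.5 = 2.0
```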

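Quantitative Measures for Dependability

• Reliability (continued):

- Residual lifetime expectation: $r(t) = E[L \mid L > t] - t$

Informally: After t units of time have elapsed, what is the expected remaining time until the next failure (in general until the next event)?

Theorem:

$$r(t) = \frac{1}{R(t)} \int_t^\infty R(x)\,dx$$

Proof:

$$E[L \mid L > t] = \int_t^\infty x\, f_{L \mid L > t}(x)\,dx$$

$$F_{L \mid L > t}(x) = p(L \le x \mid L > t) = \frac{p(t < L \le x)}{p(L > t)} = \frac{F(x) - F(t)}{1 - F(t)}$$

Thus

$$f_{L \mid L > t}(x) = \frac{d}{dx} F_{L \mid L > t}(x) = \frac{\frac{d}{dx} F(x)}{1 - F(t)} = \frac{f(x)}{1 - F(t)} = \frac{f(x)}{R(t)}$$

Hence, integrating by parts with $f(x) = -dR(x)/dx$ (and assuming $x\,R(x) \to 0$ for $x \to \infty$):

$$r(t) = E[L \mid L > t] - t = \int_t^\infty x\, f_{L \mid L > t}(x)\,dx - t = \int_t^\infty x\,\frac{f(x)}{R(t)}\,dx - t = \frac{\int_t^\infty R(x)\,dx}{R(t)}$$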
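As a worked example of the theorem $r(t) = \frac{1}{R(t)}\int_t^\infty R(x)\,dx$, consider the exponential case (the rate $\lambda$ is an assumption of this example). The residual lifetime does not depend on $t$, which is the memoryless property of the exponential distribution:

```latex
% Residual lifetime for an exponentially distributed lifetime (assumed example)
R(x) = e^{-\lambda x}
\quad\Longrightarrow\quad
r(t) = \frac{1}{R(t)} \int_t^\infty R(x)\,dx
     = \frac{1}{e^{-\lambda t}} \cdot \frac{e^{-\lambda t}}{\lambda}
     = \frac{1}{\lambda}
```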

Quantitative Measures for Dependability

• Availability

Informally: A component is available at time t if it is able to perform its function at time t.

A highly reliable system is always highly available (in the considered interval).

However, a highly available system may be poorly reliable.

Example (of a poorly reliable system that is highly available): Suppose you have a system that experiences one failure each hour. Each failure is repaired very quickly (e.g. after one second), and after that the system is available again. This system is clearly highly available, but the continuity of its service (i.e. its reliability) is very poor.

Formally:

To study the availability of a system, one has to consider, in addition to the failure rate $\alpha$, the (mean) repair rate $\beta$ of the system (Mean Time To Repair: MTTR = $1/\beta$).

Here we make the useful assumption that the system is always in one of two states: up or down.

We define the availability at time t as: $a(t) = p(\text{System is up at } t)$

The system switches from the up state to the down state with rate $\alpha$ (constant).

The system switches from the down state to the up state with rate $\beta$ (constant).

This results in the following model:

[Figure: two-state model; up → down with rate α, down → up with rate β]

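Quantitative Measures for Dependability

• Availability (continued)

[Figure: two-state model; state up (1) → state down (2) with failure rate α, state down (2) → state up (1) with repair rate β]

Our goal is to find an expression for $a(t) = p(\text{System is in state up at } t)$ ($= p_{up}(t)$).

Let $p_1(t) = p_{up}(t)$, $p_2(t) = p_{down}(t)$, and let $p_{12}(\Delta t)$ and $p_{21}(\Delta t)$ be the transition probabilities from 1 to 2 and vice versa. Clearly: $p_1(t) + p_2(t) = 1$ (since the system is in either state at any time). [1]

Also: $\alpha = p_{12}(\Delta t)/\Delta t$ and $\beta = p_{21}(\Delta t)/\Delta t$ (for $\Delta t \to 0$)

Consider state 1: $p_1(t+\Delta t) = p_1(t)\,p_{11}(\Delta t) + p_2(t)\,p_{21}(\Delta t)$ (*)

In words: To be in 1 at $t+\Delta t$ means that either the system was in 1 already and made a transition to 1 again, or it was in 2 and made a transition to 1.

Since $p_{11}(\Delta t) + p_{12}(\Delta t) = 1$ (the system must make a transition), (*) results in:

$$p_1(t+\Delta t) = p_1(t)\,(1 - p_{12}(\Delta t)) + p_2(t)\,p_{21}(\Delta t)$$

$$p_1(t+\Delta t) - p_1(t) = -p_1(t)\,p_{12}(\Delta t) + p_2(t)\,p_{21}(\Delta t) \quad (\text{divide by } \Delta t \text{ and let } \Delta t \to 0)$$

$$\frac{dp_1(t)}{dt} = -\alpha\,p_1(t) + \beta\,p_2(t) \qquad [2]$$

Consider state 2: By symmetry we get: $dp_2(t)/dt = -\beta\,p_2(t) + \alpha\,p_1(t)$ [3]

With [1] and [2] we get: $dp_1(t)/dt + (\alpha+\beta)\,p_1(t) = \beta$, which has the solution:

$$p_1(t) = \frac{\beta}{\alpha+\beta} + c\,e^{-(\alpha+\beta)t}$$

Suppose the system starts in the up state, i.e. $p_1(0) = 1$. Then $c = \alpha/(\alpha+\beta)$, hence

$$p_1(t) = \frac{\beta}{\alpha+\beta} + \frac{\alpha}{\alpha+\beta}\,e^{-(\alpha+\beta)t}$$

For $t \to \infty$, $p_1(t)$ tends to $\beta/(\alpha+\beta)$, hence the availability is $a(t) = \beta/(\alpha+\beta) = \text{MTTF}/(\text{MTTF}+\text{MTTR})$, using MTTF = $1/\alpha$ and MTTR = $1/\beta$.

Or in other words: availability = expected uptime/(expected uptime + expected downtime)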
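The result can be tried out on the earlier informal example (one failure per hour, repair after one second). The sketch below simply evaluates the closed-form solution for p1(t) and its limit; the function and parameter names are chosen for this illustration, and time is measured in seconds.

```python
import math


def availability(t, failure_rate, repair_rate):
    """p1(t) for a system that starts in the up state at t = 0."""
    total = failure_rate + repair_rate
    return repair_rate / total + (failure_rate / total) * math.exp(-total * t)


def steady_state_availability(mttf, mttr):
    """Limit of p1(t) for t -> infinity: MTTF / (MTTF + MTTR)."""
    return mttf / (mttf + mttr)


# One failure per hour (MTTF = 3600 s), repaired after one second (MTTR = 1 s).
a_inf = steady_state_availability(3600.0, 1.0)   # 3600/3601, just under 1
a_now = availability(0.0, 1 / 3600.0, 1.0)       # system starts in the up state
```

The steady-state value is very close to 1 even though the system fails every hour, which is exactly the "highly available but poorly reliable" situation.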

Failure Masking by Redundancy

Triple modular redundancy.

TMR is an example of structural redundancy.

Voters filter out incorrect signals.

Voters are also “replicated” because they may fail, too.
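A voter can be sketched as a simple majority function over the outputs of the replicated units. This is an illustrative sketch only (the name `vote` and the convention of returning `None` when no strict majority exists are assumptions, not part of the slides):

```python
from collections import Counter


def vote(inputs):
    """Return the value produced by a strict majority of the inputs.

    Returns None if no value is produced by more than half of the units.
    """
    value, count = Counter(inputs).most_common(1)[0]
    return value if count > len(inputs) / 2 else None


masked = vote([1, 1, 0])   # one faulty unit is outvoted by the two correct ones
```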

System Reliability

• Problem:

Suppose you know the reliability of each component in your system, what is the reliability of

your system?

• Let S = {C1, …, Cn} be a system consisting of n components C1, …, Cn.

Let pi and Ri(t) be respectively the failure probability and the reliability of

component i.

Typical cases: Serial structure, parallel structure, k-from-n structure.

Serial Structure: All components are needed in order for the system to work.

System reliability:

$$R_S(t) = \prod_{i=1}^{n} R_i(t)$$

In words: The system survives t iff all components survive t.

System's failure probability:

$$s(p_1, \dots, p_n) = 1 - \prod_{i=1}^{n} (1 - p_i)$$

In words: The system is intact iff all $C_i$ are intact. Since $1 - p_i$ is the probability that $C_i$ is intact, it follows that $1 - s = p(\text{System is intact}) = \prod_{i=1}^{n} (1 - p_i)$.

[Figure: components 1, 2, …, n-1, n connected in series]

System Reliability

Example: 3 servers, each with failure probability p and reliability R(t), and all of them are needed to achieve a special task. This is a serial structure of three servers.

1) System failure probability: $s(p) = 1 - (1-p)^3$

2) System reliability: $R_S(t) = R(t)^3$

Suppose $R(t) = e^{-\lambda t}$; then $R_S(t) = e^{-3\lambda t}$.

In other words, the lifetime expectation of the system is

$$E[L_S] = \int_0^\infty e^{-3\lambda t}\,dt = \frac{1}{3\lambda} = \frac{E[L]}{3}$$

(with $E[L] = 1/\lambda$ the lifetime expectation of one server)

Parallel Structure: At least one component is needed for the system to work.

System reliability:

$$R_S(t) = 1 - \prod_{i=1}^{n} (1 - R_i(t))$$

In words: A component does not survive t with probability $1 - R_i(t)$, hence the system does not survive t with probability $\prod_{i=1}^{n} (1 - R_i(t))$, which is clearly $1 - R_S(t)$.

System failure probability:

$$s(p_1, \dots, p_n) = \prod_{i=1}^{n} p_i$$

In words: The system fails iff all components fail.

[Figure: components 1, 2, …, n connected in parallel]

System Reliability

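Example: 3 servers, each with failure probability p and reliability R(t), and at least one of them is needed to achieve a special task. This is a parallel structure of three servers.

1) System failure probability: $s(p) = p^3 < p$ (for $0 < p < 1$)

2) System reliability: $R_S(t) = 1 - (1 - R(t))^3$

Suppose $R(t) = e^{-\lambda t}$; then $R_S(t) = 1 - (1 - e^{-\lambda t})^3$.

The lifetime expectation of the system is

$$E[L_S] = \int_0^\infty \left(1 - (1 - e^{-\lambda t})^3\right) dt = \frac{1}{\lambda}\left(1 + \frac{1}{2} + \frac{1}{3}\right) = E[L] + \frac{E[L]}{2} + \frac{E[L]}{3} > E[L]$$

(In general: $\int_0^\infty \left(1 - (1 - e^{-\lambda t})^n\right) dt = \frac{1}{\lambda} \sum_{i=1}^{n} \frac{1}{i}$)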

k-from-n Structure: At least k components are needed for the system to work.

$$R_S(t) = \sum_{i=k}^{n} \binom{n}{i} R(t)^i\,(1 - R(t))^{n-i} \quad (\text{we assume } R_i(t) = R(t))$$

Example: TMR (2-from-3 structure with voter)

$R_S(t) = 3R(t)^2 - 2R(t)^3$ and $F_S(t) = 3F(t)^2 - 2F(t)^3$

$$\frac{F(t)}{F_S(t)} = \frac{1}{3F(t) - 2F(t)^2} \approx \frac{1}{3F(t)} \quad \text{for small } F(t)$$

Thus the ratio $F(t)/F_S(t)$ is high for small F(t), i.e. the gain of TMR is huge.

(However, in general the lifetime of a TMR system is less than that of a single component.)
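The three structures can be compared numerically. The following sketch evaluates the serial, parallel and k-from-n formulas for fixed component reliabilities at some time t; the function names are chosen for this illustration, and identical, independent components are assumed in the k-from-n case.

```python
from math import comb, prod


def serial(reliabilities):
    """All components are needed: product of the component reliabilities."""
    return prod(reliabilities)


def parallel(reliabilities):
    """At least one component is needed: 1 - product of the failure probabilities."""
    return 1 - prod(1 - r for r in reliabilities)


def k_from_n(k, n, r):
    """At least k of n identical, independent components (each with reliability r) are needed."""
    return sum(comb(n, i) * r**i * (1 - r) ** (n - i) for i in range(k, n + 1))


r = 0.9
print(serial([r] * 3))     # about 0.729
print(parallel([r] * 3))   # about 0.999
print(k_from_n(2, 3, r))   # about 0.972, i.e. 3r^2 - 2r^3 (TMR)
```

Note that 3-from-3 reduces to the serial structure and 1-from-3 to the parallel structure.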

System Reliability

TMR (continued)

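In fact, TMR can be viewed as a combination of serial and parallel structures (see figure below).

1) TMR failure probability:

Let p be the failure probability of one component.

Let p[i,j] be the failure probability of two serial components i and j.

$p[i,j] = 1 - (1-p)^2$ for all i and j.

If the three pairs were independent, this would give $s(p) = p[1,2] \cdot p[1,3] \cdot p[2,3] = (1 - (1-p)^2)^3$. However, each component appears in two pairs, so the pairs are not independent; the exact value is $s(p) = 3p^2 - 2p^3$, in agreement with $F_S(t) = 3F(t)^2 - 2F(t)^3$.

2) TMR reliability:

Suppose $R(t) = e^{-\lambda t}$; then $R_S(t) = 3e^{-2\lambda t} - 2e^{-3\lambda t}$.

$$E[L_S] = \int_0^\infty R_S(t)\,dt = \frac{3}{2\lambda} - \frac{2}{3\lambda} = \frac{5}{6\lambda} = \frac{5}{6}\,E[L] < E[L]$$

Attention: here we ignored the voter component needed in TMR.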

[Figure: TMR drawn as three parallel branches, each a serial pair of components: (1, 2), (1, 3) and (2, 3)]
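The 2-from-3 failure probability 3F² - 2F³ stated for TMR can be cross-checked by brute force: enumerate all eight intact/failed combinations of the three components and add up the probability of those in which fewer than two are intact. This is only a sketch; it assumes independent components with the same failure probability p and, like the slide, ignores the voter.

```python
from itertools import product


def tmr_failure_probability(p):
    """Probability that fewer than 2 of 3 independent components are intact."""
    total = 0.0
    for state in product([True, False], repeat=3):  # True = component intact
        prob = 1.0
        for intact in state:
            prob *= (1 - p) if intact else p
        if sum(state) < 2:  # no majority of intact components
            total += prob
    return total


p = 0.1
brute_force = tmr_failure_probability(p)
closed_form = 3 * p**2 - 2 * p**3   # both are about 0.028
```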

Flat Groups versus Hierarchical Groups

a) Communication in a flat group.

b) Communication in a simple hierarchical group.

Motivation: Replicated processes are organized into groups in order to achieve higher availability.

Main assumption: Processes are identical (and in general run on different machines).

Attention: Replication cannot increase the reliability of an individual process.

Agreement in Faulty Systems

n = 4, m = 1: Agreement: (1, 2, ?, 4)

n = 3, m = 1: No agreement is possible

Byzantine generals problem: n generals, of which n-m are loyal and m are traitors; the generals try to reach an agreement.

With m traitors, at least 2m+1 loyal generals are needed for an agreement (i.e. n ≥ 3m+1).

(Two-army problem: 2 perfect generals (processes), but the communication channel is impaired. No agreement is possible.)

RPC Semantics in Presence of Failures

• Client cannot find server (e.g. server down):

Solution: Exception handling (not transparent).

• Request to server is lost:

Solution: Resend request after timeout

- Message identifiers are needed to detect duplicates.

- Server is then stateful.

• Reply from server is lost:

Solution: Resend request after timeout

- Client cannot be sure whether request or reply was lost.

- Operations that the server performed may be re-executed; not all operations are idempotent!

• Client crash: Server may be executing a request on behalf of a crashed client (an orphan).

Solution: find orphans and kill them (solutions are not ideal).

1) Extermination: client logs requests, and after restart it kills any orphan.

2) Reincarnation: Divide time into intervals; after restart, client broadcasts a new epoch number. Orphans are killed on receipt of such a message.

3) Expiration: Server works T units of time and asks client for more time if needed. Client waits T time units after restart (to be sure that potential orphans are gone).

• Server crash: see next slides
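The remark that message identifiers are needed to detect duplicates (and that the server then becomes stateful) can be sketched as follows. The class name `DedupServer` and its interface are hypothetical; the sketch only shows how a resent request with a known identifier is answered from a reply cache instead of being executed again, which matters for non-idempotent operations.

```python
class DedupServer:
    """Server that executes each request identifier at most once."""

    def __init__(self, handler):
        self.handler = handler
        self.replies = {}      # request id -> cached reply (the server's state)
        self.executions = 0

    def handle(self, request_id, payload):
        if request_id in self.replies:
            # Duplicate (e.g. resent after a lost reply): do not re-execute.
            return self.replies[request_id]
        self.executions += 1
        reply = self.handler(payload)
        self.replies[request_id] = reply
        return reply


balance = {"value": 0}


def deposit(amount):
    """Non-idempotent operation: every execution changes the balance."""
    balance["value"] += amount
    return balance["value"]


server = DedupServer(deposit)
first = server.handle("req-1", 10)
again = server.handle("req-1", 10)   # client resends after a timeout
```

After the resend the balance is still 10, because the second call is served from the cache. A real server would also have to bound the size of the reply cache, which this sketch ignores.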

Server Crashes (1)

A server in client-server communication

a) Normal case

b) Crash after execution

c) Crash before execution

Problem: From the point of view of the client, (b) looks like (c): there is simply no reply.

Different RPC semantics:

At-least-once semantics: keep trying until a reply has been received.

At-most-once semantics: never retry a request.

Exactly-once semantics: generally unachievable in distributed systems.

Server Crashes (2)

| Client reissue strategy | Server strategy M → P: MPC | MC(P) | C(MP) | Server strategy P → M: PMC | PC(M) | C(PM) |
|---|---|---|---|---|---|---|
| Always | 2 | 1 | 1 | 2 | 2 | 1 |
| Never | 1 | 0 | 0 | 1 | 1 | 0 |
| Only when ACKed | 2 | 1 | 0 | 2 | 1 | 0 |
| Only when not ACKed | 1 | 0 | 1 | 1 | 2 | 1 |

Different combinations of client and server strategies in the presence of server crashes. Each entry is the number of times the operation is executed.

P: Processing, C: Crash, M: Completion message. Events in parentheses do not happen because the crash comes first.

Server strategies:

MP: Send completion message before processing

PM: Send completion message after processing

Thus: The number of executions of an operation depends on when the server crash has occurred.
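The entries of the table can be derived mechanically. The sketch below counts one execution if the server processed the request before crashing, plus one more if the client reissues the request; it assumes that a reissued request is executed exactly once by the recovered server. The function names and strategy labels are made up for this illustration.

```python
def executions(server_order, steps_before_crash, reissue):
    """Number of times the operation is executed.

    server_order: "MP" (completion message first) or "PM" (processing first).
    steps_before_crash: how many of the two steps finished before the crash (0, 1 or 2).
    reissue: "always", "never", "only_when_acked" or "only_when_not_acked".
    """
    done = server_order[:steps_before_crash]
    processed = "P" in done   # the operation ran once before the crash
    acked = "M" in done       # the client received the completion message
    reissue_rules = {
        "always": True,
        "never": False,
        "only_when_acked": acked,
        "only_when_not_acked": not acked,
    }
    # Assumption: a reissued request is executed exactly once after recovery.
    return int(processed) + int(reissue_rules[reissue])


def table_row(reissue):
    """Column order as in the table: MPC, MC(P), C(MP), PMC, PC(M), C(PM)."""
    return [executions(order, steps, reissue)
            for order in ("MP", "PM") for steps in (2, 1, 0)]


for strategy in ("always", "never", "only_when_acked", "only_when_not_acked"):
    print(strategy, table_row(strategy))
```

No row consists only of ones, which is another way of seeing that no combination of strategies gives exactly-once behavior in all crash scenarios.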

Basic Reliable-Multicasting Schemes

a) Message transmission

b) Reporting feedback

A simple solution to reliable multicasting when all receivers are known and are assumed not to fail.

Problem: Sender overwhelmed with ACKs

Feedback Control (for better Scalability)

Several receivers have scheduled a request for retransmission, but the first retransmission request leads to the suppression of others.

- Only negative ACKs (NACKs) are sent back to the sender.

- Any receiver may suppress its NACK if another receiver has already requested the same message from the sender:

1. A receiver that wants to send a NACK waits a random delay T.

2. If it has not received any NACK by the time T elapses, it multicasts its own NACK.

3. If it receives a NACK before T elapses, it suppresses its own scheduled NACK.
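The three steps can be sketched as a small deterministic model. It assumes that every receiver missed the same message, that the random delays have already been drawn (they are passed in as `timers`), and that a multicast NACK reaches all other receivers after the same `propagation_delay`; these names and simplifications are assumptions of this sketch.

```python
def nack_senders(timers, propagation_delay=0.0):
    """Return the receivers that actually multicast a NACK.

    timers: dict mapping receiver name -> its random delay T.
    A receiver sends its NACK if its timer expires before the first NACK
    (sent when the smallest timer expires) has reached it.
    """
    first = min(timers.values())
    heard_at = first + propagation_delay
    return sorted(r for r, t in timers.items() if t == first or t < heard_at)


timers = {"r1": 0.8, "r2": 0.3, "r3": 0.5}
print(nack_senders(timers))                          # ['r2']
print(nack_senders(timers, propagation_delay=0.25))  # ['r2', 'r3']
```

With a non-zero propagation delay more than one NACK can still be sent, so the scheme reduces feedback but does not guarantee a single NACK.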

Virtual Synchrony (1)

The logical organization of a distributed system to distinguish between message receipt and

message delivery

Virtual synchrony: Deals with reliable multicast in presence of process failures.

Minimum requirement: If a sender sends a message m to a group G, m is either delivered to all

non-faulty processes in G or to none of them (reliable multicast).

Problem: What if the group membership changes during the transmission of m?

Virtual Synchrony (2)

The principle of virtual synchronous multicast.

• Process P3 crashes, so the group membership changes.

1) Partially sent multicast messages of P3 should be discarded.

2) P3 should be removed from the group.

• If the group membership changes voluntarily (without crashes), the system should deliver partially sent multicasts to all members or to none of them.

Message Ordering (1)

| Process P1 | Process P2 | Process P3 |
|---|---|---|
| sends m1 | receives m1 | receives m2 |
| sends m2 | receives m2 | receives m1 |

Three communicating processes in the same group.

Typical message ordering policies for virtual synchrony:

1) No requirements: any ordering is valid

2) FIFO-ordering of multicasts: messages originating from the same process are

delivered in the order the process has sent them.

3) Causally-ordered multicasts: messages that are causally related are delivered

in the order of causality; but no requirement for concurrent messages.

4) Totally-ordered multicast: all messages appear in same order to all group

members (orthogonal to FIFO, causal, or no requirement).

Message Ordering (2)

| Process P1 | Process P2 | Process P3 | Process P4 |
|---|---|---|---|
| sends m1 | receives m1 | receives m3 | sends m3 |
| sends m2 | receives m3 | receives m1 | sends m4 |
| | receives m2 | receives m2 | |
| | receives m4 | receives m4 | |

Four processes in the same group with two different senders, and a possible delivery order of messages under FIFO-ordered multicasting.

With totally-ordered FIFO multicast, P2 and P3 would have to be delivered the messages in the same order; for example, P2 would also be delivered message m3 and then message m1.
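The delivery orders in the table can be checked against the ordering policies with two small predicates. This is a sketch with names chosen for illustration; it only checks a recorded delivery order after the fact and is not a multicast implementation.

```python
def is_fifo(sent, delivered):
    """True if every receiver sees each sender's messages in sending order.

    sent: sender -> list of messages in the order they were sent.
    delivered: receiver -> list of messages in the order they were delivered.
    """
    for order in delivered.values():
        for msgs in sent.values():
            seen = [m for m in order if m in msgs]          # delivery order
            expected = [m for m in msgs if m in seen]       # sending order
            if seen != expected:
                return False
    return True


def is_total(delivered):
    """True if all receivers are delivered the messages in the same order."""
    orders = list(delivered.values())
    return all(order == orders[0] for order in orders)


sent = {"P1": ["m1", "m2"], "P4": ["m3", "m4"]}
delivered = {
    "P2": ["m1", "m3", "m2", "m4"],
    "P3": ["m3", "m1", "m2", "m4"],
}
print(is_fifo(sent, delivered))   # True: FIFO order holds for both senders
print(is_total(delivered))        # False: P2 and P3 disagree on m1 and m3
```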

Implementing Virtual Synchrony (1)

| Multicast | Basic Message Ordering | Total-ordered Delivery? |
|---|---|---|
| Reliable multicast | None | No |
| FIFO multicast | FIFO-ordered delivery | No |
| Causal multicast | Causal-ordered delivery | No |
| Atomic multicast | None | Yes |
| FIFO atomic multicast | FIFO-ordered delivery | Yes |
| Causal atomic multicast | Causal-ordered delivery | Yes |

Six different versions of virtually synchronous reliable multicasting.

Implementing Virtual Synchrony (2)

a) Process 4 notices that process 7 has crashed and sends a view change.

b) Process 6 sends out all its unstable messages, followed by a flush message.

c) Process 6 installs the new view when it has received a flush message from everyone else.

The above protocol is implemented in Isis on top of TCP/IP.

Unstable message: A message that has been received by only some group members (e.g. only processes 1 and 2 received it).

Two-Phase Commit (1)

a) The finite state machine for the coordinator in 2PC.

b) The finite state machine for a participant.

Main goal: All-or-nothing property in the presence of failures.

Failures: Crash of the coordinator or of a participant.

Two-Phase Commit (2)

| State of Q | Action by P (in READY state) |
|---|---|
| COMMIT | Make transition to COMMIT |
| ABORT | Make transition to ABORT |
| INIT | Make transition to ABORT |
| READY | Contact another participant (if all participants are in READY state, wait until coordinator recovers) |

Actions taken by a participant P when residing in state READY and having contacted another participant Q.

Situations:

1) Coordinator crash (or no response after timeout):

1.1 If participant P is in state INIT, P makes a transition to state ABORT.

1.2 If participant P is in state READY, P contacts another participant Q (see table above).

2) Participant crash (or no response from a participant after timeout):

Since the coordinator must be in the WAIT state, it makes a transition to the ABORT state.
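The table above is essentially a lookup from the state of Q to the action of P. A minimal sketch (the function name and the returned labels are chosen for illustration):

```python
def action_in_ready(q_state):
    """Action of a participant P in state READY after learning the state of Q."""
    if q_state == "COMMIT":
        return "COMMIT"
    if q_state in ("ABORT", "INIT"):
        # Q in INIT means the coordinator cannot have decided to commit yet.
        return "ABORT"
    if q_state == "READY":
        return "CONTACT_ANOTHER_PARTICIPANT"
    raise ValueError(f"unknown state: {q_state}")
```

If every contacted participant is in READY, no one can decide, and P stays blocked until the coordinator recovers.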

Two-Phase Commit (3)

Actions by coordinator:

    write START_2PC to local log;
    multicast VOTE_REQUEST to all participants;
    while not all votes have been collected {
        wait for any incoming vote;
        if timeout {
            write GLOBAL_ABORT to local log;
            multicast GLOBAL_ABORT to all participants;
            exit;
        }
        record vote;
    }
    if all participants sent VOTE_COMMIT and coordinator votes COMMIT {
        write GLOBAL_COMMIT to local log;
        multicast GLOBAL_COMMIT to all participants;
    } else {
        write GLOBAL_ABORT to local log;
        multicast GLOBAL_ABORT to all participants;
    }

Outline of the steps taken by the coordinator in a two-phase commit protocol.
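The decision logic of the coordinator can be sketched in runnable form. This is a simplified model, not a networked implementation: the votes are passed in as a dictionary, a timeout is represented by `None`, the log is a plain list, and the multicasts are left out. The function name and these conventions are assumptions of the sketch.

```python
def run_coordinator(votes, coordinator_vote="COMMIT"):
    """Return (decision, log) for one run of the coordinator.

    votes: participant -> "VOTE_COMMIT", "VOTE_ABORT", or None (timeout).
    """
    log = ["START_2PC"]
    for vote in votes.values():
        if vote is None:  # timeout while waiting for this vote
            log.append("GLOBAL_ABORT")
            return "GLOBAL_ABORT", log
    all_commit = all(vote == "VOTE_COMMIT" for vote in votes.values())
    if all_commit and coordinator_vote == "COMMIT":
        decision = "GLOBAL_COMMIT"
    else:
        decision = "GLOBAL_ABORT"
    log.append(decision)  # the decision is logged before it would be multicast
    return decision, log


decision, log = run_coordinator({"P1": "VOTE_COMMIT", "P2": "VOTE_COMMIT"})
```

A single VOTE_ABORT or a single missing vote is enough to abort the whole transaction, which is what gives the all-or-nothing property.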

Two-Phase Commit (4)

Actions by participant:

    write INIT to local log;
    wait for VOTE_REQUEST from coordinator;
    if timeout {
        write VOTE_ABORT to local log;
        exit;
    }
    if participant votes COMMIT {
        write VOTE_COMMIT to local log;
        send VOTE_COMMIT to coordinator;
        wait for DECISION from coordinator;
        if timeout {
            multicast DECISION_REQUEST to other participants;
            wait until DECISION is received; /* remain blocked */
            write DECISION to local log;
        }
        if DECISION == GLOBAL_COMMIT
            write GLOBAL_COMMIT to local log;
        else if DECISION == GLOBAL_ABORT
            write GLOBAL_ABORT to local log;
    } else {
        write VOTE_ABORT to local log;
        send VOTE_ABORT to coordinator;
    }

Note: After a timeout, DECISION must come from another participant or from the coordinator after a potential recovery.

Steps taken by a participant process in 2PC.

Two-Phase Commit (5)

Actions for handling decision requests: /* executed by separate thread */

    while true {
        wait until any incoming DECISION_REQUEST is received; /* remain blocked */
        read most recently recorded STATE from the local log;
        if STATE == GLOBAL_COMMIT
            send GLOBAL_COMMIT to requesting participant;
        else if STATE == INIT or STATE == GLOBAL_ABORT
            send GLOBAL_ABORT to requesting participant;
        else
            skip; /* other participant remains blocked */
    }

Steps taken for handling incoming decision requests.

Three-Phase Commit

a) Finite state machine for the coordinator in 3PC.

b) Finite state machine for a participant.

Recovery: Stable Storage

a) Stable storage, e.g. two disks (Disk 1 and Disk 2)

b) Crash after Disk 1 is updated: a' is used (an update always begins with Disk 1)

c) Bad spot: get a copy from the other disk
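The two recovery cases can be sketched with two in-memory "disks". This is a toy model under stated assumptions: the class `Disk` and the functions `stable_write` and `recover` are invented for this illustration, blocks carry a CRC-32 checksum so that a bad spot can be detected, and a crash between the two writes is simulated by a flag.

```python
import zlib


class Disk:
    """Toy disk: block number -> (data, checksum)."""

    def __init__(self):
        self.blocks = {}

    def write(self, n, data):
        self.blocks[n] = (data, zlib.crc32(data))

    def read(self, n):
        """Return the data, or None if the block is missing or corrupted (bad spot)."""
        entry = self.blocks.get(n)
        if entry is None or zlib.crc32(entry[0]) != entry[1]:
            return None
        return entry[0]


def stable_write(disk1, disk2, n, data, crash_between=False):
    """Update Disk 1 first, then Disk 2; optionally 'crash' in between."""
    disk1.write(n, data)
    if crash_between:
        return
    disk2.write(n, data)


def recover(disk1, disk2, n):
    """Make both copies of block n valid and identical again."""
    a, b = disk1.read(n), disk2.read(n)
    if a is not None and a != b:
        disk2.write(n, a)   # Disk 1 is written first, so it holds the newer value
    elif a is None and b is not None:
        disk1.write(n, b)   # bad spot on Disk 1: take the copy from Disk 2


d1, d2 = Disk(), Disk()
stable_write(d1, d2, 0, b"a")
stable_write(d1, d2, 0, b"a'", crash_between=True)  # crash after Disk 1 is updated
recover(d1, d2, 0)                                   # both disks now hold a'
```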

Checkpointing (1)

A recovery line (consistent cut).

Recovery:

Backward recovery: go back to an earlier fault-free state (checkpointing).

Forward recovery: switch to a new fault-free state (e.g. replication).

Problems with checkpointing:

- Added overhead (message logging can help)

- Finding a recovery line (see figure)

Checkpointing (2)

[Figure: two processes with checkpoints c11, c12, c13 and c21, c22, c23]

The domino effect (independent checkpointing).

(c13, c23) and (c13, c22) are inconsistent, since m and m', respectively, are recorded as received but not as sent.

Recovery from the initial states is needed!

Solution: coordinated checkpointing, which always leads to a consistent cut:

1. Coordinator multicasts CP_REQUEST to all processes.

2. Processes take the checkpoint and defer any message traffic until CP_DONE has been received from the coordinator (P1 does not send m).

3. After an ACK from all processes, the coordinator multicasts CP_DONE.
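The consistency criterion used above (a cut is inconsistent if some message is recorded as received but not as sent) can be written as a short check. The representation is an assumption of this sketch: each checkpoint is given as a local time per process, each message as a tuple of sender, send time, receiver and receive time, and the example message and times are hypothetical.

```python
def is_consistent_cut(cut, messages):
    """Check whether a set of checkpoints forms a consistent cut.

    cut: process -> local time at which its checkpoint was taken.
    messages: list of (sender, send_time, receiver, receive_time).
    """
    for sender, send_time, receiver, receive_time in messages:
        received_in_cut = receive_time <= cut[receiver]
        sent_in_cut = send_time <= cut[sender]
        if received_in_cut and not sent_in_cut:
            return False  # message recorded as received but not as sent
    return True


# Hypothetical message m: sent by P1 at local time 5, received by P2 at local time 6.
messages = [("P1", 5, "P2", 6)]
print(is_consistent_cut({"P1": 4, "P2": 7}, messages))  # False
print(is_consistent_cut({"P1": 5, "P2": 7}, messages))  # True
```

A message that is sent before the cut but received after it does not make the cut inconsistent; it is merely in transit.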

Message Logging

Incorrect message replay after recovery, leading to an orphan process (R)

Message logging: Messages since the last checkpoint are logged and replayed after recovery.

Advantages:

+ Helps reduce checkpointing frequency (i.e. overhead).

+ Message replay means that the behavior of the process before and after recovery is the same.

Problem: orphan processes (see figure: R does work for Q, and Q does not know that)