Coordinated Workload Scheduling: A New Application Domain for Mechanism Design

Elie Krevat

Introduction

Distributed systems are becoming larger and more complex

Nodes perform computation and storage tasks

Workloads enter system and are distributed across nodes

Clients run many workloads, can pay for resources

Nodes service many workloads (not dedicated)

System provides QoS guarantees:

Performance – load balance workloads to faster free nodes

Efficiency – minimize cycles wasted while tasks are available

Fairness – nodes share resources across workloads

Benefits of Shared Storage

Why cluster? Scaling, cost, and management.

Why share? Slack sharing, economies of scale, uniformity.

[Figure: Throughput performance]

Insulation in Shared Storage

Each of n workloads on a server:

Executes efficiently within its portion of time (timeslice)

Ideally: gets ≥ 1/n of its standalone performance

In practice: within a fraction of the ideal

Argon project [Wachs07] provides bounds on efficiency across workloads for one server

Problems arise when extending to many servers (cluster-style)

Synchronized workloads need coordination of schedules

Performance of system limited by slowest node
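A small sketch of the insulation argument, under an assumed model: each server gives a striped workload some fraction of its ideal 1/n share, and a synchronized workload cannot advance until every server has done its part, so it runs at the pace of its worst-insulated server. The function names and throughput numbers are hypothetical, not taken from Argon.

```python
# Hypothetical model: a server shared by n workloads should ideally give each
# one >= 1/n of its standalone throughput. A synchronized workload needs every
# server's piece before it proceeds, so the slowest server sets its pace.

def insulation_fraction(shared_tput: float, standalone_tput: float, n: int) -> float:
    """Throughput received while sharing, relative to the ideal standalone / n."""
    ideal = standalone_tput / n
    return shared_tput / ideal


def synchronized_fraction(per_server_fractions: list[float]) -> float:
    """Effective fraction for a workload that must wait on all of its servers."""
    return min(per_server_fractions)


if __name__ == "__main__":
    # Hypothetical measurements: 3 workloads share each server (n = 3).
    fractions = [
        insulation_fraction(30.0, 100.0, 3),  # server A: about 0.9 of ideal
        insulation_fraction(32.0, 100.0, 3),  # server B: about 0.96 of ideal
        insulation_fraction(20.0, 100.0, 3),  # server C: about 0.6 of ideal
    ]
    print(round(synchronized_fraction(fractions), 2))  # 0.6
```

Two servers insulate well, yet the workload as a whole only gets about 0.6 of its ideal share because server C holds every synchronized step back.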

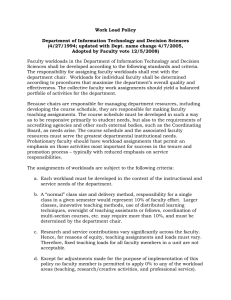

Timeslice challenges

140 ms

Workload 1

Workload 2

Workload 3

Server A

Workload 1

Workload 2

280 ms

Workload 4

Server B

Workload 1

Workload 4

100 ms

1

6

3

2

5

1

Server C

6

3

Cluster-style Storage Systems

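[Figure: Synchronized read. A client sends read requests (R) through a switch to several storage servers, each holding one data fragment of a data block; the client sends its next batch of requests only after every fragment has returned.]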
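The synchronized read can be modeled as a toy timing calculation. Assumptions (not from the slides): each server rotates through its workloads in a fixed cycle, this workload's slice starts at a per-server offset, a request waits for that slice and then takes a fixed service time that fits inside it, and queueing is ignored. `ServerSlice` and the helper functions are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class ServerSlice:
    """Hypothetical model of one server's round-robin timeslicing."""
    cycle_ms: float   # length of one full rotation through all workloads
    offset_ms: float  # start of this workload's slice within the rotation


def next_slice_start(t_ms: float, s: ServerSlice) -> float:
    """Earliest time >= t_ms at which the workload's slice begins on server s."""
    cycles = math.ceil((t_ms - s.offset_ms) / s.cycle_ms)
    return s.offset_ms + max(cycles, 0) * s.cycle_ms


def synchronized_read_done(t_ms: float, servers: list[ServerSlice], service_ms: float) -> float:
    """The read finishes only when the slowest fragment has come back."""
    return max(next_slice_start(t_ms, s) + service_ms for s in servers)


if __name__ == "__main__":
    coordinated = [ServerSlice(120.0, 0.0) for _ in range(3)]
    staggered = [ServerSlice(120.0, 0.0), ServerSlice(120.0, 40.0), ServerSlice(120.0, 80.0)]
    print(synchronized_read_done(0.0, coordinated, 5.0))  # 5.0
    print(synchronized_read_done(0.0, staggered, 5.0))    # 85.0
```

With identical offsets the read returns after one service time; staggering the same slices by 40 ms per server pushes completion out to the last server's slice, which is the coordination problem in miniature.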

Environment Assumptions

One client per workload

Bounded number W of workloads, N of nodes

Constant set of workloads to be scheduled

But mechanism might support changing set

Communication doesn’t interfere with computation/storage tasks

Workload Distribution Settings

Two alternative workload distribution settings

Setting I: Free Workload Assignment

Workloads can be freely assigned to many nodes

Example: Embarrassingly parallel distributed apps

Problem: Determine best set of nodes to assign

Setting II: Fixed Workload Assignment

Workloads must be assigned to fixed set of nodes

Example: Cluster-style storage

Problem: Coordinate responses of nodes with better timeslice scheduling

Computing Environments with Monetary Incentives

Workloads pay for resources:

Weather forecasting

Seismic measurement simulations of oil fields

Distributed systems sell resources

Supercomputing centers sell resources

Shared infrastructures

Grid computing

Individually-owned computers sell spare cycles

SETI@Home for $$

May not have a single administrative domain

Why Mechanism Design?

Central coordinator(s) lack per-node information

Enforce cooperation and global QoS

Different performance capabilities and revenue models

Efficiency and fairness not always goals of players

Reduce scheduling problems to general mechanism

Scheduling coordinated workloads is hard (NP-hardness shown later)

Divide scheduling problems across nodes

Design mechanism to produce coordination

Outline

Background and Motivation

Mechanism I: Free Workload Assignment

Mechanism II: Fixed Workload Assignment

Conclusions

Revenue Model: Free Assignment

Clients pay nodes directly after task

Clients may also pay fixed cost to central scheduler

Workloads want the best and fastest nodes

Central scheduler doesn’t know load/speed of nodes

Nodes are greedy and want lots of workloads

Payment is per-workload

Amount depends on many factors:

Speed of response

Number of requests/computations per timeslot

May lie about load/speed if asked directly

System Goal: Assign workloads to nodes that will respond fastest

Mechanism Design: VCG

Run an auction to decide which M nodes to assign the workload to

Nodes respond with bids

FIFO approach to scheduling each workload

Can also run a combinatorial auction on bundles of workloads

Valuations depend on speed and current load

Same factors that affect final payment

Apply Vickrey-Clarke-Groves mechanism

First auction iteration finds top M bids

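Conceptually, remove node X and recompute the top M bids

Additional auction iteration not actually necessary: without X, the M+1st bid simply moves into the top M

X pays the M+1st bid, so X keeps the difference between its bid and the M+1st bid (its marginal contribution)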

May also normalize payments to share wealth over nodes
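The VCG steps above can be checked on a toy instance. This is a hedged sketch, assuming M identical slots for one workload, nodes that each want at most one slot, and bids equal to the nodes' valuations; it computes each winner's price with the Clarke pivot rule by literally removing X and recomputing, which shows the price collapses to the M+1st bid. All names and numbers are hypothetical.

```python
# Hypothetical sketch: VCG with Clarke pivot payments for M identical slots,
# unit-demand bidders (each node wants at most one slot of the workload).

def top_m(bids: dict[str, float], m: int) -> list[str]:
    """Names of the m highest bidders (ties broken by name for determinism)."""
    return sorted(bids, key=lambda node: (-bids[node], node))[:m]


def vcg_auction(bids: dict[str, float], m: int) -> tuple[list[str], dict[str, float]]:
    winners = top_m(bids, m)
    prices = {}
    for x in winners:
        # "Remove node X, recompute top M bids": best welfare of others without X.
        others = {node: b for node, b in bids.items() if node != x}
        welfare_without_x = sum(others[node] for node in top_m(others, m))
        # Welfare the other winners actually get when X participates.
        welfare_with_x = sum(bids[node] for node in winners if node != x)
        prices[x] = welfare_without_x - welfare_with_x
    return winners, prices


def utility(true_value: float, node: str, bids: dict[str, float], m: int) -> float:
    """Node's surplus under VCG given everyone's reported bids."""
    winners, prices = vcg_auction(bids, m)
    return true_value - prices[node] if node in winners else 0.0


if __name__ == "__main__":
    bids = {"A": 9.0, "B": 7.0, "C": 6.0, "D": 4.0, "E": 2.0}
    winners, prices = vcg_auction(bids, m=3)
    print(winners, prices)  # ['A', 'B', 'C'] {'A': 4.0, 'B': 4.0, 'C': 4.0}

    # Truthfulness spot check for node C (true value 6): no misreport beats it.
    truthful = utility(6.0, "C", bids, 3)
    for report in [1.0, 3.0, 5.0, 8.0, 20.0]:
        assert utility(6.0, "C", {**bids, "C": report}, 3) <= truthful
```

Every winner pays 4.0, node D's bid, which is the M+1st bid for M = 3. The loop at the end only spot-checks a few misreports; it illustrates, rather than proves, incentive compatibility.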

Mechanism Results

Incentive compatible

Nodes have no incentive to lie: a winner's price is set by the M+1st bid, not by its own bid, so over-reporting cannot lower the price and only risks winning a workload worth less than that price

Global efficiency (i.e., best allocation for workload)

Related to general task allocation problem [Nisan99]

k tasks allocated to n agents

Goal is to minimize the completion time of the last task (the makespan)

Valuation of agent is negation of total time spent on tasks

Approximation/randomized algorithms exist for combinatorial auctions (CA)
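A toy version of the task allocation problem [Nisan99] makes the makespan goal and the agent valuation concrete. This sketch assumes `times[i][j]` is agent i's time on task j; it brute-forces the makespan-optimal assignment (only feasible for tiny instances) and contrasts it with the welfare-maximizing assignment that sends every task to its fastest agent. Function names and data are hypothetical.

```python
from itertools import product


def loads(assignment: tuple[int, ...], times: list[list[float]]) -> list[float]:
    """Total time each agent spends; assignment[j] is the agent given task j."""
    load = [0.0] * len(times)
    for task, agent in enumerate(assignment):
        load[agent] += times[agent][task]
    return load


def makespan(assignment: tuple[int, ...], times: list[list[float]]) -> float:
    """Completion time of the last agent to finish."""
    return max(loads(assignment, times))


def valuation(agent: int, assignment: tuple[int, ...], times: list[list[float]]) -> float:
    """Valuation of an agent: minus its total time spent on its tasks."""
    return -loads(assignment, times)[agent]


def optimal_assignment(times: list[list[float]]) -> tuple[int, ...]:
    """Brute force over all n**k assignments (exponential, tiny k only)."""
    n, k = len(times), len(times[0])
    return min(product(range(n), repeat=k), key=lambda a: makespan(a, times))


def min_total_time_assignment(times: list[list[float]]) -> tuple[int, ...]:
    """Each task to its fastest agent: maximizes total valuation, not makespan."""
    n, k = len(times), len(times[0])
    return tuple(min(range(n), key=lambda i: times[i][j]) for j in range(k))


if __name__ == "__main__":
    times = [[1.0] * 4, [2.0] * 4]  # agent 0 is twice as fast on every task
    best = optimal_assignment(times)
    greedy = min_total_time_assignment(times)
    print(best, makespan(best, times))      # (0, 0, 0, 1) 3.0
    print(greedy, makespan(greedy, times))  # (0, 0, 0, 0) 4.0
    print(valuation(0, best, times))        # -3.0
```

Maximizing the sum of valuations piles all four tasks on the fast agent, so its makespan is worse than the split assignment; that gap is why approximation and randomized mechanisms matter for this objective.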

Revenue Model: Fixed Assignment

Nodes paid by system at every timestep

System wants quick resolution of workload requests

Nodes need monetary incentives to schedule fully coordinated workloads efficiently and fairly

Payment is set by the mechanism's payment scheme

A workload is fully coordinated when all M of its nodes service it in the same timeslice

Uncoordinated service of a workload has no value to the system

System Goals:

Enforce coordination of workloads per timeslice

Achieve fair distribution of resources

Achieve efficient schedule allocations
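The coordination goal can be stated as a check on a concrete schedule. This minimal sketch assumes each node runs exactly one workload per timeslice; `schedule` maps a node to the workload it runs in each timeslice and `placement` maps a workload to the fixed set of nodes holding it. Both structures and the four-node data are hypothetical illustrations.

```python
from typing import Optional

# node -> workload serviced in each timeslice (None means idle)
Schedule = dict[str, list[Optional[int]]]
# workload -> fixed set of nodes that must all service it
Placement = dict[int, set[str]]


def fully_coordinated(schedule: Schedule, placement: Placement, t: int) -> set[int]:
    """Workloads that every one of their nodes services in timeslice t."""
    return {
        w for w, nodes in placement.items()
        if all(schedule[n][t] == w for n in nodes)
    }


if __name__ == "__main__":
    placement = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "D"}, 4: {"A", "B", "D"}}
    schedule = {"A": [1, 4], "B": [1, 2], "C": [3, 2], "D": [3, 4]}
    print(fully_coordinated(schedule, placement, 0))  # {1, 3}
    print(fully_coordinated(schedule, placement, 1))  # {2}
```

In timeslice 1, nodes A and D both pick workload 4, but node B does not, so those two quanta earn nothing under the "fully coordinated" rule.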

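Coordination is hard

Wkld   Nodes
1      A,B
2      B,C
3      C,D
4      A,B,D

Node   Wklds
A      1,4
B      1,2,4
C      2,3
D      3,4

Reduce the Max Independent Set problem to the problem of scheduling the max number of fully coordinated workloads per timeslice

For every node x that services a workload w_i, w_i has a dependency edge to all other workloads serviced by x (here: 1-2, 1-4, 2-3, 2-4, 3-4)

Conversely, any graph becomes a scheduling instance: each vertex is a workload and each edge is a node that services its two endpoints. Since a node runs one workload per timeslice, the sets of workloads that can be fully coordinated in one timeslice are exactly the independent sets

NP-complete (decision version), but approximation algorithms exist

For above example, max independent set is {1,3}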
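The reduction can be exercised on the four-workload example from this slide. This hedged sketch builds the dependency graph from a node-to-workloads table, finds a maximum independent set by exhaustive search (exponential time, as expected for an NP-hard problem), and also builds the reverse instance from an arbitrary graph. All function names are hypothetical.

```python
from itertools import combinations


def conflict_graph(node_workloads: dict[str, set[int]]) -> set[tuple[int, int]]:
    """Edge between two workloads whenever some node services both."""
    edges = set()
    for wl in node_workloads.values():
        for a, b in combinations(sorted(wl), 2):
            edges.add((a, b))
    return edges


def max_coordinated_set(workloads: set[int], edges: set[tuple[int, int]]) -> set[int]:
    """Largest conflict-free set of workloads, by exhaustive search."""
    for size in range(len(workloads), 0, -1):
        for cand in combinations(sorted(workloads), size):
            if all((a, b) not in edges for a, b in combinations(cand, 2)):
                return set(cand)
    return set()


def instance_from_graph(edges: set[tuple[int, int]]) -> dict[str, set[int]]:
    """Hardness direction: one node per graph edge, servicing both endpoints."""
    return {f"n{u}_{v}": {u, v} for u, v in edges}


if __name__ == "__main__":
    node_workloads = {"A": {1, 4}, "B": {1, 2, 4}, "C": {2, 3}, "D": {3, 4}}
    edges = conflict_graph(node_workloads)
    print(sorted(edges))                             # [(1, 2), (1, 4), (2, 3), (2, 4), (3, 4)]
    print(max_coordinated_set({1, 2, 3, 4}, edges))  # {1, 3}
```

Feeding `instance_from_graph` any graph whose edges are stored as `(smaller, larger)` pairs and then calling `conflict_graph` returns the same edge set, which is the sense in which the two problems coincide.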

Properties of Schedule Allocations

Two types of schedule allocations:

Basic quanta timeslice allocation a

Longer sequence of timeslices a_tot

Set of workloads serviced by node n_x is S_x

Number of timeslice quanta allocated to workload w_i is q_i

Total quanta count Q_x for each node n_x

Delay between consecutive schedules of a workload in allocation a_tot is the schedule distance d_{i,k}

k refers to the schedule instance in a_tot

Average schedule distance d_{i,avg}; per-node average d_{x,avg}

Maximum schedule distance d_{i,max}; per-node maximum d_{x,max}
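These quantities can be computed from one node's schedule. The sketch below makes assumptions the slides leave open: a_tot is a list with one workload ID per quantum that repeats cyclically, every quantum is a schedule instance, and d_{i,k} counts the quanta w_i waits between consecutive instances (wrapping around the end of a_tot). Function names and the example schedule are hypothetical.

```python
from typing import Optional


def schedule_distances(a_tot: list[Optional[str]], w: str) -> list[int]:
    """d_{i,k}: quanta waited between consecutive instances of w (cyclic)."""
    length = len(a_tot)
    pos = [p for p, x in enumerate(a_tot) if x == w]
    # (next - current - 1) mod length also covers the wrap-around gap
    return [(pos[(k + 1) % len(pos)] - pos[k] - 1) % length for k in range(len(pos))]


def node_properties(a_tot: list[Optional[str]]):
    """Per-workload (d_avg, d_max), plus the node's d_{x,avg} and d_{x,max}."""
    s_x = sorted({w for w in a_tot if w is not None})  # workloads on this node
    per_workload = {}
    for w in s_x:
        d = schedule_distances(a_tot, w)
        per_workload[w] = (sum(d) / len(d), max(d))
    d_x_avg = sum(avg for avg, _ in per_workload.values()) / len(s_x)
    d_x_max = max(mx for _, mx in per_workload.values())
    return per_workload, d_x_avg, d_x_max


if __name__ == "__main__":
    a_tot = ["w1", "w2", "w1", "w3"]
    per_workload, d_x_avg, d_x_max = node_properties(a_tot)
    print(per_workload)  # {'w1': (1.0, 1), 'w2': (3.0, 3), 'w3': (3.0, 3)}
    print(round(d_x_avg, 3), d_x_max)  # 2.333 3
```

Under the cyclic convention the gaps for each workload sum to len(a_tot) minus its instance count, so d_{i,avg} depends only on how many quanta w_i gets, while d_{i,max} also captures how evenly they are spread.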

Formulas for Schedule Allocation Properties
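One consistent way to write these properties down, offered as a reconstruction under stated assumptions rather than the original formulas: the total allocation a_tot at node n_x is L quanta long and repeats cyclically; w_i occupies K_i of those quanta at positions p_{i,1} < ... < p_{i,K_i}; d_{i,k} counts the quanta w_i waits between instance k and the next; q_i counts w_i's quanta in the basic allocation a, which is assumed to have no idle quanta.

```latex
\begin{aligned}
d_{i,k} &= p_{i,k+1} - p_{i,k} - 1 \quad (1 \le k < K_i), \qquad
d_{i,K_i} = p_{i,1} + L - p_{i,K_i} - 1 \\
d_{i,\mathrm{avg}} &= \frac{1}{K_i}\sum_{k=1}^{K_i} d_{i,k} = \frac{L - K_i}{K_i}, \qquad
d_{i,\max} = \max_{1 \le k \le K_i} d_{i,k} \\
d_{x,\mathrm{avg}} &= \frac{1}{|S_x|}\sum_{i \in S_x} d_{i,\mathrm{avg}}, \qquad
d_{x,\max} = \max_{i \in S_x} d_{i,\max} \\
Q_x &= \sum_{i \in S_x} q_i
\end{aligned}
```

The closed form for d_{i,avg} follows because the K_i gaps plus the K_i scheduled quanta tile the whole cycle, so the gaps sum to L - K_i.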

Possible Payment Scheme

Node is paid at most P credits for each scheduled time quantum

No credits for an uncoordinated schedule

For every cycle of time that a workload isn’t scheduled, payment decreases by c (c << P)

Node is fined F if it starves a workload for a period longer than Q_thr quanta

Using derived properties of schedule allocations, each node calculates its payments

Mechanism Design: Open Research Problem

Goal is to improve efficiency and fairness

But coordination is a hard optimization problem

Nodes compute their best allocations (through heuristics) using a payment scheme that rewards efficiency/fairness

Send valuations to central scheduler

General mechanism determines best global allocation

May be better suited only for central scheduler

Expected properties of a mechanism:

Nodes are players

No additional utility past payments?

Auctioned good may be a single allocation (a) or a total allocation (a_tot)

Tradeoff is ability to adapt to changing workloads vs. better assessment of efficient allocations over longer time
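As an illustration of the kind of heuristic nodes or the central scheduler might use, here is one hypothetical option, not a mechanism from the slides: in each timeslice, greedily admit workloads whose nodes are all still free, trying the longest-waiting workloads first (fairness) and breaking ties toward workloads that occupy fewer nodes (packing more coordinated workloads per slice). It assumes each node runs one workload per timeslice.

```python
def greedy_coordinated_schedule(placement: dict[int, set[str]], num_slices: int) -> list[set[int]]:
    """Hypothetical greedy heuristic: conflict-free workload sets per timeslice."""
    waited = {w: 0 for w in placement}  # timeslices since last fully coordinated
    schedule = []
    for _ in range(num_slices):
        busy: set[str] = set()
        chosen: set[int] = set()
        # Longest-waiting first; then fewest nodes; then lowest id for determinism.
        for w in sorted(placement, key=lambda w: (-waited[w], len(placement[w]), w)):
            if placement[w].isdisjoint(busy):  # all of w's nodes still free
                chosen.add(w)
                busy |= placement[w]
        for w in placement:
            waited[w] = 0 if w in chosen else waited[w] + 1
        schedule.append(chosen)
    return schedule


if __name__ == "__main__":
    placement = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "D"}, 4: {"A", "B", "D"}}
    print(greedy_coordinated_schedule(placement, 4))  # [{1, 3}, {2}, {4}, {1, 3}]
```

The first slice packs two workloads, and the waiting-time priority then guarantees that workloads 2 and 4, which conflict with almost everything, still get a fully coordinated slice within the cycle. Weighting waiting time against set size differently would move the schedule along the efficiency/fairness tradeoff.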

Conclusions

Distributed systems environments provide new applications for mechanism design

Model and analysis of two different distribution settings

Goals of better global performance, efficiency, fairness

Not always shared by individual nodes

Free workload assignment solved with VCG

Fixed workload assignment still open problem

Revenue model and goals of mechanism vary

Payment functions use derived allocation properties

Coordination of workloads is a hard optimization problem

Motivation for further research in related areas