TRUST AND SEMANTIC ATTACKS - II Class Notes: February 23, 2006

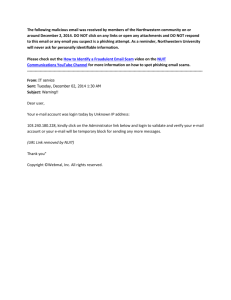

Lecturer: Ponnurangam Kumaraguru
Scribe: Ashley Brooks

SUMMARY OF PART 1

There is no single definition of trust; its meaning depends on the situation or problem. Many models of trust have been developed, but few have been evaluated. Both positive and negative antecedents can affect trust behavior.

SEMANTIC ATTACKS

Security attacks occur in waves: physical, syntactic, and semantic.

- Physical: e.g., cutting the network cable
- Syntactic: e.g., buffer overflows, DDoS
- Semantic: target the mental model of the user, that is, the way users assign meaning (e.g., phishing)

PHISHING (pronounced “fishing”)

Phishing employs social engineering and technical subterfuge to steal a user’s identity. Successful phishing depends on discrepancies between the system model and the user’s mental model. For example, a forged e-mail that falsely mimics a legitimate establishment is sent in an effort to get the recipient to divulge sensitive private information.

This is a growing problem: the number of phishing attacks has doubled since 2004. The US hosts the majority of phishing attacks. Phishing trends show that the largest number of phishing reports, sites, and campaigns are found in the month of December.

Phishing is not limited to email-based attacks. There are basically three types of phishing attack, depending on whether the attack uses human nature, technical exploits, or a combination of both. One example is spear phishing, which targets users with respect to the context in which they use the internet (e.g., Facebook, public auctions).

There are many ways to classify these attacks. At a high level, classification is based on lack of knowledge, visual deception, and bounded attention.

- Lack of knowledge: the user lacks knowledge of security and of security indicators.
- Visual deception: deceptive text and masking images or windows.
- Bounded attention: the user pays too little attention to security indicators.
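The gap between the system model and the user's mental model can be made concrete with deceptive URL text. The following is a minimal sketch, not something presented in the lecture: it uses Python's standard `urllib.parse.urlsplit` to show which host a URL actually points to, regardless of what the text appears to say. The domain `mybank.example`, the attacker host `example.net`, and the helper names `real_host` and `belongs_to` are all hypothetical and chosen only for illustration.

```python
from urllib.parse import urlsplit


def real_host(url: str) -> str:
    """Return the host the URL actually points to (the system model)."""
    # .hostname drops any "user@" prefix and any ":port" suffix.
    host = urlsplit(url).hostname
    return host or ""


def belongs_to(url: str, trusted_domain: str) -> bool:
    """True if the URL's host is the trusted domain or one of its subdomains."""
    host = real_host(url)
    return host == trusted_domain or host.endswith("." + trusted_domain)


# Hypothetical URLs; "mybank.example" stands in for a legitimate site.
urls = [
    "https://www.mybank.example/login",             # genuine
    "http://www.mybank.example@example.net/login",  # trusted name used as a "user" prefix
    "http://www.mybank.example.example.net/login",  # trusted name used as a subdomain label
]

for url in urls:
    print(real_host(url), belongs_to(url, "mybank.example"))
```

A reader skimming the second and third URLs sees the trusted name first, while the host that is actually contacted is `example.net` or a subdomain of it. The suffix check is deliberately naive; it only illustrates the mismatch and is not a complete defense.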
USER STUDIES

“Why Phishing Works”

Goal: To understand which attack strategies are successful and what proportion of users they fool.

Methodology: The authors analyzed a set of phishing attacks and developed a set of hypotheses about how users are deceived. The hypotheses were tested in a usability study in which 22 participants were shown 20 web sites and asked to determine which ones were fraudulent, and why.

Analysis: Good phishing websites fooled 90% of participants, that is, 90% of participants judged them to be legitimate. Participants were fooled by animations, deceptive text, stamps of security verification, and authentic-looking log-in areas. The existing anti-phishing browsing cues were found to be ineffective: 23% of participants in the study ignored the address bar, status bar, and security indicators. Participants seemed to proceed without hesitation when presented with warnings.

Conclusion: Vulnerability to phishing attacks results from a lack of knowledge of web fraud and from erroneous security knowledge.

Suggestions: Once users have reached a website, they are less likely to be able to distinguish the legitimate site from the spoof, because successful phishers present a web presence that causes the victim to overlook security measures. The fact that security indicators are ineffective suggests that alternative approaches are needed to help users distinguish between the legitimate and the spoofed. The question for anti-phishing designers is whether it would be easier to teach users to detect a spoofed email or a spoofed website.

“Do Security Toolbars Actually Prevent Phishing Attacks?”

Goal: To evaluate whether security toolbars actually prevent users from being tricked into providing personal information.
Methodology: User studies were performed to determine which attacks were more effective than others and to evaluate the security toolbar approach to fighting phishing.

Tested toolbars:

- SPOOFSTICK – displays the real domain name in a customized color and size, to expose obscured domain names.
- NETCRAFT – detects phishing sites by displaying the domain registration date, hosting name and country, and popularity among other users; phishing sites are short-lived.
- TRUSTBAR – makes secure connections more visible by displaying the logos of the website and its certificate authority.
- EBAY ACCOUNT GUARD – green indicates approved eBay sites, red indicates blacklisted eBay sites, and gray indicates all others.
- SPOOFGUARD – uses heuristics to calculate a spoof score, which is translated into colored icons that indicate the safety of the site.

Analysis: Even after receiving notification from the toolbars, 34% of users disregarded or explained away the toolbars’ warnings when the content of the web page looked legitimate, and still provided information. 25% failed to notice the toolbars despite being asked to pay attention to them.

Conclusion: All of the toolbars are likely to fail at preventing users from being spoofed by high-quality phishing attacks. Users rely on web content to decide whether a site is authentic or phishing, since maintaining security is not the user’s primary goal.

Suggestions: Users don’t respond to suspicious signs coming from the indicators, because the warnings are unclear or the user’s own rationale dismisses them. The suggested solution is therefore to combine active interruptions (pop-ups) with tutorials. These methods are considered most effective, and they allow anti-phishing designers to increase the usability of the security mechanism while respecting the user’s intentions.

ACTIVITY

The task was to design system specifications to train one of four user types about phishing attacks and help them make trust-based decisions. Four user types were identified.
Each was classified by knowledge of computer security and relative vulnerability to phishing attacks. The level of vulnerability depended on the user’s susceptibility to injury from an attack. Most groups suggested increasing the user’s knowledge. For users with higher vulnerability, groups suggested more noticeable warnings that would alert them to the risk of phishing websites and emails.
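To make the toolbar designs from the second study more concrete, here is a minimal sketch of two indicator styles described in these notes: a list-based indicator (green for approved, red for blacklisted, gray for others) and a heuristic spoof score translated into a colored icon. This is not the actual logic of any of the tested toolbars. The `SiteInfo` fields, the point weights, the thresholds, and the green/yellow/red mapping for the score are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SiteInfo:
    """Hypothetical facts a toolbar might collect about the current page."""
    host: str
    domain_age_days: int
    uses_https: bool
    asks_for_password: bool


def list_indicator(host: str, approved: set, blacklisted: set) -> str:
    """List-based indicator: green = approved, red = blacklisted, gray = other."""
    if host in blacklisted:
        return "red"
    if host in approved:
        return "green"
    return "gray"


def spoof_score(site: SiteInfo) -> int:
    """Heuristic score from 0 (looks safe) to 100 (looks spoofed).

    The weights are made up; a real toolbar would tune its own heuristics.
    """
    score = 0
    if site.domain_age_days < 30:  # phishing sites tend to be short-lived
        score += 40
    if not site.uses_https:
        score += 20
    if site.asks_for_password and not site.uses_https:
        score += 40
    return score


def score_indicator(score: int) -> str:
    """Translate a spoof score into an icon color (assumed thresholds)."""
    if score >= 70:
        return "red"
    if score >= 30:
        return "yellow"
    return "green"


site = SiteInfo("login.example.net", domain_age_days=3,
                uses_https=False, asks_for_password=True)
print(list_indicator(site.host, approved={"www.example.com"}, blacklisted=set()))
print(spoof_score(site), score_indicator(spoof_score(site)))
```

Both functions produce only a passive color cue. The study's finding was that users often overlook or rationalize away exactly this kind of cue, which is why the notes suggest active interruptions instead.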