Learning from data with graphical models Padhraic Smyth © Information and Computer Science

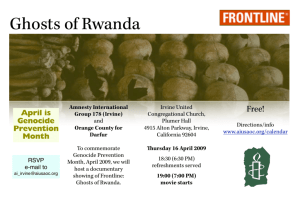


Learning from data with graphical models

Padhraic Smyth ©

Information and Computer Science

University of California, Irvine

www.datalab.uci.edu

© P. Smyth: UC Irvine: Graphical Models: 2004: 1

Outline

• Graphical models

– A general language for specification and computation with probabilistic models

• Learning graphical models from data

– EM algorithm, Bayesian learning, etc

• Applications

– Learning topics from documents

– Discovering clusters of curves

– Other work…


The Data Revolution

• Technological Advances in past 20 years

– Sensors

– Storage

– Computational power

– Databases and indexing

– Machine learning

• Science and engineering are increasingly data driven


Examples of Digital Data Sets

• The Web: > 4.3 billion pages

– Link graph

– Text on Web pages

• Genomic Data

– Sequence = 3 billion base pairs (human)

– 25k genes: expression, location, networks….

• Sloan Digital Sky Survey

– 15 terabytes

– ~500 million sky objects

• Earth Sciences

– NASA MODIS satellite

– Entire Earth at 15m to 250m resolution every day

– 37 spectral bands


Questions of Interest

• can we detect significant change?

• identify, classify, and catalog certain phenomena?

• segment/cluster pixels into land cover types?

• measure and predict seasonal variation?

• And so on….

Previously

• scientists did much of this work by hand

But now….

• automated algorithms are essential


Learning from Data

• Uncertainty abounds….

– Measurement error

– Unobserved phenomena

– Model and parameter uncertainty

– Forecasting and prediction

• Probability is the “language of uncertainty”

– Significant shift in computer science towards probabilistic models in recent years


Preliminaries

• Probability

• X = x : statement about the world (true or false)

• P(X=x) : degree of belief that the world is in state X=x

• Set of variables $U = \{X_1, X_2, \ldots, X_N\}$

• Joint Probability Distribution:

$P(U) = p(X_1, X_2, \ldots, X_N)$

P(U) is a table of $K \times K \times \cdots \times K = K^N$ numbers

(say all variables take K values)


Conditional Probabilities

• Many problems of interest involve computing conditional

probabilities

– Prediction

$P(X_{N+1} \mid x_N, \ldots, x_1)$

– Classification/Diagnosis/Detection

$\arg\max \{ P(Y \mid x_1, \ldots, x_N) \}$

– Learning

$P(\theta \mid x_1, \ldots, x_N)$

• Note:

– Computing $P(X_{N+1} \mid X_1 = x)$ has time complexity $O(K^N)$


Two Problems

• Problem 1: Computational Complexity

– Inference computations scale as $O(K^N)$

• Problem 2: Model Specification

– To specify p(U) we need a table of $K^N$ numbers

– Where do these numbers come from?


A very brief history….

• Problems recognized by 1970’s, 80’s in artificial intelligence (AI)

– E.g., see early AI work in constructing diagnostic reasoning systems:

• e.g., medical diagnosis, N=100 different symptoms

– 1970’s/80’s Solutions?

• invent new formalisms

– Certainty factors

– Fuzzy logic

– Non-monotonic logic

– 1985: “In defense of probability”, P. Cheeseman, IJCAI 85

– 1990’s: marriage of statistics and computer science


Two Key Ideas

• Problem 1: Computational Complexity

– Idea:

• Represent dependency structure as a graph and exploit

sparseness in computation

• Problem 2: Model Specification

– Idea:

• learn models from data using statistical learning principles


“…probability theory is more fundamentally

concerned with the structure of reasoning

and causation than with numbers.”

Glenn Shafer and Judea Pearl

Introduction to Readings in Uncertain Reasoning,

Morgan Kaufmann, 1990


Graphical Models

• Dependency structure encoded by an acyclic directed graph

– Node <-> random variable

– Edges encode dependencies

• Absence of edge -> conditional independence

– Directed and undirected versions

• Why is this useful?

– A language for communication

– A language for computation

• Origins:

– Wright 1920’s

– 1988

• Spiegelhalter and Lauritzen in statistics

• Pearl in computer science

– Aka Bayesian networks, belief networks, causal networks, etc


Examples of 3-way Graphical Models

[Figure: A → B, A → C]

Conditionally independent effects:

$p(A,B,C) = p(B \mid A)\, p(C \mid A)\, p(A)$

B and C are conditionally independent given A

[Figure: A → C ← B]

Independent causes:

$p(A,B,C) = p(C \mid A,B)\, p(A)\, p(B)$

[Figure: A → B → C]

Markov dependence:

$p(A,B,C) = p(C \mid B)\, p(B \mid A)\, p(A)$

Directed Graphical Models

[Figure: A → C ← B]

$p(A,B,C) = p(C \mid A,B)\, p(A)\, p(B)$

In general,

$p(X_1, X_2, \ldots, X_N) = \prod_{i=1}^{N} p(X_i \mid \mathrm{parents}(X_i))$
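As a rough illustration of the factorization above, the following sketch builds the joint table for the three-node "independent causes" graph from its local tables. The probability values are invented for illustration and are not taken from the slides; the parameter counts assume binary variables.

```python
import numpy as np

K = 2  # assumption: every variable is binary in this toy example

# Hypothetical conditional probability tables (values invented for illustration)
p_A = np.array([0.7, 0.3])                      # p(A)
p_B = np.array([0.9, 0.1])                      # p(B)
p_C_AB = np.array([[[0.99, 0.01],               # p(C | A=0, B=0)
                    [0.40, 0.60]],              # p(C | A=0, B=1)
                   [[0.30, 0.70],               # p(C | A=1, B=0)
                    [0.05, 0.95]]])             # p(C | A=1, B=1); indexed [a, b, c]

# joint[a, b, c] = p(a) p(b) p(c | a, b)
joint = p_A[:, None, None] * p_B[None, :, None] * p_C_AB

# Free parameters: full joint table versus the factored form
n_full = K**3 - 1
n_factored = (K - 1) + (K - 1) + K * K * (K - 1)
print(joint.sum(), n_full, n_factored)
```

With only three variables the saving is small; the gap between the full table and the factored form grows quickly as more variables with few parents are added.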

Example

[Figure: directed graph over nodes A, B, C, D, E, F, G]

Say we want to compute p(a | c, g)

Direct calculation: $p(a \mid c, g) = \sum_{b,d,e,f} p(a, b, d, e, f \mid c, g)$

Complexity of the sum is $O(K^4)$

Reordering:

$\sum_b p(a \mid b) \sum_d p(b \mid d, c) \sum_e p(d \mid e) \sum_f p(e, f \mid g)$

$\sum_f p(e, f \mid g) = p(e \mid g)$, leaving $\sum_b p(a \mid b) \sum_d p(b \mid d, c) \sum_e p(d \mid e)\, p(e \mid g)$

$\sum_e p(d \mid e)\, p(e \mid g) = p(d \mid g)$, leaving $\sum_b p(a \mid b) \sum_d p(b \mid d, c)\, p(d \mid g)$

$\sum_d p(b \mid d, c)\, p(d \mid g) = p(b \mid c, g)$, leaving $\sum_b p(a \mid b)\, p(b \mid c, g)$

$\sum_b p(a \mid b)\, p(b \mid c, g) = p(a \mid c, g)$

Complexity is O(K), compared to $O(K^4)$
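The reordering can be checked numerically. The sketch below assumes the factorization implied by the reordered sum, p(a,b,d,e,f | c,g) = p(a|b) p(b|d,c) p(d|e) p(e,f|g), fills the tables with random values (hypothetical, for illustration only), and compares the brute-force sum with the step-by-step elimination.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)
K = 3  # assumption: every variable takes K values

def random_cpt(*shape):
    """Random table normalised over axis 0 (the child variable)."""
    t = rng.random(shape)
    return t / t.sum(axis=0, keepdims=True)

p_a_b = random_cpt(K, K)       # p(a | b), indexed [a, b]
p_b_dc = random_cpt(K, K, K)   # p(b | d, c), indexed [b, d, c]
p_d_e = random_cpt(K, K)       # p(d | e), indexed [d, e]
t = rng.random((K, K, K))
p_ef_g = t / t.sum(axis=(0, 1), keepdims=True)  # p(e, f | g), indexed [e, f, g]

c, g = 1, 2  # observed values (arbitrary choice)

def brute_force():
    """Sum the full product over b, d, e, f for every value of a."""
    out = np.zeros(K)
    for a, b, d, e, f in itertools.product(range(K), repeat=5):
        out[a] += p_a_b[a, b] * p_b_dc[b, d, c] * p_d_e[d, e] * p_ef_g[e, f, g]
    return out

def eliminate():
    """Push each sum inward, one variable at a time."""
    p_e_g = p_ef_g[:, :, g].sum(axis=1)    # sum over f -> p(e | g)
    p_d_g = p_d_e @ p_e_g                  # sum over e -> p(d | g)
    p_b_cg = p_b_dc[:, :, c] @ p_d_g       # sum over d -> p(b | c, g)
    return p_a_b @ p_b_cg                  # sum over b -> p(a | c, g)

print(np.allclose(brute_force(), eliminate()))
```

Each elimination step touches only a small table, which is the source of the saving over the nested sum.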

A More General Algorithm

• Message Passing (MP) Algorithm

– Pearl, 1988; Lauritzen and Spiegelhalter, 1988

– Declare 1 node (any node) to be a root

– Schedule two phases of message-passing

• nodes pass messages up to the root

• messages are distributed back to the leaves

– In time O(N), we can compute any probability of interest

Sketch of the MP algorithm in action

[Figure sequence: messages numbered 1 to 4 are passed in order between nodes of the graph]

Complexity of the MP Algorithm

• Efficient

– Complexity scales as $O(N K^m)$

• N = number of variables

• K = arity of variables

• m = maximum number of parents for any node

– Compare to $O(K^N)$ for brute-force method


Graphs with “loops”

[Figure: directed graph over nodes A, B, C, D, E, F, G containing a loop]

Message passing algorithm does not work when there are multiple paths between 2 nodes

General approach: “cluster” variables together to convert graph to a tree

Junction Tree

[Figure: tree over nodes D, (B, E), A, C, F, G, with B and E merged into a single cluster node]

Good news: can perform MP algorithm on this tree

Bad news: complexity is now $O(K^2)$

Probability Calculations on Graphs

• Structure of the graph reveals

– Computational strategy

• Sparser graphs -> faster computation

– Dependency relations

• Automated computation of conditional probabilities

– i.e., a fully general algorithm for arbitrary graphs

– exact vs. approximate answers

• Extensions

– For continuous variables:

• replace sum with integral

– For identification of most likely values

• Replace sum with max operator

– Conditional probability tables can be approximated


Hidden or Latent Variables

• In many applications there are 2 sets of variables:

– Variables whose values we can directly measure

– Variables that are “hidden”, cannot be measured

• Examples:

– Speech recognition:

• Observed: acoustic voice signal

• Hidden: label of the word spoken

– Face tracking in images

• Observed: pixel intensities

• Hidden: position of the face in the image

– Text modeling

• Observed: counts of words in a document

• Hidden: topics that the document is about


Mixture Models

[Figure: S → Y]

S: hidden discrete variable

Y: observed variable(s)

A Graphical Model for Clustering

[Figure: S → Y1, …, Yj, …, Yd]

S: hidden discrete (cluster) variable

Y1, …, Yd: observed variable(s) (assumed conditionally independent given S)

Clusters = p(Y1, …, Yd | S = s)

Clustering = learning these probability distributions from data

Mixtures of Markov Chains

[Figure: hidden variable S together with the observed sequence Y1, Y2, Y3, …, YN]

Can model sets of sequences as coming from K different Markov chains

Provides a useful method for clustering sequences

Cadez, Heckerman, Meek, Smyth, 2003

• used to cluster 1 million Web user navigation patterns

• algorithm is part of SQL Server 2005
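A minimal sketch of how a sequence could be assigned to one of K Markov chains, assuming the mixture weights and the chains' initial and transition probabilities are already known. The two chains below ("sticky" and "alternating") and all function names are hypothetical; this is not the implementation used in the cited work.

```python
import numpy as np

def sequence_log_lik(seq, init, trans):
    """log p(seq) under a single first-order Markov chain."""
    ll = np.log(init[seq[0]])
    for prev, nxt in zip(seq[:-1], seq[1:]):
        ll += np.log(trans[prev, nxt])
    return ll

def cluster_posterior(seq, weights, inits, transs):
    """p(S = k | seq) for a mixture of Markov chains, computed in log space."""
    log_post = np.array([np.log(w) + sequence_log_lik(seq, i, t)
                         for w, i, t in zip(weights, inits, transs)])
    log_post -= log_post.max()          # guard against underflow
    post = np.exp(log_post)
    return post / post.sum()

# Two hypothetical chains over 2 states: one tends to stay, one tends to switch
weights = np.array([0.5, 0.5])
inits = [np.array([0.5, 0.5]), np.array([0.5, 0.5])]
transs = [np.array([[0.9, 0.1], [0.1, 0.9]]),
          np.array([[0.1, 0.9], [0.9, 0.1]])]

post_sticky = cluster_posterior([0, 0, 0, 0, 1, 1, 1], weights, inits, transs)
post_alt = cluster_posterior([0, 1, 0, 1, 0, 1, 0], weights, inits, transs)
print(post_sticky, post_alt)
```

Sequences of different lengths are handled without any padding, since each sequence contributes its own likelihood under each chain.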

Hidden Markov Model (HMM)

[Figure: observed variables Y1, Y2, Y3, …, YN above a chain of hidden states S1, S2, S3, …, SN]

Two key conditional independence assumptions

Widely used in: speech recognition, protein models, error-correcting codes

Comments:

- inference about S given Y is O(N)

- if S is continuous, we have a Kalman filter
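The O(N) inference mentioned above can be sketched with the standard forward recursion for a discrete HMM. The parameter values and the names `pi`, `A`, `B` are assumptions made for this example; rescaling at each step is one common way to avoid underflow on long sequences.

```python
import numpy as np

def forward(pi, A, B, obs):
    """Return filtered posteriors p(S_t | y_1..y_t) and log p(y_1..y_N).

    pi[s]    : initial state probabilities
    A[s, s'] : transition probabilities p(S_{t+1} = s' | S_t = s)
    B[s, y]  : emission probabilities p(Y_t = y | S_t = s)
    """
    alpha = pi * B[:, obs[0]]
    log_lik = np.log(alpha.sum())
    alpha = alpha / alpha.sum()
    filtered = [alpha]
    for y in obs[1:]:
        alpha = (alpha @ A) * B[:, y]   # predict one step, then weight by evidence
        log_lik += np.log(alpha.sum())
        alpha = alpha / alpha.sum()     # rescale so the recursion stays stable
        filtered.append(alpha)
    return np.array(filtered), log_lik

# Hypothetical 2-state, 2-symbol model
pi = np.array([0.6, 0.4])
A = np.array([[0.7, 0.3], [0.2, 0.8]])
B = np.array([[0.9, 0.1], [0.3, 0.7]])

filtered, log_lik = forward(pi, A, B, [0, 1, 1])
print(filtered, log_lik)
```

The loop does a fixed amount of work per time step, so the cost grows linearly with the sequence length N.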

Generalized HMMs

[Figure: HMM with hidden states S1, S2, S3, …, Sn and observations Y1, Y2, Y3, …, Yn, extended with additional variables I1, I2, I3, …, In]

Learning from Data

[Figure: a Probabilistic Model is linked to Real World Data by P(Data | Parameters); learning runs in the other direction, from the data back to the model, via P(Parameters | Data)]

Parameters and Data

[Figure: model parameters θ → y1, y2, y3, y4]

Data = {y1, …, y4}

Data observations are assumed IID here

Plate Notation

[Figure: θ → y1, y2, y3, …, yn, drawn equivalently as θ → yi inside a plate labeled i = 1:n]

Maximum Likelihood

[Figure: model parameters θ → yi, plate i = 1:n]

Data = {y1, …, yn}

$\mathrm{Likelihood}(\theta) = p(\mathrm{Data} \mid \theta) = \prod_{i=1}^{n} p(y_i \mid \theta)$

Maximum Likelihood:

$\theta_{ML} = \arg\max_{\theta} \{ \mathrm{Likelihood}(\theta) \}$
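As a small worked case of maximum likelihood, the sketch below assumes the observations are IID Gaussian, so that θ = (μ, σ) and the maximizer has a closed form (sample mean and 1/n standard deviation). The simulated data and its true parameters are made up for the example.

```python
import numpy as np

def gaussian_log_lik(y, mu, sigma):
    """log of prod_i p(y_i | mu, sigma) for IID Gaussian data."""
    return np.sum(-0.5 * np.log(2 * np.pi * sigma**2)
                  - (y - mu)**2 / (2 * sigma**2))

rng = np.random.default_rng(0)
y = rng.normal(loc=3.0, scale=2.0, size=500)   # simulated data (assumption)

mu_ml = y.mean()
sigma_ml = y.std()   # np.std uses 1/n by default, which is the ML estimate

best = gaussian_log_lik(y, mu_ml, sigma_ml)
print(mu_ml, sigma_ml, best)
```

Perturbing either estimate lowers the log-likelihood, which is a quick sanity check that the closed-form values sit at the maximum.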

Being Bayesian

[Figure: α → θ → yi, plate i = 1:n]

Prior(θ) = p(θ | α)

Bayesian Learning:

p(θ | evidence) = p(θ | data, prior)
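One of the simplest concrete instances of p(θ | data, prior) is a Bernoulli parameter with a Beta prior, where the posterior is again a Beta distribution. This conjugate pair is chosen here purely for illustration; the slides do not specify a particular model, and the prior values and data are invented.

```python
def beta_bernoulli_posterior(a, b, data):
    """Posterior Beta(a', b') for a Bernoulli parameter under a Beta(a, b) prior."""
    ones = sum(data)
    zeros = len(data) - ones
    return a + ones, b + zeros

data = [1, 1, 0, 1, 1, 1, 0, 1]          # hypothetical binary observations
a_post, b_post = beta_bernoulli_posterior(2.0, 2.0, data)

posterior_mean = a_post / (a_post + b_post)
ml_estimate = sum(data) / len(data)
print(a_post, b_post, posterior_mean, ml_estimate)
```

The posterior mean is pulled from the maximum likelihood estimate toward the prior mean of 0.5, and the pull weakens as more data arrive.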

Learning = Inference in a Graph

[Figure: α → θ → yi, plate i = 1:n]

θ is unknown

Learning = process of computing p(θ | y’s, prior)

Information “flows” from green nodes to θ node

Example: Gaussian Model

[Figure: plate diagram with nodes α, σ, μ, β and observations yi, i = 1:n]

Note: priors and parameters are assumed independent here

Example: Bayesian Regression

[Figure: plate diagram with nodes α, θ, σ, β, inputs xi and outputs yi, i = 1:n]

Model:

$y_i = f[x_i; \theta] + e, \quad e \sim N(0, \sigma^2)$

$p(y_i \mid x_i) \sim N(f[x_i; \theta], \sigma^2)$
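For a concrete case of the regression model above, the sketch below makes three assumptions that the slide does not state: f is linear in θ, the noise level σ is known, and the prior on θ is a zero-mean Gaussian with precision `alpha`. Under those assumptions the posterior over θ is Gaussian with a closed form.

```python
import numpy as np

def posterior(X, y, sigma, alpha):
    """Gaussian posterior over weights theta for y = X @ theta + noise.

    Assumes prior theta ~ N(0, I / alpha) and known noise std sigma.
    """
    d = X.shape[1]
    precision = alpha * np.eye(d) + X.T @ X / sigma**2
    cov = np.linalg.inv(precision)
    mean = cov @ X.T @ y / sigma**2
    return mean, cov

rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 30)
X = np.column_stack([np.ones_like(x), x])        # features: intercept and slope
sigma = 0.5
y = 1.0 + 2.0 * x + rng.normal(0.0, sigma, size=x.shape)   # simulated data

mean, cov = posterior(X, y, sigma, alpha=10.0)
print(mean, np.sqrt(np.diag(cov)))
```

With a very weak prior the posterior mean approaches the least-squares fit; a stronger prior shrinks the weights toward zero.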

Learning with Hidden Variables

[Figure: plate diagram with parameters θ, hidden variable Si and observation yi, i = 1:n]

Finding θ that maximizes L(θ) is difficult

-> use iterative optimization algorithms

Learning with Hidden Variables

• Guess at some initial parameters $\theta_0$

• E-step (inference in a graph)

– For each case, and each unknown variable compute

$p(S \mid \text{known data}, \theta_0)$

• M-step: (optimization)

– Maximize L(θ) using p(S | ….. )

– This yields new parameter estimates $\theta_1$

• This is the EM algorithm:

– Guaranteed to converge to a (local) maximum of L(θ)
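The E-step and M-step can be sketched for a one-dimensional Gaussian mixture. The simulated data, the starting values, and the function names are assumptions made for the example; the log-likelihood trace is recorded so that the non-decreasing behaviour of EM can be inspected.

```python
import numpy as np

def normal_pdf(y, mu, sigma):
    return np.exp(-0.5 * ((y - mu) / sigma)**2) / (sigma * np.sqrt(2 * np.pi))

def em_gmm_1d(y, mu, sigma, w, n_iter=50):
    """EM for a K-component 1-d Gaussian mixture; returns parameters and log-lik trace."""
    mu, sigma, w = (np.array(v, dtype=float) for v in (mu, sigma, w))
    trace = []
    for _ in range(n_iter):
        # E-step: membership probabilities p(S_i = k | y_i, current parameters)
        joint = w * normal_pdf(y[:, None], mu, sigma)        # shape (n, K)
        trace.append(np.log(joint.sum(axis=1)).sum())        # log-lik before update
        resp = joint / joint.sum(axis=1, keepdims=True)
        # M-step: weighted maximum likelihood updates
        nk = resp.sum(axis=0)
        w = nk / len(y)
        mu = (resp * y[:, None]).sum(axis=0) / nk
        sigma = np.sqrt((resp * (y[:, None] - mu)**2).sum(axis=0) / nk)
    return mu, sigma, w, trace

rng = np.random.default_rng(1)
y = np.concatenate([rng.normal(0.0, 1.0, 300), rng.normal(5.0, 1.0, 200)])

mu, sigma, w, trace = em_gmm_1d(y, mu=[1.0, 2.0], sigma=[1.0, 1.0], w=[0.5, 0.5])
print(mu, sigma, w)
```

Different starting values can lead to different local maxima, so in practice EM is often run from several initializations.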

Mixture Model

[Figure sequence: plate diagram with θ, Si and yi (i = 1:n), shown alternating between the E-Step and the M-Step]

ANEMIA PATIENTS AND CONTROLS

[Figure: scatter plot of Red Blood Cell Hemoglobin Concentration against Red Blood Cell Volume]

Data from Prof. Christine McLaren, Dept of Epidemiology, UC Irvine

Mixture Model

[Figure: plate diagram with θ, hidden cluster variable Ci and observation yi, i = 1:n]

Two hidden clusters: C = 1 and C = 2

P(y | C = k) is Gaussian

Model for y = mixture of two 2d-Gaussians

[Figure sequence: EM ITERATION 1, 3, 5, 10, 15 and 25; each panel plots Red Blood Cell Hemoglobin Concentration against Red Blood Cell Volume]

ANEMIA DATA WITH LABELS

[Figure: scatter plot of Red Blood Cell Hemoglobin Concentration against Red Blood Cell Volume, with the Control Group and the Anemia Group labeled]

Application 1: Probabilistic Topic Modeling in Text Documents

Collaborators:

Mark Steyvers, UC Irvine

Michal Rosen-Zvi, UC Irvine

Tom Griffiths, Stanford/MIT


The slides on author-topic modeling are in a separate Powerpoint file (with author-topic in the title)

Application 2: Modeling Sets of Curves

Collaborators:

Scott Gaffney, Dasha Chudova, UC Irvine,

Andy Robertson, Suzana Camargo, Columbia University


Graphical Models for Curves

Data = { (y1, t1), …, (yT, tT) }

[Figure: plate diagram with parameters θ, input t and output y]

$y = f(t; \theta)$

e.g., $y = a t^2 + b t + c$, $\theta = \{a, b, c\}$

Graphical Models for Curves

[Figure: plate diagram with parameters θ and σ, input t and output y, plate over T points]

y ~ Gaussian density with mean = $f(t; \theta)$, variance = $\sigma^2$
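Under the Gaussian noise model above, maximizing the likelihood over θ is equivalent to least squares. The sketch below fits a quadratic to simulated points; the true coefficients, noise level, and time grid are invented for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 50)
true_theta = np.array([0.5, -2.0, 1.0])     # hypothetical a, b, c
sigma = 0.3
y = np.polyval(true_theta, t) + rng.normal(0.0, sigma, size=t.shape)

# Least-squares fit of y = a t^2 + b t + c (the ML estimate under Gaussian noise)
theta_hat = np.polyfit(t, y, deg=2)

# ML estimate of the noise standard deviation from the residuals (1/T version)
resid = y - np.polyval(theta_hat, t)
sigma_hat = np.sqrt(np.mean(resid**2))
print(theta_hat, sigma_hat)
```

The fitted curve plays the role of the hidden function f(t; θ), recovered from noisy observations.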

Example

[Figure: observed points y plotted against t, with the underlying curve f(t; θ) overlaid]

f(t; θ) <- this is hidden

Sets of Curves

[Figure: TIME-COURSE GENE EXPRESSION DATA; normalized log-ratio of intensity against time (7-minute increments); Yeast Cell-Cycle Data, Spellman et al (1998)]

Clustering “non-vector” data

• Challenges with the data….

– May be of different “lengths”, “sizes”, etc

– Not easily representable in vector spaces

– Distance is not naturally defined a priori

• Possible approaches

– “convert” into a fixed-dimensional vector space

• Apply standard vector clustering – but loses information

– use hierarchical clustering

• But $O(N^2)$ and requires a distance measure

– probabilistic clustering with mixtures

• Define a generative mixture model for the data

• Learn distance and clustering simultaneously


Graphical Models for Sets of Curves

[Figure: plate diagram with θ, σ, t and y; inner plate over T points, outer plate over N curves]

Each curve: P(yi | ti, θ) = product of Gaussians

Curve-Specific Transformations

[Figure: plate diagram with θ, σ, t, y and a curve-specific shift α; inner plate over T points, outer plate over N curves]

e.g., $E[y_i] = a t^2 + b t + c + \alpha_i$, $\theta = \{a, b, c, \alpha_1, \ldots, \alpha_N\}$

Note: we can learn function parameters and shifts simultaneously with EM

Learning Shapes and Shifts

Data = smoothed growth acceleration data from teenagers

EM used to learn a spline model + time-shift for each curve

[Figure: two panels, “Original data” and “Data after Learning”]

Clustering: Mixtures of Curves

[Figure: plate diagram with θ, cluster variable c, t, α, σ and y; inner plate over T points, outer plate over N curves]

Each set of trajectory points comes from 1 of K models

Model for group k is a Gaussian curve model

Marginal probability for a trajectory = mixture model
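A sketch of the membership computation for a mixture of curves, assuming each cluster is a polynomial mean curve with Gaussian noise and that the cluster parameters are already known. Curve-specific shifts are left out for brevity, and the two clusters and the toy trajectories are invented for illustration.

```python
import numpy as np

def curve_log_lik(t, y, theta, sigma):
    """log p(y | t, theta, sigma): product of Gaussians around the polynomial f(t; theta)."""
    resid = y - np.polyval(theta, t)
    return np.sum(-0.5 * np.log(2 * np.pi * sigma**2) - resid**2 / (2 * sigma**2))

def membership(t, y, weights, thetas, sigmas):
    """p(cluster = k | whole curve) under a mixture of curve models."""
    log_p = np.array([np.log(w) + curve_log_lik(t, y, th, s)
                      for w, th, s in zip(weights, thetas, sigmas)])
    log_p -= log_p.max()                # guard against underflow
    p = np.exp(log_p)
    return p / p.sum()

# Two hypothetical clusters: a rising line and a falling line
weights = [0.5, 0.5]
thetas = [np.array([1.0, 0.0]), np.array([-1.0, 0.0])]
sigmas = [0.5, 0.5]

# Curves may have different lengths and sampling times
t_short, y_short = np.array([0.0, 1.0, 2.0]), np.array([0.1, 0.9, 2.2])
t_long = np.linspace(0.0, 4.0, 9)
y_long = -t_long + 0.05

m_short = membership(t_short, y_short, weights, thetas, sigmas)
m_long = membership(t_long, y_long, weights, thetas, sigmas)
print(m_short, m_long)
```

In a full EM procedure these memberships would form the E-step, followed by weighted regression fits for each cluster in the M-step.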

Clustering Methodology

• Mixtures of polynomials and splines

– model data as mixtures of noisy regression models

– 2d (x,y) position as a function of time

– use the model as a first-order approximation for clustering

• Compare to vector-based clustering...

– provides a quantitative (e.g., predictive) model

– can handle

• variable-length trajectories

• missing measurements

• background/outlier process

• coupling of other “features” (e.g., intensity)


Winter Storm Tracks

• Highly damaging weather

• Important water source

• Climate change implications

Data

– Sea-level pressure on a global grid

– Four times a day, every 6 hours, over 30 years

[Figure: sea-level pressure map; blue indicates low pressure]

Clusters of Trajectories

[Figure]

Cluster Shapes for Pacific Cyclones

[Figure]

TROPICAL CYCLONES Western North Pacific 1983-2002

[Figure]

Hierarchical Bayesian Models

[Figure: plate diagram with nodes c, θi, t, α, π, σ and y; inner plate over T points, outer plate over N curves]

Each curve is allowed to have its own parameters

-> “clustering in parameter space”

Summary

• Graphical Models

– Representation language for complex probabilistic models

– Provide a systematic framework for

• Model description

• Probabilistic computation

– General framework for learning from data

• Applications

– author-topic models for text data

– mixture of regressions for sets of curves

– …. many more

• Extensions

– Hierarchical Bayesian models

– Spatio-temporal models

– ……


Further Information

• Papers online at www.datalab.uci.edu

– Graphical models

• Smyth, Heckerman, Jordan, 1997, Neural Computation

– Topic models

• Steyvers et al, ACM SIGKDD 2004

• Rosen-Zvi et al, UAI 2004

– Curve clustering

• Gaffney and Smyth, NIPS 2004

• Scott Gaffney, PhD thesis, UCI 2004

• Author-Topic Browser: www.datalab.uci.edu/author-topic

– JAVA demo of online browser

– additional tables and results