Performance And Power Benchmarking Khushboo Sheth Department of Electrical and Computer Engineering

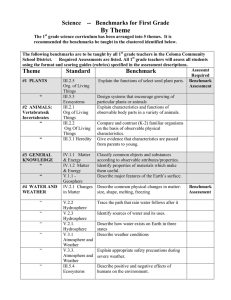

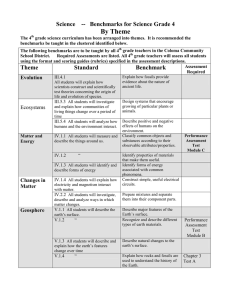

10/24/05 ELEC6500

Performance

Performance is improved by:

- Reducing response time (execution time): the time between the start and the completion of a task. This is the total time required for the computer to complete a task, including disk accesses, memory accesses, I/O activities, operating system overhead, CPU execution time, etc.
- Increasing throughput: the total amount of work done in a given time.

The relation between performance and execution time for a computer X is

$$\text{Performance}_X = \frac{1}{\text{Execution time}_X}$$

If computer X is n times faster than computer Y, then the execution time on Y is n times longer than it is on X:

$$\frac{\text{Performance}_X}{\text{Performance}_Y} = \frac{\text{Execution time}_Y}{\text{Execution time}_X} = n$$

Execution Time

- Elapsed time: total time to complete a task, including disk accesses, memory accesses, I/O activities, operating system overhead, etc.
- CPU time: the time the CPU spends computing for the task, not including time spent waiting for I/O or running other programs. (Response time is the elapsed time, not the CPU time.)
  - User CPU time: the CPU time spent in the program.
  - System CPU time: the CPU time spent in the operating system performing tasks on behalf of the program.

Computing CPU Execution Time

Computers are constructed using a clock that runs at a constant rate and determines when events take place in the hardware. These discrete time intervals are called clock cycles. The clock rate is the inverse of the clock period.
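The relations introduced so far (performance as the reciprocal of execution time, the "n times faster" ratio, and clock period as the inverse of clock rate) can be sketched in a few lines of Python. This is only an illustration: the function names and the sample numbers are hypothetical and do not come from the slides.

```python
def performance(execution_time_s: float) -> float:
    """Performance is the reciprocal of execution time."""
    if execution_time_s <= 0:
        raise ValueError("execution time must be positive")
    return 1.0 / execution_time_s


def times_faster(exec_time_x_s: float, exec_time_y_s: float) -> float:
    """Return n such that X is n times faster than Y.

    n = Performance_X / Performance_Y = Execution time_Y / Execution time_X
    """
    return performance(exec_time_x_s) / performance(exec_time_y_s)


def clock_period_s(clock_rate_hz: float) -> float:
    """Clock period (seconds per cycle) is the inverse of the clock rate (Hz)."""
    if clock_rate_hz <= 0:
        raise ValueError("clock rate must be positive")
    return 1.0 / clock_rate_hz


if __name__ == "__main__":
    # Hypothetical measurements: X finishes the task in 10 s, Y in 15 s.
    print("X is", times_faster(10.0, 15.0), "times faster than Y")
    # Hypothetical 2 GHz clock.
    print("clock period:", clock_period_s(2.0e9), "s")
```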
The CPU execution time of a program is the product of its clock cycle count and the clock cycle time:

$$\text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \text{Clock cycle time}$$

$$\text{CPU clock cycles} = \text{Instructions for a program} \times \text{Average clock cycles per instruction (CPI)}$$

$$\text{CPU time} = \text{Instruction count} \times \text{CPI} \times \text{Clock cycle time}$$

$$\frac{\text{Seconds}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Clock cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Clock cycle}}$$

Evaluating Performance

A computer may be evaluated using a set of benchmarks: programs specifically chosen to measure performance. The benchmarks form a workload that the user hopes will predict the performance of the actual workload.

- "Synthetic" benchmarks: specially created programs that impose a workload on a component.
- "Application" benchmarks: actual real-world programs run on the system.

Application benchmarks usually give a much better measure of real-world performance on a given system, but synthetic benchmarks still have their use for testing individual components such as a hard disk or a networking device.

Types of Benchmarks

- Real program
  - Word processing software
  - Tool software of CDA
  - User's application software (MIS)
- Kernel
  - Contains key codes
  - Normally abstracted from an actual program
  - Popular kernel: Livermore loop
  - Linpack benchmark (contains basic linear algebra subroutines written in FORTRAN)
  - Results are represented in MFLOPS
- Toy benchmark
  - The user can program it and use it to test the computer's basic components.
- Synthetic benchmark
  - Procedure for programming a synthetic benchmark:
    - Take statistics of all types of operations from many application programs.
    - Get the proportion of each operation.
    - Write a program based on those proportions.
  - Results are represented in KWIPS (Kilo Whetstone Instructions Per Second).
  - Not suitable for measuring pipelined computers.

Types of synthetic benchmarks:

Whetstone is a benchmark for evaluating the power of computers.
It was first written in Algol 60 at the National Physical Laboratory in the United Kingdom. It originally measured computing power in units of kilo-WIPS. Results for a variety of languages, compilers and system architectures have been obtained, and modern workstations typically achieve more than 1,000,000 kWIPS. It primarily measures floating-point arithmetic performance.

Dhrystone is a benchmark invented in 1984 by Reinhold P. Weicker. It contains no floating-point operations; the name is a pun on the then-popular Whetstone benchmark for floating-point operations. The output of the benchmark is the number of Dhrystones per second (the number of iterations of the main code loop per second). One common representation of the result is DMIPS (Dhrystone MIPS), obtained by dividing the Dhrystone score by 1,757 (the number of Dhrystones per second obtained on the VAX 11/780, a 1 MIPS machine). The Dhrystone benchmark contains mainly integer and string operations. Like most synthetic benchmarks, however, it is not particularly useful for measuring the performance of real-world computer systems, and it has fallen into disuse, replaced by benchmarks that more closely resemble typical actual usage.

SPEC

The Standard Performance Evaluation Corporation (SPEC) is a non-profit organization that aims to produce fair, impartial and meaningful benchmarks for computers. SPEC was founded in 1988 and is financed by its member organizations, which include all leading computer and software manufacturers. SPEC benchmarks are widely used today in evaluating the performance of computer systems. The benchmarks aim to test real-life situations.
SPEC_WEB, for example, tests web server performance by issuing various types of parallel HTTP requests, and SPEC_CPU tests CPU performance by measuring the run time of several programs such as the compiler gcc and the chess program crafty. The various tasks are assigned weights based on their perceived importance; these weights are used to compute a single benchmark result at the end. SPEC benchmarks are written in a platform-neutral programming language (usually C or FORTRAN). Interested parties may compile the code using whatever compiler they prefer for their platform, but may not change the code. Manufacturers have been known to optimize their compilers to improve performance on the various SPEC benchmarks.

Various Current SPEC Benchmarks

- SPEC CPU2000: combined performance of CPU, memory and compiler.
  - CINT2000 ("SPECint"): tests integer arithmetic, with programs such as compilers, interpreters, word processors, chess programs, etc.
  - CFP2000 ("SPECfp"): tests floating-point performance, with physical simulations, 3D graphics, image processing, computational chemistry, etc.
- SPECWEB99: web server performance, measured by setting up a network of client machines that stress the server with parallel requests.
- SPEC HPC2002: tests high-end parallel computing systems with applications such as weather prediction and computational chemistry.
- SPEC JVM98: performance of a Java client server running a Java virtual machine.
- SPEC MAIL2001: performance of a mail server, testing the SMTP and POP protocols.
- SPEC SFS97_R1: NFS file server throughput and response time.

Power Benchmarking

Power benchmarking of a computer is fundamentally about determining how much energy the computer consumes in order to accomplish some measure of work.
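To make "energy per measure of work" concrete, here is a minimal Python sketch that compares two systems by energy consumed per unit of work rather than by power draw alone. It assumes a constant average power over the run (so energy is simply power multiplied by time). The function names, the two systems and all the numbers are hypothetical.

```python
def energy_joules(average_power_w: float, elapsed_s: float) -> float:
    """Energy in joules, assuming a constant average power over the run."""
    return average_power_w * elapsed_s


def energy_per_unit_work(average_power_w: float, elapsed_s: float,
                         work_units: float) -> float:
    """Joules consumed per unit of completed work."""
    if work_units <= 0:
        raise ValueError("work_units must be positive")
    return energy_joules(average_power_w, elapsed_s) / work_units


if __name__ == "__main__":
    # Hypothetical system A: draws 2.0 W and finishes 1000 work units in 10 s.
    a = energy_per_unit_work(2.0, 10.0, 1000)
    # Hypothetical system B: draws only 1.5 W but needs 20 s for the same work.
    b = energy_per_unit_work(1.5, 20.0, 1000)
    # B has the lower power draw, yet A uses less energy per unit of work.
    print("A:", a, "J/unit  B:", b, "J/unit")
```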
The BDTI (Berkeley Design Technology Inc.), EEMBC (EDN Embedded Microprocessor Benchmark Consortium), and SPEC (Standard Performance Evaluation Corp.) benchmark organizations support benchmark suites that highlight a processor's performance when performing application-specific tasks. Researchers at BDTI and EEMBC are both working on how to extend their benchmark suites to measure and compare a processor's energy efficiency, as opposed to its power consumption, when performing application-specific tasks.

Power Benchmark Strategy

There are three primary areas of interest when benchmarking the characteristics of "low power" systems that employ power management techniques to achieve low power goals.

- First: actual power consumption of the system under typical user conditions, presumably under the power management spectrum.
- Second: system operability or usability under power management conditions. It is clear that one could achieve remarkable power characteristics at the cost of system performance and response time.
- Third: impact of power management techniques on system reliability.

An appropriate benchmarking strategy for power-managed systems must address these three areas in order to postulate an overall system figure of merit: low power without sacrificing system operability or reliability. It would be one that characterizes the system power consumption while the system is carrying out some useful task.

Power Benchmarking

The primary interest in power benchmarking is the power consumed over the course of exercising a given application or, in multitasking environments, multiple applications running simultaneously. Specifically, what is the system power consumption as an application is exercised and the system transitions through various power-managed states?
This is fundamentally a question of system expectations, from both the application's and the end user's perspective, and of how a power management facility might exploit these expectations to reduce the system power consumption. If a specific system component isn't being used and is unlikely to be used in the immediate time frame, its level of readiness might be compromised in order to reduce its own, and ultimately the system's, power consumption.

For example, a word processing application being used in edit mode might not access the system's fixed disk for an extended period of time. The power management facility might recognize this as a flag suggesting that the fixed disk is unlikely to be called upon in the near future. Based on this determination, the power management facility might take the opportunity to transition the fixed disk to a lower power state. This scenario could progress to the point where the fixed disk is completely powered off: its lowest power state and lowest state of readiness.

The energy consumption of a system is therefore the aggregate power dissipated by its components over time, at varying power states. In terms of time, it is the energy required to execute a given task to completion. At the system level this is the summation of the energy requirements of each subtask, and it can be computed by the following expression:

$$P_t = \sum_{n=1}^{m} P_n \cdot \frac{T_c}{3600}$$

where

- $P_t$ = "task energy" in watt-hours (WHrs),
- $m$ = number of power transitions occurring during the task,
- $P_n$ = segmented power dissipation during a given power state,
- $T_c$ = "task cycle" time, the time required to complete the task,

and

$$P_n = \frac{T_{sn} \cdot P_s}{T_c}$$

where

- $T_{sn}$ = time duration of power state $n$,
- $P_s$ = power level during the power state.

The factor 1/3600 converts seconds to hours, so the times are taken in seconds.

This expression accurately reflects the energy consumed by a system during the execution of a task, but it does not reflect any notion of system operability, specifically the time spent to complete the task.

It is unclear how to define and apply a consistent approach to measuring energy efficiency and correlating it with a performance point. Both BDTI and EEMBC now propose that measuring the power of the core and the memories local to a workload is sufficient, provided that proper disclosure of the testing configuration exists. Standard power and energy efficiency benchmarks are coming to fruition, and a lot of opportunity exists for people to refine them. The importance of power benchmarks will continue to grow, especially because a growing number of processors have similar or identical core architectures. However, just as with performance benchmarks, power benchmarks require developers to practice due diligence when mapping the benchmark data and testing configuration to their project's requirements.

Reference: David A. Patterson and John L. Hennessy; James W. Davis; EDNAsia.com
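As a worked illustration of the task-energy expression given earlier, the following Python sketch reads it as a sum over the power states, with Pn = Tsn * Ps / Tc and the result divided by 3600 to give watt-hours. Two assumptions are made that the slides do not state explicitly: times are in seconds, and the power states tile the task with no gaps, so Tc is the sum of the Tsn values. The class name, function names and sample figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class PowerStateSegment:
    duration_s: float  # Tsn: time spent in this power state (seconds, assumed)
    power_w: float     # Ps: power level during this state (watts)


def task_cycle_time_s(segments: Sequence[PowerStateSegment]) -> float:
    """Tc, assuming the power states cover the whole task with no gaps."""
    return sum(s.duration_s for s in segments)


def segmented_power_w(segment: PowerStateSegment, task_cycle_s: float) -> float:
    """Pn = Tsn * Ps / Tc: this state's contribution to average power."""
    return segment.duration_s * segment.power_w / task_cycle_s


def task_energy_wh(segments: Sequence[PowerStateSegment]) -> float:
    """Pt = sum(Pn) * Tc / 3600, in watt-hours."""
    tc = task_cycle_time_s(segments)
    if tc <= 0:
        raise ValueError("task cycle time must be positive")
    return sum(segmented_power_w(s, tc) for s in segments) * tc / 3600.0


if __name__ == "__main__":
    # Hypothetical editing session: disk active, then low power, then off.
    session = [
        PowerStateSegment(duration_s=600.0, power_w=12.0),
        PowerStateSegment(duration_s=1800.0, power_w=8.0),
        PowerStateSegment(duration_s=1200.0, power_w=5.0),
    ]
    print("task energy:", task_energy_wh(session), "Wh")
```

Because Tc cancels between the two formulas, the result equals the sum of Tsn * Ps divided by 3600. This is why the expression captures energy but says nothing about how long the task took.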