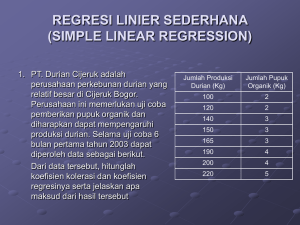

BIVARIATE AND MULTIPLE REGRESSION


LABORATORY LESSONS, Marketing course
L. Baldi, Università degli Studi di Milano

Excerpt from Chapter 8 of "Statistics for Marketing and Consumer Research", M. Mazzocchi, SAGE, 2008.

BIVARIATE LINEAR REGRESSION

$$y_i = \alpha + \beta x_i + \varepsilon_i$$

where $y_i$ is the dependent variable, $\alpha$ the intercept, $\beta$ the regression coefficient, $x_i$ the explanatory variable and $\varepsilon_i$ the (random) error term.

• Causality (from x to y) is assumed.
• The error term embodies anything that is not accounted for by the linear relationship.
• The unknown parameters ($\alpha$ and $\beta$) need to be estimated, usually on sample data. We refer to the sample parameter estimates as $a$ and $b$.

TO STUDY IN DETAIL: LEAST SQUARES ESTIMATION OF THE UNKNOWN PARAMETERS

For given values of the parameters, the error (residual) term for each observation is

$$e_i = y_i - a - b x_i$$

The least squares parameter estimates are those that minimize the sum of squared errors:

$$SSE = \sum_{i=1}^{n} (y_i - a - b x_i)^2 = \sum_{i=1}^{n} e_i^2$$

TO STUDY IN DETAIL: ASSUMPTIONS ON THE ERROR TERM

1. The error term has a zero mean.
2. The variance of the error term does not vary across cases (homoskedasticity).
3. The error term for each case is independent of the error term for other cases.
4. The error term is also independent of the values of the explanatory (independent) variable.
5. The error term is normally distributed.

PREDICTION

Once $a$ and $b$ have been estimated, it is possible to predict the value of the dependent variable for any given value of the explanatory variable:

$$\hat{y}_j = a + b x_j$$

Example: if the price (x) changes, what happens to consumption (y)?

MODEL EVALUATION

The model's performance can be evaluated through the residuals $(y_i - \hat{y}_i)$, which show how well the model predictions fit the original data (goodness of fit).

Since $a$ and $b$ are estimated on the sample, just like a sample mean, they come with standard errors, which measure the precision of these estimates and depend on the sample size.
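Minimizing SSE above has a well-known closed-form solution: $b = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ and $a = \bar{y} - b\bar{x}$. The short Python sketch below applies these formulas, computes the standard errors of $a$ and $b$ under the assumptions on the error term listed above, and makes a prediction $\hat{y}_j = a + b x_j$. The price and consumption figures and the function names are hypothetical, chosen only for illustration.

```python
# Bivariate least squares: b = Sxy / Sxx, a = ybar - b * xbar.
# The data below are hypothetical (price x, consumption y).

def fit_bivariate(x, y):
    """Return (a, b) minimising SSE = sum((y_i - a - b*x_i)**2)."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b = sxy / sxx
    a = ybar - b * xbar
    return a, b


def standard_errors(x, y, a, b):
    """Standard errors of a and b under the classical error assumptions."""
    n = len(x)
    xbar = sum(x) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    residuals = [yi - (a + b * xi) for xi, yi in zip(x, y)]  # e_i = y_i - a - b*x_i
    s2 = sum(e ** 2 for e in residuals) / (n - 2)              # residual variance, n - 2 df
    se_b = (s2 / sxx) ** 0.5
    se_a = (s2 * (1 / n + xbar ** 2 / sxx)) ** 0.5
    return se_a, se_b


price = [1, 2, 3, 4, 5]          # hypothetical prices (x)
consumption = [10, 8, 7, 5, 3]   # hypothetical quantities consumed (y)

a, b = fit_bivariate(price, consumption)
se_a, se_b = standard_errors(price, consumption, a, b)
y_hat = a + b * 6                # prediction for a new price x_j = 6

print(f"a = {a:.2f}, b = {b:.2f}, SE(a) = {se_a:.3f}, SE(b) = {se_b:.3f}")
print(f"predicted consumption at price 6: {y_hat:.2f}")
```

With these numbers the fitted line is $\hat{y} = 11.7 - 1.7x$, with SE(b) = 0.100, and the predicted consumption at a price of 6 is 1.50.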
Knowledge of the standard errors opens the way to hypothesis testing.

HYPOTHESIS TESTING ON REGRESSION COEFFICIENTS

T-test on each individual coefficient
• Null hypothesis: the corresponding population coefficient is zero.
• The p-value is used to decide whether or not to reject the null hypothesis that the coefficient is zero (usually, the null hypothesis is rejected when p < 0.05).

F-test (multiple explanatory variables, as discussed later)
• It is run jointly on all coefficients of the regression model (the intercept excluded).
• Null hypothesis: all these coefficients are zero.

COEFFICIENT OF DETERMINATION R²

The natural candidate for measuring how well the model fits the data is the coefficient of determination, which varies between zero (when the model explains none of the variability of the dependent variable) and 1 (when the model fits the data perfectly):

$$0 \le R^2 \le 1$$

Definition: a statistical measure of the ‘goodness of fit’ of a regression equation. It gives the proportion of the total variance of the forecast variable that is explained by the fitted regression equation, i.e. by the explanatory variables.

MULTIPLE REGRESSION

The principle is identical to bivariate regression, but there are more explanatory variables:

$$y_i = \alpha_0 + \alpha_1 x_{1i} + \alpha_2 x_{2i} + \dots + \alpha_k x_{ki} + \varepsilon_i$$

ADDITIONAL ISSUES

Collinearity (or multicollinearity) problem: the explanatory variables should also be independent of each other. If they are strongly correlated, the model runs into a double-counting problem, and it becomes very difficult to separate the effect of each variable.
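Consequences of collinearity:
• Inefficient estimates
• An apparently good model, but poor forecasts

GOODNESS-OF-FIT

The coefficient of determination R² never decreases, and usually increases, when additional regressors are included. A proper indicator is therefore the adjusted R², which accounts for the number of explanatory variables (k) in relation to the number of observations (n):

$$\bar{R}^2 = 1 - (1 - R^2)\,\frac{n-1}{n-k-1}, \qquad \bar{R}^2 \le R^2 \le 1$$

Unlike R², the adjusted R² can become negative when the fit is very poor.

_______________________________________________________

APPLICATION OF MULTIPLE REGRESSION WITH EXCEL

File: esregress.xls. The dependent variable, cons_elett, is the energy consumption for air conditioning.

| obs. | cons_elett | Tmax | Tmin | velvento | nuvole |
|---|---|---|---|---|---|
| 1 | 45 | 87 | 68 | 1 | 2.0 |
| 2 | 73 | 90 | 70 | 1 | 1.0 |
| 3 | 43 | 88 | 68 | 1 | 1.0 |
| 4 | 61 | 88 | 69 | 1 | 1.5 |
| 5 | 52 | 86 | 69 | 1 | 2.0 |
| 6 | 56 | 91 | 75 | 1 | 2.0 |
| 7 | 70 | 91 | 76 | 1 | 1.5 |
| 8 | 69 | 90 | 73 | 1 | 2.0 |
| 9 | 53 | 79 | 72 | 0 | 3.0 |
| 10 | 51 | 76 | 63 | 0 | 0.0 |
| 11 | 39 | 83 | 57 | 0 | 0.0 |
| 12 | 55 | 86 | 61 | 1 | 1.0 |
| 13 | 55 | 85 | 70 | 1 | 2.0 |
| 14 | 57 | 89 | 69 | 0 | 2.0 |
| 15 | 68 | 88 | 72 | 1 | 1.5 |
| 16 | 73 | 85 | 73 | 0 | 3.0 |
| 17 | 57 | 84 | 68 | 1 | 3.0 |
| 18 | 51 | 83 | 69 | 0 | 2.0 |
| 19 | 55 | 81 | 70 | 0 | 1.0 |
| 20 | 56 | 89 | 70 | 1 | 1.5 |
| 21 | 72 | 88 | 69 | 1 | 0.0 |
| 22 | 73 | 88 | 76 | 1 | 2.5 |
| 23 | 69 | 77 | 66 | 1 | 3.0 |
| 24 | 38 | 75 | 65 | 1 | 2.5 |
| 25 | 50 | 72 | 64 | 1 | 3.0 |
| 26 | 37 | 68 | 65 | 1 | 3.0 |
| 27 | 43 | 71 | 67 | 0 | 3.0 |
| 28 | 42 | 75 | 66 | 1 | 3.0 |
| 29 | 25 | 74 | 52 | 1 | 0.0 |
| 30 | 31 | 77 | 51 | 0 | 0.0 |
| 31 | 31 | 79 | 50 | 0 | 0.0 |
| 32 | 32 | 80 | 50 | 0 | 0.0 |
| 33 | 35 | 80 | 53 | 0 | 0.0 |
| 34 | 32 | 81 | 53 | 1 | 0.0 |
| 35 | 34 | 80 | 53 | 0 | 0.0 |
| 36 | 35 | 81 | 54 | 1 | 2.0 |
| 37 | 41 | 83 | 67 | 0 | 2.0 |
| 38 | 51 | 84 | 67 | 1 | 1.5 |
| 39 | 34 | 80 | 63 | 1 | 3.0 |
| 40 | 19 | 73 | 53 | 1 | 1.0 |
| 41 | 19 | 71 | 49 | 0 | 0.0 |
| 42 | 30 | 72 | 56 | 1 | 3.0 |
| 43 | 23 | 72 | 53 | 1 | 0.0 |
| 44 | 35 | 79 | 48 | 1 | 0.0 |
| 45 | 29 | 84 | 63 | 1 | 1.0 |
| 46 | 55 | 74 | 62 | 0 | 3.0 |
| 47 | 56 | 83 | 72 | 1 | 2.5 |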
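To show what the Excel regression tool computes, the sketch below fits the same multiple regression by ordinary least squares, solving the normal equations $(X'X)\,\hat{\alpha} = X'y$ in plain Python on the 47 observations of the table above (columns Tmax, Tmin, velvento and nuvole as regressors). The helper names `solve` and `ols` are hypothetical, and the small Gauss-Jordan solver is used only to keep the example self-contained; in practice a numerical library would normally be used. If the table matches the original workbook, the printed figures should agree with Excel's summary output up to rounding.

```python
# OLS for y = a0 + a1*x1 + ... + ak*xk + e via the normal equations
# (X'X) coef = X'y. Plain Python; data from the esregress.xls table.
cons_elett = [45, 73, 43, 61, 52, 56, 70, 69, 53, 51, 39, 55, 55, 57, 68, 73,
              57, 51, 55, 56, 72, 73, 69, 38, 50, 37, 43, 42, 25, 31, 31, 32,
              35, 32, 34, 35, 41, 51, 34, 19, 19, 30, 23, 35, 29, 55, 56]
tmax = [87, 90, 88, 88, 86, 91, 91, 90, 79, 76, 83, 86, 85, 89, 88, 85,
        84, 83, 81, 89, 88, 88, 77, 75, 72, 68, 71, 75, 74, 77, 79, 80,
        80, 81, 80, 81, 83, 84, 80, 73, 71, 72, 72, 79, 84, 74, 83]
tmin = [68, 70, 68, 69, 69, 75, 76, 73, 72, 63, 57, 61, 70, 69, 72, 73,
        68, 69, 70, 70, 69, 76, 66, 65, 64, 65, 67, 66, 52, 51, 50, 50,
        53, 53, 53, 54, 67, 67, 63, 53, 49, 56, 53, 48, 63, 62, 72]
velvento = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 0,
            1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0,
            0, 1, 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1]
nuvole = [2.0, 1.0, 1.0, 1.5, 2.0, 2.0, 1.5, 2.0, 3.0, 0.0, 0.0, 1.0, 2.0, 2.0, 1.5, 3.0,
          3.0, 2.0, 1.0, 1.5, 0.0, 2.5, 3.0, 2.5, 3.0, 3.0, 3.0, 3.0, 0.0, 0.0, 0.0, 0.0,
          0.0, 0.0, 0.0, 2.0, 2.0, 1.5, 3.0, 1.0, 0.0, 3.0, 0.0, 0.0, 1.0, 3.0, 2.5]


def solve(a, b):
    """Solve the linear system a x = b (Gauss-Jordan with partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy [a | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [u - f * v for u, v in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(y, columns):
    """Fit the multiple regression; columns holds one list per regressor."""
    n, k = len(y), len(columns)
    X = [[1.0] + [col[i] for col in columns] for i in range(n)]  # leading 1 = intercept
    p = k + 1
    xtx = [[sum(row[r] * row[c] for row in X) for c in range(p)] for r in range(p)]
    xty = [sum(row[r] * yi for row, yi in zip(X, y)) for r in range(p)]
    coef = solve(xtx, xty)
    residuals = [yi - sum(c * x for c, x in zip(coef, row)) for row, yi in zip(X, y)]
    ybar = sum(y) / n
    sse = sum(e * e for e in residuals)        # residual sum of squares
    sst = sum((yi - ybar) ** 2 for yi in y)    # total sum of squares
    mse = sse / (n - k - 1)                    # residual mean square, n - k - 1 df
    # Standard error of coefficient j: sqrt(mse * [(X'X)^-1]_jj)
    se_coef = [(mse * solve(xtx, [1.0 if r == j else 0.0 for r in range(p)])[j]) ** 0.5
               for j in range(p)]
    r2 = 1 - sse / sst
    return {
        "coef": coef, "se_coef": se_coef, "residuals": residuals,
        "sst": sst, "sse": sse, "r2": r2,
        "adj_r2": 1 - (1 - r2) * (n - 1) / (n - k - 1),
        "se": mse ** 0.5,
        "f": ((sst - sse) / k) / mse,
    }


res = ols(cons_elett, [tmax, tmin, velvento, nuvole])
names = ["Intercept", "Tmax", "Tmin", "velvento", "nuvole"]
for name, b, s in zip(names, res["coef"], res["se_coef"]):
    print(f"{name:10s} coef = {b:8.2f}  SE = {s:6.2f}  t = {b / s:6.2f}")
print(f"R2 = {res['r2']:.3f}  adj. R2 = {res['adj_r2']:.3f}  "
      f"SE = {res['se']:.3f}  F = {res['f']:.2f}")
```

Solving the normal equations directly is the textbook route; it is adequate for a handful of regressors like this, while statistical software typically uses numerically safer decompositions.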
The explanatory variables are:
• Tmax = maximum recorded temperature
• Tmin = minimum recorded temperature
• velvento = wind speed (above or below 6 knots)
• nuvole = degree of cloud cover

SUMMARY OUTPUT

| Regression Statistics | |
|---|---|
| Multiple R | 0.856 |
| R Square | 0.732 |
| Adjusted R Square | 0.707 |
| Standard Error | 8.341 |
| Observations | 47 |

ANOVA

| | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 4 | 7997.08 | 1999.27 | 28.74 | 0.00000 |
| Residual | 42 | 2921.90 | 69.57 | | |
| Total | 46 | 10918.98 | | | |

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | -85.05 | 16.56 | -5.14 | 0.0000 | -118.46 | -51.64 |
| Tmax | 0.62 | 0.32 | 1.95 | 0.0574 | -0.02 | 1.25 |
| Tmin | 1.31 | 0.30 | 4.45 | 0.0001 | 0.72 | 1.91 |
| velvento | -1.96 | 2.71 | -0.72 | 0.4735 | -7.42 | 3.51 |
| nuvole | -0.19 | 1.75 | -0.11 | 0.9160 | -3.71 | 3.34 |

[Figure: comparison of actual and estimated values from the multiple regression model; observed cons_elett and predicted cons_elett plotted for observations 1 to 47.]
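The figures in the summary output are linked by simple identities. As a sketch, the Python snippet below recomputes R², adjusted R², the standard error of the regression, Multiple R and the F statistic from the ANOVA table, and each t statistic as coefficient divided by standard error. It uses only the rounded values printed by Excel, so the last digit can differ from Excel's own figures.

```python
# Recompute the summary statistics from the ANOVA figures printed by Excel.
# Inputs are the rounded printed values, so small rounding differences are expected.
import math

n, k = 47, 4                  # observations, explanatory variables
ssr, sse = 7997.08, 2921.90   # regression and residual sums of squares
sst = ssr + sse               # total sum of squares (10918.98)

df_reg, df_res = k, n - k - 1          # 4 and 42 degrees of freedom
msr, mse = ssr / df_reg, sse / df_res  # mean squares
f_stat = msr / mse                     # F-test: all slope coefficients are zero
r2 = ssr / sst                         # coefficient of determination
adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)
std_err = math.sqrt(mse)               # standard error of the regression
multiple_r = math.sqrt(r2)

print(f"Multiple R = {multiple_r:.3f}, R2 = {r2:.3f}, adj. R2 = {adj_r2:.3f}, "
      f"SE = {std_err:.3f}, F = {f_stat:.2f}")

# t statistic = coefficient / standard error. With rounded inputs some values
# drift slightly (e.g. Tmin gives 4.37 instead of Excel's 4.45).
coefs = {"Intercept": (-85.05, 16.56), "Tmax": (0.62, 0.32), "Tmin": (1.31, 0.30),
         "velvento": (-1.96, 2.71), "nuvole": (-0.19, 1.75)}
for name, (b, se) in coefs.items():
    print(f"{name:10s} t = {b / se:6.2f}")
```

With these inputs the script gives Multiple R = 0.856, R² = 0.732, adjusted R² = 0.707, standard error 8.341 and F = 28.74, matching the output. R² = 0.732 means the four explanatory variables account for about 73% of the variability of cons_elett. Applying the p < 0.05 rule to the coefficient table, the intercept and Tmin are significant, Tmax is borderline (p = 0.0574), and velvento and nuvole are not significant.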