Are “Trusted Systems” Useful for Privacy Protection? Joan Feigenbaum PORTIA Workshop


Joan Feigenbaum, http://www.cs.yale.edu/~jf
PORTIA Workshop, Stanford Univ., July 8-9, 2004

General Problem: It Is Hard to Exercise “Remote Control” over Sensitive Data
Many machine-readable permissions systems
– Rights-management languages
– Privacy policies
– Software licenses
None is currently technologically enforceable.
– All software-only permissions systems can be circumvented.
– Once data are transferred, control is lost.

Proposed Solution: “Trusted Systems”
We need definition(s) of “trusted systems.”
Key feature: hardware-based, cryptographic support for proofs that remote machines are running approved software stacks
Useful feature?
– Leads to interesting theory?
– Feasible to build and deploy?
– Solves practical problems?

Trusted Systems for the Distribution of Entertainment Content
Content providers’ problem is piracy.
Approved software stacks would implement “draconian DRM.”
– No unauthorized use of content
– No unauthorized access to or transfer of content
It is unclear whether these goals, especially the second, can be achieved. See, e.g.,
– Biddle et al. 2002: the “Darknet” creates unauthorized access and transfer.
– Haber et al. 2003: technological weaknesses
If these goals were achieved, the piracy problem would be (at least partly) solved.

Dan Geer at YLS: “DRM ≡ Privacy”
This claim is made often, and disputed often, in the trusted-systems context. Is it true?
What (and whose) privacy problem is being addressed?
What is the approved software stack required to do?
Are these technical requirements achievable?
If they were achieved, would the privacy problem be solved?

Example: Privacy of Medical Data
Whose problem?
Individual data subject
– Often, he or she is neither the entity that creates the data nor the one that must demand proof from a potential data recipient.
– This differs from DRM for entertainment content.
Institution
– Hospital, doctors’ office, insurance company, etc.

Privacy of Medical Data, cont.
What problem?
Example: Compliance with HIPAA rules about when personal medical data may be disclosed.
Targeted attack on a specific data subject: trusted systems won’t prevent this disclosure!
– Small data objects (some only one bit) abound; an attacker can disclose them without computers.
  • General fact about trusted systems (but not applicable to DRM for entertainment)
– Data objects are obtainable by other means.
  • General fact (sometimes applicable to DRM for entertainment, as in the “Darknet”)
– General disregard for, or misunderstanding of, the rules

General (Untargeted) HIPAA-Compliant Privacy
Medical-privacy logic is incomplete; complying with it requires good human judgment, e.g.,
– “Life-threatening situation”
– “Public-health emergency”
– “Poses a threat to himself or others”
Trusted systems can’t fully solve this problem! This is a general fact about trusted systems: they can’t solve problems that are inherently unsolvable by computers.
– Not necessarily relevant to draconian DRM
– Relevant to the more general problem of copyright compliance, which includes fair use

Conclusion: Trusted Systems Cannot Ensure Privacy of Personal Medical Data
Circumvention of privacy-protection mechanisms is not the most pressing threat to medical-data privacy.
More generally, trusted systems will be ineffective when
– there are alternative ways to obtain the sensitive data (or accomplish the attacker’s goals), or
– the relevant policy is not conveniently expressible as a program.
When will trusted systems be effective?

Policy-Enforcement Mechanisms in MS Products (BAL/cs155/03-25-03)
MS DRM for eBooks
MS DRM for Windows Media
Windows Rights Management Services
Office 2003 Information Rights Management
License servers for Terminal Services, File & Print Services, etc.
Ultimate TV/eHome (digital storage of video)
Xbox (anti-repurposing)
File system ACLs
Enterprise policy management
Group policy in domains
Partially trusted code policies (.NET Framework)
NGSCB

Which Attributes Are Relevant?
Number of data sources?
Number of data recipients?
Need to retransmit data?
Stability of policies?
Third-party certifiers?
…
When could trusted systems help?

Whenever “The Program Is the Policy”?
Organizations mandate the use of particular software environments for a variety of reasons, some objectionable. Are there benign reasons to do so?
– Absence of particular serious flaws
– Mission-critical properties, e.g.,
  • Maintains audit trails
  • Does not maintain audit trails
– Protection against subversion and misrepresentation (analogy with copyleft licenses)
What else are trusted systems good for?
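The “key feature” of trusted systems named earlier, hardware-based cryptographic proof that a remote machine is running an approved software stack, is commonly realized as measured boot plus remote attestation, and it is what “the program is the policy” would rely on: the verifier’s policy reduces to a set of approved measurements. The Python sketch below illustrates only that logic, under stated assumptions: the hash-chain `extend` step is loosely modeled on TPM platform configuration registers (PCRs); `Device`, `measure_stack`, and `verify` are hypothetical names; and an HMAC over a shared key stands in for the chip’s asymmetric attestation signature so the example stays in the standard library. It is not the protocol of any product on the MS list.

```python
from __future__ import annotations

import hashlib
import hmac
import os

# Assumption: one 32-byte register starting at all zeros, loosely modeled on a
# TPM PCR; real chips have many registers and several hash banks.
PCR_INIT = bytes(32)


def extend(pcr: bytes, component: bytes) -> bytes:
    """Fold a component into the register: PCR' = SHA-256(PCR || SHA-256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_stack(components: list[bytes]) -> bytes:
    """Measure a stack in load order; changing any component, or the order, changes the result."""
    pcr = PCR_INIT
    for component in components:
        pcr = extend(pcr, component)
    return pcr


class Device:
    """Hypothetical attesting machine.

    Assumption: on real hardware the key never leaves the security chip and the
    quote carries an asymmetric signature; HMAC with a shared key stands in here.
    """

    def __init__(self, key: bytes, stack: list[bytes]) -> None:
        self._key = key
        self._pcr = measure_stack(stack)

    def quote(self, nonce: bytes) -> tuple[bytes, bytes]:
        """Return the measured value and a tag binding it to the verifier's fresh nonce."""
        tag = hmac.new(self._key, self._pcr + nonce, hashlib.sha256).digest()
        return self._pcr, tag


def verify(key: bytes, nonce: bytes, pcr: bytes, tag: bytes,
           approved: set[bytes]) -> bool:
    """Accept only an authentic quote for this nonce whose value matches an approved stack."""
    expected = hmac.new(key, pcr + nonce, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag) and pcr in approved


# Usage: the verifier's policy is just the set of approved measurements.
key = os.urandom(32)
approved_stack = [b"firmware-v1", b"loader-v1", b"os-v1", b"viewer-v1"]
approved = {measure_stack(approved_stack)}

nonce = os.urandom(16)
good = Device(key, approved_stack)
print(verify(key, nonce, *good.quote(nonce), approved))     # True

patched = Device(key, approved_stack[:-1] + [b"viewer-with-export"])
print(verify(key, nonce, *patched.quote(nonce), approved))  # False
```

Note what the verifier learns: which stack was loaded, and nothing more. A passing quote says nothing about data that leave through other channels, so the small-data and “Darknet” arguments in the medical-privacy slides are unaffected by it.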

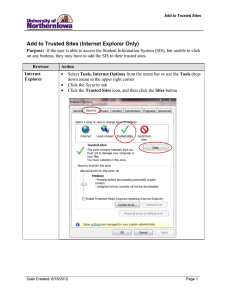
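The claim that medical-privacy logic is incomplete, and that the relevant policy is not conveniently expressible as a program, can be made concrete with a deliberately simplified disclosure rule. The Python sketch below does not encode actual HIPAA provisions; `Decision`, `DisclosureRequest`, `decide`, and the two-step rule are hypothetical. Only the judgment-dependent grounds are taken from the slide.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PERMIT = "permit"
    DENY = "deny"
    NEEDS_HUMAN_JUDGMENT = "needs human judgment"


# Grounds quoted on the slide. Nothing in the request data lets a program decide
# whether any of them actually holds.
JUDGMENT_GROUNDS = {
    "life-threatening situation",
    "public-health emergency",
    "poses a threat to himself or others",
}


@dataclass
class DisclosureRequest:
    """Hypothetical request record; real disclosure rules involve far more fields."""
    patient_authorized: bool            # a signed authorization is on file
    claimed_ground: str | None = None   # exception the requester invokes, if any


def decide(request: DisclosureRequest) -> Decision:
    """Hypothetical two-step rule: mechanical check first, then defer to a person."""
    if request.patient_authorized:
        return Decision.PERMIT
    if request.claimed_ground in JUDGMENT_GROUNDS:
        # The program can check that a ground was *claimed*, not that it is true.
        return Decision.NEEDS_HUMAN_JUDGMENT
    return Decision.DENY


print(decide(DisclosureRequest(patient_authorized=True)).value)           # permit
print(decide(DisclosureRequest(False, "public-health emergency")).value)  # needs human judgment
print(decide(DisclosureRequest(False)).value)                             # deny
```

A trusted system could attest that a recipient runs exactly this `decide` function, yet the third outcome would still have to be resolved by a person.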
