252y0343 1/12/04 ECO252 QBA2 Name KEY

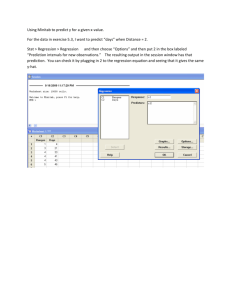

FINAL EXAM                              Hour of Class Registered _______
DEC 11, 2003

I. (25+ points) Do all the following. Note that answers without reasons receive no credit. Most answers require a statistical test, that is, stating or implying a hypothesis and showing why it is true or false by citing a table value or a p-value.

The fourth computer problem involved the regression of the Y variable below against some, but not all, of the X values.

Column   Variable   Description
C2       X2         Type: 1 = Private, 0 = Public
C3       X1         First Quartile SAT
C4       X5         3rd Quartile SAT
C5       X4         Room and Board Cost
C6       Y          Annual Total Cost
C7       X6         Average Indebtedness at Graduation
C8       X3         Interaction = X1 * X2

You were directed to hand in the computer output (4 points) and your answer to problem 14.37 or the equivalent problem in the 8th edition (up to 7 points). I ran the same problem you did, but went on to add X4 and X5 to the input. The output appears on pages 1-7. My first two regressions were stepwise regressions. The second stepwise regression is set up to force the dummy variable designating 'type of university' into the equation. This means that our first equation in regression 2 is essentially Ŷ = b0 + b2X2.

a) According to the first regression in regression 2, what are the mean annual total costs for public and private universities, and how does the printout show us that they are significantly different? (2)

b) Regressions 3 and 4 are the regressions you supposedly did. According to regression 4, for a public university the constant in the regression equation is b0 = 1013 and the slope relative to the first quartile SAT is b1 = 11.339. For a private university, the equation relating annual total costs to the first quartile SAT effectively has both a different intercept and a different slope; what is the equation? Are the intercepts and slopes for public and private universities, in fact, significantly different? What tells us this? (3)
(Extra credit: at what SAT level do public and private universities have the same cost? (2))

c) Regression 6 should be the best of all the regressions, because it has the most independent variables and the highest R-squared, but it isn't. (i) Look at the coefficients of the independent variables and ignore their significance. One of those coefficients is incredibly unreasonable; which one is it? (1) (ii) Which coefficients are significant at the 1% level, and why? (2) What about the 10% level? (1) Compare the adjusted R-squares with the other regressions; what do they tell us? (1) Look at the VIFs; what do they imply? (2)

d) Do an F test to tell whether adding X3, X4 and X5 as a package to equation 3, with only X1 and X2, was useful. What is your conclusion? (4)

e) I didn't follow directions when I did a prediction interval for equation 3, so it should disagree with yours. I added some guesses as to (median?) values for X3, X4 and X5. What does the printout say I used? What would you expect should happen to the size of the prediction interval if our addition of new variables gives us a better estimate of Y? Did it happen? Cite numbers. (3)

f) Use the method suggested in the text, using the standard error s_e, to compute a prediction interval for the same values of the independent variables and equation 3. How accurate is it? (3)
32

---------- 12/5/2003 7:15:10 PM ----------

Welcome to Minitab, press F1 for help.
MTB > Retrieve "C:\Documents and Settings\RBOVE.WCUPANET\My Documents\Drive D\MINITAB\Colleges2002.MTW".
Retrieving worksheet from file: C:\Documents and Settings\RBOVE.WCUPANET\My Documents\Drive D\MINITAB\Colleges2002.MTW
# Worksheet was saved on Fri Dec 05 2003

Results for: Colleges2002.MTW

MTB > Stepwise c6 c3 c2 c8 c5 c4;
SUBC> AEnter 0.15;
SUBC> ARemove 0.15;
SUBC> Constant.

1) Stepwise Regression: Annual Total versus First quarti, Type of Scho, ...
Alpha-to-Enter: 0.15  Alpha-to-Remove: 0.15

Response is Annual T on 5 predictors, with N = 80

Step             1        2
Constant     12198    -1021

inter        10.42     8.35
T-Value      17.40    11.97
P-Value      0.000    0.000

First qu               13.3
T-Value                4.62
P-Value               0.000

S             3058     2724
R-Sq         79.51    83.95
R-Sq(adj)    79.25    83.54
C-p           20.2      1.3

More? (Yes, No, Subcommand, or Help)
SUBC> yes
No variables entered or removed
More? (Yes, No, Subcommand, or Help)
SUBC> no

MTB > Stepwise c6 c3 c2 c8 c5 c4;
SUBC> Force c2;
SUBC> AEnter 0.15;
SUBC> ARemove 0.15;
SUBC> Constant.

2) Stepwise Regression: Annual Total versus First quarti, Type of Scho, ...

Alpha-to-Enter: 0.15  Alpha-to-Remove: 0.15

Response is Annual T on 5 predictors, with N = 80

Step             1        2        3
Constant     12478    -7264     1013

Type of      11646     8732    -3016
T-Value      13.97    11.57    -0.48
P-Value      0.000    0.000    0.630

First qu               19.5     11.3
T-Value                7.37     2.25
P-Value               0.000    0.027

inter                           11.2
T-Value                         1.90
P-Value                        0.061

S             3610     2783     2737
R-Sq         71.44    83.24    84.00
R-Sq(adj)    71.07    82.81    83.37
C-p           58.1      4.7      3.1

More? (Yes, No, Subcommand, or Help)
SUBC> yes
No variables entered or removed
More? (Yes, No, Subcommand, or Help)
SUBC> no

MTB > Name c18 = 'RESI1'
MTB > Regress c6 2 c3 c2;
SUBC> Residuals 'RESI1';
SUBC> GHistogram;
SUBC> GNormalplot;
SUBC> GFits;
SUBC> RType 1;
SUBC> Constant;
SUBC> VIF;
SUBC> Predict c9 c10;
SUBC> Brief 2.
3) Regression Analysis: Annual Total versus First quarti, Type of Scho

The regression equation is
Annual Total Cost = - 7264 + 19.5 First quartile SAT + 8732 Type of School

Predictor       Coef   SE Coef        T       P    VIF
Constant       -7264      2728    -2.66   0.009
First qu      19.524     2.651     7.37   0.000    1.4
Type of       8732.4     754.7    11.57   0.000    1.4

S = 2783   R-Sq = 83.2%   R-Sq(adj) = 82.8%

Analysis of Variance
Source            DF           SS           MS        F       P
Regression         2   2963313624   1481656812   191.26   0.000
Residual Error    77    596492779      7746659
Total             79   3559806404

Source      DF       Seq SS
First qu     1   1926306635
Type of      1   1037006989

Unusual Observations
Obs   First qu   Annual T     Fit   SE Fit   Residual   St Resid
 27       1040      21484   13041      514       8443      3.09R
 56       1010      15722   21188      560      -5466     -2.00R
 61       1320      17526   27240      578      -9714     -3.57R
R denotes an observation with a large standardized residual

Predicted Values for New Observations
New Obs     Fit   SE Fit        95.0% CI           95.0% PI
1         20993      579   (19839, 22147)   (15332, 26654)

Values of Predictors for New Observations
New Obs   First qu   Type of
1             1000      1.00

Residual Histogram for Annual T
Normplot of Residuals for Annual T
Residuals vs Fits for Annual T

MTB > %Resplots c18 c2;
SUBC> Title "Residuals vs Type".
Executing from file: W:\wminitab13\MACROS\Resplots.MAC
Macro is running ... please wait
Residual Plots: RESI1 vs Type of Scho

MTB > %Resplots c18 c3;
SUBC> Title "Residuals vs Type".
Executing from file: W:\wminitab13\MACROS\Resplots.MAC
Macro is running ... please wait
Residual Plots: RESI1 vs First quarti

MTB > Name c19 = 'RESI2'
MTB > Regress c6 3 c3 c2 c8;
SUBC> Residuals 'RESI2';
SUBC> GHistogram;
SUBC> GNormalplot;
SUBC> GFits;
SUBC> RType 1;
SUBC> Constant;
SUBC> VIF;
SUBC> Predict c9 c10 c11;
SUBC> Brief 2.

4) Regression Analysis: Annual Total versus First quarti, Type of Scho, ...
The regression equation is
Annual Total Cost = 1013 + 11.3 First quartile SAT - 3016 Type of School + 11.2 inter

Predictor       Coef   SE Coef        T       P     VIF
Constant        1013      5120     0.20   0.844
First qu      11.339     5.039     2.25   0.027     5.2
Type of        -3016      6234    -0.48   0.630    97.2
inter         11.177     5.889     1.90   0.061   120.6

S = 2737   R-Sq = 84.0%   R-Sq(adj) = 83.4%

Analysis of Variance
Source            DF           SS          MS        F       P
Regression         3   2990309581   996769860   133.02   0.000
Residual Error    76    569496823     7493379
Total             79   3559806404

Source      DF       Seq SS
First qu     1   1926306635
Type of      1   1037006989
inter        1     26995957

Unusual Observations
Obs   First qu   Annual T     Fit   SE Fit   Residual   St Resid
  3        800       9476   10084     1176       -608     -0.25 X
  9       1250      13986   15186     1303      -1200     -0.50 X
 27       1040      21484   12805      520       8679      3.23R
 61       1320      17526   27718      622     -10192     -3.82R
R denotes an observation with a large standardized residual
X denotes an observation whose X value gives it large influence.

Predicted Values for New Observations
New Obs     Fit   SE Fit        95.0% CI           95.0% PI
1         20513      623   (19271, 21755)   (14921, 26105)

Values of Predictors for New Observations
New Obs   First qu   Type of   inter
1             1000      1.00    1000

MTB > Name c20 = 'RESI3'
MTB > Regress c6 4 c3 c2 c8 c5;
SUBC> Residuals 'RESI3';
SUBC> Constant;
SUBC> VIF;
SUBC> Predict c9 c10 c11 c12;
SUBC> Brief 2.

5) Regression Analysis: Annual Total versus First quarti, Type of Scho, ...
The regression equation is
Annual Total Cost = - 13 + 11.4 First quartile SAT - 3053 Type of School + 10.9 inter + 0.165 Room and Board

Predictor       Coef   SE Coef        T       P     VIF
Constant         -13      5483    -0.00   0.998
First qu      11.382     5.064     2.25   0.028     5.2
Type of        -3053      6263    -0.49   0.627    97.3
inter         10.928     5.934     1.84   0.069   121.3
Room and      0.1655    0.3062     0.54   0.591     1.9

S = 2750   R-Sq = 84.1%   R-Sq(adj) = 83.2%

Analysis of Variance
Source            DF           SS          MS       F       P
Regression         4   2992518033   748129508   98.91   0.000
Residual Error    75    567288370     7563845
Total             79   3559806404

Source      DF       Seq SS
First qu     1   1926306635
Type of      1   1037006989
inter        1     26995957
Room and     1      2208452

Unusual Observations
Obs   First qu   Annual T     Fit   SE Fit   Residual   St Resid
  9       1250      13986   15174     1309      -1188     -0.49 X
 27       1040      21484   12880      541       8604      3.19R
 61       1320      17526   27621      650     -10095     -3.78R
R denotes an observation with a large standardized residual
X denotes an observation whose X value gives it large influence.

Predicted Values for New Observations
New Obs     Fit   SE Fit        95.0% CI           95.0% PI
1         20071     1030   (18020, 22122)   (14221, 25922)

Values of Predictors for New Observations
New Obs   First qu   Type of   inter   Room and
1             1000      1.00    1000       5000

MTB > Name c21 = 'RESI4'
MTB > Regress c6 5 c3 c2 c8 c5 c4;
SUBC> Residuals 'RESI4';
SUBC> Constant;
SUBC> VIF;
SUBC> Predict c9 c10 c11 c12 c13;
SUBC> Brief 2.

6) Regression Analysis: Annual Total versus First quarti, Type of Scho, ...
The regression equation is
Annual Total Cost = 5873 + 26.2 First quartile SAT - 4605 Type of School + 12.2 inter + 0.150 Room and Board - 17.0 Third quartile SAT

Predictor       Coef   SE Coef        T       P     VIF
Constant        5873      8515     0.69   0.493
First qu       26.23     17.18     1.53   0.131    59.2
Type of        -4605      6502    -0.71   0.481   104.5
inter         12.162     6.096     2.00   0.050   127.7
Room and      0.1503    0.3070     0.49   0.626     1.9
Third qu      -17.01     18.81    -0.90   0.369    58.0

S = 2754   R-Sq = 84.2%   R-Sq(adj) = 83.2%

Analysis of Variance
Source            DF           SS          MS       F       P
Regression         5   2998718897   599743779   79.10   0.000
Residual Error    74    561087507     7582264
Total             79   3559806404

Source      DF       Seq SS
First qu     1   1926306635
Type of      1   1037006989
inter        1     26995957
Room and     1      2208452
Third qu     1      6200863

Unusual Observations
Obs   First qu   Annual T     Fit   SE Fit   Residual   St Resid
  3        800       9476    9606     1323       -130     -0.05 X
  9       1250      13986   15381     1331      -1395     -0.58 X
 27       1040      21484   13192      642       8292      3.10R
 61       1320      17526   27217      789      -9691     -3.67R
R denotes an observation with a large standardized residual
X denotes an observation whose X value gives it large influence.

Predicted Values for New Observations
New Obs     Fit   SE Fit        95.0% CI           95.0% PI
1         20002     1034   (17942, 22061)   (14141, 25862)

Values of Predictors for New Observations
New Obs   First qu   Type of   inter   Room and   Third qu
1             1000      1.00    1000       5000       1200

MTB > Name c22 = 'RESI5'
MTB > Regress c6 2 c3 c5 ;
SUBC> Residuals 'RESI5';
SUBC> Constant;
SUBC> VIF;
SUBC> Predict c9 c12 ;
SUBC> Brief 2.
7) Regression Analysis: Annual Total versus First quarti, Room and Boa

The regression equation is
Annual Total Cost = - 24258 + 27.9 First quartile SAT + 1.84 Room and Board

Predictor       Coef   SE Coef        T       P    VIF
Constant      -24258      3686    -6.58   0.000
First qu      27.927     3.532     7.91   0.000    1.2
Room and      1.8439    0.3534     5.22   0.000    1.2

S = 3959   R-Sq = 66.1%   R-Sq(adj) = 65.2%

Analysis of Variance
Source            DF           SS           MS       F       P
Regression         2   2352968982   1176484491   75.06   0.000
Residual Error    77   1206837422     15673213
Total             79   3559806404

Source      DF       Seq SS
First qu     1   1926306635
Room and     1    426662346

Unusual Observations
Obs   First qu   Annual T     Fit   SE Fit   Residual   St Resid
 14        920       7210   16752     1006      -9542     -2.49R
 16       1120       9451   18272      593      -8821     -2.25R
 41       1060      25865   14472      849      11393      2.95R
 53        900      17886   18758     1441       -872     -0.24 X
 61       1320      17526   26398      845      -8872     -2.29R
R denotes an observation with a large standardized residual
X denotes an observation whose X value gives it large influence.

Predicted Values for New Observations
New Obs     Fit   SE Fit        95.0% CI          95.0% PI
1         12889      820   (11255, 14522)   (4838, 20939)

Values of Predictors for New Observations
New Obs   First qu   Room and
1             1000       5000

Note: The assignment of the fourth computer problem was given as follows.

This problem is problem 14.37 in the 9th edition of the textbook. The data are on the next two pages. As far as I can see, the problem is identical to Problem 15.10 in the 8th edition, but you should use the 9th edition data, which can be downloaded from the website at http://courses.wcupa.edu/rbove/eco252/Colleges2002.MTP or the disk for the 9th edition. To download from the website, enter Minitab, use the file pull-down menu, pick 'open worksheet' and copy the URL into 'File name.' If you can, save the worksheet on the computer on which you are working. Read through the document 252soln K1 and use the analysis there to help you with this problem. In particular, Exercise 14.35 is almost identical to this problem and my description is very similar.
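Question (d) of Part I can be checked numerically from the ANOVA tables in printouts 3 and 6 above. The sketch below assumes the usual partial F statistic, F = [(SSE_reduced - SSE_full) / q] / MSE_full, with the residual sums of squares and degrees of freedom copied from those printouts; the function and variable names are illustrative only.

```python
def partial_f(sse_reduced, sse_full, df_full, q):
    """Partial F statistic for adding q predictors to a regression."""
    extra_ss = sse_reduced - sse_full   # SS explained by the added predictors
    mse_full = sse_full / df_full       # error mean square of the larger model
    return (extra_ss / q) / mse_full


# Residual Error SS from printout 3 (X1, X2; 77 df) and printout 6 (X1..X5; 74 df)
SSE_EQ3 = 596_492_779
SSE_EQ6 = 561_087_507

f_stat = partial_f(SSE_EQ3, SSE_EQ6, df_full=74, q=3)
print(f"F(3, 74) = {f_stat:.3f}")
```

The printed statistic is compared with the 5% point of F(3, 74) from the F table (roughly 2.7). A computed F below that value means X3, X4 and X5, taken as a package, do not significantly improve on equation 3.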
Once you get the data loaded, you need a column for the interaction variable and two columns for the input to the prediction interval. The problem says "Develop a model to predict the annual total cost based on SAT score and whether the school is public or private."

The assignment was amplified as follows.

Dec 5: I finally got around to running the last computer problem. I have suggested that you carefully and neatly write up a solution to problem 14.37, using my write-up to problems in that section as a model, and turn it in with your computer output and the exam. When I ran it I used the following allocation of columns:

Column   Variable   Description
C2       X2         Type: 1 = Private
C3       X1         First Quartile SAT
C4       X5         3rd Quartile SAT
C5       X4         Room and Board Cost
C6       Y          Annual Total Cost
C7       X6         Average Indebtedness at Graduation
C8       X3         Interaction = X1 * X2

I used the columns beyond column 8 for the data for the prediction interval; for example, I put 1000 in column 9. Your inputs for the interval should be in the same order that you name the predictors in the pull-down menu regression instruction. Of course I didn't actually use X6, which is part of a very different problem, though I did experiment with some of the other variables.

1) There was no reason why the majority of you concluded that Y, the dependent variable, was either X2 (Type: 1 = Private) or X1 (First Quartile SAT). Does it make sense to explain First Quartile SAT by how much the school costs?

2) To be able to explain the results or even do the problem, you have to have read some of the posted problem solutions. I don't see any evidence that many of you had read them.

Unfortunately, I do not have time to write the solution I wanted to see. The following comes from the text solution manual, but I hardly expected anything this complete.

14.37
(a) Ŷ = -7263.5561 + 19.5239 X1 + 8732.4175 X2, where X1 = first quartile SAT score and X2 = type of institution (public = 0, private = 1).
(b) Holding constant the effect of type of institution, for each point increase on the first quartile SAT, the total cost is estimated to increase on average by $19.52. For a given first quartile SAT score, a private college or university is estimated to have an average total cost of $8732.42 over a public institution.
(c) Ŷ = -7263.5561 + 19.5239(1000) + 8732.4175(0) = $12260.38
(d) [Figure: First quartile SAT residual plot (residuals vs. first quartile SAT)]
    [Figure: Normal probability plot (residuals vs. Z value)]
    Based on a residual analysis, the model shows departure from the homoscedasticity assumption caused by the first quartile SAT score. From the normal probability plot, the residuals appear to be normally distributed with the exception of the single outlier in each of the two tails.
(e) F = 191.26 > F(2,77) = 3.1154. Reject H0. There is evidence of a relationship between total cost and the two independent variables.
(f) For X1: t = 7.3654 > t(77) = 1.9913. Reject H0. First quartile SAT score makes a significant contribution and should be included in the model. For X2: t = 11.5700 > t(77) = 1.9913. Reject H0. Type of institution makes a significant contribution and should be included in the model. Based on these results, the regression model with the two independent variables should be used.
(g) 14.2456 ≤ β1 ≤ 24.8023, 7229.5242 ≤ β2 ≤ 10235.3109
(h) r²(Y.12) = 0.8324. 83.24% of the variation in total cost can be explained by variation in first quartile SAT score and variation in type of institution.
(i) r²(adj) = 0.8281
(j) r²(Y1.2) = 0.4133. Holding constant the effect of type of institution, 41.33% of the variation in total cost can be explained by variation in first quartile SAT score.
(k) r²(Y2.1) = 0.6348.
Holding constant the effect of first quartile SAT score, 63.48% of the variation in total cost can be explained by variation in type of institution.
The slope of total cost with first quartile SAT score is the same regardless of whether the institution is public or private.
(l) Ŷ = 1013.1354 + 11.3386 X1 - 3015.6623 X2 + 11.1768 X1X2.
(m) For X1X2: the p-value is 0.0615. Do not reject H0. There is not evidence that the interaction term makes a contribution to the model. The two-variable model in (a) should be used.

II. Do at least 4 of the following 6 Problems (at least 13 each) (or do sections adding to at least 50 points. Anything extra you do helps, and grades wrap around). Show your work! State H0 and H1 where applicable. Use a significance level of 5% unless noted otherwise. Do not answer questions without citing appropriate statistical tests. That is, explain your hypotheses and what values from what table were used to test them.

1. A marketing analyst collects data on the screen size and price of the two models produced by a competitor. Here 'price' is the price in dollars, 'size' is screen size in inches, 'model' is 1 for the deluxe model (zero for the regular model) and rx1 is the column in which you may rank x1.

Row     price   size  model      x1²   x2²        y²      x1y       x2y   x1x2    rx1
            y     x1     x2
  1    371.69     13      0      169     0    138153     4832      0.00      0      1
  2    403.61     19      0      361     0    162901     7669      0.00      0      3
  3    484.41     21      0      441     0    234653    10173      0.00      0
  4    492.89     17      1      289     1    242941     8379    492.89     17
  5    606.25     25      0      625     0    367539    15156      0.00      0
  6    634.41     21      1      441     1    402476    13323    634.41     21
  7    651.00    210      0    44100     0    423801   136710      0.00      0
  8    806.25     25      1      625     1    650039    20156    806.25     25
  9   1131.00    290      0    84100     0   1279161   327990      0.00      0    9.0
 10   1739.00    370      1   136900     1   3024121   643430   1739.00    370   10.0
Sum   7320.51   1011      4   268051     4   6925785  1187817   3672.55    433

a. Fill in the x1x2 column. (2) Most people did this and just about everyone got credit.
b. Compute the simple regression of price against size. (6)
c. Compute R squared and R squared adjusted for degrees of freedom. (3)
d. Compute the standard error s_e. (3)
e. Compute s_b1 and make it into a confidence interval for β1. (3)
f. Do a prediction interval for the price of a model with a 19 inch screen. (4)
21

Solution:
a) Fill in the x1x2 column. (2) Material is in red above.

b) Compute the simple regression of price against size.
From above n = 10, Σx1 = 1011, Σy = 7320.51, Σx1² = 268051, Σx1y = 1187817 and Σy² = 6925785. (In spite of the fact that most column computations were done for you, many of you wasted time and energy doing them over again. Then there were those who decided that, instead of the Σx1y that was computed for you, Σx1y = ??? = Σx1 Σy = 1011(7320.51). Anyone who did this should be sentenced to repeat ECO251.)

Spare Parts Computation:
x̄1 = Σx1/n = 1011/10 = 101.100
ȳ = Σy/n = 7320.51/10 = 732.051
SSx1 = Σx1² - n x̄1² = 268051 - 10(101.100)² = 165838.9 *
Sx1y = Σx1y - n x̄1 ȳ = 1187817 - 10(101.100)(732.051) = 447713.439
SSy = Σy² - n ȳ² = 6925785 - 10(732.051)² = 1566799 *
Note that the starred quantities are sums of squares and must be positive.

b1 = Sx1y/SSx1 = (Σx1y - n x̄1 ȳ)/(Σx1² - n x̄1²) = 447713.439/165838.9 = 2.6997
b0 = ȳ - b1 x̄1 = 732.051 - 2.6997(101.100) = 459.11
Ŷ = b0 + b1x becomes Ŷ = 459.11 + 2.700x.

c) Compute R squared and R squared adjusted for degrees of freedom. (3)
We already know that SST = SSy = Σy² - n ȳ² = 1566799 and that
SSR = b1 Sx1y = b1(Σx1y - n x̄1 ȳ) = 2.6997(447713.439) = 1208692, so
R² = SSR/SST = b1 Sx1y/SSy = 1208692/1566799 = .7714. (R² must be between zero and one!)
We could also try R² = (Sx1y)²/(SSx1 · SSy) = (447713.439)²/[(165838.9)(1566799)] = .7714, so that SSR = b1 Sx1y = R²(SST) = .7714(1566799) = 1208629.
R̄² = [(n - 1)R² - k]/(n - k - 1) = [9(0.7714) - 1]/8 = .7428. k is the number of independent variables. R squared adjusted for degrees of freedom must be below R squared.
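The spare-parts arithmetic in (b) and (c) can be reproduced in a few lines. This is a minimal sketch that starts from the column sums printed in the data table (n, Σx1, Σy, Σx1², Σx1y, Σy²); the variable names are illustrative and not part of the original solution.

```python
# Column sums from the data table for Problem 1
n = 10
sum_x, sum_y = 1011, 7320.51
sum_xx, sum_xy, sum_yy = 268051, 1187817, 6925785

# Spare parts
x_bar, y_bar = sum_x / n, sum_y / n
ss_x = sum_xx - n * x_bar ** 2        # SSx1, must be positive
s_xy = sum_xy - n * x_bar * y_bar     # Sx1y
ss_y = sum_yy - n * y_bar ** 2        # SSy = SST, must be positive

# Simple regression coefficients
b1 = s_xy / ss_x
b0 = y_bar - b1 * x_bar

# R-squared and R-squared adjusted for degrees of freedom (k = 1 predictor)
k = 1
r2 = b1 * s_xy / ss_y
r2_adj = ((n - 1) * r2 - k) / (n - k - 1)

print(f"b0 = {b0:.2f}, b1 = {b1:.4f}")
print(f"R-sq = {r2:.4f}, R-sq(adj) = {r2_adj:.4f}")
```

Working from the sums rather than the ten raw rows mirrors the hand method, so each intermediate value can be compared directly with the corresponding spare part.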
d) Compute the standard error s_e. (3)
s_e² = SSE/(n - k - 1) = 358107/8 = 44763 and s_e = √44763 = 211.57, where SSE = SST - SSR = 1566799 - 1208692 = 358107.

e) Compute s_b1 and make it into a confidence interval for β1. (3)
Using the formula from the outline,
s_b1² = s_e² [1/(ΣX1² - n X̄1²)] = s_e²/SSx1 = 44763/165838.9 = 0.2699, so s_b1 = √0.2699 = 0.5195.
t(n - k - 1; α/2) = t(8; .025) = 2.306
β1 = b1 ± t s_b1 = 2.6997 ± 2.306(0.5195) = 2.70 ± 1.20

f) Do a prediction interval for the price of a model with a 19 inch screen. (4)
21
From the outline, the Prediction Interval is Y0 = Ŷ0 ± t s_Y, where
s_Y² = s_e² [1/n + (X0 - X̄)²/(ΣX1² - n X̄1²) + 1].
In this formula, for some specific X0, Ŷ0 = b0 + b1X0. Here X0 = 19 and Ŷ = 459.11 + 2.700x, so Ŷ0 = 459.11 + 2.700(19) = 510.41, X̄ = 101.1 and n = 10. Then
s_Y² = s_e² [1/n + (X0 - X̄)²/SSx1 + 1] = 44763 [1/10 + (19 - 101.1)²/165838.9 + 1] = 44763(1.1 + .0406) = 51059
and s_Y = √51059 = 225.96, so that, if t(8; .025) = 2.306, the prediction interval is
Y0 = Ŷ0 ± t s_Y = 510.41 ± 2.306(225.96) = 510.41 ± 521.06.
This represents a confidence interval for a particular value that Y will take when x = 19, and it is proportionally rather gigantic because we have picked a point fairly far from the mean of the data that was actually experienced.

2. A marketing analyst collects data on the screen size and price of the two models produced by a competitor. Here 'price' is the price in dollars, 'size' is screen size in inches, 'model' is 1 for the deluxe model (zero for the regular model) and rx1 is the column in which you will rank x1.

Row     price   size  model      x1²   x2²        y²      x1y       x2y   x1x2    rx1
            y     x1     x2
  1    371.69     13      0      169     0    138153     4832      0.00      0      1
  2    403.61     19      0      361     0    162901     7669      0.00      0      3
  3    484.41     21      0      441     0    234653    10173      0.00      0
  4    492.89     17      1      289     1    242941     8379    492.89     17
  5    606.25     25      0      625     0    367539    15156      0.00      0
  6    634.41     21      1      441     1    402476    13323    634.41     21
  7    651.00    210      0    44100     0    423801   136710      0.00      0
  8    806.25     25      1      625     1    650039    20156    806.25     25
  9   1131.00    290      0    84100     0   1279161   327990      0.00      0    9.0
 10   1739.00    370      1   136900     1   3024121   643430   1739.00    370   10.0
Sum   7320.51   1011      4   268051     4   6925785  1187817   3672.55    433

a. Do a multiple regression of price against size and model. (10)
b. Compute R-squared and R-squared adjusted for degrees of freedom for this regression and compare them with the values for the previous problem. (4)
c. Using either R-squares or SST, SSR and SSE, do F tests (ANOVA). First check the usefulness of the simple regression and then the value of 'model' as an improvement to the regression. (6)
d. Predict the price of a deluxe model with a 19 inch screen. How much change is there from your last prediction? (2)

Solution:
a) Do a multiple regression of price against size and model. (10)
We have the following spare parts from the last problem.

Spare Parts Computation:
x̄1 = Σx1/n = 1011/10 = 101.100
ȳ = Σy/n = 7320.51/10 = 732.051
SSx1 = Σx1² - n x̄1² = 268051 - 10(101.100)² = 165838.9 *
Sx1y = Σx1y - n x̄1 ȳ = 1187817 - 10(101.100)(732.051) = 447713.439
SSy = Σy² - n ȳ² = 6925785 - 10(732.051)² = 1566799 *

And from above n = 10, Σx2 = 4, Σx2² = 4 *, ΣX2Y = 3672.55 and ΣX1X2 = 433.00, so that x̄2 = Σx2/n = 4/10 = 0.400. About half of you decided that ΣX2Y = ?? = ΣX2 ΣY. Perhaps you thought that I was crazy to do all these computations for you. Note that the starred quantities are sums of squares and must be positive.

We need
Sx2y = ΣX2Y - n X̄2 Ȳ = 3672.55 - 10(0.40)(732.051) = 744.346,
SSx2 = ΣX2² - n X̄2² = 4.00 - 10(0.4)² = 2.400 * and
Sx1x2 = ΣX1X2 - n X̄1 X̄2 = 433.00 - 10(101.1)(0.400) = 28.6.
* indicates quantities that must be positive.
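With the five spare parts in hand, the simplified normal equations Sx1y = SSx1·b1 + Sx1x2·b2 and Sx2y = Sx1x2·b1 + SSx2·b2 form a 2×2 linear system. The sketch below solves it by Cramer's rule as a cross-check on the elimination done by hand; the helper name `solve_2x2` is illustrative, and Cramer's rule is a substitute for, not a copy of, the elimination used in the written solution.

```python
def solve_2x2(a11, a12, a21, a22, c1, c2):
    """Solve a11*u + a12*v = c1 and a21*u + a22*v = c2 by Cramer's rule."""
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("normal equations are singular")
    u = (c1 * a22 - a12 * c2) / det
    v = (a11 * c2 - a21 * c1) / det
    return u, v


# Spare parts from the text
ss_x1, ss_x2, s_x1x2 = 165838.9, 2.4, 28.6
s_x1y, s_x2y = 447713.439, 744.346
x1_bar, x2_bar, y_bar = 101.1, 0.4, 732.051

b1, b2 = solve_2x2(ss_x1, s_x1x2, s_x1x2, ss_x2, s_x1y, s_x2y)
b0 = y_bar - b1 * x1_bar - b2 * x2_bar

print(f"b0 = {b0:.2f}, b1 = {b1:.4f}, b2 = {b2:.2f}")
```

The intercept should come out near 352.55, the value obtained in the hand solution, and b2 near 278.54.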
Then we substitute these numbers into the Simplified Normal Equations:
ΣX1Y - n X̄1 Ȳ = b1(ΣX1² - n X̄1²) + b2(ΣX1X2 - n X̄1 X̄2)
ΣX2Y - n X̄2 Ȳ = b1(ΣX1X2 - n X̄1 X̄2) + b2(ΣX2² - n X̄2²)
or Sx1y = SSx1 b1 + Sx1x2 b2 and Sx2y = Sx1x2 b1 + SSx2 b2, which become
447713.439 = 165838.9 b1 + 28.6 b2
   744.346 =     28.6 b1 +  2.4 b2
We solve the Normal Equations as two equations in two unknowns for b1 and b2. These are a fairly tough pair of equations to solve until we notice that, if we multiply 2.4 by 11.91667, we get 28.6. Multiplying the second equation by 11.91667 gives
447713.439 = 165838.9 b1 + 28.6 b2
  8870.000 =   340.82 b1 + 28.6 b2
If we subtract these, we get 438843 = 165498.1 b1. This means that b1 = 438843/165498.1 = 2.6517. Now remember that 744.346 = 28.6 b1 + 2.4 b2, and this means 744.346 = 28.6(2.6517) + 2.4 b2 or 2.4 b2 = 744.346 - 75.8386 = 668.5074. So b2 = 668.5074/2.4 = 278.54.
Finally we get b0 by solving b0 = Ȳ - b1 X̄1 - b2 X̄2 = 732.051 - 2.6517(101.1) - 278.54(0.4) = 352.55. Thus our equation is Ŷ = b0 + b1X1 + b2X2 = 352.55 + 2.6517 X1 + 278.54 X2.

b. Compute R-squared and R-squared adjusted for degrees of freedom for this regression and compare them with the values for the previous problem. (4)
On the previous pages R² = .7714 and R̄² = .7428. (R² must be between zero and one! R squared adjusted for degrees of freedom must be below R squared.)
(The way I did it) SSE = SST - SSR, and b1 = 2.6517, b2 = 278.54, Sx1y = 447713.439, Sx2y = 744.346, so
SST = SSy = 1566799 *
SSR = b1 Sx1y + b2 Sx2y = 2.6517(447713) + 278.54(744.346) = 1187201 + 207330 = 1394531 *
SSE = SST - SSR = 1566799 - 1394531 = 172268 *
Note that the starred quantities are sums of squares and must be positive.
R² = SSR/SST = 1394531/1566799 = 0.890. If we use R̄², which is R² adjusted for degrees of freedom, R̄² = [(n - 1)R² - k]/(n - k - 1) = [9(0.890) - 2]/7 = .859.
Both of these have risen, so it looks like we did well by adding the new independent variable.

c. Using either R-squares or SST, SSR and SSE, do F tests (ANOVA). First check the usefulness of the simple regression and then the value of 'model' as an improvement to the regression. (6)
For this regression, the ANOVA reads:

Source            DF        SS        MS       F    F.05
Regression         2   1394531    697266   28.33    F.05(2,7) = 4.74
Residual Error     7    172268     24610
Total              9   1566799

Since our computed F is larger than the table F, we reject the hypothesis that X and Y are unrelated.
For the previous regression we had SSR = b1 Sx1y = b1(Σx1y - n x̄1 ȳ) = 2.6997(447713.439) = 1208692.
For the previous regression, the ANOVA reads:

Source            DF        SS        MS       F    F.05
Regression         1   1208692   1208692   27.00    F.05(1,8) = 5.32
Residual Error     8    358107     44763
Total              9   1566799

Since our computed F is larger than the table F, we reject the hypothesis that X and Y are unrelated.
The change in the regression sum of squares is 1394531 - 1208692 = 185839, so we have

Source            DF        SS        MS       F    F.05
Size               1   1208692
Model              1    185839    185839   7.551    F.05(1,7) = 5.59
Residual Error     7    172268     24610
Total              9   1566799

Since our computed F is larger than the table F, we reject the hypothesis that Model does not contribute to the explanation of Y.
Recall that now R² = SSR/SST = 1394531/1566799 = 0.890 and before R² = .771. We can rewrite the analysis with R-squared as follows.

Source            DF   'SS'                  MS      F      F.05
Size               1   .771
Model              1   .890 - .771 = .119    .119    7.58   F.05(1,7) = 5.59
Residual Error     7   1 - .890 = .110       .0157
Total              9   1.000

This is identical with the previous ANOVA except for rounding error.

d. Predict the price of a deluxe model with a 19 inch screen. How much change is there from your last prediction? (2)
22
Our equation is Ŷ = b0 + b1X1 + b2X2 = 352.55 + 2.6517 X1 + 278.54 X2. So we have Ŷ = 352.55 + 2.6517(19) + 278.54(1) = 681.47. Our previous prediction was 510.41, about 25% less, which indicates that the new variable is making a difference.

3. A marketing analyst collects data on the screen size and price of the two models produced by a competitor.
Here 'price' is the price in dollars, 'size' is screen size in inches, 'model' is 1 for the deluxe model (zero for the regular model) and rx1 is the column in which you will rank x1.

Row     price   size  model      x1²   x2²        y²      x1y       x2y   x1x2    rx1
            y     x1     x2
  1    371.69     13      0      169     0    138153     4832      0.00      0    1
  2    403.61     19      0      361     0    162901     7669      0.00      0    3
  3    484.41     21      0      441     0    234653    10173      0.00      0    4.5
  4    492.89     17      1      289     1    242941     8379    492.89     17    2
  5    606.25     25      0      625     0    367539    15156      0.00      0    6.5
  6    634.41     21      1      441     1    402476    13323    634.41     21    4.5
  7    651.00    210      0    44100     0    423801   136710      0.00      0    8.0
  8    806.25     25      1      625     1    650039    20156    806.25     25    6.5
  9   1131.00    290      0    84100     0   1279161   327990      0.00      0    9.0
 10   1739.00    370      1   136900     1   3024121   643430   1739.00    370   10.0
Sum   7320.51   1011      4   268051     4   6925785  1187817   3672.55    433

a. Compute the correlation between price and size and check to see if it is significant, using the spare parts from problem 1 if you have them. (5)
b. Use the same correlation to test the hypothesis that the correlation is .85. (4)
c. Do ranks for the values of 'size' in the rx1 column, compute a rank correlation between price and size and test it for significance using the rank correlation table if possible. (5)
14

a) r = (ΣXY - n X̄ Ȳ)/√[(ΣX² - n X̄²)(ΣY² - n Ȳ²)], but the easiest way to compute it is to remember that R² = b1 Sx1y/SSy = SSR/SSy = 1208692/1566799 = .7714 and that the slope was positive, so that we can take the positive square root and get r = √.7714 = .8783. The outline says that if we want to test H0: ρxy = 0 against H1: ρxy ≠ 0 and x and y are normally distributed, we use
t(n - 2) = r/s_r = r/√[(1 - r²)/(n - 2)].
If we use this we get t = .8783/√[(1 - .7714)/8] = .8783/.1690 = 5.196, which agrees with √F = √27.00 from the simple regression ANOVA. Our rejection region is below -t(8; .025) = -2.306 and above t(8; .025) = 2.306. Since our computed value of t falls in the reject region, we reject the null hypothesis.

b) The outline says that if we are testing H0: ρxy = ρ0 against H1: ρxy ≠ ρ0, and ρ0 ≠ 0, the test is quite different. We need to use Fisher's z-transformation. Let z̃ = ½ ln[(1 + r)/(1 - r)]. This has an approximate mean of μz = ½ ln[(1 + ρ0)/(1 - ρ0)] and a standard deviation of s_z = √[1/(n - 3)], so that t(n - 2) = (z̃ - μz)/s_z. We know r = √.7714 = .8783 and ρ0 = .85. So
z̃ = ½ ln[(1 + r)/(1 - r)] = ½ ln(1.8783/.1217) = ½ ln(15.4338) = ½(2.7366) = 1.3683
s_z = √[1/(n - 3)] = √(1/7) = 0.3780
μz = ½ ln[(1 + ρ0)/(1 - ρ0)] = ½ ln(1.85/.15) = ½ ln(12.3333) = ½(2.5123) = 1.2562.
Finally t(8) = (z̃ - μz)/s_z = (1.3683 - 1.2562)/0.3780 = .2965. Our rejection region is below -t(8; .025) = -2.306 and above t(8; .025) = 2.306. Since our computed value of t does not fall in the reject region, we do not reject the null hypothesis.

c. Do ranks for the values of 'size' in the rx1 column, compute a rank correlation between price and size and test it for significance using the rank correlation table if possible. (5)
The ranking for size appears above, and the ranking of price is obvious. We now have the following columns.

Row   rprice   rsize      d     d²
  1        1     1.0    0.0   0.00
  2        2     3.0   -1.0   1.00
  3        3     4.5   -1.5   2.25
  4        4     2.0    2.0   4.00
  5        5     6.5   -1.5   2.25
  6        6     4.5    1.5   2.25
  7        7     8.0   -1.0   1.00
  8        8     6.5    1.5   2.25
  9        9     9.0    0.0   0.00
 10       10    10.0    0.0   0.00
Sum                     0.0  15.00

r_s = 1 - 6Σd²/[n(n² - 1)] = 1 - 6(15)/[10(10² - 1)] = 1 - 90/990 = 1 - .090909 = 0.9091. If we check the table 'Critical Values of r_s, the Spearman Rank Correlation Coefficient,' we find that the critical value for n = 10 and α = .05 is .5515. Since 0.9091 exceeds it, we must reject the null hypothesis of no rank correlation, and we conclude that the rankings agree.

4. Explain the following.
a. Under what circumstances could you use a Chi squared method to test for Normality but not a Kolmogorov-Smirnov? (2)
b. Under what circumstances could you use a Lilliefors test to test for Normality but not a Kolmogorov-Smirnov? (2)
c. Under what circumstances could you use a Kruskal-Wallis test to test whether four distributions are similar but not a one-way ANOVA? (2)
d. What 2 tests can be used to test for the equality of two medians? Which is more powerful? (2)
f.
A random sample of 21 Porsche drivers were asked how many miles they had driven in the last year and a frequency table was constructed of the data.

| Miles | Observed frequency |
|---|---|
| 0 - 4000 | 2 |
| 4000 - 8000 | 7 |
| 8000 - 12000 | 7 |
| Over 12000 | 5 |

Does the data follow a Normal distribution with a mean of 8000 and a standard deviation of 2000? Do not cut the number of groups below what is presented here. Find the appropriate $E$ or cumulative $E$ and do the test. (6)

Solution:
a. When the parameters of the distribution must be computed from the data.
b. When the mean and standard deviation had to be computed from the data.
c. When the distributions are not approximately Normal.
d. This was misstated and almost any answer that showed you knew how to do a test for 2 medians was accepted.
f.

| Miles | Values of $z$ | $O$ | $F_o$ | $F_e$ | $D = \lvert F_o - F_e \rvert$ |
|---|---|---|---|---|---|
| 0 - 4000 | -4.00 to -2.00 | 2 | .0952 | .5 - .4772 = .0228 | .0724 |
| 4000 - 8000 | -2.00 to 0 | 7 | .4286 | .5 | .0714 |
| 8000 - 12000 | 0 to 2.00 | 7 | .7619 | .9772 | .2153 |
| Over 12000 | 2.00 and up | 5 | 1.000 | 1.0000 | 0 |
| Total | | 21 | | | |

This is a K-S test where $F_e$ is the cumulative probability under the Normal distribution. The tabulated probabilities are $P(z \le -2)$, $P(z \le 0)$, $P(z \le 2)$ and $P(z \le \infty)$. According to the K-S table, the 5% critical value for $n = 21$ is .287. Since the maximum discrepancy, .2153, does not exceed the critical value, do not reject $H_0: N(8000, 2000)$.

5. (Ullman) A Latin Square is an extremely effective way of doing a 3-way ANOVA. In this example the data is arranged in 4 rows and 4 columns. There are 3 factors. Factor A is rows (machines), Factor B is columns (operators), and Factor C (materials) is shown by a tag C1, C2, C3 or C4. Each material appears once in each row or column. These are times to do a job categorized by machines, operators and cutting material. The rules are just the same as in any ANOVA: degrees of freedom add up and sums of squares add up. I am going to set this up as a 2-way ANOVA with one measurement per cell. There is no interaction.
We, of course, assume that the parent distribution is Normal.

| | B1 | B2 | B3 | B4 | Sum | $n_i$ | $\bar x_{i.}$ | SS $= \sum x_{ijk}^2$ | $\bar x_{i.}^2$ |
|---|---|---|---|---|---|---|---|---|---|
| A1 | 7 C1 | 4 C2 | 5 C3 | 3 C4 | 19 | 4 | 4.75 | 99 | |
| A2 | 6 C4 | 9 C1 | 4 C2 | 2 C3 | 21 | 4 | 5.25 | 137 | |
| A3 | 5 C3 | 1 C4 | 6 C1 | 1 C2 | 13 | 4 | 3.25 | 63 | |
| A4 | 6 C2 | 3 C3 | 4 C4 | 10 C1 | 23 | 4 | 5.75 | 161 | |
| Sum | 24 | 17 | 19 | 16 | 76 | 16 | ( ) | | |
| $n_j$ | 4 | 4 | 4 | 4 | 16 | | | | |
| $\bar x_{.j}$ | 6.00 | 4.25 | 4.75 | 4.00 | ( ) | | | | |
| SS $= \sum x_{ijk}^2$ | 146 | 107 | 93 | 114 | | | | | |
| $\bar x_{.j}^2$ | | | | | | | | | |

You now have a choice.

a) If you are a real wimp, you will pretend that each column is a random sample and compare the means of each operator. (5) The table will look like that below.

| Source | SS | DF | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Between | | | | | |
| Within | | | | | |
| Total | | | | | |

b) If you are less wimpy, you will pretend that this is a 2-way ANOVA and your table will look like that below. (8) A choice means you don’t get full credit for doing more than one of these!

| Source | SS | DF | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Rows A | | | | | |
| Columns B | | | | | |
| Within | | | | | |
| Total | | | | | |

c) If you are very daring, you will try the table below. (11) To do this you need to know that the means for the 4 materials are 8, 3.75, 3.75 and 3.50 and that the factor C sum of squares is $SSC = 4\sum \bar x_{..k}^2 - n\bar x^2 = 4\left(8^2 + 3.75^2 + 3.75^2 + 3.50^2\right) - 16(\quad)^2 = \,?$. I think that the degrees of freedom should be obvious. Please don’t make the same mistakes you made on the last exam! You have 3 null hypotheses. Tell me what they are and whether you reject them.

| Source | SS | DF | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Rows A | | | | | |
| Columns B | | | | | |
| Materials C | | | | | |
| Within | | | | | |
| Total | | | | | |

d) Assuming that your data is cross classified, compare the means of columns 1 and 4 using a 2-sample method. (3)
e) Assume that this is the equivalent of a 2-way one-measurement per cell ANOVA, but that the underlying distribution is not Normal and do an appropriate rank test. (5)

Solution: Let's start by playing ‘fill in the blanks.’ I am using the same format as was given in the 2-way ANOVA example with one measurement per cell. No SS can be negative, ever. And saying that an SS is zero implies that the variable it describes is a constant! We, of course, assume that the parent distribution is Normal.
| | B1 | B2 | B3 | B4 | Sum | $n_i$ | $\bar x_{i.}$ | SS $= \sum x_{ijk}^2$ | $\bar x_{i.}^2$ |
|---|---|---|---|---|---|---|---|---|---|
| A1 | 7 C1 | 4 C2 | 5 C3 | 3 C4 | 19 | 4 | 4.75 | 99 | 22.5625 |
| A2 | 6 C4 | 9 C1 | 4 C2 | 2 C3 | 21 | 4 | 5.25 | 137 | 27.5625 |
| A3 | 5 C3 | 1 C4 | 6 C1 | 1 C2 | 13 | 4 | 3.25 | 63 | 10.5625 |
| A4 | 6 C2 | 3 C3 | 4 C4 | 10 C1 | 23 | 4 | 5.75 | 161 | 33.0625 |
| Sum | 24 | 17 | 19 | 16 | 76 | 16 | (4.75) | 460 | 93.7500 |
| $n_j$ | 4 | 4 | 4 | 4 | 16 | | | | |
| $\bar x_{.j}$ | 6.00 | 4.25 | 4.75 | 4.00 | (4.75) | | | | |
| SS $= \sum x_{ijk}^2$ | 146 | 107 | 93 | 114 | 460 | | | | |
| $\bar x_{.j}^2$ | 36 | 18.0625 | 22.5625 | 16 | 92.625 | | | | |

Since most of this has been done for you, the only mystery is $\bar x = 4.75$, which should be found by dividing $\sum x = 76$ by $n = 16$. In this particular case, because the row sizes and the column sizes are equal, the overall mean can also be found by averaging the row means or averaging the column means. There are $R = 4$ rows, $C = 4$ columns and $K = 4$ materials. We have $\sum \bar x_{i.}^2 = 93.75$, $\sum x_{ij}^2 = 460$ and $\sum \bar x_{.j}^2 = 92.625$. All the formulas that we need to use end with $n\bar x^2 = 16(4.75)^2 = 361$.

So $SST = \sum x_{ij}^2 - n\bar x^2 = 460 - 361 = 99$.

If we use SSA for rows, we can use $SS(\text{Rows}) = SSA = R\sum \bar x_{i.}^2 - n\bar x^2 = 4(93.75) - 16(4.75)^2 = 375 - 361 = 14$.

If we use SSB for columns, we can use $SS(\text{Columns}) = SSB = C\sum \bar x_{.j}^2 - n\bar x^2 = 4(92.625) - 16(4.75)^2 = 370.5 - 361 = 9.5$. This is SSB in a one-way ANOVA.

Finally, I gave you $SSC = K\sum \bar x_{..k}^2 - n\bar x^2 = 4\left(8^2 + 3.75^2 + 3.75^2 + 3.50^2\right) - 16(4.75)^2 = 4(104.375) - 361 = 417.5 - 361 = 56.5$.

Because there are 4 items in each of the sums of squares, the degrees of freedom for each is 3. The total degrees of freedom are $n - 1 = 16 - 1 = 15$. If we remember that sums of squares and degrees of freedom must add up, that MS is SS divided by DF and that F is MS divided by the Within MS, we get the following tables.

a) If you are a real wimp, you will pretend that each column is a random sample and compare the means of each operator. (5) The table will look like that below. $H_0$: Column means are equal. We do not reject this hypothesis because our computed F is less than the table F.
| Source | SS | DF | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Between | 9.5 | 3 | 3.167 | 0.425 ns | $F^{(3,12)}_{.05} = 3.49$ |
| Within | 89.5 | 12 | 7.458 | | |
| Total | 99.0 | 15 | | | |

b) If you are less wimpy, you will pretend that this is a 2-way ANOVA and your table will look like that below. (8)
$H_{01}$: Row means are equal. We do not reject this hypothesis because our computed F is less than the table F.
$H_{02}$: Column means are equal. We do not reject this hypothesis because our computed F is less than the table F.

| Source | SS | DF | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Rows A | 14.0 | 3 | 4.667 | 0.556 ns | $F^{(3,9)}_{.05} = 3.86$ |
| Columns B | 9.5 | 3 | 3.167 | 0.377 ns | $F^{(3,9)}_{.05} = 3.86$ |
| Within | 75.5 | 9 | 8.389 | | |
| Total | 99 | 15 | | | |

c) If you are very daring, you will try the table below. (11) To do this you need to know that the means for the 4 materials are 8, 3.75, 3.75 and 3.50 and that the factor C sum of squares is $SSC = 4\sum \bar x_{..k}^2 - n\bar x^2 = 4\left(8^2 + 3.75^2 + 3.75^2 + 3.50^2\right) - 16(\quad)^2 = \,?$. I think that the degrees of freedom should be obvious. You have 3 null hypotheses. Tell me what they are and whether you reject them.
$H_{01}$: Row means are equal. We do not reject this hypothesis because our computed F is less than the table F.
$H_{02}$: Column means are equal. We do not reject this hypothesis because our computed F is less than the table F.
$H_{03}$: Material means are equal. We reject this hypothesis because our computed F is larger than the table F.

| Source | SS | DF | MS | F | $F_{.05}$ |
|---|---|---|---|---|---|
| Rows A | 14.0 | 3 | 4.6667 | 1.474 ns | $F^{(3,6)}_{.05} = 4.76$ |
| Columns B | 9.5 | 3 | 3.1667 | 1 ns | $F^{(3,6)}_{.05} = 4.76$ |
| Materials C | 56.5 | 3 | 18.833 | 5.947 s | $F^{(3,6)}_{.05} = 4.76$ |
| Within | 19.0 | 6 | 3.1667 | | |
| Total | 99 | 15 | | | |

d) Assuming that your data is cross classified, compare the means of columns 1 and 4 using a 2-sample method. (3)
Solution: This is an easy one. Let $d = x_1 - x_4$.

| | $x_1$ | $x_4$ | $d$ | $d^2$ |
|---|---|---|---|---|
| A1 | 7 | 3 | 4 | 16 |
| A2 | 6 | 2 | 4 | 16 |
| A3 | 5 | 1 | 4 | 16 |
| A4 | 6 | 10 | -4 | 16 |
| Sum | 24 | 16 | 8 | 64 |

From the document 252solnD2 we have the following. To do this problem we do not need statistics on $x_1$ or $x_4$, but only on the difference, $d = x_1 - x_4$, which is displayed above. You should be able to compute $n = 4$, $\sum d = 8$ and $\sum d^2 = 64$. So we have $\bar d = \dfrac{\sum d}{n} = \dfrac{8}{4} = 2$ and $s_d^2 = \dfrac{\sum d^2 - n\bar d^2}{n-1} = \dfrac{64 - 4(2)^2}{3} = 16$, which gives $s_d = 4$ and $s_{\bar d} = \dfrac{s_d}{\sqrt n} = \dfrac{4}{\sqrt 4} = 2$.

If the paired data problem were on the formula table, it would appear as below.

| Interval for | Confidence Interval | Hypotheses | Test Ratio | Critical Value |
|---|---|---|---|---|
| Difference between Two Means (paired data) | $D = \bar d \pm t_{\alpha/2}\, s_{\bar d}$, where $d = x_1 - x_2$ and $s_{\bar d} = s_d/\sqrt n$ | $H_0: D = D_0$ *, $H_1: D \ne D_0$, with $D = \mu_1 - \mu_2$ | $t = \dfrac{\bar d - D_0}{s_{\bar d}}$ | $\bar d_{cv} = D_0 \pm t_{\alpha/2}\, s_{\bar d}$ |

\* Same as $H_0: \mu_1 = \mu_2$, $H_1: \mu_1 \ne \mu_2$ if $D_0 = 0$.

We need $t^{(n-1)}_{\alpha/2} = t^{(3)}_{.025} = 3.182$. We can do one of the following.

(i) Confidence interval: $D = \bar d \pm t_{\alpha/2}\, s_{\bar d} = 2 \pm 3.182(2) = 2 \pm 6.364$. Because this interval includes zero, we do not reject our null hypothesis and cannot conclude that there is a significant difference between the means of the populations from which the two data columns come.

(ii) Test ratio: $t = \dfrac{\bar d - D_0}{s_{\bar d}} = \dfrac{2 - 0}{2} = 1.00$. Our reject zone is below -3.182 and above 3.182. Since the t-ratio falls between these values, we do not reject our null hypothesis and cannot conclude that there is a significant difference between the means of the populations from which the two data columns come.

(iii) Critical value: $\bar d_{cv} = D_0 \pm t_{\alpha/2}\, s_{\bar d} = 0 \pm 3.182(2) = \pm 6.364$. Since $\bar d = 2$ falls between these 2 limits, we do not reject our null hypothesis and cannot conclude that there is a significant difference between the means of the populations from which the two data columns come.

e) Assume that this is the equivalent of a 2-way one-measurement per cell ANOVA, but that the underlying distribution is not Normal and do an appropriate rank test. (5)
In general, if the parent distribution is Normal use ANOVA; if it's not Normal, use Friedman or Kruskal-Wallis. If the samples are independent random samples use 1-way ANOVA or Kruskal-Wallis. If they are cross-classified, use Friedman or 2-way ANOVA.
So the other method that allows for cross-classification is Friedman and we use it if the underlying distribution is not Normal. The null hypothesis is $H_0$: Columns from same distribution or $H_0: \eta_1 = \eta_2 = \eta_3 = \eta_4$. We use a Friedman test because the data is cross-classified by row (machine). This time we rank our data only within rows. There are $c = 4$ columns and $r = 4$ rows.

| Row | $x_1$ | $x_2$ | $x_3$ | $x_4$ | $r_1$ | $r_2$ | $r_3$ | $r_4$ |
|---|---|---|---|---|---|---|---|---|
| 1 | 7 | 4 | 5 | 3 | 4 | 2 | 3 | 1 |
| 2 | 6 | 9 | 4 | 2 | 3 | 4 | 2 | 1 |
| 3 | 5 | 1 | 6 | 1 | 3 | 1.5 | 4 | 1.5 |
| 4 | 6 | 3 | 4 | 10 | 3 | 1 | 2 | 4 |
| Sum | | | | | 13 | 8.5 | 11 | 7.5 |

To check the ranking, note that the sum of the four rank sums is 13 + 8.5 + 11 + 7.5 = 40, and that the sum of the rank sums should be $\sum SR_i = \dfrac{rc(c+1)}{2} = \dfrac{4(4)(5)}{2} = 40$.

Now compute the Friedman statistic
$\chi^2_F = \dfrac{12}{rc(c+1)}\sum_i SR_i^2 - 3r(c+1) = \dfrac{12}{4(4)(5)}\left(13^2 + 8.5^2 + 11^2 + 7.5^2\right) - 3(4)(5) = 0.15(169 + 72.25 + 121 + 56.25) - 60 = 0.15(418.5) - 60 = 2.775$.

If we check the Friedman Table for $c = 4$ and $r = 4$, we find that the p-value is between .508 (for 2.7) and .432 (for 3). Since 2.775 lies between 2.7 and 3, the p-value must be above 5% and we do not reject the null hypothesis. Alternately, since the table says that 7.5 has a p-value of .052 and 7.8 has a p-value of .036, the 5% critical value must be slightly above 7.5. Since 2.775 is well below the critical value, do not reject the null hypothesis.

6. a. A stock moves up and down as follows. In 36 days it goes up 14 times and down 22 times.
UDDDDUUUDUDDDUUDDDDUDDDUUDDDUDUUDDDU
(i) Test these movements for randomness. (5)
(ii) Take the first half of the series and test it for randomness (and don’t repeat exactly what you did in part (i)). (4)
b. Explain, briefly, why I did not bother with a Durbin-Watson test in the regression that began the exam. (2)
c. Test the hypothesis that the population the D’s and U’s above came from is evenly split between D’s and U’s. (4)

Solution: a) (i) The series breaks into the following runs.

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| U | DDDD | UUU | D | U | DDD | UU | DDDD | U | DDD | UU | DDD | U | D | UU | DDD | U |
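$n = 36$, $n_1 = 14$, $n_2 = 22$, $r = 17$. The outline says that for a larger problem (if $n_1$ and $n_2$ are too large for the table), $r$ follows the normal distribution with $\mu = \dfrac{2n_1 n_2}{n} + 1 = \dfrac{2(14)(22)}{36} + 1 = 18.111$ and $\sigma^2 = \dfrac{(\mu - 1)(\mu - 2)}{n - 1} = \dfrac{(17.111)(16.111)}{35} = 7.877$. So $z = \dfrac{r - \mu}{\sigma} = \dfrac{17 - 18.111}{\sqrt{7.877}} = -0.396$. Since this value of $z$ is between $\pm z_{.025} = \pm 1.960$, we do not reject $H_0$: Randomness.

(ii) For half the series, $n = 18$, $n_1 = 7$, $n_2 = 11$, $r = 8$. The Runs Test table says that the critical values are 5 and 14. Since 8 is between these numbers, we do not reject the null hypothesis.

b. The Durbin-Watson is a test for serial correlation. It is useful in problems that have a time dimension, which this problem does not have.

c. We are testing that $p = .5$, where $p$ is the proportion of D’s in the population underlying the sample. $x = 22$, $n = 36$ and $\bar p = \dfrac{22}{36} = 0.61$. Our table has the following.

| Interval for | Confidence Interval | Hypotheses | Test Ratio | Critical Value |
|---|---|---|---|---|
| Proportion | $p = \bar p \pm z_{\alpha/2}\, s_{\bar p}$, where $s_{\bar p} = \sqrt{\dfrac{\bar p \bar q}{n}}$ and $\bar q = 1 - \bar p$ | $H_0: p = p_0$, $H_1: p \ne p_0$ | $z = \dfrac{\bar p - p_0}{\sigma_{\bar p}}$ | $\bar p_{cv} = p_0 \pm z_{\alpha/2}\, \sigma_{\bar p}$, where $\sigma_{\bar p} = \sqrt{\dfrac{p_0 q_0}{n}}$ and $q_0 = 1 - p_0$ |

For the test ratio or critical value method, $H_0: p = .5$ and $\sigma_{\bar p} = \sqrt{\dfrac{p_0 q_0}{n}} = \sqrt{\dfrac{.5(.5)}{36}} = \sqrt{.0069} = .08333$.

Critical value method: $\bar p_{cv} = p_0 \pm z_{\alpha/2}\, \sigma_{\bar p} = .5 \pm 1.960(.08333) = .5 \pm 0.16$, or .34 to .66. Since $\bar p = \dfrac{22}{36} = 0.61$ falls between these limits, we cannot reject the null hypothesis.

Test ratio method: $z = \dfrac{\bar p - p_0}{\sigma_{\bar p}} = \dfrac{.61 - .5}{.08333} = 1.32$. Critical values for $z$ are $\pm 1.96$. Since our computed $z$ falls between these limits, we cannot reject the null hypothesis.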
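The arithmetic in parts a(i) and c can be cross-checked with a short Python sketch. It assumes only the formulas quoted above (the normal approximation for the number of runs and the one-sample proportion test ratio); the function names `runs_test_z` and `proportion_z` are illustrative and do not come from the course outline.

```python
import math
from itertools import groupby


def runs_test_z(sequence):
    """Large-sample runs test: returns n1, n2, r, mu, variance and z."""
    n1 = sequence.count("U")              # number of up moves
    n2 = sequence.count("D")              # number of down moves
    n = n1 + n2
    r = sum(1 for _ in groupby(sequence))  # each group of equal letters is one run
    mu = 2 * n1 * n2 / n + 1
    var = (mu - 1) * (mu - 2) / (n - 1)
    z = (r - mu) / math.sqrt(var)
    return n1, n2, r, mu, var, z


def proportion_z(x, n, p0):
    """Test ratio for H0: p = p0, using sigma = sqrt(p0 * q0 / n)."""
    p_bar = x / n
    sigma = math.sqrt(p0 * (1 - p0) / n)
    return p_bar, sigma, (p_bar - p0) / sigma


moves = "UDDDDUUUDUDDDUUDDDDUDDDUUDDDUDUUDDDU"

n1, n2, r, mu, var, z = runs_test_z(moves)
print(n1, n2, r, round(mu, 3), round(var, 3), round(z, 3))

p_bar, sigma, z_p = proportion_z(22, 36, 0.5)
print(round(p_bar, 4), round(sigma, 5), round(z_p, 3))
```

Because the sketch carries $\bar p = 22/36$ without rounding it to 0.61, its test ratio comes out near 1.33 rather than 1.32; either value lies inside $\pm 1.96$, so the conclusion is unchanged.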