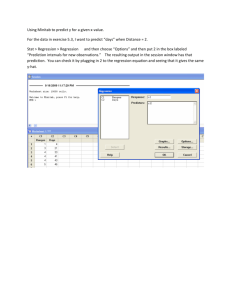

252solnK1 12/02/03 Problem 14.41 [14.35] (15.8)



Problem 14.41 [14.35] (15.8) continues.

[Figure: Histogram of the Residuals (response is Sales); x-axis: Residual, y-axis: Frequency]

Comment: This graph doesn’t really look bad. Given the relatively small sample size, it could be the result of a symmetrical distribution.

[Figure: Normal Probability Plot of the Residuals (response is Sales); x-axis: Residual, y-axis: Normal Score]

Comment: This seems to be fairly close to a straight line, indicating that the residuals have a distribution that is close to Normal.

Comment: These look fairly random, given that shelves seem to come only in lengths that are multiples of 5 and that Location is a dummy variable.

`MTB > Regress c2 3 c1 c3 c4;`
`SUBC> RType 1;`
`SUBC> Constant;`
`SUBC> VIF;`
`SUBC> Brief 3.`

Regression Analysis: Sales versus Space, Locatn, Inter

The regression equation is
Sales = 1.20 + 0.0820 Space + 0.750 Locatn - 0.0240 Inter

| Predictor | Coef | SE Coef | T | P | VIF |
|---|---|---|---|---|---|
| Constant | 1.2000 | 0.1840 | 6.52 | 0.000 | |
| Space | 0.08200 | 0.01344 | 6.10 | 0.000 | 1.5 |
| Locatn | 0.7500 | 0.3186 | 2.35 | 0.046 | 6.0 |
| Inter | -0.02400 | 0.02327 | -1.03 | 0.333 | 6.5 |

S = 0.2124, R-Sq = 88.0%, R-Sq(adj) = 83.5%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 3 | 2.64150 | 0.88050 | 19.51 | 0.000 |
| Residual Error | 8 | 0.36100 | 0.04513 | | |
| Total | 11 | 3.00250 | | | |

| Source | DF | Seq SS |
|---|---|---|
| Space | 1 | 2.05350 |
| Locatn | 1 | 0.54000 |
| Inter | 1 | 0.04800 |

Comment: Things don’t look so good in this regression. The VIFs for the last two variables are high, indicating collinearity. The p-value for the coefficient of the interaction term is very high, indicating that it is not significant at the 1%, 5% or 10% level.
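As a check on the printout, the interaction regression can be refit by hand. The following is a minimal sketch in plain Python, not the Minitab procedure itself. It uses the Space and Sales values from the printout’s observation table. The Locatn column is not listed anywhere in this document, so the 0/1 values here are an assumption, inferred from the fitted values. The helper names `solve` and `ols` are hypothetical.

```python
# Space and Sales come from the observation table in the printout.
# ASSUMPTION: Locatn is not shown in the document; these 0/1 values are
# inferred from the fitted values (1 = front of aisle).
space = [5, 5, 5, 10, 10, 10, 15, 15, 15, 20, 20, 20]
sales = [1.6, 2.2, 1.4, 1.9, 2.4, 2.6, 2.3, 2.7, 2.8, 2.6, 2.9, 3.1]
locatn = [0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1]
inter = [s * l for s, l in zip(space, locatn)]  # interaction = Space * Locatn


def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(predictors, y):
    """Least-squares coefficients (constant first) from X'X b = X'y."""
    cols = [[1.0] * len(y)] + [[float(v) for v in p] for p in predictors]
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(u * v for u, v in zip(ci, y)) for ci in cols]
    return solve(xtx, xty)


b = ols([space, locatn, inter], sales)
print([round(v, 4) for v in b])  # constant, Space, Locatn, Inter
```

Under the assumed Locatn values, the four coefficients should agree with the printed equation (1.20, 0.082, 0.75, -0.024) to rounding. Solving the normal equations directly is fine for a 12-row example like this one; a regression program would normally use a more numerically stable decomposition.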
| Obs | Space | Sales | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 1 | 5.0 | 1.6000 | 1.6100 | 0.1257 | -0.0100 | -0.06 |
| 2 | 5.0 | 2.2000 | 2.2400 | 0.1777 | -0.0400 | -0.34 |
| 3 | 5.0 | 1.4000 | 1.6100 | 0.1257 | -0.2100 | -1.23 |
| 4 | 10.0 | 1.9000 | 2.0200 | 0.0823 | -0.1200 | -0.61 |
| 5 | 10.0 | 2.4000 | 2.0200 | 0.0823 | 0.3800 | 1.94 |
| 6 | 10.0 | 2.6000 | 2.5300 | 0.1164 | 0.0700 | 0.39 |
| 7 | 15.0 | 2.3000 | 2.4300 | 0.0823 | -0.1300 | -0.66 |
| 8 | 15.0 | 2.7000 | 2.4300 | 0.0823 | 0.2700 | 1.38 |
| 9 | 15.0 | 2.8000 | 2.8200 | 0.1164 | -0.0200 | -0.11 |
| 10 | 20.0 | 2.6000 | 2.8400 | 0.1257 | -0.2400 | -1.40 |
| 11 | 20.0 | 2.9000 | 2.8400 | 0.1257 | 0.0600 | 0.35 |
| 12 | 20.0 | 3.1000 | 3.1100 | 0.1777 | -0.0100 | -0.09 |

`MTB > Stepwise c2 c1 c3 c4;`
`SUBC> AEnter 0.15;`
`SUBC> ARemove 0.15;`
`SUBC> Constant.`

Stepwise Regression: Sales versus Space, Locatn, Inter

Alpha-to-Enter: 0.15, Alpha-to-Remove: 0.15

Response is Sales on 3 predictors, with N = 12

| Step | 1 | 2 |
|---|---|---|
| Constant | 1.450 | 1.300 |
| Space | 0.074 | 0.074 |
| T-Value | 4.65 | 6.72 |
| P-Value | 0.001 | 0.000 |
| Locatn | | 0.45 |
| T-Value | | 3.45 |
| P-Value | | 0.007 |
| S | 0.308 | 0.213 |
| R-Sq | 68.39 | 86.38 |
| R-Sq(adj) | 65.23 | 83.35 |
| C-p | 13.0 | 3.1 |

`More? (Yes, No, Subcommand, or Help)`
`SUBC> yes`
`No variables entered or removed`
`More? (Yes, No, Subcommand, or Help)`
`SUBC> no`
`MTB >`

Comment: The stepwise regression confirms the results above. Minitab decides that ‘Space’ is the best single predictor and essentially redoes our first regression. It then brings in ‘Location’ and gets our second regression. But when it is told to go ahead and add a third independent variable, it doesn’t find enough explanatory power in ‘Inter’ to make it worth adding. Note that C-p is close to k + 1 in the second regression, indicating good results.

The following material is an edited version of the solution in the Instructor’s Solution Manual.

14.35 (a) $\hat{Y} = 1.30 + 0.074X_1 + 0.45X_2$, where $X_1$ = shelf space and $X_2$ = aisle location.

(b) Holding constant the effect of aisle location, for each additional foot of shelf space, sales are expected to increase on average by 0.074 hundreds of dollars, or \$7.40.
For a given amount of shelf space, a front-of-aisle location is expected to increase sales on average by 0.45 hundreds of dollars, or \$45.

(c) $\hat{Y} = 1.30 + 0.074(8) + 0.45(0) = 1.892$, or \$189.20. These intervals can only be done using Minitab or another regression program and appear in the printout: $1.3684 \le Y_{X=X_i} \le 2.4156$ and $1.6880 \le \mu_{Y|X=X_i} \le 2.0960$.

(d) Based on a residual analysis, the model appears adequate.

(e) $F = 28.53 > F_{.05}^{(2,9)} = 4.26$. Reject $H_0$ and say that there is evidence of a relationship between sales and the two independent variables.

(f) For $X_1$: $t = 6.72 > t_{.025}^{(9)} = 2.262$. Reject $H_0$ and say that shelf space makes a significant contribution and should be included in the model.

For $X_2$: $t = 3.45 > t_{.025}^{(9)} = 2.262$. Reject $H_0$ and say that aisle location makes a significant contribution and should be included in the model. Both variables should be kept in the model.

(g) Remember that our results were

The regression equation is
Sales = 1.30 + 0.0740 Space + 0.450 Locatn

| Predictor | Coef | SE Coef | T | P | VIF |
|---|---|---|---|---|---|
| Constant | 1.3000 | 0.1569 | 8.29 | 0.000 | |
| Space | 0.07400 | 0.01101 | 6.72 | 0.000 | 1.0 |
| Locatn | 0.4500 | 0.1305 | 3.45 | 0.007 | 1.0 |

Using the coefficient of space, $b_1 = 0.074$, $t_{\alpha/2}^{(n-k-1)} = t_{.025}^{(9)} = 2.262$, and $s_{b_1} = 0.01101$, you should be able to get $0.049 \le \beta_1 \le 0.099$. (Is this right? I haven’t checked so tell me.) By the same method, you should be able to get $0.155 \le \beta_2 \le 0.745$ for location.

(h) The slope here takes into account the effect of the other predictor variable, placement, while the solution for Problem 13.3 did not.

(i) $r^2_{Y.12} = 0.864$. So, 86.4% of the variation in sales can be explained by variation in shelf space and variation in aisle location.

(j) $r^2_{adj} = 0.834$

(k) $r^2_{Y.12} = 0.864$, while about six pages back, for the simple regression, $r^2_{Y.1} = 0.684$. The inclusion of the aisle-location variable has resulted in the increase.

(l) I’m a little bit too lazy to compute these things the way our author wants you to do it. There’s a formula at the end of 252corr that gets you these results much faster.
$$r^2_{Y1.2} = \frac{t_1^2}{t_1^2 + df} = \frac{6.72^2}{6.72^2 + 9} = \frac{45.1584}{54.1584} = .8338$$

Holding constant the effect of aisle location, 83.4% of the variation in sales can be explained by variation in shelf space.

$$r^2_{Y2.1} = \frac{t_2^2}{t_2^2 + df} = \frac{3.45^2}{3.45^2 + 9} = \frac{11.9025}{20.9025} = .5694$$

so $r^2_{Y2.1} = 0.569$. Holding constant the effect of shelf space, 56.9% of the variation in sales can be explained by variation in aisle location.

(m) The slope of sales with shelf space is the same regardless of whether the aisle location is front or back.

(n) From the last regression in the printout, $\hat{Y} = 1.20 + 0.082X_1 + 0.75X_2 - 0.024X_1X_2$. The t-ratio for the interaction term is -1.03, with a p-value of 0.333. Do not reject $H_0$. There is no evidence that the interaction term makes a contribution to the model.

(o) The two-variable model in (a) should be used.

Nonlinear regression

Exercise 15.1: The equation given is $\hat{Y} = 5 + 3X_1 + 1.5X_1^2$. $n = 25$, so $n - k - 1 = 25 - 2 - 1 = 22$.

(a) $\hat{Y} = 5 + 3X + 1.5X^2 = 5 + 3(2) + 1.5(2^2) = 17$

(b) $t = 2.35 > t_{.025}^{(22)} = 2.0739$ with 22 degrees of freedom. Reject $H_0$. The quadratic term is significant.

(c) $t = 1.17 < t_{.025}^{(22)} = 2.0739$ with 22 degrees of freedom. Do not reject $H_0$. The quadratic term is not significant.

(d) $\hat{Y} = 5 - 3X + 1.5X^2 = 5 - 3(2) + 1.5(2^2) = 5$

Exercise 15.6 (15.13 in 8th edition): The given equation is $\ln \hat{Y} = 3.07 + 0.9 \ln X_1 + 1.41 \ln X_2$.

(a) $\ln \hat{Y} = 3.07 + 0.9 \ln(8.5) + 1.41 \ln(5.2) = 7.32067$, so $\hat{Y} = e^{7.32067} = 1511.22$.

(b) Holding constant the effects of $X_2$, for each additional unit of the natural logarithm of $X_1$ the natural logarithm of Y is expected to increase on average by 0.9. Holding constant the effects of $X_1$, for each additional unit of the natural logarithm of $X_2$ the natural logarithm of Y is expected to increase on average by 1.41.

Exercise 15.7 (15.14 in 8th edition): The given equation is $\ln \hat{Y} = 4.62 + 0.5X_1 + 0.7X_2$.

(a) $\ln \hat{Y} = 4.62 + 0.5(8.5) + 0.7(5.2) = 12.51$, so $\hat{Y} = e^{12.51} = 271{,}034.12$.

(b) Holding constant the effects of $X_2$, for each additional unit of $X_1$ the natural logarithm of Y is expected to increase on average by 0.5.
Holding constant the effects of $X_1$, for each additional unit of $X_2$ the natural logarithm of Y is expected to increase on average by 0.7.

Note: To deal with the VIF, the following material has been added to 252corr together with an example.

A relatively recent method to check for collinearity is to use the Variance Inflation Factor

$$VIF_j = \frac{1}{1 - R_j^2}$$

Here $R_j^2$ is the coefficient of multiple determination (the squared multiple correlation) obtained by regressing the independent variable $X_j$ against all the other independent variables. The rule of thumb seems to be that we should be suspicious if any $VIF_j > 5$ and positively horrified if $VIF_j > 10$. If you get results like this, drop a variable or change your model. Note that, if you use a correlation matrix for your independent variables and see a large correlation between two of them, putting the square of that correlation into the VIF formula gives you a low estimate of the VIF, since the R-squared that you get from a regression against all the other independent variables will be higher.
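The VIF definition can be tried on the shelf-space data from Problem 14.41. This is a minimal sketch in plain Python, with each predictor regressed on the others and the resulting R-squared put into $1/(1 - R_j^2)$. The Locatn values are an assumption (the column is not printed in this document and was inferred from the fitted values), and the helper names `solve`, `r_squared` and `vif` are hypothetical.

```python
# Predictors of the interaction model in Problem 14.41.
# ASSUMPTION: Locatn is not printed in the document; these 0/1 values are
# inferred from the fitted values in the Minitab output.
space = [5.0, 5.0, 5.0, 10.0, 10.0, 10.0, 15.0, 15.0, 15.0, 20.0, 20.0, 20.0]
locatn = [0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0, 1.0]
inter = [s * l for s, l in zip(space, locatn)]


def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def r_squared(y, predictors):
    """R-squared from regressing y on the predictors plus a constant."""
    cols = [[1.0] * len(y)] + predictors
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(u * v for u, v in zip(ci, y)) for ci in cols]
    coef = solve(xtx, xty)
    fitted = [sum(c * col[i] for c, col in zip(coef, cols)) for i in range(len(y))]
    mean_y = sum(y) / len(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - sse / sst


def vif(predictors):
    """VIF_j = 1 / (1 - R_j^2), with X_j regressed on all other predictors."""
    result = []
    for j, xj in enumerate(predictors):
        others = [p for i, p in enumerate(predictors) if i != j]
        result.append(1.0 / (1.0 - r_squared(xj, others)))
    return result


vifs = vif([space, locatn, inter])
# Using only the pairwise correlation between Locatn and Inter instead:
pairwise = 1.0 / (1.0 - r_squared(locatn, [inter]))
print([round(v, 2) for v in vifs], round(pairwise, 2))
```

Under the assumed Locatn values, the three VIFs should match the Minitab column (1.5, 6.0, 6.5) to rounding. The pairwise figure for Locatn and Inter comes out smaller than either of their VIFs, which illustrates the closing remark that a squared pairwise correlation gives a low estimate.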