J. MULTIPLE REGRESSION
1. Two explanatory variables
a. Model


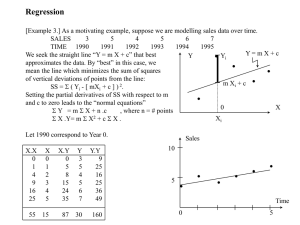

252mreg.doc 1/22/07 Roger Even Bove

Let us assume that we have two independent variables, so that $Y_j$ represents the $j$th observation on the dependent variable and $X_{ij}$ is the $j$th observation on independent variable $i$. For example, $X_{15}$ is the 5th observation on independent variable 1 and $X_{29}$ is the 9th observation on independent variable 2. We wish to estimate the coefficients $\beta_0$, $\beta_1$ and $\beta_2$ of the presumably 'true' regression line $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2$. Any actual point $Y_j$ may not be precisely on the regression line, so we write $Y_j = \beta_0 + \beta_1 X_{1j} + \beta_2 X_{2j} + \varepsilon_j$, where $\varepsilon_j$ is a random variable usually assumed to be $N(0, \sigma)$, and $\sigma$ is unknown but constant.

The line that we estimate will have the equation $\hat{Y} = b_0 + b_1 X_1 + b_2 X_2$. Our prediction of $Y$ for any specific $X_{1j}$ and $X_{2j}$ will be $\hat{Y}_j$, and since $\hat{Y}_j$ is unlikely to equal $Y_j$ exactly, we call $e_j = Y_j - \hat{Y}_j$ our error in estimating $Y_j$, so that $Y_j = b_0 + b_1 X_{1j} + b_2 X_{2j} + e_j$.

b. Solution

After computing a set of six "spare parts", put them together in a set of Simplified Normal Equations

$$\sum X_1Y - n\bar{X}_1\bar{Y} = b_1\left(\sum X_1^2 - n\bar{X}_1^2\right) + b_2\left(\sum X_1X_2 - n\bar{X}_1\bar{X}_2\right)$$
$$\sum X_2Y - n\bar{X}_2\bar{Y} = b_1\left(\sum X_1X_2 - n\bar{X}_1\bar{X}_2\right) + b_2\left(\sum X_2^2 - n\bar{X}_2^2\right)$$

and solve them as two equations in two unknowns for $b_1$ and $b_2$; then get $b_0$ by solving $b_0 = \bar{Y} - b_1\bar{X}_1 - b_2\bar{X}_2$.

c. Example

Recall our original example. $Y$ is the number of children actually born and $X$ is the number of children wanted. Add a new independent variable $W$, a dummy variable indicating the education of the wife ($W = 1$ if she has finished college, $W = 0$ if she has not). In the above equations, $X = X_1$ and $W = X_2$.

  i     Y    X    W    X^2   W^2   XW    XY   WY   Y^2
  1     0    0    1     0     1     0     0    0     0
  2     2    1    0     1     0     0     2    0     4
  3     1    2    1     4     1     2     2    1     1
  4     3    1    0     1     0     0     3    0     9
  5     1    0    0     0     0     0     0    0     1
  6     3    3    0     9     0     0     9    0     9
  7     4    4    0    16     0     0    16    0    16
  8     2    2    1     4     1     2     4    2     4
  9     1    2    1     4     1     2     2    1     1
 10     2    1    0     1     0     0     2    0     4
sum    19   16    4    40     4     6    40    4    49

So $\sum Y = 19$, $\sum X_1 = \sum X = 16$, $\sum X_2 = \sum W = 4$, $\sum X_1^2 = \sum X^2 = 40$, $\sum X_2^2 = \sum W^2 = 4$, $\sum X_1X_2 = \sum XW = 6$, $\sum X_1Y = \sum XY = 40$, $\sum X_2Y = \sum WY = 4$ and $\sum Y^2 = 49$.
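The column sums, means and six spare parts can be reproduced mechanically from the data table. A minimal Python sketch, assuming the data are typed in as three lists (the function name `spare_parts` and its dictionary keys are illustrative, not from the notes):

```python
# Data from the example table: Y = children born, X1 = X = children wanted,
# X2 = W = 1 if the wife finished college, 0 otherwise.
Y = [0, 2, 1, 3, 1, 3, 4, 2, 1, 2]
X1 = [0, 1, 2, 1, 0, 3, 4, 2, 2, 1]
X2 = [1, 0, 1, 0, 0, 0, 0, 1, 1, 0]


def spare_parts(y, x1, x2):
    """Return the means and the six 'spare parts' for two independent variables."""
    n = len(y)
    ybar, x1bar, x2bar = sum(y) / n, sum(x1) / n, sum(x2) / n

    def cross(a, b):
        # Sum of products, e.g. sum of X1*Y
        return sum(p * q for p, q in zip(a, b))

    return {
        "n": n,
        "ybar": ybar, "x1bar": x1bar, "x2bar": x2bar,
        "SSY": cross(y, y) - n * ybar ** 2,          # also SST
        "SX1Y": cross(x1, y) - n * x1bar * ybar,
        "SX2Y": cross(x2, y) - n * x2bar * ybar,
        "SSX1": cross(x1, x1) - n * x1bar ** 2,
        "SSX2": cross(x2, x2) - n * x2bar ** 2,
        "SX1X2": cross(x1, x2) - n * x1bar * x2bar,
    }


sp = spare_parts(Y, X1, X2)
for name in ("SSY", "SX1Y", "SX2Y", "SSX1", "SSX2", "SX1X2"):
    print(f"{name:6s} {sp[name]:8.2f}")
```

For this table it should print 12.90, 9.60, -3.60, 14.40, 2.40 and -0.40; the two spare parts involving $W$ and another variable come out negative.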
Copy sums: $n = 10$, together with the nine sums listed above. Then compute means: $\bar{Y} = \frac{\sum Y}{n} = \frac{19}{10} = 1.90$, $\bar{X}_1 = \bar{X} = \frac{16}{10} = 1.60$ and $\bar{X}_2 = \bar{W} = \frac{4}{10} = 0.40$.

Spare Parts:
$SST = SS_Y = \sum Y^2 - n\bar{Y}^2 = 49 - 10(1.90)^2 = 12.90$
$S_{X_1Y} = \sum X_1Y - n\bar{X}_1\bar{Y} = 40 - 10(1.60)(1.90) = 9.60$
$S_{X_2Y} = \sum X_2Y - n\bar{X}_2\bar{Y} = 4 - 10(0.40)(1.90) = -3.60$
$SS_{X_1} = \sum X_1^2 - n\bar{X}_1^2 = 40 - 10(1.60)^2 = 14.40$
$SS_{X_2} = \sum X_2^2 - n\bar{X}_2^2 = 4 - 10(0.40)^2 = 2.40$
$S_{X_1X_2} = \sum X_1X_2 - n\bar{X}_1\bar{X}_2 = 6 - 10(1.60)(0.40) = -0.40$

Note that $SS_Y$, $SS_{X_1}$ and $SS_{X_2}$ must be positive, while the other sums can be either positive or negative. Also note that $df = n - k - 1 = 10 - 2 - 1 = 7$ ($k$ is the number of independent variables). SST is used later.

Rewrite the Normal Equations to move the unknowns to the right:

$$\sum X_1Y - n\bar{X}_1\bar{Y} = \left(\sum X_1^2 - n\bar{X}_1^2\right)b_1 + \left(\sum X_1X_2 - n\bar{X}_1\bar{X}_2\right)b_2 \quad \text{(Eqn. 1)}$$
$$\sum X_2Y - n\bar{X}_2\bar{Y} = \left(\sum X_1X_2 - n\bar{X}_1\bar{X}_2\right)b_1 + \left(\sum X_2^2 - n\bar{X}_2^2\right)b_2 \quad \text{(Eqn. 2)}$$
$$\bar{Y} = b_0 + \bar{X}_1b_1 + \bar{X}_2b_2 \quad \text{(Eqn. 3)}$$

Or:

$$S_{X_1Y} = SS_{X_1}b_1 + S_{X_1X_2}b_2 \quad \text{(Eqn. 1)}$$
$$S_{X_2Y} = S_{X_1X_2}b_1 + SS_{X_2}b_2 \quad \text{(Eqn. 2)}$$
$$\bar{Y} = b_0 + \bar{X}_1b_1 + \bar{X}_2b_2 \quad \text{(Eqn. 3)}$$

If we fill in the above spare parts, we get:

$$9.60 = 14.40b_1 - 0.40b_2 \quad \text{(Eqn. 1)}$$
$$-3.60 = -0.40b_1 + 2.40b_2 \quad \text{(Eqn. 2)}$$
$$1.90 = b_0 + 1.60b_1 + 0.40b_2 \quad \text{(Eqn. 3)}$$

We solve the first two equations alone, by multiplying one of them so that the coefficients of $b_1$ or $b_2$ are of equal size. We then add or subtract the two equations to eliminate one of the variables. In this case, note that if we multiply Equation 1 by 6, the coefficients of $b_2$ in Equations 1 and 2 will be equal and opposite, so that, if we add them together, $b_2$ will be eliminated:

$$57.60 = 86.40b_1 - 2.40b_2 \quad (6 \times \text{Eqn. 1})$$
$$-3.60 = -0.40b_1 + 2.40b_2 \quad (\text{Eqn. 2})$$

Adding gives $54.00 = 86.00b_1$. But if $86.00b_1 = 54.00$, then $b_1 = \frac{54.00}{86.00} = 0.627907$.

Now, solve either Equation 1 or 2 for $b_2$. If we pick Equation 1, we can write it as $0.40b_2 = 14.40b_1 - 9.60$. We can solve this for $b_2$ by dividing through by 0.40, so that $b_2 = 36.0b_1 - 24.0$. If we substitute in $b_1 = 0.627907$, we find that $b_2 = 36.0(0.627907) - 24.0 = -1.3953$. Finally, rearrange Equation 3 to read $b_0 = 1.90 - 1.60b_1 - 0.40b_2 = 1.90 - 1.60(0.627907) - 0.40(-1.3953) = 1.4535$.
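Cramer's rule offers a compact alternative to the elimination above for two equations in two unknowns. A minimal sketch (the function name `solve_two_variable` is hypothetical), fed with this example's spare parts and means:

```python
def solve_two_variable(SX1Y, SX2Y, SSX1, SSX2, SX1X2, ybar, x1bar, x2bar):
    """Solve the Simplified Normal Equations
        SX1Y = SSX1 * b1 + SX1X2 * b2
        SX2Y = SX1X2 * b1 + SSX2 * b2
    by Cramer's rule, then recover b0 from the means."""
    det = SSX1 * SSX2 - SX1X2 ** 2
    if det == 0:
        raise ValueError("X1 and X2 are perfectly collinear; no unique solution")
    b1 = (SX1Y * SSX2 - SX2Y * SX1X2) / det
    b2 = (SSX1 * SX2Y - SX1X2 * SX1Y) / det
    b0 = ybar - b1 * x1bar - b2 * x2bar
    return b0, b1, b2


b0, b1, b2 = solve_two_variable(9.60, -3.60, 14.40, 2.40, -0.40, 1.90, 1.60, 0.40)
print(f"Yhat = {b0:.4f} + {b1:.4f} X1 + ({b2:.4f}) X2")
```

It should give $b_1 = 54/86 \approx 0.6279$, $b_2 \approx -1.3953$ and $b_0 \approx 1.4535$. The determinant check is a design choice: when $SS_{X_1}SS_{X_2} = S_{X_1X_2}^2$ the two independent variables are perfectly collinear and the equations have no unique solution.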
Now that we have values of all the coefficients, our regression equation, $\hat{Y} = b_0 + b_1X_1 + b_2X_2$, becomes $\hat{Y} = 1.4535 + 0.6279X_1 - 1.3953X_2$ or $Y = 1.4535 + 0.6279X_1 - 1.3953X_2 + e$.

2. Interpretation

3. Standard errors

Recall that in the example in J1, $SST = \sum Y^2 - n\bar{Y}^2 = SS_Y = 12.90$ and that we had computed Spare Parts: $S_{X_1Y} = 9.60$, $S_{X_2Y} = -3.60$, $SS_{X_1} = 14.40$, $SS_{X_2} = 2.40$ and $S_{X_1X_2} = -0.40$.

The explained or regression sum of squares is

$$SSR = b_1\left(\sum X_1Y - n\bar{X}_1\bar{Y}\right) + b_2\left(\sum X_2Y - n\bar{X}_2\bar{Y}\right) = b_1S_{X_1Y} + b_2S_{X_2Y}.$$

The error or residual sum of squares is $SSE = SST - SSR$. $k = 2$ is the number of independent variables. The coefficient of determination is

$$R^2 = \frac{SSR}{SST} = \frac{b_1S_{X_1Y} + b_2S_{X_2Y}}{SS_Y} = \frac{b_1\left(\sum X_1Y - n\bar{X}_1\bar{Y}\right) + b_2\left(\sum X_2Y - n\bar{X}_2\bar{Y}\right)}{\sum Y^2 - n\bar{Y}^2}.$$

An alternate formula, if spare parts are not available, is

$$R^2 = \frac{b_0\sum Y + b_1\sum X_1Y + b_2\sum X_2Y - n\bar{Y}^2}{\sum Y^2 - n\bar{Y}^2}.$$

Note also that $1 - R^2 = \frac{SSE}{SST}$. The standard error is given by

$$s_e^2 = \frac{SSE}{n-k-1} = \frac{SS_Y - b_1S_{X_1Y} - b_2S_{X_2Y}}{n-3}$$

or

$$s_e^2 = \frac{\sum Y^2 - n\bar{Y}^2 - b_1\left(\sum X_1Y - n\bar{X}_1\bar{Y}\right) - b_2\left(\sum X_2Y - n\bar{X}_2\bar{Y}\right)}{n-3}.$$

An alternate formula, if spare parts are not available, is

$$s_e^2 = \frac{\sum Y^2 - b_0\sum Y - b_1\sum X_1Y - b_2\sum X_2Y}{n-3}.$$

(Here $n - 3 = n - k - 1$ because $k = 2$.)

If we wish the coefficient of determination in the example in J1, recall that

$$SSR = b_1S_{X_1Y} + b_2S_{X_2Y} = 0.6279(9.60) + (-1.3953)(-3.60) = 6.0278 + 5.0231 = 11.0509,$$

so that

$$R^2 = \frac{SSR}{SST} = \frac{11.0509}{12.90} = .8567.$$

This represents a considerable improvement over the simple regression ($R^2 = .496$). Then

$$SSE = SST - SSR = SS_Y - b_1S_{X_1Y} - b_2S_{X_2Y} = 12.90 - 11.0509 = 1.8491.$$

Alternate computations for $s_e^2$ are

$$s_e^2 = \frac{SSE}{n-k-1} = \frac{1.8491}{10-3} = \frac{1.8491}{7} = 0.2642$$

or

$$s_e^2 = \frac{\left(\sum Y^2 - n\bar{Y}^2\right)\left(1-R^2\right)}{n-k-1} = \frac{SS_Y\left(1-R^2\right)}{n-3} = \frac{12.90(1-.8567)}{7} = 0.2641$$

or, if no spare parts are available,

$$s_e^2 = \frac{\sum Y^2 - b_0\sum Y - b_1\sum X_1Y - b_2\sum X_2Y}{n-3} = \frac{49 - 1.4535(19) - 0.6279(40) - (-1.3953)(4)}{7} = \frac{1.8487}{7} = 0.2641.$$

The small differences among these results come from rounding the coefficients.

We can use this to find an approximate confidence interval $\mu_{Y_0} = \hat{Y}_0 \pm t\frac{s_e}{\sqrt{n}}$ and an approximate prediction interval $Y_0 = \hat{Y}_0 \pm t s_e$. Remember $df = n - k - 1 = 10 - 2 - 1 = 7$.
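A sketch that recomputes SSR, SSE, $R^2$ and the three versions of $s_e^2$ from the spare parts and raw sums, carrying the coefficients at full precision so the three versions agree exactly. The new point $X_1 = 2$, $X_2 = 0$ is a hypothetical choice for illustrating the approximate intervals, and $t = 2.365$ is the tabled $t_{.025}$ with 7 degrees of freedom:

```python
import math

# Raw sums and spare parts for the children/education example (n = 10, k = 2).
n, k = 10, 2
sum_y, sum_y2, sum_x1y, sum_x2y = 19, 49, 40, 4
SSY, SX1Y, SX2Y = 12.90, 9.60, -3.60
SSX1, SSX2, SX1X2 = 14.40, 2.40, -0.40
ybar, x1bar, x2bar = 1.90, 1.60, 0.40

# Coefficients from the Simplified Normal Equations (Cramer's rule), unrounded.
det = SSX1 * SSX2 - SX1X2 ** 2
b1 = (SX1Y * SSX2 - SX2Y * SX1X2) / det
b2 = (SSX1 * SX2Y - SX1X2 * SX1Y) / det
b0 = ybar - b1 * x1bar - b2 * x2bar

SST = SSY
SSR = b1 * SX1Y + b2 * SX2Y        # explained (regression) sum of squares
SSE = SST - SSR                     # error (residual) sum of squares
R2 = SSR / SST
df = n - k - 1

# Three algebraically equivalent ways to get s_e^2.
se2_from_sse = SSE / df
se2_from_r2 = SSY * (1 - R2) / df
se2_raw = (sum_y2 - b0 * sum_y - b1 * sum_x1y - b2 * sum_x2y) / df

# Approximate intervals at a hypothetical new point (X1 = 2, X2 = 0).
t = 2.365                           # t_.025 with 7 df, from a t table
y0_hat = b0 + b1 * 2 + b2 * 0
s_e = math.sqrt(se2_from_sse)
conf_int = (y0_hat - t * s_e / math.sqrt(n), y0_hat + t * s_e / math.sqrt(n))
pred_int = (y0_hat - t * s_e, y0_hat + t * s_e)

print(f"SSR = {SSR:.4f}, SSE = {SSE:.4f}, R^2 = {R2:.4f}")
print(f"s_e^2 = {se2_from_sse:.4f}, {se2_from_r2:.4f}, {se2_raw:.4f}")
print(f"Yhat0 = {y0_hat:.4f}, CI = {conf_int}, PI = {pred_int}")
```

With unrounded coefficients, SSR comes out near 11.0512 and $s_e^2$ near 0.2641, in line with the computer output for this example; the prediction interval is always wider than the confidence interval because it omits the $\sqrt{n}$ divisor.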
To do significance tests or confidence intervals for the coefficients of the regression equation, we must either invert a matrix or use a computer output. To test $H_0: \beta_i = \beta_{i0}$ against $H_1: \beta_i \neq \beta_{i0}$, use $t_i = \frac{b_i - \beta_{i0}}{s_{b_i}}$. For example, part of the computer output for the current example reads:

Predictor     Coef     Stdev   t-ratio       p
Constant    1.4535    0.3086      4.71   0.000
X           0.6279    0.1357      4.63   0.000
W          -1.3953    0.3325     -4.20   0.004

To test the coefficient of $W$ for significance, use $t = \frac{b_2 - 0}{s_{b_2}} = \frac{-1.3953}{0.3325} = -4.196$. To do a test at the 5% significance level, compare this with $t_{.025}^{(n-k-1)}$. If the $t$ lies between $-t_{.025}^{(n-k-1)}$ and $t_{.025}^{(n-k-1)}$, the coefficient is not significant. Here $t_{.025}^{(7)} = 2.365$, so the coefficient of $W$ is significant.

Toward the end of the computer output we find:

Analysis of Variance
SOURCE        DF        SS        MS       F       p
Regression     2   11.0512    5.5256   20.92   0.001
Error          7    1.8488    0.2641
Total          9   12.9000

The first SS is the regression sum of squares $SSR = b_1\left(\sum X_1Y - n\bar{X}_1\bar{Y}\right) + b_2\left(\sum X_2Y - n\bar{X}_2\bar{Y}\right) = b_1S_{X_1Y} + b_2S_{X_2Y}$ and the third SS is the total sum of squares $SST = \sum Y^2 - n\bar{Y}^2 = SS_Y$, so that $R^2 = \frac{SSR}{SST}$. Of course, the second sum of squares is the error sum of squares $SSE = SST - SSR$. This means that

$$F^{(k,\,n-k-1)} = \frac{SSR/k}{SSE/(n-k-1)} = \frac{R^2/k}{\left(1-R^2\right)/(n-k-1)}.$$

In other words, we can substitute $R^2$ and $1 - R^2$ for SSR and SSE in the F-test and get the same results. (In this case $F = 20.92$ is larger than $F_{.05}^{(2,7)} = 4.74$, so we reject the null hypothesis of no relationship between the dependent variable and the independent variables.)

For a Minitab printout example of the multiple regression problem used here and in subsequent sections, see 252regrex2.

4. Stepwise regression

Note: In the material that follows we compare the simple regression and the regression with two independent variables with a third version of the regression that has three independent variables. The third independent variable is $X_3 = H$, a dummy variable that represents the husband's education, again 1 if the husband completed college and 0 if he did not.
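Both the overall F test above and the comparisons of nested regressions in this section need only $R^2$, $n$ and the number of variables. A minimal sketch of the overall F, $R^2$ adjusted for degrees of freedom, and the F statistic for adding $r$ variables to $k$; the function names are illustrative, and the $R^2$ values (.496, .857, .906) are the ones reported in this section's ANOVA tables:

```python
def adjusted_r2(r2, n, k):
    """R^2 adjusted for degrees of freedom: ((n - 1) R^2 - k) / (n - k - 1)."""
    return ((n - 1) * r2 - k) / (n - k - 1)


def overall_f(r2, n, k):
    """F with (k, n - k - 1) df for H0: no relationship, from R^2 alone."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


def added_variables_f(r2_k, r2_kr, n, k, r):
    """F with (r, n - k - r - 1) df for H0: the r added variables add nothing
    beyond the k already in use."""
    return (n - k - r - 1) * (r2_kr - r2_k) / (r * (1 - r2_kr))


n = 10
print(round(overall_f(0.8567, n, k=2), 2))
print([round(adjusted_r2(r2, n, k), 3) for r2, k in [(0.496, 1), (0.857, 2), (0.906, 3)]])
print(round(added_variables_f(0.496, 0.906, n, k=1, r=2), 2))
```

Setting $k = 0$ (so $R_k^2 = 0$) in `added_variables_f` reproduces `overall_f`, which is why the overall test is just the special case of asking whether all the variables together add anything.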
The ten observations now read:

Obs    Y    X    W    H
  1    0    0    1    1
  2    2    1    0    0
  3    1    2    1    1
  4    3    1    0    0
  5    1    0    0    0
  6    3    3    0    0
  7    4    4    0    0
  8    2    2    1    0
  9    1    2    1    1
 10    2    1    0    0

$R^2$ adjusted for degrees of freedom is $\bar{R}_k^2 = \frac{(n-1)R^2 - k}{n-k-1}$, where $k$ is the number of independent variables. From this we can get an F statistic to check the value of adding $r$ independent variables in addition to the $k$ already in use:

$$F^{(r,\,n-k-r-1)} = \frac{(n-k-1)\left(1-\bar{R}_k^2\right) - (n-k-r-1)\left(1-\bar{R}_{k+r}^2\right)}{r\left(1-\bar{R}_{k+r}^2\right)} = \frac{(n-k-r-1)\left(R_{k+r}^2 - R_k^2\right)}{r\left(1-R_{k+r}^2\right)}.$$

Though $R^2$ adjusted for degrees of freedom is useful as a preliminary way to see whether adding new independent variables is worthwhile, a trick using $R^2$ and a faked or real ANOVA table may be easier to follow. In the example we have been using, three ANOVA tables are generated: the first for one independent variable, the second for two independent variables and the third for three independent variables. Beneath the second two ANOVAs, the effect of adding the independent variables on SSR is given in sequence.

$k = 1$, $R^2 = .496$, $\bar{R}^2 = \frac{9(.496) - 1}{8} = .433$

Analysis of Variance
SOURCE        DF        SS        MS       F       p
Regression     1    6.4000    6.4000    7.88   0.023
Error          8    6.5000    0.8125
Total          9   12.9000

$k = 2$, $R^2 = .857$, $\bar{R}^2 = \frac{9(.857) - 2}{7} = .816$

Analysis of Variance
SOURCE        DF        SS        MS       F       p
Regression     2   11.0512    5.5256   20.92   0.001
Error          7    1.8488    0.2641
Total          9   12.9000

SOURCE   DF   SEQ SS
X         1   6.4000
W         1   4.6512

$k = 3$, $R^2 = .906$, $\bar{R}^2 = \frac{9(.906) - 3}{6} = .859$

Analysis of Variance
SOURCE        DF        SS        MS       F       p
Regression     3   11.6937    3.8979   19.39   0.002
Error          6    1.2063    0.2011
Total          9   12.9000

SOURCE   DF   SEQ SS
X         1   6.4000
W         1   4.6512
H         1   0.6425

If we wish to tell whether the addition of the second and third independent variables is an improvement over the regression with one independent variable, set $r = 2$ and $k = 1$ and compute

$$F^{(2,6)} = \frac{(10-1-1)(1-.433) - 6(1-.859)}{2(1-.859)} = \frac{4.536 - 0.846}{0.282} = 13.09$$

or

$$F^{(2,6)} = \frac{(10-1-2-1)(.906 - .496)}{2(1-.906)} = \frac{6(.410)}{2(.094)} = 13.09.$$

It may be easier to see what is happening if we copy the third ANOVA, but split the regression sum of squares between
the first independent variable and the last two, using the regression sum of squares in the first regression or the itemization in the third.

SOURCE        DF        SS         MS         F
Regression     3   11.6937
  X            1    6.4000
  W, H         2    5.2937    2.64685   13.1619
Error          6    1.2063    0.2011
Total          9   12.9000

The F-test here would be done with 2 and 6 degrees of freedom, and could as easily be done with .410 in place of 5.2937 and .094 in place of 1.2063, as is done in the equation immediately above this ANOVA. The difference in the value of F is due to rounding. In any case the table value of $F_{.05}^{(r,\,n-k-r-1)} = F_{.05}^{(2,6)}$ is 5.14, so we reject the null hypothesis that $W$ and $H$ do not add to the explanatory power of the regression on $X$ alone.

Appendix to J1 – Derivation of the regression equations.

Ignore this appendix unless you have had calculus.

As we did with simple regression, we want to pick values of $b_0$, $b_1$ and $b_2$ that minimize the sum of squares, $SS = \sum e^2$. To do so we use a technique involving partial derivatives. A partial derivative is simply a derivative of a function of several variables with respect to one variable, taken while presuming that the other variables are constants. For example, remember that if $a$ is a constant and $x$ is a variable, $\frac{d}{dx}(ax) = a$, $\frac{d}{dx}(ax^2) = 2ax$ and $\frac{d}{dx}(ax^3) = 3ax^2$. (Also recall that if $v$ is a function of $x$ and $f(v)$ is a function of $v$, then $\frac{d}{dx}f(v) = \frac{df}{dv}\frac{dv}{dx}$.) Remembering this and using the symbol $\partial$ for a partial derivative, we can find the partial derivatives of $f(x, y, z) = xy^2z^3$: $\frac{\partial}{\partial x}\left(xy^2z^3\right) = y^2z^3$ (remember, $x$ is the variable, but $y^2z^3$ is treated as a constant). Similarly, $\frac{\partial}{\partial y}\left(xy^2z^3\right) = 2xyz^3$ (this time $y$ is the variable and $xz^3$ is treated as a constant) and $\frac{\partial}{\partial z}\left(xy^2z^3\right) = 3xy^2z^2$. Just as with regular derivatives, at a minimum the partial derivatives must all equal zero.
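The three partial derivatives of $f(x, y, z) = xy^2z^3$ can be checked numerically by nudging one variable while holding the other two fixed, which is exactly what "treated as a constant" means. A sketch using central differences; the evaluation point $(2, 1.5, 0.5)$ and the step size are arbitrary choices:

```python
def f(x, y, z):
    return x * y ** 2 * z ** 3


def partial(func, point, i, h=1e-5):
    """Central-difference estimate of the partial derivative of func with
    respect to argument i, holding the other arguments constant."""
    up, down = list(point), list(point)
    up[i] += h
    down[i] -= h
    return (func(*up) - func(*down)) / (2 * h)


x, y, z = 2.0, 1.5, 0.5                       # arbitrary evaluation point
analytic = (y ** 2 * z ** 3,                  # df/dx
            2 * x * y * z ** 3,               # df/dy
            3 * x * y ** 2 * z ** 2)          # df/dz
numeric = tuple(partial(f, (x, y, z), i) for i in range(3))
for a, b in zip(analytic, numeric):
    print(f"{a:.6f}  {b:.6f}")
```

Each row should show the analytic and numerical values agreeing to about six decimal places.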
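If we take partial derivatives of the sum of squares with respect to our unknowns, $b_0$, $b_1$ and $b_2$, and set them equal to zero, we find, for $SS = \sum_j e_j^2 = \sum_j \left(Y_j - b_0 - b_1X_{1j} - b_2X_{2j}\right)^2$,

$$\frac{\partial SS}{\partial b_0} = 2\sum_j\left(Y_j - b_0 - b_1X_{1j} - b_2X_{2j}\right)(-1) = 0$$
$$\frac{\partial SS}{\partial b_1} = 2\sum_j\left(Y_j - b_0 - b_1X_{1j} - b_2X_{2j}\right)(-X_{1j}) = 0$$
$$\frac{\partial SS}{\partial b_2} = 2\sum_j\left(Y_j - b_0 - b_1X_{1j} - b_2X_{2j}\right)(-X_{2j}) = 0$$

If we multiply through by the term in parentheses on the right ($-1$, $-X_{1j}$ or $-X_{2j}$) and divide by two, these equations, called the Normal Equations, become:

$$\sum Y = nb_0 + b_1\sum X_1 + b_2\sum X_2$$
$$\sum X_1Y = b_0\sum X_1 + b_1\sum X_1^2 + b_2\sum X_1X_2$$
$$\sum X_2Y = b_0\sum X_2 + b_1\sum X_1X_2 + b_2\sum X_2^2$$

Sums such as $\sum Y$, $\sum X_1$, $\sum X_2$, $\sum X_1Y$, $\sum X_2Y$, $\sum X_1^2$, $\sum X_2^2$ and $\sum X_1X_2$ are easy to compute, but we are still left with three simultaneous equations in three unknowns, $b_0$, $b_1$ and $b_2$. Fortunately, we can use the first of the three Normal Equations to reduce this system to two equations in two unknowns. If we divide this first equation, $\sum Y = nb_0 + b_1\sum X_1 + b_2\sum X_2$, by $n$, we get $\bar{Y} = b_0 + b_1\bar{X}_1 + b_2\bar{X}_2$.

If we multiply this equation by $n\bar{X}_1$ and subtract it from the second Normal Equation, $\sum X_1Y = b_0\sum X_1 + b_1\sum X_1^2 + b_2\sum X_1X_2$, then the second Normal Equation becomes:

$$\sum X_1Y - n\bar{X}_1\bar{Y} = b_1\left(\sum X_1^2 - n\bar{X}_1^2\right) + b_2\left(\sum X_1X_2 - n\bar{X}_1\bar{X}_2\right).$$

Similarly, if we multiply the equation for $\bar{Y}$ by $n\bar{X}_2$ and subtract it from the third Normal Equation, $\sum X_2Y = b_0\sum X_2 + b_1\sum X_1X_2 + b_2\sum X_2^2$, the third Normal Equation becomes:

$$\sum X_2Y - n\bar{X}_2\bar{Y} = b_1\left(\sum X_1X_2 - n\bar{X}_1\bar{X}_2\right) + b_2\left(\sum X_2^2 - n\bar{X}_2^2\right).$$

Thus our procedure is: first, to compute $\bar{Y}$, $\bar{X}_1$ and $\bar{X}_2$; second, to compute $\sum X_1Y$, $\sum X_2Y$, $\sum X_1^2$, $\sum X_2^2$ and $\sum X_1X_2$; third, to compute our spare parts, $\sum X_1Y - n\bar{X}_1\bar{Y}$, $\sum X_2Y - n\bar{X}_2\bar{Y}$, $\sum X_1^2 - n\bar{X}_1^2$, $\sum X_2^2 - n\bar{X}_2^2$ and $\sum X_1X_2 - n\bar{X}_1\bar{X}_2$; fourth, to substitute these numbers into the Simplified Normal Equations:

$$\sum X_1Y - n\bar{X}_1\bar{Y} = b_1\left(\sum X_1^2 - n\bar{X}_1^2\right) + b_2\left(\sum X_1X_2 - n\bar{X}_1\bar{X}_2\right)$$
$$\sum X_2Y - n\bar{X}_2\bar{Y} = b_1\left(\sum X_1X_2 - n\bar{X}_1\bar{X}_2\right) + b_2\left(\sum X_2^2 - n\bar{X}_2^2\right)$$

and solve them as two equations in two unknowns for $b_1$ and $b_2$; and, finally, to get $b_0$ by solving $b_0 = \bar{Y} - b_1\bar{X}_1 - b_2\bar{X}_2$.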
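The derivation can also be checked by solving the three Normal Equations directly as one $3 \times 3$ system instead of reducing them to the Simplified Normal Equations. The sketch below does this by Gauss-Jordan inversion of the coefficient matrix (a standard technique swapped in here, not the method of these notes). Inverting that matrix is also the "invert a matrix" route to coefficient standard errors mentioned in section 3: assuming the usual least-squares result $\operatorname{Var}(b) = \sigma^2 (X'X)^{-1}$, where $X'X$ is the coefficient matrix of the Normal Equations, each $s_{b_i}$ is $s_e$ times the square root of the corresponding diagonal element of the inverse:

```python
Y = [0, 2, 1, 3, 1, 3, 4, 2, 1, 2]
X1 = [0, 1, 2, 1, 0, 3, 4, 2, 2, 1]
X2 = [1, 0, 1, 0, 0, 0, 0, 1, 1, 0]
n = len(Y)


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


cols = [[1] * n, X1, X2]
# Coefficient matrix and right-hand side of the three Normal Equations:
# A = [[n, sum X1, sum X2], [sum X1, sum X1^2, sum X1X2], [sum X2, sum X1X2, sum X2^2]]
A = [[dot(ci, cj) for cj in cols] for ci in cols]
rhs = [dot(c, Y) for c in cols]                  # [sum Y, sum X1Y, sum X2Y]


def invert(m):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    size = len(m)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(size)]
           for i, row in enumerate(m)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: the Normal Equations have no unique solution")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(size):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[size:] for row in aug]


A_inv = invert(A)
b = [dot(row, rhs) for row in A_inv]             # b0, b1, b2

# s_e^2 from the residuals, then standard errors from the diagonal of A_inv.
resid = [y - (b[0] + b[1] * u + b[2] * v) for y, u, v in zip(Y, X1, X2)]
se2 = dot(resid, resid) / (n - 3)
s_b = [(se2 * A_inv[i][i]) ** 0.5 for i in range(3)]
print("b  :", [round(v, 4) for v in b])
print("s_b:", [round(v, 4) for v in s_b])
```

With the example data it should reproduce $b_0 \approx 1.4535$, $b_1 \approx 0.6279$ and $b_2 \approx -1.3953$, and standard errors close to the Stdev column of the computer output (0.3086, 0.1357, 0.3325).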