Colorado School of Mines Annual Assessment of Student Learning Outcomes Report


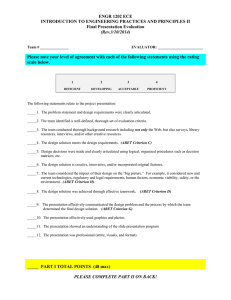

For Academic Year 2013-14
Undergraduate Programs

The purpose of assessment is to promote excellence in student learning and educational practices by fostering a campus culture of self-evaluation and improvement. The annual assessment report enables CSM to document engagement in continuous improvement efforts.

Provide responses to the following questions/items and email this completed document to kmschnei@mines.edu by September 26. Feel free to expand the tables below as needed. The Assessment Committee will provide written feedback in response to the department annual report, using this rubric as the basis for their feedback.

Department/Program: Chemical and Biological Engineering/Chemical Engineering B.S. degree, and Chemical and Biochemical Engineering B.S. degree*

Person Submitting This Report: Tracy Gardner

Phone: x3846

Email address: tgardner@mines.edu

1. Describe your assessment plan (list not only the activities completed in the past year, but your entire ongoing plan.)

Note: We use the same 5 assessment methods for all 15 of our outcomes, so rather than list the methods all 15 times, I will list the methods more generally here, and you can use the matrix in question 3 to see how the particular courses and assessment methods map to the individual outcomes.

Student Outcome 1 (1.1): At the time of graduation, our Chemical Engineering graduates will be able to apply knowledge of math, chemistry, biology, physics, and engineering to identify, formulate, and solve chemical engineering problems (ref. ABET Criteria 3a and 3e).

Student Outcome 2 (1.2): At the time of graduation, our Chemical Engineering graduates will be able to apply knowledge of rate and equilibrium processes to identify, formulate, and solve chemical engineering problems (ref. ABET Criteria 3a and 3e).
Student Outcome 3 (1.3): At the time of graduation, our Chemical Engineering graduates will be able to apply knowledge of unit operations to identify, formulate, and solve chemical engineering problems (ref. ABET Criteria 3a and 3e).

Student Outcome 4 (1.4): At the time of graduation, our Chemical Engineering graduates will demonstrate an ability to use the computational techniques, skills, and modern engineering tools necessary for chemical engineering practice (ref. ABET Criterion 3k).

Student Outcome 5 (1.5): At the time of graduation, our Chemical Engineering graduates will be able to analyze the economic profitability of chemical engineering processes and systems (ref. ABET Criteria 3c and 3h).

Student Outcome 6 (2.1): At the time of graduation, our Chemical Engineering graduates will be able to design and conduct experiments of chemical engineering processes or systems (ref. ABET Criterion 3b).

Student Outcome 7 (2.2): At the time of graduation, our Chemical Engineering graduates will be able to analyze and interpret experimental data from chemical engineering experiments (ref. ABET Criterion 3b).

Student Outcome 8 (2.3): At the time of graduation, our Chemical Engineering graduates will be able to design a process or system to meet desired needs within realistic constraints such as time, economic, environmental, social, political, ethical, health and safety, manufacturability, and sustainability (ref. ABET Criterion 3c).

Student Outcome 9 (2.4): At the time of graduation, our Chemical Engineering graduates will be able to function effectively on a multi-disciplinary team (ref. ABET Criterion 3d).

Student Outcome 10 (3.1): At the time of graduation, our Chemical Engineering graduates will demonstrate an awareness of professional and ethical responsibility (ref. ABET Criterion 3f).

Student Outcome 11 (3.2): At the time of graduation, our Chemical Engineering graduates will demonstrate an ability to communicate effectively orally and in writing (ref. ABET Criterion 3g).

Student Outcome 12 (3.3): At the time of graduation, our Chemical Engineering graduates will demonstrate an understanding of the impact of engineering solutions in a global, economic, environmental, and societal context (ref. ABET Criterion 3h).

Student Outcome 13 (3.4): At the time of graduation, our Chemical Engineering graduates will demonstrate recognition of the need for and an ability to engage in self-education and life-long learning (ref. ABET Criterion 3i).

Student Outcome 14 (3.5): At the time of graduation, our Chemical and Biochemical Engineering graduates will demonstrate an understanding of contemporary issues (ref. ABET Criterion 3j).

Student Outcome 15 (4.1): At the time of graduation, our Chemical and Biochemical Engineering graduates will be able to apply knowledge of bioprocessing methods including fermentation and biofuel production (ref. ABET Criteria 3a, 3e, and 3j).

| Direct assessment measure(s)/method(s) | Performance criteria | Population/sample/recruitment strategies | Frequency/timing of assessment |
| --- | --- | --- | --- |
| Final exams and/or projects in appropriate courses (see matrix in question 3 for mapping of which materials are assessed for which outcomes) | Student scores on relevant problems on scale from 0 to 4 average 2.5 or higher | All students taking particular course | These materials are assessed every time the course is taught (once per year) |
| FE exam results for outcomes 1, 2, and 5 | Average student scores in each area are > 70% | All students who take the FE exam (approximately 15% of our students?) | The exam is given in October and April each year; we get results 6-8 weeks later |

| Indirect assessment measure(s)/method(s) | Performance criteria | Population/sample/recruitment strategies | Frequency/timing of assessment |
| --- | --- | --- | --- |
| Course evaluations | >70% of student responses to direct questions about their abilities are positive | All students just finishing particular course | Every time course is taught (once per year) |
| Alumni survey | >50% of responses to related questions (see attached survey for question/outcome mapping) are 3 or higher | Alumni (this recent survey went to 10 years of graduates; 2001-2010) | Plan to do survey every 5-6 years |
| Senior exit survey | >50% of responses to related question(s) (see attached survey) are positive | All graduating seniors | Every May and December |
| Undergraduate Education Advisory Council course review | Council agrees student outcome is being met | Group of ~20 local alumni/employers of our graduates | Meets once per year in Spring (April) |
| Recruiter/Employer survey | >70% positive responses to question(s) about given outcome | Employers and recruiters of our current graduates | Plan to survey every ~5 years |

2. Map your assessment methods to your outcomes.

(I did measures here rather than methods, as the specific measures give more information than just the methods, and can be easily mapped back to the methods using the answers in 1.)

[Table 1: matrix of x marks with Student Outcomes 1 through 15 as rows and the following assessment measures as columns: CHEN 357, 375, and 418 final exams (rubric); CHEN 308 project (rubric); U.O. lab written reports (rubric); U.O. lab and senior design oral reports (rubric); senior design final reports (rubric); CHEN 460, 461 final exams and projects; senior exit survey; FE exam results; alumni survey; teamwork evaluation forms (peer and Prof.); recruiter survey.]

3. Map the student outcomes to courses and to the ABET outcomes. You may use check marks or designate P=primary emphasis and S=secondary emphasis. If your assessment plan only includes the ABET outcomes and you have no additional outcomes, you do not need to complete table #3.

[Table 2: matrix of x marks with Student Outcomes 1 through 15 as rows and the following courses as columns: BELS101, CHEN201, CHEN202, CHEN307, CHEN308, 312/313, CHEN357, CHEN358, CHEN375, CHEN402, CHEN403, CHEN418, CHEN421, CHEN430, CHEN460, CHEN461.]

[Table 3: matrix of x marks with Student Outcomes 1 through 15 as rows and ABET Outcomes A through K as columns.]

4. Identify the assessment activities that your program has implemented in the past year.

Direct assessment methods: Student work evaluations, FE exam, career center reports

Indirect assessment methods: Course evaluations, senior exit surveys, recruiter surveys, peer and professor evaluations in field session

5. Describe how you have shared assessment results with faculty.
Describe how faculty have used assessment results to improve student learning, including the specific actions you have taken or will take to facilitate students’ attainment of the student learning outcomes. (ABET Criterion 4C.)

When deficiencies are noted, this information is shared with the entire faculty via discussions at staff meetings and, when necessary, subcommittees are formed to address problems. In some cases, the faculty teaching the course or courses that can most readily be modified to address the problem are consulted, and changes are made to those courses directly. In other cases, new courses are offered to address needs that are not met by the existing course offerings.

As an example, we modified our Chemical Process Principles (CBEN 202) and Thermodynamics Laboratory (CBEN 358) courses in January of 2013 to be more self-paced, and further modified CBEN 358 with the addition of video tutorials in January of 2014 to address issues students had. The first year, we developed tutorials so that the students could learn the computer programs on their own time, and we implemented competency exams and midterm evaluations as well. The second year, we implemented video tutorials (screencasts) and TA computer instruction to help with CBEN 358, though CBEN 202 seemed to run fine with just the tutorials. In this case the changes were made by the instructing professor with the assistance of instructors who have taught the course in the past and of the department management.

We have also been working in subcommittees to redesign our course offerings into a single degree with optional courses for specialty tracks similar to those offered in Chemistry and MME. We are still working on this curriculum redesign.
Table 4 Mechanisms for sharing assessment results with faculty: Staff meetings, subcommittees (standing subcommittees in the department include Undergraduate Affairs, Graduate Affairs, Research Affairs, and Awards committees; others are formed as needed) and their reports to the faculty, and faculty retreats when necessary.

Table 5

Action #1 taken (CBEN 201): Assigned and assessed student teams in CBEN 201 using CATME.
- Date action taken: Spring 2014
- Basis for this action: Ad hoc groups could be improved based on literature and the tools on catme.org. Worked well in CBEN 210 in Fall 2013, so applied in CBEN 201 in Spring 2014.
- Student outcomes impacted: 9, 10, 11
- Specific assessment measure(s) that motivated action (if not described above): See “Basis for this action” above.
- Measurement/assessment of the impact of the action that was taken: CATME team maker and assessment tools provided data on the effectiveness of each student team. Most (>90%) of the teams were well-functioning.

Action #2 taken: Biomedical Engineering Minor was created and passed UGC and Senate, and Intro to Biomedical Engineering, Biology of Behavior, (and MCAT prep) courses were piloted.
- Date action taken: Fall 2013 and Spring 2014 (and Fall 2014, planned in Spring 2014)
- Basis for this action: To provide more of what students who are interested in being “Premed” want and need to get into medical school.
- Student outcomes impacted: 1, 2, 3, 4, 13, 14, 15
- Specific assessment measure(s) that motivated action (if not described above): None
- Measurement/assessment of the impact of the action that was taken: 21, 21, and 9 students took these courses, respectively. It will take time to learn how many students per year enroll in the BME minor and to see if MCAT outcomes and medical school acceptances increase.

Action #3 taken (CBEN 201): Used YouTube problems from previous semesters and students wrote new YouTube problems in CBEN 201.
- Date action taken: Spring 2014
- Basis for this action: Known efficacy of incorporating visuals to provide additional information for the students to better understand concepts.
- Student outcomes impacted: 1
- Specific assessment measure(s) that motivated action (if not described above): From collecting problems completed in class and observing students during class time, it was noted that they were not understanding the material as well as we would like.
- Measurement/assessment of the impact of the action that was taken: Collected problems showed good understanding of course concepts. Certain common errors or misconceptions were noted and addressed during future class periods. Provided excellent pre/post semester example to show students what they have learned by assigning the same problem during Class 1 and Class 40. Students could not complete most of the questions during Class 1, but could during Class 40, and they were able to identify intentionally placed errors/misconceptions in problem statements at the end.

Action #4 taken (CBEN 201): Used Just in time teaching techniques, refreshing material based on instant grading of homework completed on Sapling Learning in CBEN 201.
- Date action taken: Spring 2014
- Basis for this action: Literature showing effectiveness of this technique in non-engineering courses.
- Student outcomes impacted: 1, 4
- Specific assessment measure(s) that motivated action (if not described above): None; just applying best practices.
- Measurement/assessment of the impact of the action that was taken: This provided additional practice problems in class for students to work in groups and allowed the faculty to oversee the in-class work and answer questions. Survey at end of semester showed students like revisiting the hardest homework problems. Not clear if this specific action improved student learning, as other actions were also taken to improve instruction.

Action #5 taken (CBEN 201): Used Echo Smart Pen technology to record quiz and homework solutions including rubrics in CBEN 201.
- Date action taken: Spring 2014
- Basis for this action: More senses engaged improves potential for learning (based on brain science literature).
- Student outcomes impacted: 1, 4
- Specific assessment measure(s) that motivated action (if not described above): Again, none; applying best practices.
- Measurement/assessment of the impact of the action that was taken: Similarly here, the impact of this particular change is not clear. We tracked the number of downloads of talking pdfs in Blackboard. Usage statistics and end of semester survey results of students can be provided.

Action #6 taken (CBEN 201): Used prototype interactive (unprintable) textbook titled Fundamentals of Material and Energy Balances by Liberatore.
- Date action taken: Spring 2014
- Basis for this action: As for action #4, more senses engaged improves potential for learning (based on brain science literature).
- Student outcomes impacted: 1, 4
- Specific assessment measure(s) that motivated action (if not described above): Again, none; applying best practices.
- Measurement/assessment of the impact of the action that was taken: We tracked the number of students who purchased the book ($10/student) and collected end of semester surveys and comments. Feedback was generally positive on cost and novelty of book. Missing components were corrected in revision for future semesters.

Action #7 taken (CBEN 358): Made video tutorials for teaching the ASPEN software and added them to a CBEN 358 YouTube channel. Specific videos were referenced through links on Blackboard.
- Date action taken: January 2014
- Basis for this action: Students did not do as well in CBEN 358 with the tutorials alone in Spring 2013, so we added these to support the administration of the workbook material.
- Student outcomes impacted: 3, 4
- Specific assessment measure(s) that motivated action (if not described above): Described above.
- Measurement/assessment of the impact of the action that was taken: Student ratings of Aspen workbook usefulness and quality were compared between Spring 2013 (before creation of videos) and Spring 2014. Workbook material ratings improved from an average score of 3.3/5 in Spring 2013 to 3.7/5 in Spring 2014.

Action #8 taken (CBEN 368): Asked students to give PowerPoint presentations of self-selected paper studied during semester.
- Date action taken: Spring 2014
- Basis for this action: Alumni surveys consistently indicate students do not have enough experience giving presentations.
- Student outcomes impacted: 11
- Specific assessment measure(s) that motivated action (if not described above): Alumni surveys, recruiter surveys
- Measurement/assessment of the impact of the action that was taken: 12 students took this class. Feedback about their presentation skills compared to others who did not take the course will be sought.

Action #9 taken (CBEN 398): Asked students to design an electrochemical energy conversion device based on literature values.
- Date action taken: Spring 2014
- Basis for this action: To make their project more relevant to real research.
- Student outcomes impacted: 1, 2, 4, 6, 8, 11, 12, 13, 14
- Specific assessment measure(s) that motivated action (if not described above): None.
- Measurement/assessment of the impact of the action that was taken: 28 students took this class. The students all earned a B or better on this exercise.

Action #10 taken (CBEN 698): Developed a new class covering topics in polymer science, colloids, and liquid crystals based on the textbook Soft Matter Physics by Masao Doi. This is part of a larger departmental set of actions to increase offerings at the 6xx level.
- Date action taken: Spring 2014
- Basis for this action: Course had been offered once before, and previous version focused exclusively on colloids rather than the broad topic of soft matter.
- Student outcomes impacted: (Not an undergraduate course, so doesn’t map to outcomes listed above.)
- Specific assessment measure(s) that motivated action (if not described above): See above.
- Measurement/assessment of the impact of the action that was taken: Exams and term papers on topics in soft matter. Term papers covered a wide variety of subjects within the broad topic of soft matter. The departmentally agreed upon proposed learning objectives were met.

Action #11 taken (CBEN 210): Students were asked to analyze simple engineering machines and integrate them into power cycles and refrigeration devices.
- Date action taken: Spring 2014
- Basis for this action: A challenge in teaching this course is motivating students with different technical backgrounds and goals to care, because the course is composed of roughly 1/3 each Civil Engineers, Chemical Engineers, and Engineering Physics students. Sophomore Chemical Engineers and Physics students tend to believe and care about thermodynamics; the challenge is to convince Civil Engineering seniors (CEs) that thermodynamics will have application in their careers. Our approach was to apply the first and second laws of thermodynamics to simple engineering machines in which students believe (valves, pumps, compressors, exchangers, and turbines) and then to combine the simple machines into power and refrigeration cycles that CEs, ChemEs, and Physicists know they will use.
- Student outcomes impacted: 1, 3, 4 (for Physicists, Chemical, and Civil Engineers)
- Specific assessment measure(s) that motivated action (if not described above): Student feedback from previous years
- Measurement/assessment of the impact of the action that was taken: 111 students took this course. Several specifically designed exam questions were used to assess student learning gains.

Action #12 taken (CBEN 375): 115 students were taught in one large section instead of breaking into 2 sections.
- Date action taken: Spring 2014
- Basis for this action: To test claims that teaching in one large section is as good as breaking into smaller sections once the class size gets above some number (somewhere between 45 and 70 seems to be the cutoff according to some faculty).
- Student outcomes impacted: All, or maybe none specifically
- Specific assessment measure(s) that motivated action (if not described above): None.
- Measurement/assessment of the impact of the action that was taken: Student overall GPA was comparable to that for the same course in years past (i.e., not statistically different). However, the faculty member teaching the course concluded that she would prefer to break the class up into smaller sections and teach twice for the same credit in the future once class size exceeds what would fit in a “normal classroom”. Even using many in-class problems, pen-based technology in the students’ hands, and a TA and 2 graders to help support interactive teaching methods, the instructor felt students had an easier time disengaging in the large stadium-style classroom than they would in AH 330 or AH 340, for instance, and that this disengagement is enough of a detractor to make it worth teaching the same material twice in one day.

6. Describe any changes that you are planning to make in your assessment plan for next year.

Table 6 Planned changes: As mentioned above, a big change we are planning to make in the department is to offer only one degree with specialty tracks. This will directly affect our assessment plan as well.
We don’t anticipate changing the basic format or process of our assessment plan, but as the discussion on the degree(s) progresses, the details of the assessment plan will likely change somewhat too. It is unclear at this point exactly how that will evolve. As far as the measures we use to assess our PEOs and SOs and the frequency of our assessments go, we do not anticipate changes. The performance criteria will likely be tightened, as they are perhaps too easily met at the current levels.

7. Describe how you have used the feedback from the assessment committee in response to last year’s report to improve your assessment efforts.

Table 7 Use of committee’s feedback: (See below)

Last Year’s Comments: The outcomes are specific, measurable, and reasonable. The committee wondered if you were creating extra work by assessing outcomes in addition to the ABET outcomes? You certainly can do what works best for your department, but you may want to consider assessing the ABET a-k outcomes directly rather than assessing 15 outcomes.

Response: We may change this and just address the ABET a-k outcomes directly instead. We will make this decision after we revamp the program structure to a single degree. Thanks for the suggestion; less work is always better! (As long as we can get what we need out of the assessment, of course.)

Last Year’s Comments: Your plan includes multiple direct and indirect measures of student learning so that faculty can determine if students are achieving the student learning outcomes. This approach is consistent with best practices. You have identified performance criteria for the outcomes, which should enable you to determine if students are achieving learning outcomes and attaining the objectives. Defining five primary assessment methods as the basis of your assessment plan is a reasonable approach. Your plan appears to be manageable and sustainable over the long term.
Last year we indicated that it was not clear to the committee if the course evaluations that you reference as part of your assessment plan are the ‘standard’ School-wide course evaluation forms or if you have developed forms that are specific to the learning outcomes for your programs. Once again we are asking for clarification.

Response: The course evaluations referred to are the “standard” school-wide course evaluations. In some courses instructors administer other course evaluation forms, but the instructors use those independently to improve their own courses, and that is not managed by the department. Changes arising in courses due to feedback on those forms would come up in the “actions taken” in Table 5 above as well.

Last Year’s Comments: You have implemented a variety of direct and indirect assessment methods on an ongoing basis, which is commendable. It appears there is department-wide involvement in your assessment efforts; thank you for convening a faculty retreat to discuss curriculum and assessment.

Response: You’re welcome! And thank you back.

Last Year’s Comments: The program has made several changes to the curriculum and we applaud you for your dedication to enhancing and supporting students’ learning. The changes have been informed by multiple assessment methods, which is consistent with best practices. There appears to be ongoing discussion and use of assessment information for the improvement of student learning outcomes. We commend you for your work in this regard.

Response: Thank you.

*Note that one report is provided here for both the CHEN and CBEN degrees. This report is all-inclusive and would technically be for the CBEN degree, whereas that for the CHEN degree would be the same but with the references to Student Outcome 15 and the CHEN 460 and 461 courses removed. For the rest, our assessment plan, measures, etc. are the same. Hopefully next year there will just be one degree and this statement will not be necessary!