ontological based webpage classification 以本體論為基礎的網頁分類

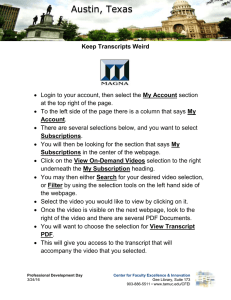

advertisement

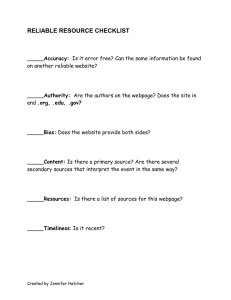

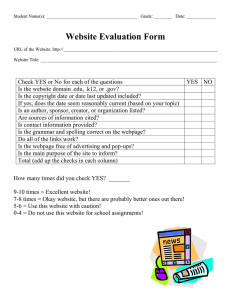

ontological based webpage classification 以本體論為基礎的網頁分類 Abstract-Current classification techniques use word matching and clustering techniques to classify webpages. These techniques use ad hoc approach of checking and matching the entire keyword in a webpage for classification. These methods are efficient but not without problems. In general, they suffer from the following problems 1) As they use brute force matching for the entire document, they tend to slow in their operation 2) word in a document may have similar meaning but they may not be identical in their spelling 3) current techniques fail to match and identify phrases efficiently 4) they also fail to consider for word disambiguation. In this paper, we propose a novel and fast ontological-based webpage classification technique to classify a webpage with high accuracy. To speed up our system, we use a segmentation technique that utilizes visual boundary of a region and matches keyword within the region instead of the entire webpage. we also use a fast clustering technique to match keyword and label the page based on the nearest match. experiment results show that our is accurate in webpage classification. 摘要-目前分類技術使用詞匹配和聚類技術來分類網頁。這些技術使用專案檢查 及匹配整個關鍵字在網頁分類方法。這些方法是有效的但是並非沒有問題的。在 一般情況下他們遭受以下幾個問題 1)由於他們用蠻力匹配整個文檔,他們往往 在他們的操作減緩 2)在一個文件的詞語,可能會有類似的含義,但是他們可能 不在他們的拼寫完全相同 3)目前的技術無法有效地匹配及識別片語 4) ,他們也 沒有考慮到詞語歧義。在本文中我們提出一種新型快速的本體論為基礎的網頁分 類技術,進行分類高精度的的網頁。為了加快本系統,我們使用了區域分割技術, 利用邊界區域的可視化及匹配區域的內部而非整個網頁的關鍵字。我們還使用快 速聚類的技術來匹配關鍵字及標籤根據最接近的匹配的網頁。實驗結果表明我們 就是在網頁中分類的準確。 Keyword - webpage, classification,ontology 關鍵字 - 網頁,分類,本體論 I. INTRODUCTION The growth of the World Wide Web has seen a great amount of data accessible to the public. search engines are developed to deal with this vast amount of data. They process user queries and return a search result within an acceptable time frame. In order to locate their documents of interest and obtain a match within a reasonable time frame, webpages are indexed and classified so that search engines can narrow down their search instead of searching through the entire Web. 全球資訊網的成長已經出現了大量的資料對公眾開放。搜索引擎被開發出來處理 這個龐大的資料量。他們處理用戶的查詢,並傳回搜索結果在一個可接受的時限 內。為了找出他們感興趣的文件,並取得一個合理的時限內的匹配項,網頁的索 引和分類使搜索引擎可以縮小他們的搜索而不是透過搜索整個 Web。 Indexing and labeling a page are hard tasks as users need to deal with the natural language processing of a document. Fortunately, HTML and their related languages such as CSS, and Javascript provide rich semantic and contextual information for the users. HTML language is defined under W3C and it is coded in a tree form known as the Document Object Model(DOM) tree. There are several existing techniques used for classifying webpages. The frist technique is the keyword matching of documents where a document text is split into large number of tokens and match them against a set of predefined keywords. This technique, however, is slow as matching of every keywords is tedious and time consuming. To address this shortcoming, users use alternative technique to separate and partition a page into several segments and then match the keywords within the segments. Although this technique fails to achieve high accuracy, it is able to scale down the running time of the previous technique significantly. 編制索引和標籤頁是很難的任務,因為用戶需要對付文檔的自然語言處理。幸運 的是,HTML 和相關的語言,例如 CSS 和 Javascript 為用戶提供了豐富的語意及上 下文信息。根據 W3CHTML 語言的被定義,它被稱為文件物件模型 DOM 樹以樹 的形式進行編碼。有幾個現有的技術用於分類網頁。第一的技術是在關鍵字的匹 配對將文檔內容是拆分為大量的記號和他們針對一組預先定義的關鍵字相匹配 的文檔。然而,這種技術為每個關鍵字匹配的為繁瑣且費時的緩慢。為了解決這 個缺點,用戶在使用替代技術,以獨立和分割的頁面劃分成若干段,然後內部細 分市場相匹配關鍵字。雖然這種技術未能達到很高的精度,這是以前的技術的運 行時間的顯著縮減。 The third method involves matching neighboring pages by locating the hyperlinks in the main page and classifying the main page based on its content and the contents of the neighboring pages. This method may degrade the performance of the classifier as the neighboring pages may not contain keywords which are relevant to the main page. Our observations states that most of the webpages available currently contain keywords with similar meaning. For example, car web sites usually contain keywords such as engine, door, fuel, sedan, four wheeled,etc. Existing techniques are not able to match these keywords as they are not similar in textual contents although they are related in many ways(e.g some words are actually synonyms). 第三種方法涉及到尋找在主要網頁的超鏈接,和根據其內容和鄰近網頁內容的主 要網頁分類匹配鄰近的網頁。這種方法可能會降低分類的績效,因為鄰近的頁面 可能不包含主網頁相關的關鍵字。 我們的觀察指出,目前大多數可用的網頁含有類似意思的關鍵字。例如,汽車網 站通常都含有的關鍵字,如發動機,車門,燃料,轎車,四輪等。現有的技術無 法匹配這些關鍵字,因為它們不是文字內容相似,雖然它們在許多方面有關(例 如一些單詞實際上是同義詞)。 In this paper, we propose a novel accurate and fast webpage classification technique which segments a page into several regions and match the keywords based on their semantic properties rather than identical keyword matching. Our method is fast due to the fact that we match keywords within regions rather than the entire page, and it is also accurate because it can classify a page which contains related keywords and phrases. Unlike existing techniques, we use Word Similarity Check tool provided by WordNet dictionary to match keywords with related terms (e.g dog is part of canine, mammal, animal). Experimental results show that our technique is accurate when tested on a wide range of datasets and it is also fast in its operation. The remainder of this paper is divided into several sections. Section II describes related work while Section III gives a complete description of the proposed method. Section IV provides an in depth discussion on the experimental evaluations and finally Section V concludes our work. 在本文中,我們提出一種新型精確和快速的網頁分類技術細分一個頁面分成幾個 區域,和相匹配基於詞語的語義屬性而非完全相同的關鍵字匹配的關鍵字。我們 的方法為快速的,因為這一事實,我們相匹配各區域內而非整個頁面的關鍵字, 亦是準確的,因為它可以歸類一個網頁,其中包含了相關的關鍵字和詞組。不同 於現有的技術,我們使用詞語相似度 WordNet 的字典提供的檢查工具,以配合 相關的術語的關鍵字(例如:狗為犬,哺乳動物,動物的一部分)。實驗結果顯 示,我們的的技術為準確的,範圍廣泛的資料集上測試時,它也在運作上快速的。 本論文的其餘部分共分為幾個章節。第二節介紹了相關的工作,而第三節給出了 提出的方法的完整描述。第四節實驗的評估提供了深入的討論,最後第五節總結 我們工作。 II. RELATED WORD Early webpage classification techniques use information directly located on the main page such as texts. Mladeinc suggests an approach to automatically classify webpage based on the Yahoo directory. In his approach, a set of predefined keywords is created and the content of the webpage is matched with these keywords. Kwon and Lee propose a webpage classification using the K-Nearest neighbor matching. In their technique, different HTML tags are given different weights, the tags are separated into groups with different weights assigned to each group. Shen et al propose text summarization to classify a webpage. Their results show that classifying webpages based on text summarization increase accuracy by 10% compare to classifying webpage based on its content. Other approaches classify webpages by separating webpages into different segments. Kovacevic et al uses multigraph approach to separate a webpage into several segments where each node represents an HTML object and each edge represents a spatial relation int the visual representation. Although webpages contain useful information, some other information may be missing, misleading, or unrecognizable for various reasons. For example, some webpages may contain valuable image or flash contents, but with little textual contents. Therefore, it is important to search for other information in order to classify these webpages. The work of use information from other webpages related to the main page to classify a webpage. Typically, hyperlinks that directly connect to other webpages are used, where contents are extracted from these neighboring pages and used to classify the main page. However, this approach may do more harm than good. For example, the neighboring pages may contain different topics from the main page. The work of addresses this issue by constructing a graph of page relations and collecting sample pages that are closest to the main page only. 早期的網頁分類方法,使用直接位於如文本的主要網頁上的資料。Mladeinc 建議 在雅虎目錄基於網頁自動分類方法。在他的方法,創建一組預先定義的關鍵字, 和與這些關鍵字相匹配的網頁的內容。Kwon 和 Lee 提出使用 K-Nearest (K 近鄰) 匹配的網頁分類。在他們的技術,不同的 HTML 標籤給予不同的權重,標籤被分 成小組分配給各組不同的權重。 Shen 等人建議摘要方法,進行分類的網頁。他們的研究結果顯示根據其內容進 行分類網頁分類的網頁文字匯總提高準確性 10%基於比較。 其他方法分類分成不同階層的的網頁的的網頁。Kovacevic et 等人使用多重圖的 辦法分成幾個部分,其中每個節點代表一個 HTML 物件以及每條邊表示一個空間 關係詮釋的可視化表示的網頁。 雖然網頁包含實用的資訊,其他的一些信息可能會遺失,誤導,或因各種原因無 法辨認。例如,某些網頁可能會包含有價值的圖像或 Flash 內容,但是有很少文 字內容。因此,重要的是為了區分上述網頁搜索其他信息。從使用資料工作相關 的主網頁分類網頁的其他網頁上。通常情況下,直接連接到其他網頁的超鏈接的 使用,內容會形成上述鄰近的的網頁提取並用於進行分類主要頁面。然而,這種 方法可能做弊大於利。例如,鄰近的頁面可能包含不同的主題,在主頁上。本工 作,解決這個問題,通過建立一個頁面關係圖和收集樣本是最接近只有主要的網 頁頁面。 III. PROPOSED METHODOLOGY In this section, we discuss the assumption and proposed solution of webpage classification using webpage segmentation technique and ontology. A. Visual Boundaries A typical webpage has its visual boundaries such as header, footer, side panel and so on. some webpages use <table> to create their visual boundaries while others use <div>. These boundaries are called regions. Figure 1 show the 5 visible regions in total, the header region, footer region, side region 1, side region 2, and content region. In Figure 1, we notice that in a webpage, the header region and the side region contain the most relevant information about a page. Figure 1 gives an example of a health webpage describing diabetes. In the header region and side region 1, it contains the most relevant keywords such as Diabetes, Symptoms, Diagnosis , Treatment, Daily, Life, Medication, Insulin, Weight loss, Obesity and so on. Based on these keywords, we can deduce that it is a webpage about health. A. 可視化界限 一個典型的網頁有其可視化的界限,如頁眉,頁腳,側板等。部分網頁使用<TABLE> 的建立他們的視覺界限,而其他人使用<div>。這些邊界被稱為地區。圖 1 顯示 共 5 可視地區,標頭區域的,頁腳區域的,側區域 1,區域 2 側,和內容區域的。 在圖 1 中,我們注意到,在網頁中,標頭區域的和側面區域的包含有關網頁最相 關的信息。圖 1 給出了一個例子描述糖尿病健康網頁。在標頭區域的和側面區域 1,它包含最相關的關鍵字,如糖尿病,症狀,診斷,治療,每日,生活,藥物, 胰島素,減肥,肥胖等。根據這些關鍵字,我們可以推斷出,這是一種有關健康 的網頁。 B. DOM Tree In order to identify the visual boundaries using a computer program, we need to first obtain the DOM Tree of a webpage. To do this, we need to use an open source HTML parser library to obtain the DOM Tree. Then the nodes in the DOM tree are further differentiated into two types: TagNode and TextNode. A TagNode is a node that represents a HTML tag such as <table>, <tr>, <td>, <div> and so on and a TextNode represents the node situated at the bottom of each path of a tree, if there is any and it is not considered as HTML tag. To make the algorithm more efficient, we skip the <head> tag and treat the <body> tag as the root tag to begin our operation. In a typical DOM tree, the <html> tag is the root tag followed by a <head> tag and a <body> tag as its immediate children nodes. We found out that the elements under the <head> tag are not necessarily relevant to the content of a webpage and it may not even exist. For instance, <link> tag, <style> tag, and <script> tag are not relevant to our work; while the <title> tag, and the <meta> tag sometimes may not be relevant at all to the content, but they may be relevant to the website, not the webpage, even though they may exist. 為了查明使用的電腦程式的可視化的邊界,我們需要先取得網頁的 DOM 樹。要 做到這一點,我們需要利用一個開放源碼的 HTML 語法分析器庫中來取得 DOM 樹。然後在 DOM 樹中的節點被進一步區分為兩種類型:TagNode 和 TextNode。 一個 TagNode 是一個節點表示 HTML 標記,如<TABLE><TR>,<TD>,<DIV>等 TextNode 表示在每個路徑樹的底部位於節點,如果有任何而且不能被當作 HTML 標籤。 為了使算法更有效,我們跳過的<head>的標籤和看待的根標籤<BODY>的標籤來 開始我們的操作。在一個典型的 DOM 樹,<html>標籤就是一個<head>標籤,並 作為其直接子節點<body>標籤的根標籤。我們發現,根據<head>標籤的元素不一 定相關網頁內容,甚至可能不存在。例如,<link>標記,<STYLE>標籤和<script> 標籤,都與我們的工作不相關,而<title>標籤,<meta>標籤有時可能不會在所有 的內容相關,但他們可能是相關的網站,網頁,即使他們可能存在。 C. Breadth First Search (BFS) Algorithm We start our operation by traversing the tree using a Breadth First Search (BFS) algorithm from the <body> node. BFS is a uniformed searching algorithm where a traversal is carried out in a left to right order, starting from the top node to the bottom most nodes. In our goal to segment a webpage into several regions, BFS search is suitable because it draws out the visual boundaries form top to bottom order. As we traverse, we skip irrelevant HTML tags such as <script> tag, <img> tag, <style> tag, <br> tag, and so on because they do not contribute to the contents in text and the text contents are more important for later operation. When we reach the TextNode, the bottom-most node and if it is not a TagNode, we split the text in the TextNode by spaces and punctuations to get a list of word; once we split the words, we will check each word to ensure that only nouns, including both proper nouns and common nouns, are being taken into consideration, where WordNet is used to further process them. There are two exceptions when segmenting a HTML page: all regions are wrapped in another HTML tag such as <table> and <div>. Depending on the web designer's preference, some web designers like to use a HTML <table> to wrap the whole content inside the <body> tag while some designers use a big <div> tag to do so. When this exception happens, we will use the next level of the tree to segment the regions. There are also some instances when the webpage has insufficient regions. Insufficient regions usually occur when a webpage is designed to be embedded into another web page using <frame> or <iframe>, or simply not designed. We will not segment this type of web page in our work. 我們先從我們的的操作由穿過樹使用從<BODY>節點的廣度優先搜索 (BFS) 算法。 BFS 是使一律化的搜索算法進行遍歷,以左到右的順序,從最底層的節點的頂端 節點開始。在我們的目標網頁分割成若干區域,BFS 搜索是合適的,因為它引出 可視化界限,形成從上到下的順序。正如我們穿越,我們跳過不相關的的<script> 標記,<img>標籤,<style>標記,參考標記,等等例如 HTML 標籤,因為它們不 利於文本的內容和文本內容更多以後的操作很重要。當我們到達 TextNode,最底 層的節點和如果不是一個 TagNode,我們分手在 TextNode 文本由空格和標點符 號,得到一個單詞列表;一旦我們分割的話,我們會檢查,以確保每個單詞唯一 名詞,包括專有名詞和普通名詞,正在考慮採取 WordNet 的用於進一步處理它 們。 有兩種例外情況分割一個 HTML 頁面時,所有地區都包裹在另一個 HTML 標記, 如<TABLE>和<div>。根據網頁設計師的偏好,一些網頁設計師喜歡使用一個 HTML 的<table>包裹內<body>標籤的整個內容,而一些設計師使用一個大的<div>標記, 這樣做的。這個例外狀況發生時,我們會用樹的下一層的部分地區。也有一些情 況下,當網頁上有不足的區域。不足的區域,通常會出現一個網頁設計時要嵌入 到另一個 Web 頁面使用的<frame>或<iframe>,或根本就沒有設計。我們將不段 這類型的網頁,在我們的工作。 Figure 1 A page with different regions of interest D. Ontology-based Webpage Classification 1) WordNet Once segmentation is carried out, we use WordNet to further classify the webpage. WordNet is a lexical English database that group words such as nouns, verbs, adverbs and adjectives into cognitive synonyms, which are called synsets. Through synsets, a word's super-subordinate relationship can be identified in terms of hypernym, hyponym or is-a relationship. Hypernym represents the superordinate or generalization of a noun, for example, 'bird is a hypernym of "crow" and "duck", "vehicle" is a hypernym of "car" and "motorbike", and so on. Hyponym is the antonym of hypernym and it represents the subordinate relationship of a word; 'motorbike' is a hyponym of 'vehicle' for example. Other than that, WordNet's has a function called 'stem' which is able to return the root word of a word. For example, the stem function will return "box" for "boxes", "child" for "children", "goose" for "geese" and so on. Hence, hypernym and hyponym relations as well as the stem function are the main WordNet features we use to implement our webpage categorization. 一旦進行了分割方法,我們使用 WordNet 的進一步歸類的網頁。WordNet 是一 個詞彙的英文資料庫,組詞,如名詞,動詞,副詞,到認知的同義詞,即所謂同 義詞集的形容詞。通過同義詞集,單詞的超級從屬關係可以識別的上位詞,下位 詞或者是一個關係。上位詞表示上級或名詞的概括,例如,“鳥是”烏鴉“和” 鴨“的上位詞,”車輛“是”汽車“和”摩托車“的上位詞,依此類推。下位詞 是上位詞的反義詞,它代表了一個詞的從屬關係;'摩托車'是一個'車',例如下位 詞。除此之外,WordNet 的稱為“根”的功能,這是能夠返回一個字的字根。例 如,根函數將返回“盒子”為“盒子”,“子”為“孩子”,“鵝”“鵝”等。 因此,上位詞和義詞的關係以及根功能,都是 WordNet 的主要特點,我們用它 來實現我們的網頁分類。 2) Ontological Techniques We use the algorithm of Lin to determine the semantic properties of segments. Lin's ontological technique is a semantic similarity measure to measure the similarity between two words. The measurement between two words will return a value between zero (0.0) and one (1.0) where zero is the least similar and 1.0 is the most similar .For example, "Car" and "Sedan" will get a similarity value of 0.9 while "tangerine" and "motorcycle" will get a much lower value. 我們使用該算法來確定 Lin 分段的語義屬性。林的本體論技術是語義相似性測度 來衡量兩個詞之間的相似性。兩個詞之間的測量,會傳回零(0.0)和一個值之 間(1.0)是最相似,1.0 是最相似的,例如,“汽車”和“轎車”將得到一個相 似值 0.9,而其中零“橘子”和“摩托車”將獲得價值低得多。 E. Implementation We implement the webpage classification algorithm by combining the three techniques mentioned previously 1) Segmenting Visual Boundaries 2) Breath First Search 3) Ontology. First of all, we identify the visual boundaries of HTML tags using information provided by the browser rendering engine. We pares and traverse the HTML page using Breadth First Search algorithm. If a particular level of a tree contains at least five HTML tags with sufficient visual boundaries (e.g having area more than 500), we take these HTML Tags as regions. Once the segmentation is done, we tokenize the TextNodes into words and then we select the first two regions, merge them, and group same words together. When a word matches another, the first word will form a cluster of size one. After segmentation and merging of the first 2 regions are carried out, we will perform the tokenization of TextNode to each of the remaining regions, and obtain the root word for each of the tokenized words. For example, the root word of "oxen" is "ox", the root word of "fishes" is "fish", and so on. After that, we measure the semantic similarity of each word in the remaining regions with the words in the merged region using Lin's algorithm. If a pair of words obtains a semantic similarity score of more than 0.7 from a scale of 0.0 to 1.0, the words will be grouped into their respective cluster. The counter of the cluster group will be increased by one each time a match is found. A pair of words which returns a value of less than 0.7 will be ignored. Finally, we will have a list of clusters with their own words. We will then match these keyword with the predefined keywords to determine their match. Keyword with the closest match is taken as the label for that page. 我們執行網頁的分類演算法三者相結合的技術,前面提到的 1)分割視覺邊界 2) 呼氣第一搜索 3)本體論。首先,我們確定使用瀏覽器呈現引擎所提供的資料的 HTML 標記可視化界限。我們分析和使用廣度優先搜索演算法遍歷 HTML 頁面。 如果樹的特定水平,至少有五個充足的可視界限(如面積超過 500)的 HTML 標 籤,我們採取這些 HTML 標籤作為區域。一旦分割完成後,我們為單詞記號化 TextNodes,然後我們選擇前兩個區域,把它們合併,相同的字組在一起。當單 詞匹配,第一個單詞將形成一個大小為一叢集。 之後進行了分割和合併前 2 區域,我們將進行的 TextNode 標記化,其餘各區域, 取得的標記化的詞,每年的詞根。例如,“牛”的詞根是“牛”,“魚”的字根 是“魚”,依此類推。之後,我們衡量在合併後的區域使用 Lin 的演算法的話, 其餘區域的每個字的語義相似性。如果一配對的話得到的語義相似程度超過 0.7, 規模從 0.0 到 1.0 的話將分為各自的叢集。將會增加一個每找到匹配項時,計數 器的群集組。對傳回值小於 0.7 的詞,將被忽略。最後,我們將用自己的詞集群 列表。然後,我們將匹配預定義的關鍵字,這些關鍵字,以確定與其匹配。具有 最接近匹配的關鍵字作為該網頁的標籤。 F. Choosing a label for a webpage In this section, we present an evaluation of our webpage classification algorithm. As mentioned in the previous section, the classification algorithm returns a numeric value of 0.0 to 1.0 to indicate the semantic similarity of words. Hence, the grouped semantically similar word will again check itself using WordNet and Lin's algorithm wtih respect to the eight categories predefined (Art, Business, Computer, Games, Health, Science, news, and Sports). When checking the similarity of a given keyword with respect to a given category is carried out, we calculate the similarity of the keyword with respect to the words in the categories. We perform the matching for the remaining keywords and then summed the similarity score up. We will check each grouped word with each category using the same method and normalized them accordingly. Finally, we rank them accordingly after normalizing theresult for each category. The category with the highest value is chosen as the label. 在本節中,我們提出我們的網頁分類演算法的評估。正如上一節中提到,分類演 算法會傳回數值 0.0 到 1.0 表示詞的語義相似度。因此,分組的語義相似的詞將 再次檢查自己使用 WordNet 和 Lin’s 的演算法:邊方面的八大類預定(藝術,商 業,電腦,遊戲,健康,科學,新聞,體育)。當檢查一個特定關鍵字具有一個 給定的類別進行的相似度性,我們計算出相似度的關鍵字,就在類別詞。我們執 行剩餘的關鍵字匹配,然後總結出來的相似性得分。使用相同的方法和規範化他 們進行相應,我們將檢查每個分組的詞,每個類別。最後我們對他們進行排名進 行相應正常化後為每個類別的結果。標籤被選為最高值與類別。 IV. EXPERIMENTS A. Human Manual Input The dataset used to compare and evaluate the system results is based on a random selection of a total of forty webpages taken form dmoz.org, five form each category which total up to eight different major categories. These categories are Art, Business, Computer, Games, Health, Science, News, and Sports. The webpages are manually categorized into eight different categories. The manual categorization of webpages will then be compared to the system-generated categories to evaluate its accuracy. 用於比較和評估系統結果的數據集根據隨機選擇了一個採取的形式 dmoz.org 五 個,每個類別總數達到 8 個不同的主要分類四十網頁共有。這些類別是藝術,商 業,電腦,遊戲,健康,科學,新聞,體育。手動分類為 8 個不同類別的網頁。 手動網頁分類,然後將系統生成的類別進行比較,以評估其準確性。 B. System Evaluations We evaluate our classification technique by using the categories that is created manually in the previous section. We then use our system to evaluate the category of web pages so that we can compare them with our manual input. When the category generated from the system match the human evaluated category for the same page, a "matched" status will be inserted to indicate the system has correctly identified and classified the page correctly. If the system generated category differs from the human evaluated category, a "not matched" status will be recorded to indicate that the system has failed to identify and classify the page correctly. For the forty pages that we have tested, the results show that twenty-nine out of forty pages has been classified correctly. In other words, the results indicate that 72.5% of the pages have been classified correctly. Our webpage classification technique is able to perform its operation in 200 miliseconds. 我們評估我們的分類技術,通過使用手動方式在前面的部分創建的類別。然後我 們使用本系統評估網頁的類別,使我們可以比較他們與我們的手工輸入。當從系 統中產生的類別相匹配人類在同一頁的評估類別,“匹配”的地位將被插入來表 示系統已經正確識別和正確歸類了網頁。 如果系統生成的類別不同,從人力的評估類別的“不匹配”的地位將被記錄表明, 該系統未能正確識別和分類網頁。 對於 40 頁,我們已經測試,結果表明,40 頁中,有二十九個已經正確分類。換 句話說,結果表明,72.5%的網頁被正確的分類。 我們的網頁分類技術是能夠在 200 毫秒執行其操作。 V. CONCLUSION We propose a novel ontological based classifier for webpages. Unlike existing methods, our approach is fast and accurate as we use segmentation and ontology techniques for classifying a webpage. We segment a webpage into several regions, and then match the keywords in each of these regions to construct a set of labels. This approach could significantly increase our speed performance as we not match all the keywords in the webpage as used in conventional techniques. Unlike existing techniques, we match keywords based on their semantic properties rather than textual contents. Matching keywords using their semantic properties could lead to high accuracy as we can identify related keywords with similar semantic properties. 我們提出了一種新的基於本體論的網頁的分類。與現行方法不同的是,我們的方 法快速和準確,因為我們使用的網頁分類的分割和本體論技術。我們網頁的分割 成幾個區域,然後在這些區域,每個的關鍵字的匹配,構建了一套標籤。這種方 法可以顯著增加我們的速度績效,因為我們不匹配網頁中的全部關鍵字,在傳統 技術中使用。有別於現有的技術,我們根據其語義的屬性而不是文字內容的關鍵 字相匹配。匹配使用的語義屬性的關鍵字,將導致準確度高,我們能夠識別類似 的詞語的語義屬性的相關關鍵字。 VI. REFERENCES