A Fast Search Algorithm for Vector Quantization. Instructor: 王立洋


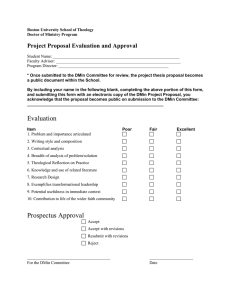

Prepared by: 蔡鐘葳 (student ID M9535204)

Outline

▓ Full Search for VQ
▓ Principle
▓ Fast Search for VQ
▓ Experimental Results
▓ Reference

Full Search for VQ

Advantages: the method is simple and direct, and it gives lower distortion and therefore better image quality.

Disadvantage: the encoding and decoding computations are time-consuming.

Principle

A fast search rejects those codewords that cannot be the nearest codeword. It therefore produces the same output as the conventional full search algorithm.

Fast Search Algorithms

ENNS: Equal-average nearest neighbor search
IENNS: Improved equal-average nearest neighbor search
EENNS: Equal-average equal-variance nearest neighbor search
IEENNS: Improved equal-average equal-variance nearest neighbor search

Preview

Let $X = (x_1, x_2, \ldots, x_k)$ be a $k$-dimensional vector, where $k$ is an even number.

$X(h) = (x_1, x_2, \ldots, x_h)$ is the $h$-dimensional subvector of $X$.

$X_f = (x_1, x_2, \ldots, x_{k/2})$ is composed of the first half of the components of $X$.

$X_s = (x_{k/2+1}, x_{k/2+2}, \ldots, x_k)$ is composed of the remaining components of $X$.

The sum of the $h$ vector components of $X(h)$ can be expressed as:

$$S_{X(h)} = \sum_{i=1}^{h} x_i$$

where $1 \le h \le k$ and $S_{X(h)}$ is the sum of the $h$-dimensional vector $X(h)$. If $h = k$, we denote the sum of the vector $X$ as $S_X$. $S_{X_f}$ and $S_{X_s}$ are the sums of the vector components of $X_f$ and $X_s$.

If the mean of the $h$-dimensional vector $X(h)$ is $m_{X(h)} = S_{X(h)}/h$, then the variance of $X(h)$, as the term is used in the criteria that follow, can be expressed as:

$$V_{X(h)} = \sqrt{\sum_{i=1}^{h} \left(x_i - m_{X(h)}\right)^2}$$

Likewise, $V_{X_f}$ and $V_{X_s}$ are the variances of $X_f$ and $X_s$.

ENNS

The ENNS algorithm takes advantage of the fact that the nearest codeword is usually among the codewords whose sum is close to the sum of the input vector, that is, those with a small squared sum distance $(S_X - S_{C_j})^2$. Here $D(X, C_j) = \sum_{i=1}^{k} (x_i - c_{ji})^2$ is the squared Euclidean distortion between $X$ and codeword $C_j$. Assuming the current minimum distortion is $D_{\min}$, the core idea of the ENNS algorithm can be stated as follows:

if $(S_X - S_{C_j})^2 \ge k \cdot D_{\min}$ then $D(X, C_j) \ge D_{\min}$

The codewords $C_j$ for which $S_X \ge S_{C_j} + \sqrt{k \cdot D_{\min}}$ or $S_X \le S_{C_j} - \sqrt{k \cdot D_{\min}}$ are therefore eliminated. Once one of these conditions is satisfied, the search stops in that direction.
The search then continues in the other direction until the nearest codeword is found. The search procedure is shown in Fig. 1.

Fig. 1. Search procedure of ENNS.

IENNS

The basic inequality for the IENNS algorithm is as follows:

if $(S_{X(h)} - S_{C_j(h)})^2 \ge v \cdot D_{\min}$ then $D(X, C_j) \ge D_{\min}$

where $h \le v \le k$. Setting $v = h = k/2$, with $k$ an even number, gives two inequalities.

a) For vector $X_f$ and codeword $C_{jf}$:

if $(S_{X_f} - S_{C_{jf}})^2 \ge \frac{k}{2} \cdot D_{\min}$ then $D(X, C_j) \ge D_{\min}$

b) For vector $X_s$ and codeword $C_{js}$:

if $(S_{X_s} - S_{C_{js}})^2 \ge \frac{k}{2} \cdot D_{\min}$ then $D(X, C_j) \ge D_{\min}$

Thus, besides the elimination criterion of ENNS, inequalities (a) and (b) can be used to eliminate more unlikely codewords.

EENNS

With ENNS, however, two vectors with the same mean value may still be far apart. To handle this case, the EENNS algorithm introduces the variance to reject more codewords.

The EENNS algorithm uses the following elimination criterion to eliminate unlikely codewords:

if $(V_X - V_{C_j})^2 \ge D_{\min}$ then $D(X, C_j) \ge D_{\min}$

IEENNS

The IEENNS algorithm uses the variance and the sum of a vector simultaneously, while the EENNS algorithm uses them separately. It rests on the following theorem.

Theorem 1: $k \cdot D(X, C_j) \ge (S_X - S_{C_j})^2 + k \cdot (V_X - V_{C_j})^2$

Based on this theorem, the following inequality can be obtained:

if $(S_X - S_{C_j})^2 + k \cdot (V_X - V_{C_j})^2 \ge k \cdot D_{\min}$ then $D(X, C_j) \ge D_{\min}$

Theorem 2: $v \cdot D(X, C_j) \ge (S_{X(h)} - S_{C_j(h)})^2 + h \cdot (V_{X(h)} - V_{C_j(h)})^2$, where $h \le v \le k$

Based on Theorem 2, three elimination criteria can be stated as follows.
a) For vector $X$ and codeword $C_j$, setting $v = h = k$ gives the following elimination criterion:

if $(S_X - S_{C_j})^2 + k \cdot (V_X - V_{C_j})^2 \ge k \cdot D_{\min}$ then $D(X, C_j) \ge D_{\min}$

b) For vector $X_f$ and codeword $C_{jf}$, setting $v = h = k/2$ gives the following elimination criterion:

if $(S_{X_f} - S_{C_{jf}})^2 + \frac{k}{2} \cdot (V_{X_f} - V_{C_{jf}})^2 \ge \frac{k}{2} \cdot D_{\min}$ then $D(X, C_j) \ge D_{\min}$

c) For vector $X_s$ and codeword $C_{js}$, setting $v = h = k/2$ gives the following elimination criterion:

if $(S_{X_s} - S_{C_{js}})^2 + \frac{k}{2} \cdot (V_{X_s} - V_{C_{js}})^2 \ge \frac{k}{2} \cdot D_{\min}$ then $D(X, C_j) \ge D_{\min}$

Experimental Results

Unit: second(s)

Reference

[1] Jeng-Shyang Pan, Zhe-Ming Lu, and Sheng-He Sun, “An Efficient Encoding Algorithm for Vector Quantization Based on Subvector Technique,” IEEE Signal Processing Lett., vol. 12, Mar. 2003.
[2] S. J. Baek, B. K. Jeon, and K. M. Sung, “A fast encoding algorithm for vector quantization,” IEEE Signal Processing Lett., vol. 12, Mar. 2003.
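
Supplementary sketch

The sum and variance elimination criteria from the ENNS and IEENNS slides can be sketched in Python roughly as follows. This is a minimal illustration under stated assumptions, not the implementation behind the experimental results. The function names (`sum_and_variance`, `precompute`, `fast_search`) are hypothetical. $D$ is assumed to be the squared Euclidean distortion, the variance is taken as the square root of the summed squared deviations, and the half-vector tests weight the variance term by the subvector dimension $k/2$. Codewords are visited in order of increasing $|S_X - S_{C_j}|$, a simple stand-in for the two-directional search, so the ENNS test can end the scan early.

```python
import math

def sum_and_variance(v):
    """Return (S, V): component sum and sqrt of the summed squared deviations."""
    s = sum(v)
    m = s / len(v)
    return s, math.sqrt(sum((x - m) ** 2 for x in v))

def distortion(x, c):
    """Squared Euclidean distortion D(X, C) (assumed distortion measure)."""
    return sum((a - b) ** 2 for a, b in zip(x, c))

def full_search(x, codebook):
    """Baseline: compute the distortion to every codeword."""
    dists = [distortion(x, c) for c in codebook]
    j = min(range(len(codebook)), key=dists.__getitem__)
    return j, dists[j]

def precompute(codebook):
    """Offline table of (S, V) for each codeword and for its two halves."""
    half = len(codebook[0]) // 2  # assumes an even dimension k
    return [(sum_and_variance(c),
             sum_and_variance(c[:half]),
             sum_and_variance(c[half:])) for c in codebook]

def fast_search(x, codebook, table):
    """Nearest codeword using criteria (a)-(c); returns (index, D, #tested)."""
    k = len(x)
    half = k // 2
    sx, vx = sum_and_variance(x)
    sxf, vxf = sum_and_variance(x[:half])
    sxs, vxs = sum_and_variance(x[half:])
    # Visit codewords whose sum is closest to SX first.
    order = sorted(range(len(codebook)), key=lambda j: abs(sx - table[j][0][0]))
    best, d_min, tested = -1, math.inf, 0
    for j in order:
        (sc, vc), (scf, vcf), (scs, vcs) = table[j]
        # ENNS: every remaining codeword has an even larger sum gap, so stop.
        if (sx - sc) ** 2 >= k * d_min:
            break
        # Criterion (a): whole vector, v = h = k.
        if (sx - sc) ** 2 + k * (vx - vc) ** 2 >= k * d_min:
            continue
        # Criterion (b): first half, v = h = k/2.
        if (sxf - scf) ** 2 + half * (vxf - vcf) ** 2 >= half * d_min:
            continue
        # Criterion (c): second half, v = h = k/2.
        if (sxs - scs) ** 2 + half * (vxs - vcs) ** 2 >= half * d_min:
            continue
        d = distortion(x, codebook[j])  # full distortion only for survivors
        tested += 1
        if d < d_min:
            best, d_min = j, d
    return best, d_min, tested
```

Calling `fast_search(x, codebook, precompute(codebook))` should return the same minimum distortion as `full_search(x, codebook)`, while the third return value counts how many full distortion computations survived the rejection tests.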