Face Recognition by Support Vector Machines



Guodong Guo, Stan Z. Li, and Kapluk Chan

School of Electrical and Electronic Engineering

Nanyang Technological University, Singapore 639798

Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition 2000

Advisor: Prof. 王啟州

Student: 陳桂華

Outline

Abstract

Introduction

Basic Theory of Support Vector Machines

Multi-class Recognition

Experimental Results-Face Recognition on the ORL Face Database

Experimental Results-Face Recognition on a Larger Compound Database

Conclusions

Abstract

In this paper, SVMs with a binary tree recognition strategy are used to tackle the face recognition problem.

We compare SVM-based recognition with the standard eigenface approach using the Nearest Center Classification (NCC) criterion.

Introduction

Face recognition technology can be used in a wide range of applications, such as identity authentication, access control, and surveillance.

We propose to construct a binary tree structure to recognize the test samples.

Basic Theory of Support Vector Machines (1/5)

Fig 1. Classification between two classes using hyperplanes

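Basic Theory of Support Vector Machines (2/5)

Given a set of points

$\{(x_i, y_i)\},\ i = 1, \dots, n, \qquad x_i \in \mathbb{R}^d,\ y_i \in \{+1, -1\}$   (1)

find a line (hyperplane)

$f(x) = w^T x + b$   (2)

such that all points with $f(x) > 0$ fall on the $y_i = +1$ side and all points with $f(x) < 0$ fall on the $y_i = -1$ side:

$f(x) > 0 \Rightarrow y_i = +1, \qquad f(x) < 0 \Rightarrow y_i = -1$   (3)

The sign of $f(x)$ then tells which of the two sets a point belongs to.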
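A minimal Python sketch of the sign rule above, assuming plain lists for $w$ and $x$. The weights and bias are hypothetical values chosen only for illustration, and a point with $f(x) = 0$ (exactly on the line) is reported as 0.

```python
def decision_value(w, b, x):
    """Return f(x) = w^T x + b for plain Python lists w and x."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(w, b, x):
    """Return +1 if f(x) > 0, -1 if f(x) < 0, and 0 if x lies on the hyperplane."""
    f = decision_value(w, b, x)
    if f > 0:
        return 1
    if f < 0:
        return -1
    return 0

w, b = [1.0, -1.0], 0.5              # hypothetical hyperplane x1 - x2 + 0.5 = 0
print(classify(w, b, [2.0, 1.0]))    # f = 2 - 1 + 0.5 = 1.5  -> 1
print(classify(w, b, [0.0, 2.0]))    # f = 0 - 2 + 0.5 = -1.5 -> -1
```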

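Basic Theory of Support Vector Machines (3/5)

The two dashed lines are the support hyperplanes (with $w$ and $b$ scaled so that they sit at $\pm 1$):

$w^T x + b = +1$   (4)

$w^T x + b = -1$   (5)

The middle line is the optimal separating hyperplane $w^T x + b = 0$; its distance to each support hyperplane is $d = 1/\|w\|$.

$\text{margin} = 2d = 2/\|w\|$, so the smaller $\|w\|$ is, the larger the margin.

The constraints can be written as the following two inequalities:

$w^T x_i + b \ge +1 \quad \text{for } y_i = +1$   (6)

$w^T x_i + b \le -1 \quad \text{for } y_i = -1$   (7)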
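The margin and constraints (6) and (7) can be checked numerically. This sketch uses hypothetical toy data with two points per class on the first axis; for this data, $w = (0.5, 0)$, $b = 0$ is the smallest-norm choice that still satisfies both constraints, so it gives the largest margin.

```python
import math

def margin(w):
    """Margin 2d = 2 / ||w|| between the support hyperplanes w^T x + b = +1 and -1."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

def satisfies_constraints(w, b, points, labels):
    """Check (6) and (7): w^T x_i + b >= +1 if y_i = +1, and <= -1 if y_i = -1."""
    for x, y in zip(points, labels):
        f = sum(wi * xi for wi, xi in zip(w, x)) + b
        if y == 1 and f < 1:
            return False
        if y == -1 and f > -1:
            return False
    return True

# Hypothetical toy data: two points per class on the x1 axis.
points = [[2.0, 0.0], [3.0, 0.0], [-2.0, 0.0], [-3.0, 0.0]]
labels = [1, 1, -1, -1]

print(satisfies_constraints([0.5, 0.0], 0.0, points, labels), margin([0.5, 0.0]))  # True 4.0
print(satisfies_constraints([1.0, 0.0], 0.0, points, labels), margin([1.0, 0.0]))  # True 2.0
```

Both choices of $w$ separate the data, but the larger $\|w\|$ halves the margin, which is why the objective minimizes $\|w\|$.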

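Basic Theory of Support Vector Machines (4/5)

The two constraints can be combined into a single constraint:

$y_i (w^T x_i + b) - 1 \ge 0$   (8)

Putting these together gives the following objective function:

$\min_{w, b}\ \frac{1}{2}\|w\|^2 \quad \text{subject to} \quad y_i (w^T x_i + b) - 1 \ge 0,\ \forall i$

Using the method of Lagrange multipliers, this becomes a quadratic programming problem: we look for the $w$, $b$ and $\alpha_i \ge 0$ at the saddle point of $L$, which is minimized over $w, b$ and maximized over $\alpha_i$:

$L(w, b, \alpha) = \frac{1}{2}\|w\|^2 - \sum_{i=1}^{n} \alpha_i \left[ y_i (w^T x_i + b) - 1 \right]$   (9)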
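As a standard step that the slides do not show, setting the derivatives of (9) with respect to $w$ and $b$ to zero gives the stationarity conditions; substituting them back into (9) yields the dual problem in the multipliers $\alpha_i$ alone:

```latex
\frac{\partial L}{\partial w} = w - \sum_{i=1}^{n} \alpha_i y_i x_i = 0
  \;\Rightarrow\; w = \sum_{i=1}^{n} \alpha_i y_i x_i
\qquad
\frac{\partial L}{\partial b} = -\sum_{i=1}^{n} \alpha_i y_i = 0
  \;\Rightarrow\; \sum_{i=1}^{n} \alpha_i y_i = 0

\max_{\alpha}\; \sum_{i=1}^{n} \alpha_i
  - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j \, x_i^T x_j
\quad \text{subject to} \quad \alpha_i \ge 0, \;\; \sum_{i=1}^{n} \alpha_i y_i = 0
```

The decision function then becomes $f(x) = \sum_{i=1}^{n} \alpha_i y_i \, x_i^T x + b$, so only points with $\alpha_i > 0$ contribute.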

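Basic Theory of Support Vector Machines (5/5)

The extremum satisfying the constraints occurs where:

when $y_i (w^T x_i + b) - 1 = 0$, $\alpha_i \ge 0$;

when $y_i (w^T x_i + b) - 1 > 0$, $\alpha_i$ must be 0.

Hence only points lying on the support hyperplanes can have $\alpha_i > 0$; these are the support vectors.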
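These conditions can be checked on a small example. In this hypothetical three-point data set, $w$ is rebuilt from the multipliers via $w = \sum_i \alpha_i y_i x_i$ (the standard stationarity condition of (9)), and each point is tested for complementary slackness. The $\alpha_i$ values are worked out by hand for this toy data, not produced by a solver.

```python
def kkt_report(w, b, alphas, points, labels, tol=1e-9):
    """Return (g_i, alpha_i, is_support_vector) per point, where g_i = y_i (w^T x_i + b) - 1."""
    if abs(sum(a * y for a, y in zip(alphas, labels))) > tol:
        raise ValueError("stationarity in b violated: sum_i alpha_i y_i != 0")
    report = []
    for x, y, a in zip(points, labels, alphas):
        g = y * (sum(wi * xi for wi, xi in zip(w, x)) + b) - 1
        if a < -tol or g < -tol:
            raise ValueError("need alpha_i >= 0 and g_i >= 0")
        # Complementary slackness: alpha_i * g_i == 0, so g_i > 0 forces alpha_i = 0.
        if abs(a * g) > tol:
            raise ValueError("complementary slackness violated")
        report.append((g, a, a > tol))
    return report

# Hypothetical toy data: two points on the support hyperplanes, one farther away.
points = [[1.0, 0.0], [-1.0, 0.0], [3.0, 0.0]]
labels = [1, -1, 1]
alphas = [0.5, 0.5, 0.0]   # worked out by hand for this data, not from a solver
# Rebuild w from the multipliers: w = sum_i alpha_i y_i x_i.
w = [sum(a * y * x[k] for a, y, x in zip(alphas, labels, points)) for k in range(2)]
b = 0.0

for g, a, is_sv in kkt_report(w, b, alphas, points, labels):
    print(f"g = {g:.1f}, alpha = {a:.1f}, support vector: {is_sv}")
```

The third point satisfies its constraint with slack ($g = 2$), so its multiplier is 0 and it does not affect $w$.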

Multi-class Recognition

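There are two basic strategies for extending binary SVMs to multi-class recognition. One is the one-against-all strategy, which classifies between each class and all the remaining classes; the other is the one-against-one strategy, which classifies between each pair of classes.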
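For $c$ classes, one-against-all trains $c$ binary SVMs, while one-against-one trains one SVM per unordered pair, $c(c-1)/2$. A short sketch of the count (the function name is hypothetical):

```python
def num_binary_svms(c):
    """Number of binary SVMs to train for c classes under each strategy."""
    one_against_all = c                 # each class vs. all the remaining classes
    one_against_one = c * (c - 1) // 2  # one SVM per unordered pair of classes
    return one_against_all, one_against_one

print(num_binary_svms(8))    # (8, 28)
print(num_binary_svms(137))  # (137, 9316)
```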

Fig 2. The binary tree structure for 8-class face recognition.
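A sketch of a bottom-up binary tree like the one in Fig 2, under the assumption that each node runs the one-against-one SVM for the two classes that meet there and the winner moves up a level. `pairwise_winner` is a hypothetical callback standing in for the trained pairwise SVMs, and letting an unpaired class advance without a comparison is an assumption for odd class counts. The toy rule in the usage example (closest 1-D center) is not an SVM.

```python
from typing import Callable, List, Sequence

def binary_tree_recognize(classes: Sequence[int],
                          pairwise_winner: Callable[[int, int], int]) -> int:
    """Bottom-up tournament over the candidate classes.

    pairwise_winner(i, j) is a hypothetical stand-in for evaluating the trained
    one-against-one SVM of classes i and j on the test sample; it must return i or j.
    """
    level: List[int] = list(classes)
    if not level:
        raise ValueError("need at least one class")
    while len(level) > 1:
        next_level: List[int] = []
        # Compare neighbours two at a time; each winner moves up one level.
        for k in range(0, len(level) - 1, 2):
            next_level.append(pairwise_winner(level[k], level[k + 1]))
        # Assumption: with an odd count, the unpaired class advances unchallenged.
        if len(level) % 2 == 1:
            next_level.append(level[-1])
        level = next_level
    return level[0]

# Usage with a toy rule (NOT an SVM): the class whose 1-D center is closer to x wins.
centers = [float(k) for k in range(8)]   # hypothetical class centers 0.0 .. 7.0
x = 5.2                                  # hypothetical test sample
calls = []

def toy_winner(i: int, j: int) -> int:
    calls.append((i, j))
    return i if abs(x - centers[i]) <= abs(x - centers[j]) else j

print(binary_tree_recognize(range(8), toy_winner))  # 5
print(len(calls))                                    # 7 comparisons for 8 classes
```

Because every comparison eliminates exactly one class, recognizing a test sample takes only $c - 1$ pairwise evaluations, even though $c(c-1)/2$ SVMs are trained.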

Experimental Results-Face Recognition on the ORL Face Database (1/5)

The first experiment is performed on the Cambridge ORL face database, which contains 40 distinct persons. Each person has ten different images, taken at different times.

Fig 3. Four individuals (each in one row) in the ORL face database.

Experimental Results-Face Recognition on the ORL Face Database (2/5)

In earlier work on the ORL database, a hidden Markov model (HMM)-based approach was used, and the best model resulted in a 13% error rate.

Later, Samaria extended the top-down HMM [14] to pseudo two-dimensional HMMs [13], and the error rate was reduced to 5%.

Experimental Results-Face Recognition on the ORL Face Database (3/5)

Lawrence et al. [6] took the convolutional neural network (CNN) approach to classifying the ORL database, and the best reported error rate is 3.83% (averaged over three runs).

For the face recognition experiments on the ORL database, we randomly select 200 samples as the training set.
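A sketch of the random split, assuming the 40 × 10 = 400 ORL images are indexed as (person, image) pairs and the 200 training samples are drawn uniformly from all of them. The slides do not say whether the draw is balanced per person, and the seed is arbitrary.

```python
import random

def random_split(num_persons=40, images_per_person=10, num_train=200, seed=0):
    """Randomly choose num_train (person, image) samples for training; the rest form the test set."""
    samples = [(p, k) for p in range(num_persons) for k in range(images_per_person)]
    rng = random.Random(seed)           # fixed seed only so the split is reproducible
    train = rng.sample(samples, num_train)
    chosen = set(train)
    test = [s for s in samples if s not in chosen]
    return train, test

train, test = random_split()
print(len(train), len(test))  # 200 200
```

With a purely random draw, some persons may end up with more training images than others; a per-person draw would be a different design choice.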

Experimental Results-Face Recognition on the ORL Face Database (4/5)

The remaining 200 samples are used as the test set.

The average minimum error rate of the SVM is 3.0%, while that of NCC is 5.25%.
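NCC assigns a test sample to the class whose center (mean training feature vector) is nearest. A minimal sketch, assuming the features have already been projected onto the eigenfaces and that distance is Euclidean; the 2-D data below are hypothetical.

```python
import math
from collections import defaultdict

def fit_centers(features, labels):
    """Return {label: mean feature vector} computed from the training samples."""
    sums = {}
    counts = defaultdict(int)
    for f, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(f)
        sums[y] = [s + v for s, v in zip(sums[y], f)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def ncc_predict(centers, x):
    """Assign x to the label whose center is nearest in Euclidean distance."""
    return min(centers, key=lambda y: math.dist(centers[y], x))

def error_rate(centers, features, labels):
    """Fraction of samples whose nearest center has the wrong label."""
    wrong = sum(ncc_predict(centers, f) != y for f, y in zip(features, labels))
    return wrong / len(labels)

# Hypothetical 2-D "eigenface coefficients" for two people, a and b.
train_f = [[0.0, 0.0], [0.0, 2.0], [4.0, 0.0], [4.0, 2.0]]
train_y = ["a", "a", "b", "b"]
test_f = [[1.0, 1.0], [3.5, 0.0]]
test_y = ["a", "b"]

centers = fit_centers(train_f, train_y)
print(centers)                              # {'a': [0.0, 1.0], 'b': [4.0, 1.0]}
print(error_rate(centers, test_f, test_y))  # 0.0
```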

Experimental Results-Face Recognition on the ORL Face Database (5/5)

Fig 4. Comparison of error rates versus the number of eigenfaces of the standard NCC and SVM algorithms on the ORL face database.

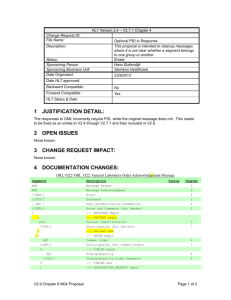

Experimental Results-Face Recognition on a Larger Compound Database

The second experiment is performed on a compound data set of 1079 face images of 137 persons.

The training set is composed of 544 images.

The remaining 535 images are used as the test set.

In this experiment, the number of classes is $c = 137$, and the SVM-based methods are trained for $c(c-1)/2 = 137 \times 136 / 2 = 9316$ pairs.


To construct the binary trees for testing, we decompose the 137 classes as 137 = 32 + 32 + 32 + 32 + 8 + 1.
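The slides give only the result for 137 classes. One rule that reproduces it, offered as an assumption rather than the authors' stated procedure, is to fill as many 32-class trees as possible and then split the remainder into powers of two (the function name and `max_tree` parameter are hypothetical):

```python
def decompose(c, max_tree=32):
    """Split c classes into full max_tree-class groups plus the binary expansion of the remainder."""
    if max_tree < 1 or max_tree & (max_tree - 1):
        raise ValueError("max_tree must be a power of two")
    parts = [max_tree] * (c // max_tree)
    remainder = c % max_tree
    power = max_tree // 2
    # Every power of two below max_tree is checked, so the remainder is fully covered.
    while power:
        if remainder & power:
            parts.append(power)
        power //= 2
    return parts

print(decompose(137))  # [32, 32, 32, 32, 8, 1]
```

Power-of-two group sizes let each subtree be a complete binary tree like the one in Fig 2.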


The minimum error rate of the SVM is 8.79%, which is much better than the 15.14% of NCC.

Fig 5. Comparison of error rates versus the number of eigenfaces of the standard NCC and SVM algorithms on the compound face database.

Conclusions

We have presented face recognition experiments using linear support vector machines with a binary tree classification strategy.

The experimental results show that SVMs are a better learning algorithm than the nearest center approach for face recognition.

Thank you for your attention!