Code Generation with DxT: Improved Prototyping and Development
Bryan Marker and Dillon Huff


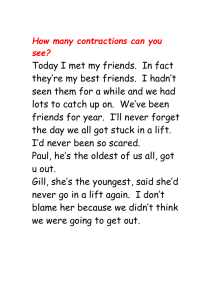

The University of Texas at Austin
BLIS2014

Recap
• Last year I presented how code generation helps
  – to improve or (performance) validate existing code
  – to explore and develop implementation options for new code
• Generated distributed-memory dense linear algebra (DLA) code using the Elemental API
• Generated sequential and parallel BLAS3 code for large matrices using BLIS packing and macrokernel calls as an API
  – Quickly explored parallelization options via code generation
  – Performance rivaled or beat MKL

Code Generation
• Now, we are generating code for distributed-memory tensor contractions
  – Using Martin Schatz’s notation and API for output code
• Dillon will discuss complementary work to generate code at a much lower level
  – Calling vector intrinsics

Code Generation
• In all cases, a system is searching many software design options as a person would
  – Parallelization options
  – Blocking options
  – Loop transformations
• Sometimes the combination of options is not massive, but people still make mistakes
  – Correctness bugs
  – Performance bugs
• Sometimes the combination is massive and a system must be used

DESIGN BY TRANSFORMATION

Graphs
• Data-flow, directed acyclic graphs
• A box or node represents an operation
  – An interface without implementation details
  – OR a primitive operation that maps to given code
• A starting algorithm specification is represented as a graph without implementation details
• We want to transform it into a graph with complete implementation details

Transform with Implementations
• Refinements replace a box without implementation details
  – Choose a specific way to implement the box’s functionality
  – E.g., choose how to parallelize a contraction or how to load data into CPU registers
[Figure: refining an interface in graph1 to a primitive in graph2]

Transform to Optimize
• Optimizations replace a subgraph with another subgraph
  – Same functionality
  – A different way of implementing it
  – E.g., fuse loops or remove redundant communication
[Figure: an interface implemented by an "ok" subgraph and by a "better" one]

Examples from Past Work
• Refinements choose
  – Parallelization of BLAS operations like Gemm and Trsm
  – Dimensions over which to partition data (maybe from FLAME-derived algorithms)
• Optimizations
  – Explore alternate collective communication patterns to redistribute data
  – Remove redundant communication or computation
• Just rewrite rules to explore design options

Tensors!

Tensors!
• Martin Schatz will talk about his notation for parallelizing contractions
  – Generalization of Jack Poulson’s Elemental notation
• Tensors have an arbitrary number of modes (aka dimensions)
  – Not known until the algorithm is specified
• Therefore, Martin cannot implement (an efficient) distributed contraction as Jack has done for Gemm
• Infinite options for contractions a user might need
  – Infinite options for data distributions within the contractions

Let’s Look at Code

AND IT WAS A MOVING TARGET (KIND OF)

Code Generation!
• We encode software design knowledge
  – The knowledge one would use to implement a given contraction (once it is known)
• Apply design knowledge automatically for a user-specified contraction
  – Explore a search space of options
  – Use cost estimates to rank-order them
  – Output the fastest
• Notice that even implementing a given contraction is HARD
  – Could have tens of billions of ways to distribute data and parallelize for a relatively simple contraction
  – Why should a person explore the options manually?

Knowledge Encoding
• What are the valid ways to parallelize a contraction and how must the data be distributed to enable that?
• Three options
  – Keep tensor A, B, or C stationary, i.e., don’t communicate it from its default distribution
  – Encoded as refinements in DxT
• Martin’s proposal shows how to formalize these ideas
  – Martin will talk about that later
  – We encode his ideas
• Two of the inputs must be redistributed
• The output might need to be redistributed (and summed across processes)

Martin Schatz. “Anatomy of Parallel Computation with Tensors.” PhD Proposal. UT CS TR-3-21. 2013.

Knowledge Encoding
• How can relatively simple communication patterns from Martin’s API be combined to form arbitrarily complex communication?
• Martin’s API provides collective communication for an arbitrary number of modes
  – AllToAll, AllGather, ReduceScatter, etc.
  – Not combinations of multiple collectives
• How can we combine these collectives?

Knowledge Encoding
• How can we use complicated communication to redistribute data as needed?
• Allows for communication in an arbitrary number of modes and with arbitrary complexity
  – Implementations are generated when the required communication pattern is known
• Refinements encode how to break down arbitrary communication into pieces of implemented communication patterns

Knowledge Encoding
• Parallelizing a given contraction is not sufficient
  – The same tensor might be redistributed for multiple contractions
• How can combinations of communication be optimized?
• How can we reduce memory operations that copy/pack data?
• Optimization transformations explore these options

Implementation Search Space
• This leads to many options
  – Combinatorial search space
  – Billions of implementations
• We are studying how to limit the search space by cutting out clearly bad options
  – A developer does not consider every single design option
  – They use intuition/heuristics to limit consideration
• Search optimization: Marker, van de Geijn, Batory. “Understanding Performance Stairs: Elucidating Heuristics.” ASE 2014.
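The refine, optimize, and rank loop described in these slides can be illustrated with a deliberately tiny Python sketch. It is not DxTer’s implementation: it assumes a linear chain of boxes instead of a DAG, and the box names (`Contract`, `Redistribute`), the target distributions (`ToDistX`, `ToDistY`), and all cost numbers are hypothetical. Refinements pick one implementation per interface box, one optimization removes a redistribution that exactly repeats the previous one, and every resulting candidate is ranked by an estimated cost.

```python
from itertools import product

# Hypothetical alternatives for each interface box, with made-up cost
# estimates. Real DxTer refinements and cost models are far richer.
REFINEMENTS = {
    "Contract": {"StationaryA": 5.0, "StationaryB": 4.0, "StationaryC": 6.0},
    "Redistribute": {"ToDistX": 2.0, "ToDistY": 3.0},
}
COST = {impl: c for alts in REFINEMENTS.values() for impl, c in alts.items()}


def refinements(graph):
    """Yield every fully refined graph: one implementation per interface box.

    A graph here is a linear list of (box, tensor) pairs, a simplification
    of DxT's data-flow DAGs.
    """
    options = [sorted(REFINEMENTS[box]) for box, _ in graph]
    for combo in product(*options):
        yield [(impl, tensor) for impl, (_, tensor) in zip(combo, graph)]


def remove_redundant_redistribution(impl):
    """Optimization: drop a redistribution that repeats the previous node exactly."""
    out = []
    for node in impl:
        if out and node == out[-1] and node[0] in REFINEMENTS["Redistribute"]:
            continue  # same tensor, same target distribution: already done
        out.append(node)
    return out


def estimate(impl):
    """Cost estimate of a refined graph: sum of per-node estimates."""
    return sum(COST[name] for name, _ in impl)


def search(graph, optimize=True):
    """Rank every candidate by estimated cost; return (best, number ranked)."""
    ranked = []
    for impl in refinements(graph):
        if optimize:
            impl = remove_redundant_redistribution(impl)
        ranked.append((estimate(impl), impl))
    ranked.sort()
    return ranked[0], len(ranked)
```

For the hypothetical graph `[("Redistribute", "A"), ("Redistribute", "A"), ("Contract", "C")]`, `search` ranks 2 × 2 × 3 = 12 candidates, and with the optimization enabled the cheapest estimate drops because the repeated redistribution of `A` is removed. Even this toy shows the search space growing as a product of per-box options, which is why cutting out clearly bad options matters.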
Lessons Learned
• By formally defining and encoding design options, we learn a lot
• Encoding the knowledge to implement all similar code is a different experience from implementing code directly
  – You have to justify your design choices

Lessons Learned
• We find misconceptions in what we need from the API
  – New communication patterns
  – New computation variants

Lessons Learned
• We identify new optimizations
  – We spend less time encoding knowledge than we would spend implementing the code directly
  – We are free to identify (and effect) optimizations
• Optimizations can be turned on and off easily, so we can see their effects
• We can add optimizations to the API and to DxTer hand-in-hand
  – Quickly re-generate all code with new optimizations
  – Trust generated code for correctness

AND NOW FOR SOMETHING COMPLETELY DIFFERENT

Small DLA
• Think of the software stack for a DLA library
  – Layers of loops reduce a large problem to a problem that is small in some (or all) dimensions
  – Small, fixed problem sizes are not usually an optimized case in libraries
• Such operations also show up as part of
  – Optimization algorithms
  – Real-time control
  – Tensor contractions
  – Small dense blocks in sparse problems

Low-Level DLA
• Intel’s compilers are really good, but they’re not as good as expert developers
  – That’s why you pay for MKL
• DLA experts are very good
  – But they are not available to optimize every problem size in every application for every hardware architecture
• Once again, why not encode the experts’ design knowledge to enable a system to generate code?
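The "layers of loops" point from the Small DLA slide can be sketched in Python as follows. This is a simplified illustration, not the BLIS or DxTer loop structure (which also involves packing and a register-level microkernel); the block sizes `mb`, `nb`, and `kb`, the loop order, and the function names are arbitrary assumptions. Three outer loops partition a large `C += A B` so that the innermost call only ever sees a problem that is small in every dimension.

```python
def small_kernel(C, A, B, i0, j0, p0, mb, nb, kb):
    """Innermost update of an mb x nb block of C with an mb x kb block of A
    times a kb x nb block of B. A real library would call a specialized,
    fixed-size kernel here."""
    for i in range(i0, i0 + mb):
        for j in range(j0, j0 + nb):
            acc = C[i][j]
            for p in range(p0, p0 + kb):
                acc += A[i][p] * B[p][j]
            C[i][j] = acc


def blocked_gemm(C, A, B, mb=4, nb=4, kb=4):
    """C += A B using three layers of loops that partition the operands.

    Each small_kernel call sees at most an mb x nb x kb problem no matter
    how large m, n, and k are; only edge blocks are smaller.
    """
    m, k, n = len(A), len(B), len(B[0])
    for j0 in range(0, n, nb):          # partition columns of C and B
        for p0 in range(0, k, kb):      # partition the shared k dimension
            for i0 in range(0, m, mb):  # partition rows of C and A
                small_kernel(C, A, B, i0, j0, p0,
                             min(mb, m - i0), min(nb, n - j0), min(kb, k - p0))
```

Because the block sizes are fixed, the innermost call sees the same small problem size over and over (except at the edges), which is exactly the kind of case a generator can specialize.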
Existing Work
• The BTO BLAS does this for level-1 and level-2 operations
  – Genetic representation and search
• SPIRAL and LGen do this with a mathematical operator notation and rewrite rules
• LGen generates code calling functions that are specialized to a particular machine using vector intrinsics
  – Problem sizes small enough to fit in the L1 cache

DxT’s Flavor
• Bryan wanted to see if the DxT approach would work at this low level
• I was interested in DLA code generation
• We aimed to generate small BLAS-like operations using a DxT representation (data fit in L1)
  – Where does DxT fail?
  – How much encoded design knowledge can be reused from previous work?

Knowledge Encoding
• DxTer needs a way to do loop tiling and unrolling
• Loop fusion is already included in DxTer
• Encode how to implement (really) small operations in terms of loading into registers, computing with registers, and saving back to main memory

DxTer’s Three-Stage Approach
• Generate a variety of loop structures with different tiling, blocking, and loop fusion patterns
• Decide which loops to unroll and the factors to unroll them by
• Convert the updates specified in each implementation into primitives that map to C vector intrinsics
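Stages two and three of the approach above (unrolling, then mapping updates to vector intrinsics) can be sketched as a small Python code generator. This is a hypothetical illustration, not DxTer’s generator: it targets a fixed-size `y += alpha * x` update, assumes an x86 CPU with AVX and FMA (e.g., compiling the emitted C with `-mavx -mfma`), and the names `emit_axpy`, `VEC`, and `unroll` are made up. Each unrolled copy of the loop body handles one 256-bit register of four doubles, and because the problem size is known when code is generated, leftover elements are peeled off as straight-line scalar code.

```python
VEC = 4  # doubles per 256-bit AVX register (assumed target)


def emit_axpy(n, unroll):
    """Emit C source for y += alpha * x with a fixed problem size n.

    The vector loop body is unrolled `unroll` times; each copy updates one
    AVX register's worth (VEC doubles) with a fused multiply-add. Leftover
    elements become scalar statements, possible because n is known now.
    """
    step = VEC * unroll
    main = n - n % step  # elements covered by the unrolled vector loop
    out = [
        "#include <immintrin.h>",
        "",
        f"void axpy_{n}(double alpha, const double *x, double *y) {{",
        "  __m256d va = _mm256_set1_pd(alpha);",
    ]
    if main > 0:
        out.append(f"  for (int i = 0; i < {main}; i += {step}) {{")
        for u in range(unroll):
            off = u * VEC
            # y[i+off .. i+off+3] = alpha * x[...] + y[...]
            out.append(
                f"    _mm256_storeu_pd(y + i + {off}, _mm256_fmadd_pd(va, "
                f"_mm256_loadu_pd(x + i + {off}), _mm256_loadu_pd(y + i + {off})));"
            )
        out.append("  }")
    for i in range(main, n):  # statically peeled scalar remainder
        out.append(f"  y[{i}] += alpha * x[{i}];")
    out.append("}")
    return "\n".join(out)
```

`print(emit_axpy(19, 2))` emits a loop over the first 16 elements with two fused multiply-add statements per iteration, followed by scalar updates of `y[16]` through `y[18]`. Choosing `unroll` per problem size, rather than always unrolling fully, is in line with the later lesson that partial unrolling is important.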
[Figure: GFLOPS of dxter, mkl, and handwritten code; panels: "Square gemv", "Square gemm", and "Long n, Short m, varied p gemm"]

Lessons Learned
• DxTer’s partial unrolling is important
  – Full unrolling is not good
• Functions implemented independently via vector operations do not perform as well as code generated directly with vector operations
  – And the overhead in the representation is small
  – So we generate code that calls vector intrinsics
• Combining empirical testing with cost modeling is effective and possible in DxTer
  – Weed out the really bad options with cost models and test the rest
• Good compilers are important (when the code is amenable)
  – icc is much better than gcc and clang

Questions? Comments?
bamarker@cs.utexas.edu
www.cs.utexas.edu/~bamarker
Thanks to Martin Schatz, Field Van Zee, Tyler Smith, and TACC!
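The lesson about combining empirical testing with cost modeling can be sketched as a two-phase selection in Python. This is an assumed simplification, not DxTer’s actual procedure: `select_implementation`, `best_wall_time`, and the `keep` cutoff are hypothetical names, and the caller supplies the candidates and the cost model. A cheap estimate weeds out clearly bad candidates, and only the survivors are timed.

```python
import time


def select_implementation(candidates, estimate, measure, keep=3):
    """Pick an implementation in two phases.

    1. Rank all candidates with a cheap cost model and keep the `keep` best,
       weeding out clearly bad options without running them.
    2. Measure only the survivors and return the one with the lowest time.
    """
    shortlist = sorted(candidates, key=estimate)[:keep]
    return min(shortlist, key=measure)


def best_wall_time(fn, repeats=5):
    """Empirical measurement: best-of-`repeats` wall-clock time of fn()."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - start)
    return best
```

With real generated kernels one might call `select_implementation(kernels, model, best_wall_time)`. The `keep` parameter trades search time against risk: an inaccurate cost model can prune the truly fastest candidate, so timing a few survivors is safer than trusting the model’s single top pick.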