Chapter 2 Introductory Econometrics: A Modern Approach, 4 ed.


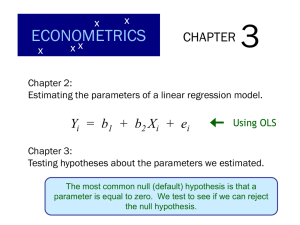

Chapter 2

Introductory Econometrics:

A Modern Approach, 4th ed.

By Jeffrey M. Wooldridge


2.1 Definition of the Simple Regression Model

The simple linear regression model, also called the two-variable (bivariate) linear regression model, is

y = β0 + β1 x + u    (2.1)

In this model we typically refer to y as the

Dependent Variable, or

Explained Variable, or

Response Variable, or

Regressand

In the simple linear regression of y on x, we typically refer to x as the

Independent Variable, or

Explanatory Variable, or

Control Variable, or

Regressor

The variable u, called the error term or disturbance (innovation) in the relationship, represents factors other than x that affect y. You can usefully think of u as standing for “unobserved”.

If the other factors in u are held fixed, so that Δu = 0, then x has a linear effect on y:

Δy = β1Δx if Δu = 0    (2.2)

β1 is the slope parameter. The intercept parameter β0 is sometimes called the constant term.

2.1 Definition of the Simple Regression Model (Example)

Soybean Yield and Fertilizer

yield = β0 + β1 fertilizer + u

A Simple Wage Equation

wage = β0 + β1 educ + u

A Simple Assumption

Because u and x are random variables, we need a concept grounded in probability.

Mathematically, the average value of u in the population is 0. That is,

E(u) = 0    (2.5)

As long as the intercept β0 is included in the equation, nothing is lost by assuming that the average value of u in the population is zero.

Zero Conditional Mean

We need to make a crucial assumption about how u and x are related.

If u and x are uncorrelated, then, as random variables, they are not linearly related.

The crucial assumption is that the average value of u does not depend on the value of x:

E(u|x) = E(u)    (2.6)

Combining (2.6) with E(u) = 0 gives the zero conditional mean assumption:

E(u|x) = 0

which implies

E(y|x) = β0 + β1x    (2.8)

Taking the expected value of (2.1) conditional on x and using E(u|x) = 0 gives

E(y|x) = β0 + β1x    (2.8)

Equation (2.8) is the population regression function (PRF): E(y|x) is a linear function of x (the systematic part of y).

The linearity means that a one-unit increase in x changes the expected value of y by the amount β1.

The error u is called the unsystematic part.

[Figure: E(y|x) as a linear function of x. A one-unit increase in x changes E(y|x) by the amount β1.]

2.2 Deriving the Ordinary Least Squares Estimates (OLS, OLSE)

The basic idea of regression is to estimate the population parameters from a sample.

Let {(xi, yi): i = 1, …, n} denote a random sample of size n from the population.

For each observation in this sample, it will be the case that

yi = β0 + β1xi + ui    (2.9)

Example: y is annual savings and x is annual income.

[Figure: E(savings = y | income = x), linear model. The population regression line E(y|x) = β0 + β1x is drawn with four sample points (x1, y1), …, (x4, y4) and their errors u1, …, u4.]

Deriving OLS Estimates

To derive the OLS estimates we need to realize that our main assumption, E(u|x) = E(u) = 0, also implies that

E(u) = 0    (2.10)

Cov(x,u) = E(xu) = 0    (2.11)

Why? Remember from basic probability that

Cov(X,Y) = E(XY) − E(X)E(Y)

so with E(u) = 0 we have Cov(x,u) = E(xu).

Deriving OLS continued

We can write our two restrictions just in terms of x, y, β0 and β1, since u = y − β0 − β1x:

E(y − β0 − β1x) = 0    (2.12), from (2.10)

E[x(y − β0 − β1x)] = 0    (2.13), from (2.11)

These are called moment restrictions.

Deriving OLS using M.O.M.

The method of moments approach to estimation imposes the population moment restrictions on the sample moments.

What does this mean? Recall that for E(X), the mean of a population distribution, the sample counterpart is the sample average:

$\frac{1}{n} \sum_{i=1}^{n} X_i$

More Derivation of OLS

We want to choose values of the parameters that ensure that the sample versions of our moment restrictions hold.

The sample versions are as follows:

$n^{-1} \sum_{i=1}^{n} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i) = 0$    (2.14)

$n^{-1} \sum_{i=1}^{n} x_i (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i) = 0$    (2.15)

Given the definition of a sample mean, and properties of summation, we can rewrite the first condition as follows:

$\bar{y} = \hat{\beta}_0 + \hat{\beta}_1 \bar{x}$    (2.16)

or

$\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$    (2.17)

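Substituting $\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$ into the second sample condition (and dropping the factor $n^{-1}$) gives

$\sum_{i=1}^{n} x_i \left( y_i - (\bar{y} - \hat{\beta}_1 \bar{x}) - \hat{\beta}_1 x_i \right) = 0$

$\sum_{i=1}^{n} x_i (y_i - \bar{y}) = \hat{\beta}_1 \sum_{i=1}^{n} x_i (x_i - \bar{x})$

$\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y}) = \hat{\beta}_1 \sum_{i=1}^{n} (x_i - \bar{x})^2$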

So the OLS estimated slope is

$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}$    (2.19)

provided that

$\sum_{i=1}^{n} (x_i - \bar{x})^2 > 0$    (2.18)
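The slope and intercept formulas, (2.19) and $\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$, can be sketched in a few lines of Python. This is only an illustration: the function name `ols_simple` and the five data points are made up for the example and are not taken from the textbook.

```python
def ols_simple(x, y):
    """Return (b0, b1): OLS intercept and slope of the regression of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Denominator of (2.19); condition (2.18) requires it to be positive.
    sst_x = sum((xi - x_bar) ** 2 for xi in x)
    if sst_x == 0:
        raise ValueError("x has no sample variation; the slope is undefined")
    # Numerator of (2.19).
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = s_xy / sst_x
    b0 = y_bar - b1 * x_bar  # intercept: y_bar minus slope times x_bar
    return b0, b1


# Made-up illustrative data (not from the textbook).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
b0, b1 = ols_simple(x, y)
print(f"intercept = {b0:.3f}, slope = {b1:.3f}")
```

The explicit check on the denominator mirrors condition (2.18): if every x value is identical, no slope can be computed.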

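More OLS

The estimates $\hat{\beta}_0$ and $\hat{\beta}_1$ are called the ordinary least squares (OLS) estimates. Given $\hat{\beta}_0$ and $\hat{\beta}_1$, the fitted value for y when x = x_i is

$\hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i$    (2.20)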

Intuitively, OLS fits a line through the sample points such that the sum of squared residuals is as small as possible, hence the term least squares.

The residual, û, is an estimate of the error term, u, and is the difference between the sample point and the fitted line (sample regression function; SRF):

$\hat{u}_i = y_i - \hat{y}_i = y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i$    (2.21)

[Figure: sample regression line, sample data points, and the associated estimated error terms (residuals).]

Alternate approach to derivation

Given the intuitive idea of fitting a line, we can set up a formal minimization problem.

That is, we want to choose our parameters such that we minimize the following:

$\sum_{i=1}^{n} \hat{u}_i^2 = \sum_{i=1}^{n} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i)^2$    (2.22)

Alternate approach, continued

If one uses calculus to solve the minimization problem for the two parameters, one obtains the following first order conditions (F.O.C.), which are the same as we obtained before, multiplied by n:

$\sum_{i=1}^{n} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i) = 0$

$\sum_{i=1}^{n} x_i (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i) = 0$
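As a rough numerical check of the minimization view, the sketch below computes the closed-form estimates, evaluates both first order conditions, and confirms that nudging either estimate raises the sum of squared residuals. The helper `ssr` and the data are hypothetical and serve only as an illustration.

```python
def ssr(b0, b1, x, y):
    """Sum of squared residuals for a candidate line y = b0 + b1*x."""
    return sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))


# Made-up illustrative data (not from the textbook).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

# Closed-form OLS estimates.
n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n
b1 = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
      / sum((xi - x_bar) ** 2 for xi in x))
b0 = y_bar - b1 * x_bar

# First order conditions evaluated at the OLS estimates (both should be ~0).
foc_0 = sum(yi - b0 - b1 * xi for xi, yi in zip(x, y))
foc_1 = sum(xi * (yi - b0 - b1 * xi) for xi, yi in zip(x, y))

# SSR at the OLS estimates versus SSR at nearby perturbed parameter values.
ssr_min = ssr(b0, b1, x, y)
perturbed = [ssr(b0 + d0, b1 + d1, x, y)
             for d0 in (-0.1, 0.0, 0.1)
             for d1 in (-0.1, 0.0, 0.1)
             if (d0, d1) != (0.0, 0.0)]

print(foc_0, foc_1, ssr_min, min(perturbed))
```

A grid of perturbations is not a proof of a global minimum, but because the objective is a convex quadratic in the two parameters, the first order conditions are sufficient here.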

OLS regression line

Sample regression function (SRF):

$\hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x$    (2.23)

$\hat{\beta}_1 = \Delta \hat{y} / \Delta x$    (2.24)

$\Delta \hat{y} = \hat{\beta}_1 \Delta x$    (2.25)

Summary of OLS slope estimate

The slope estimate is the sample covariance

between x and y divided by the sample variance

of x.

If x and y are positively (negatively) correlated,

the slope will be positive (negative)
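The covariance-over-variance reading of the slope can be checked directly. The sketch below uses the (n − 1) divisor for both the sample covariance and the sample variance, so the divisor cancels in the ratio; the data and variable names are made up for illustration.

```python
def slope_from_moments(x, y):
    """OLS slope computed as sample covariance(x, y) / sample variance(x)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    cov_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
    var_x = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
    return cov_xy / var_x


# Made-up illustrative data (not from the textbook).
x = [1, 2, 3, 4, 5]
y_up = [2, 4, 5, 4, 5]    # tends to rise with x
y_down = [5, 4, 5, 4, 2]  # tends to fall with x

slope_up = slope_from_moments(x, y_up)
slope_down = slope_from_moments(x, y_down)
print(slope_up, slope_down)
```

The sign of the slope follows the sign of the sample covariance, since the sample variance of x is positive whenever x varies.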


Example

Wage and education

n = 526

$\widehat{wage} = -0.90 + 0.54\,educ$    (2.27)

We indicate that equation (2.23) has been obtained by OLS by saying that we run the regression of

y on x    (2.28)

2.3 Properties of OLS on Any Sample of Data

(1) The sum, and therefore the sample average, of the OLS residuals is zero.

$\sum_{i=1}^{n} \hat{u}_i = 0$    (2.30)

(2) The sample covariance between the regressors and the OLS residuals is zero.

$\sum_{i=1}^{n} x_i \hat{u}_i = 0$    (2.31)

(3) The OLS regression line always goes through the mean $(\bar{x}, \bar{y})$ of the sample:

$\bar{y} = \hat{\beta}_0 + \hat{\beta}_1 \bar{x}$
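These algebraic properties hold in any sample, which makes them easy to verify numerically. The following sketch fits the line on made-up data and evaluates each property; all names and numbers are illustrative assumptions.

```python
# Made-up illustrative data (not from the textbook).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n
b1 = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
      / sum((xi - x_bar) ** 2 for xi in x))
b0 = y_bar - b1 * x_bar

# OLS residuals: observed value minus fitted value.
residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]

sum_resid = sum(residuals)                                 # property (2.30)
sum_x_resid = sum(xi * ui for xi, ui in zip(x, residuals)) # property (2.31)
fitted_at_mean = b0 + b1 * x_bar                           # should equal y_bar

print(sum_resid, sum_x_resid, fitted_at_mean, y_bar)
```

The first two sums are zero only up to floating-point rounding, so any comparison should use a small tolerance rather than exact equality.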

More terminology

We can think of each observation as being made up of an explained part and an unexplained part:

$y_i = \hat{y}_i + \hat{u}_i$    (2.32)

We then define the following:

$\sum_{i=1}^{n} (y_i - \bar{y})^2$ is the total sum of squares (SST)    (2.33)

$\sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2$ is the explained sum of squares (SSE)    (2.34)

$\sum_{i=1}^{n} \hat{u}_i^2$ is the residual sum of squares (SSR)    (2.35)

Then SST = SSE + SSR    (2.36)

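Proof that SST = SSE + SSR

$\sum (y_i - \bar{y})^2 = \sum \left[ (y_i - \hat{y}_i) + (\hat{y}_i - \bar{y}) \right]^2$

$= \sum \left[ \hat{u}_i + (\hat{y}_i - \bar{y}) \right]^2$

$= \sum \hat{u}_i^2 + 2 \sum \hat{u}_i (\hat{y}_i - \bar{y}) + \sum (\hat{y}_i - \bar{y})^2$

$= SSR + 2 \sum \hat{u}_i (\hat{y}_i - \bar{y}) + SSE$

Now, (2.36) holds if we show that

$\sum \hat{u}_i (\hat{y}_i - \bar{y}) = 0$    (2.37)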

Goodness-of-Fit

How do we think about how well our sample regression line fits our sample data?

We can compute the fraction of the total sum of squares (SST) that is explained by the model, and call this the R-squared of the regression:

R² = SSE/SST = 1 − SSR/SST    (2.38)
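The decomposition SST = SSE + SSR and both forms of R-squared in (2.38) can be sketched as follows. The data are made up, and the variable names simply mirror the slide's notation.

```python
# Made-up illustrative data (not from the textbook).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n
b1 = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
      / sum((xi - x_bar) ** 2 for xi in x))
b0 = y_bar - b1 * x_bar

fitted = [b0 + b1 * xi for xi in x]
residuals = [yi - fi for yi, fi in zip(y, fitted)]

sst = sum((yi - y_bar) ** 2 for yi in y)       # total sum of squares
sse = sum((fi - y_bar) ** 2 for fi in fitted)  # explained sum of squares
ssr = sum(ui ** 2 for ui in residuals)         # residual sum of squares

r2_explained = sse / sst      # R^2 = SSE/SST
r2_residual = 1 - ssr / sst   # R^2 = 1 - SSR/SST

print(sst, sse, ssr, r2_explained, r2_residual)
```

Both expressions for R-squared agree because SST = SSE + SSR, which relies on the regression including an intercept.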

Example 2.8

In the CEO salary regression, the estimated equation is

$\widehat{salary} = 963.191 + 18.501\,roe$    (2.39)

n = 209, R² = 0.0132

where salary is measured in thousands of dollars.

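Units of Measurement and Functional Form

Define salardol = 1000 · salary, where salardol is salary in dollars. The estimated equation becomes

$\widehat{salardol} = 963{,}191 + 18{,}501\,roe$    (2.40)

Both the intercept and the slope are multiplied by 1000. For comparison, the equation with salary in thousands of dollars is

$\widehat{salary} = 963.191 + 18.501\,roe$    (2.39)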

Units of Measurement and Functional Form (cont)

Equation (2.39) can also be written as

$\widehat{salary} = 963.191 + 1850.1\,roedec$    (2.41)

where roedec is the decimal equivalent of roe:

roedec = 0.01 · roe
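The effect of changing units can be reproduced on any data set. The sketch below uses made-up numbers (not the CEO salary data): multiplying y by 1000 multiplies both estimates by 1000, while multiplying x by 0.01 multiplies the slope by 100 and leaves the intercept unchanged. The helper name `ols_simple` is an assumption for this example.

```python
def ols_simple(x, y):
    """Return (b0, b1): OLS intercept and slope of the regression of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    b1 = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
          / sum((xi - x_bar) ** 2 for xi in x))
    return y_bar - b1 * x_bar, b1


# Made-up data: x in percent, y in thousands of dollars (illustrative only).
x_pct = [10, 15, 20, 25, 30]
y_thousands = [900, 1100, 1000, 1300, 1200]

b0, b1 = ols_simple(x_pct, y_thousands)

# Rescale the dependent variable: thousands of dollars -> dollars.
y_dollars = [1000 * yi for yi in y_thousands]
b0_dol, b1_dol = ols_simple(x_pct, y_dollars)

# Rescale the independent variable: percent -> decimal.
x_dec = [0.01 * xi for xi in x_pct]
b0_dec, b1_dec = ols_simple(x_dec, y_thousands)

print(b0, b1)          # original units
print(b0_dol, b1_dol)  # both multiplied by 1000
print(b0_dec, b1_dec)  # slope multiplied by 100, intercept unchanged
```

In each case the fitted values describe the same line; only the units in which the coefficients are expressed change.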

Incorporating Nonlinearities in Simple Regression

Wage-education example

$\widehat{\log(wage)} = \hat{\beta}_0 + \hat{\beta}_1\,educ$    (2.42)

$\%\Delta wage \approx (100 \cdot \beta_1)\,\Delta educ$    (2.43)
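To see how the approximation in (2.43) is used, the sketch below takes a hypothetical slope of 0.08 (an assumed value for illustration, not an estimate from the slides) and compares the approximate percentage change with the exact change implied by the log model, 100·(exp(β1·Δeduc) − 1). The exact formula is a standard property of logarithms rather than something stated on the slide.

```python
import math

# Hypothetical slope from a log(wage) on educ regression (assumed value).
b1_hat = 0.08
delta_educ = 1  # one additional year of education

# Approximation (2.43): %change in wage ~ (100 * b1) * change in educ.
approx_pct = 100 * b1_hat * delta_educ

# Exact percentage change implied by the log-level model.
exact_pct = 100 * (math.exp(b1_hat * delta_educ) - 1)

print(f"approximate: {approx_pct:.2f}%  exact: {exact_pct:.2f}%")
```

The two numbers are close for small slopes, and the gap widens as the coefficient or the change in educ grows.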


2.5 Expected Values and Variances of the OLS Estimators

Unbiasedness of OLS

Assumption SLR.1: the population model is linear in parameters:

y = β0 + β1x + u    (2.47)

Assumption SLR.2: we can use a random sample of size n, {(xi, yi): i = 1, 2, …, n}, from the population model. Thus we can write the sample model

yi = β0 + β1xi + ui    (2.48)

Assumption SLR.3: (Sample Variation in the Explanatory Variable)

The sample outcomes on x, namely {xi, i = 1, …, n}, are not all the same value.

Assumption SLR.4: (Zero Conditional Mean)

The error u has an expected value of zero given any value of the explanatory variable:

E(u|x) = 0

Unbiasedness of OLS (cont)

In order to think about unbiasedness, we need to rewrite our estimator in terms of the population parameter.

Start with a simple rewrite of the formula as

$\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2} = \frac{\sum_{i=1}^{n} x_i (y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2} = \frac{\sum_{i=1}^{n} (x_i - \bar{x}) y_i}{\sum_{i=1}^{n} (x_i - \bar{x})^2}$    (2.49)

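Unbiasedness of OLS (cont)

From the numerator of $\hat{\beta}_1$:

$\sum (x_i - \bar{x}) y_i = \sum (x_i - \bar{x})(\beta_0 + \beta_1 x_i + u_i)$

$= \sum (x_i - \bar{x}) \beta_0 + \sum (x_i - \bar{x}) \beta_1 x_i + \sum (x_i - \bar{x}) u_i$

$= \beta_0 \sum (x_i - \bar{x}) + \beta_1 \sum (x_i - \bar{x}) x_i + \sum (x_i - \bar{x}) u_i$    (2.51)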

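Unbiasedness of OLS (cont)

$\sum (x_i - \bar{x}) = 0$, and $\sum (x_i - \bar{x}) x_i = \sum (x_i - \bar{x})^2 = SST_x$

so the numerator can be rewritten as $\beta_1 SST_x + \sum (x_i - \bar{x}) u_i$, and thus

$\hat{\beta}_1 = \beta_1 + \frac{\sum (x_i - \bar{x}) u_i}{SST_x} = \beta_1 + (1 / SST_x) \sum d_i u_i$    (2.52)

where $d_i = x_i - \bar{x}$.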

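Unbiasedness of OLS (cont)

Let $d_i = x_i - \bar{x}$, so that $\hat{\beta}_1 = \beta_1 + (1 / SST_x) \sum d_i u_i$. Then, conditional on the sample values of x,

$E(\hat{\beta}_1) = \beta_1 + (1 / SST_x) \sum d_i E(u_i) = \beta_1$

Written out in full:

$E(\hat{\beta}_1) = E(\beta_1) + E\left( \frac{\sum_{i=1}^{n} (x_i - \bar{x}) u_i}{\sum_{i=1}^{n} (x_i - \bar{x})^2} \right) = \beta_1 + \frac{\sum_{i=1}^{n} (x_i - \bar{x}) E(u_i)}{\sum_{i=1}^{n} (x_i - \bar{x})^2} = \beta_1$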

Unbiasedness Summary

The OLS estimators of β1 and β0 are unbiased.

The proof of unbiasedness depends on our four assumptions. If any assumption fails, then OLS is not necessarily unbiased.

Remember that unbiasedness is a description of the estimator. In a given sample our estimate may be “near” or “far” from the true parameter.
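Unbiasedness is a statement about repeated sampling, so a small simulation can make it concrete. The sketch below assumes a population with β0 = 1, β1 = 2 and normally distributed errors with standard deviation 1; these values, the fixed x design, and the helper `ols_slope` are all assumptions chosen for illustration.

```python
import random


def ols_slope(x, y):
    """OLS slope of the regression of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    return (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
            / sum((xi - x_bar) ** 2 for xi in x))


random.seed(0)  # fixed seed so the run is reproducible

# Assumed "true" population parameters (hypothetical values).
beta0, beta1, sigma = 1.0, 2.0, 1.0
x = [i / 10 for i in range(50)]  # x values held fixed across samples
reps = 2000

slopes = []
for _ in range(reps):
    # Draw errors with mean zero that do not depend on x (SLR.4 holds).
    y = [beta0 + beta1 * xi + random.gauss(0, sigma) for xi in x]
    slopes.append(ols_slope(x, y))

mean_slope = sum(slopes) / reps
print(f"average slope over {reps} samples: {mean_slope:.4f}")
print(f"smallest: {min(slopes):.4f}, largest: {max(slopes):.4f}")
```

Individual estimates land on either side of the true slope, while their average across samples sits close to it, which is what unbiasedness describes.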

Variance of the OLS Estimators

We now know that the sampling distribution of our estimator is centered around the true parameter.

We also want to know how spread out this distribution is.

It is much easier to think about this variance under an additional assumption:

Assumption SLR.5: (Homoskedasticity)

Var(u|x) = σ²

Variance of OLS (cont)

Var(u|x) = E(u²|x) − [E(u|x)]²

E(u|x) = 0, so σ² = E(u²|x) = E(u²) = Var(u)

Thus σ² is also the unconditional variance, called the error variance.

σ, the square root of the error variance, is called the standard deviation of the error.

E(y|x) = β0 + β1x    (2.55)

Var(y|x) = σ²    (2.56)

Homoskedastic Case

[Figure: conditional densities f(y|x) at x1 and x2, centered on the line E(y|x) = β0 + β1x, with the same spread at each value of x.]

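Heteroskedastic Case

[Figure: conditional densities f(y|x) at x1, x2 and x3, centered on the line E(y|x) = β0 + β1x, with a spread that differs across values of x.]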

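Variance of OLS (cont)

$Var(\hat{\beta}_1) = Var\left( \beta_1 + (1 / SST_x) \sum d_i u_i \right)$

$= (1 / SST_x)^2 \, Var\left( \sum d_i u_i \right)$

$= (1 / SST_x)^2 \sum d_i^2 \, Var(u_i)$

$= (1 / SST_x)^2 \sum d_i^2 \sigma^2$

$= \sigma^2 (1 / SST_x)^2 \sum d_i^2$

$= \sigma^2 (1 / SST_x)^2 \, SST_x = \sigma^2 / SST_x$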

Variance of OLS Summary

The larger the error variance, σ², the larger the variance of the slope estimate.

The larger the variability in the xi, the smaller the variance of the slope estimate.

As a result, a larger sample size should decrease the variance of the slope estimate.

The problem is that the error variance is unknown.
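Each point in this summary follows from Var(β̂1) = σ²/SSTx, and a few lines of arithmetic make the comparisons explicit. The x designs and error variances below are made-up values, and `var_slope` is a hypothetical helper name.

```python
def var_slope(x, sigma2):
    """Variance of the OLS slope under homoskedasticity: sigma^2 / SST_x."""
    x_bar = sum(x) / len(x)
    sst_x = sum((xi - x_bar) ** 2 for xi in x)
    return sigma2 / sst_x


# Made-up designs for illustration.
x_narrow = [4, 5, 6]      # little variation in x (SST_x = 2)
x_wide = [0, 5, 10]       # more variation in x (SST_x = 50)
x_more = x_narrow * 2     # same spread, twice as many observations

v_base = var_slope(x_narrow, sigma2=1.0)
v_noisy = var_slope(x_narrow, sigma2=4.0)   # larger error variance
v_wide = var_slope(x_wide, sigma2=1.0)      # more spread in x
v_more = var_slope(x_more, sigma2=1.0)      # larger sample

print(v_base, v_noisy, v_wide, v_more)
```

Adding observations increases SSTx as long as the new x values are not all equal to the sample mean, which is why a larger sample tends to reduce the variance.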

Estimating the Error Variance

We don’t know what the error variance, σ², is, because we don’t observe the errors, ui.

What we observe are the residuals, ûi.

We can use the residuals to form an estimate of the error variance.

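Error Variance Estimate (cont)

$\hat{u}_i = y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i$

$= (\beta_0 + \beta_1 x_i + u_i) - \hat{\beta}_0 - \hat{\beta}_1 x_i$

$= u_i - (\hat{\beta}_0 - \beta_0) - (\hat{\beta}_1 - \beta_1) x_i$    (2.59)

F.O.C.:

$\sum_{i=1}^{n} \hat{u}_i = 0, \quad \sum_{i=1}^{n} x_i \hat{u}_i = 0$    (2.60)

Then, an unbiased estimator of $\sigma^2$ is

$\hat{\sigma}^2 = \frac{1}{n - 2} \sum_{i=1}^{n} \hat{u}_i^2 = SSR / (n - 2)$    (2.61)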

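Error Variance Estimate (cont)

$\hat{\sigma} = \sqrt{\hat{\sigma}^2}$    (2.62)

is the standard error of the regression.

Recall that $sd(\hat{\beta}_1) = \sigma / \sqrt{SST_x}$. If we substitute $\hat{\sigma}$ for $\sigma$, then we have the standard error of $\hat{\beta}_1$:

$se(\hat{\beta}_1) = \hat{\sigma} / \sqrt{SST_x} = \hat{\sigma} / \left( \sum_{i=1}^{n} (x_i - \bar{x})^2 \right)^{1/2}$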
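Putting the pieces together, the sketch below computes the error variance estimate (2.61), the standard error of the regression (2.62), and the standard error of the slope on made-up data. Variable names are chosen to mirror the slide notation and are otherwise arbitrary.

```python
import math

# Made-up illustrative data (not from the textbook).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n
sst_x = sum((xi - x_bar) ** 2 for xi in x)
b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sst_x
b0 = y_bar - b1 * x_bar

# Residuals and residual sum of squares.
residuals = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]
ssr = sum(ui ** 2 for ui in residuals)

# Unbiased estimator of the error variance: divide by n - 2 because two
# parameters (intercept and slope) were estimated.
sigma2_hat = ssr / (n - 2)
sigma_hat = math.sqrt(sigma2_hat)       # standard error of the regression
se_b1 = sigma_hat / math.sqrt(sst_x)    # standard error of the slope

print(sigma2_hat, sigma_hat, se_b1)
```

The divisor n − 2 reflects the two restrictions in (2.60) that the residuals must satisfy, which leave only n − 2 degrees of freedom.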

EX 2.2

$\widehat{GPA} = \hat{\beta}_0 + \hat{\beta}_1\,ACT$