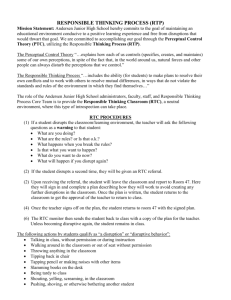

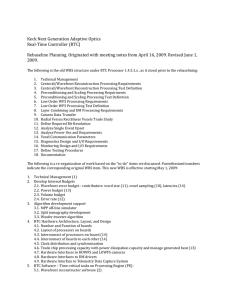

NGAO Real Time Controller Processing Engine Design Document
