Learning Language from Ambiguous Perceptual Context David L. Chen

Learning Language from

Ambiguous Perceptual Context

David L. Chen

Supervisor: Professor Raymond J. Mooney

Ph.D. Dissertation Defense

January 25, 2012

Semantics of Language

• The meaning of words, phrases, etc.

• Learning the semantics of language is one of the ultimate goals in natural language processing

• The meanings of many words are grounded in our perception of the physical world: red, ball, cup, run, hit, fall, etc. [Harnad, 1990]

• Computer representations should also be grounded in real-world perception

1

Grounding Language

Spanish goalkeeper Casillas blocks the ball

Block(Casillas)

3

Natural Language and Meaning Representation

Natural Language (NL): Spanish goalkeeper Casillas blocks the ball

Meaning Representation Language (MRL): Block(Casillas)

NL: A language that has evolved naturally, such as English, German, French, Chinese, etc.

MRL: Formal languages such as logic or any computer-executable code

4

Semantic Parsing and Surface Realization

NL: Spanish goalkeeper Casillas blocks the ball

MRL: Block(Casillas)

Semantic Parsing (NL → MRL): maps a natural-language sentence to a complete, detailed semantic representation

Surface Realization (MRL → NL): generates a natural-language sentence from a meaning representation

5

Applications

• Natural language interface

– Issue commands and queries in natural language

– Computer responds with answer in natural

language

• Knowledge acquisition

• Computer assisted tasks

6

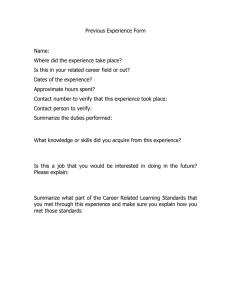

Traditional Learning Approach

Manually Annotated Training Corpora (NL/MRL pairs) → Semantic Parser Learner → Semantic Parser

NL → Semantic Parser → MRL

7

Example of Annotated Training Corpus

| Natural Language (NL) | Meaning Representation Language (MRL) |
|---|---|
| Alice passes the ball to Bob | Pass(Alice, Bob) |
| Bob turns the ball over to John | Turnover(Bob, John) |
| John passes to Fred | Pass(John, Fred) |
| Fred shoots for the goal | Kick(Fred) |
| Paul blocks the ball | Block(Paul) |
| Paul kicks off to Nancy | Pass(Paul, Nancy) |

8

Learning Language from

Perceptual Context

• Constructing annotated corpora for language learning is difficult

• Children acquire language through exposure to linguistic input in the context of a rich, relevant, perceptual environment

• Ideally, a computer system can learn language in the same manner

9

Navigation Example

Scenario 1: Alice tells Bob 在餐廳的地方右轉 ("Turn right at the restaurant")

Scenario 2: Alice tells Bob 在醫院的地方右轉 ("Turn right at the hospital")

• Words shared by both scenarios: 在 … 的地方右轉 ("at … turn right"). In both scenarios the observed action is the same: make a right turn.

• Word that appears only in Scenario 1: 餐廳 ("restaurant")

• Word that appears only in Scenario 2: 醫院 ("hospital")

17

Thesis Contributions

• Learning from limited ambiguity

– Sportscasting task [Chen & Mooney, ICML, 2008; Chen, Kim & Mooney, JAIR, 2010]

– Collected English corpus of 2000 sentences that has been adopted by other researchers [Liang et al., 2009; Bordes, Usunier, & Weston, 2010; Angeli et al., 2010; Hajishirzi, Hockenmaier, Mueller, & Amir, 2011]

• Learning from ambiguously supervised relational data

– Navigation task [Chen & Mooney, AAAI, 2011]

– Organized and semantically annotated corpus from MacMahon et al. (2006)

18

Overview

• Background

• Learning from limited ambiguity

• Learning from ambiguous relational data

• Future directions

• Conclusions

19

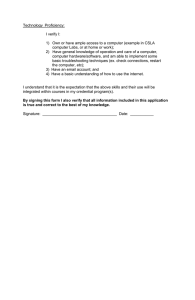

Semantic Parser Learners

• Learn a function from NL to MR

NL: “Purple3 passes the ball to Purple5”

Semantic Parsing (NL → MR)

Surface Realization (MR → NL)

MR: Pass ( Purple3, Purple5 )

• We experiment with two semantic parser learners

– WASP [Wong & Mooney, 2006; 2007]

– KRISP [Kate & Mooney, 2006]

20

WASP: Word Alignment-based Semantic Parsing

• Uses statistical machine translation techniques

– Synchronous context-free grammars (SCFG) [Wu, 1997; Melamed, 2004; Chiang, 2005]

– Word alignments [Brown et al., 1993; Och & Ney, 2003]

• Capable of both semantic parsing and surface realization

21

KRISP: Kernel-based Robust Interpretation by

Semantic Parsing

• Productions of the MR language are treated like semantic concepts

• An SVM classifier is trained for each production with a string subsequence kernel

• These classifiers are used to compositionally build MRs of the sentences

• More resistant to noisy supervision but incapable of language generation

22

Tractable Challenge Problem:

Learning to Be a Sportscaster

• Goal: Learn from realistic data of natural language used in a representative context while avoiding difficult issues in computer perception (i.e. speech and vision).

• Solution: Learn from textually annotated traces of activity in a simulated environment.

• Example: Traces of games in the RoboCup simulator paired with textual sportscaster commentary.

24

Sample Human Sportscast in Korean

25

Robocup Sportscaster Trace

Natural Language Commentary:

– Purple goalie turns the ball over to Pink8

– Purple team is very sloppy today

– Pink8 passes the ball to Pink11

– Pink11 looks around for a teammate

– Pink11 makes a long pass to Pink8

– Pink8 passes back to Pink11

Meaning Representation (extracted events):

– badPass ( Purple1, Pink8 )

– turnover ( Purple1, Pink8 )

– kick ( Pink8 )

– pass ( Pink8, Pink11 )

– kick ( Pink11 )

– kick ( Pink11 )

– ballstopped

– kick ( Pink11 )

– pass ( Pink11, Pink8 )

– kick ( Pink8 )

– pass ( Pink8, Pink11 )

26

Robocup Sportscaster Trace

Natural Language Commentary:

– Purple goalie turns the ball over to Pink8

– Purple team is very sloppy today

– Pink8 passes the ball to Pink11

– Pink11 looks around for a teammate

– Pink11 makes a long pass to Pink8

– Pink8 passes back to Pink11

Meaning Representation (the same events with predicate and constant names replaced by opaque symbols):

– P6 ( C1, C19 )

– P5 ( C1, C19 )

– P1 ( C19 )

– P2 ( C19, C22 )

– P1 ( C22 )

– P1 ( C22 )

– P0

– P1 ( C22 )

– P2 ( C22, C19 )

– P1 ( C19 )

– P2 ( C19, C22 )

27

Robocup Data

• Collected human textual commentary for the 4

Robocup championship games from 2001-2004.

– Avg # events/game = 2,613

– Avg # English sentences/game = 509

– Avg # Korean sentences/game = 499

• Each sentence matched to all events within previous

5 seconds.

– Avg # MRs/sentence = 2.5 (min 1, max 12)

• Manually annotated with correct matchings of

sentences to MRs (for evaluation purposes only).
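The windowing rule above (each sentence is paired with every event from the preceding 5 seconds) can be sketched as follows. This is a minimal illustration, not the original data pipeline: the `Event` type, the timestamps, and the inclusive window boundaries are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    time: float  # seconds into the game (hypothetical timestamp field)
    mr: str      # meaning representation, e.g. "pass(Pink8, Pink11)"


def candidate_mrs(sentence_time: float, events: List[Event], window: float = 5.0) -> List[str]:
    """Return the MRs of all events in the `window` seconds before the sentence.

    Both ends of the window are treated as inclusive (an assumption).
    """
    return [e.mr for e in events
            if sentence_time - window <= e.time <= sentence_time]


# Hypothetical timestamps attached to events from the trace shown earlier.
events = [
    Event(10.0, "badPass(Purple1, Pink8)"),
    Event(11.0, "turnover(Purple1, Pink8)"),
    Event(14.5, "kick(Pink8)"),
    Event(17.0, "pass(Pink8, Pink11)"),
]

# A sentence typed at t = 15.0 is ambiguously paired with three candidate MRs.
ambiguous_pairing = candidate_mrs(15.0, events)
```

The learner never sees which candidate is correct; the manually annotated matchings are used only for evaluation.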

28

Machine Sportscast in English

29

Navigation Task

• Learn to interpret and follow free-form

navigation instructions

– e.g. Go down this hall and make a right when you see

an elevator to your left

• Assume no prior linguistic knowledge

• Learn by observing how humans follow

instructions

• Use virtual worlds and instructor/follower data

from MacMahon et al. (2006)

31

Environment

[Figure: map of the virtual environment. Legend: H – Hat Rack, L – Lamp, E – Easel, S – Sofa, B – Barstool, C – Chair]

33

Sample Instructions

• Take your first left. Go all the way down until you hit a dead end.

• Go towards the coat hanger and turn left at it. Go straight down the hallway and the dead end is position 4.

• Walk to the hat rack. Turn left. The carpet should have green octagons. Go to the end of this alley. This is p-4.

• Walk forward once. Turn left. Walk forward twice.

[Figure: map showing the route from the start (position 3) to the end (position 4), with a hat rack (H) marked]

Observed primitive actions: Forward, Left, Forward, Forward

34

Formal Problem Definition

Given:

{ (e1, a1, w1), (e2, a2, w2), … , (en, an, wn) }

ei – A natural language instruction

ai – An observed action sequence

wi – A world state

Goal:

Build a system that produces the correct aj

given a previously unseen (ej, wj).

35

Challenges

• Many different instructions for the same task

– Describe different actions

– Use different parameters

– Different ways to describe the same parameters

• Hidden plans

– Needs to infer the plans from observed actions

• Exponential number of possible plans

36

Learning system for parsing navigation instructions

Training:

Observation (Instruction, World State, Action Trace) → Navigation Plan Constructor → Plan Refinement → Semantic Parser Learner → Semantic Parser

Testing:

Instruction + World State → Semantic Parser → Execution Module (MARCO) → Action Trace

37

Constructing Navigation Plans

Instruction: Walk to the couch and turn left

Action Trace: Forward, Left

Basic plan: Directly model the observed actions

Travel ( steps: 1 ), Turn ( LEFT )

Landmarks plan: Add interleaving verification steps

Travel ( steps: 1 ), Verify ( at: SOFA ), Turn ( LEFT ), Verify ( front: BLUE HALL )

38
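A basic plan can be derived mechanically from the action trace. The sketch below is one plausible reading, not the original constructor: it assumes consecutive Forward actions are merged into a single Travel step with a step count, and the tuple representation is hypothetical.

```python
from typing import List, Tuple, Union

Step = Tuple[str, Union[int, str]]


def basic_plan(actions: List[str]) -> List[Step]:
    """Collapse primitive actions into Travel(steps: n) and Turn(direction) steps."""
    plan: List[Step] = []
    for act in actions:
        if act == "Forward":
            if plan and plan[-1][0] == "Travel":
                # Extend the current run of forward moves (assumed merging rule).
                plan[-1] = ("Travel", plan[-1][1] + 1)
            else:
                plan.append(("Travel", 1))
        elif act in ("Left", "Right"):
            plan.append(("Turn", act.upper()))
        else:
            raise ValueError(f"unknown primitive action: {act}")
    return plan


# The trace from the slide: Forward, Left
slide_plan = basic_plan(["Forward", "Left"])
```

The landmarks plan would additionally need the world state to insert Verify steps, which this sketch does not model.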

Plan Refinement

There are generally many ways to describe the same route. The plan refinement step aims to find the correct plan that corresponds to the instruction.

Landmarks plan:

Turn ( LEFT ), Verify ( front: BLUE HALL, front: SOFA ), Travel ( steps: 2 ), Verify ( at: SOFA )

Different instructions for this same plan, each mentioning a different part of it:

• Turn and walk to the couch

• Face the blue hall and walk 2 steps

• Turn left. Walk forward twice.

42

Plan Refinement

• Remove extraneous details in the plans

• First learn the meaning of words and short

phrases

• Use the learned lexicon to remove parts of the

plans unrelated to the instructions

43

Subgraph Generation Online Lexicon Learning

(SGOLL)

1. As an example comes in, break down the sentence and the graph into n-grams and connected subgraphs

Turn and walk to the couch

Turn ( LEFT ), Verify ( front: BLUE HALL, front: SOFA ), Travel ( steps: 2 ), Verify ( at: SOFA )

44

Subgraph Generation Online Lexicon Learning

(SGOLL)

Turn and walk to the couch

Turn ( LEFT ), Verify ( front: BLUE HALL, front: SOFA ), Travel ( steps: 2 ), Verify ( at: SOFA )

n-grams:

1-gram: turn, and, walk, to, the, couch

2-gram: turn and, and walk, walk to, to the, the couch

3-gram: turn and walk, and walk to, walk to the, to the couch

…

Connected subgraphs:

Size 1: Turn, Verify, LEFT, …

Size 2: Turn–LEFT, Turn–Verify, …

45
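The decomposition in step 1 can be sketched in a few lines. This is an illustrative approximation rather than the SGOLL implementation: the adjacency-dictionary graph encoding, the `max_n` and `max_size` limits, and the toy three-node graph are all assumptions.

```python
from typing import Dict, FrozenSet, List, Set


def ngrams(sentence: str, max_n: int = 3) -> Dict[int, List[str]]:
    """All contiguous word n-grams of the sentence for n = 1..max_n."""
    words = sentence.lower().split()
    return {n: [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]
            for n in range(1, max_n + 1)}


def connected_subgraphs(adj: Dict[str, List[str]], max_size: int) -> Set[FrozenSet[str]]:
    """Node sets of all connected subgraphs with at most `max_size` nodes.

    `adj` is an undirected adjacency dictionary. Subgraphs are grown one
    neighbouring node at a time, so every result is connected by construction.
    """
    found: Set[FrozenSet[str]] = set()
    frontier = {frozenset([v]) for v in adj}
    while frontier:
        found |= frontier
        grown = set()
        for sub in frontier:
            if len(sub) < max_size:
                for v in sub:
                    for u in adj[v]:
                        if u not in sub:
                            grown.add(sub | {u})
        frontier = grown - found
    return found


grams = ngrams("Turn and walk to the couch")

# Toy fragment of a plan graph (hypothetical encoding): Turn is linked to LEFT and Verify.
toy_graph = {"Turn": ["LEFT", "Verify"], "LEFT": ["Turn"], "Verify": ["Turn"]}
subgraphs = connected_subgraphs(toy_graph, max_size=2)
```

Representing each subgraph as a `frozenset` makes it hashable, which is convenient for the count tables used in the next step.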


Subgraph Generation Online Lexicon Learning (SGOLL)

2. Increase the counts and co-occurrence count of each n-gram, connected-subgraph pair. Hash the connected-subgraphs for efficient update.

Co-occurrence counts for the n-gram "turn", before and after processing the current example:

| Connected subgraph | Count before | Count after |
|---|---|---|
| Turn | 48 | 49 |
| Turn ( LEFT ) | 22 | 23 |
| Turn ( RIGHT ) | 26 | 26 |
| … | | |

46
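The count update in step 2 maps naturally onto hash tables. The following is a minimal sketch using Python's `collections.Counter`, assuming each connected subgraph has already been reduced to a hashable canonical string such as `"Turn(LEFT)"`; the function name and that encoding are illustrative, not taken from the original system.

```python
from collections import Counter
from typing import Iterable


def update_counts(ngram_count: Counter, subgraph_count: Counter, cooc: Counter,
                  ngrams: Iterable[str], subgraphs: Iterable[str]) -> None:
    """Online update for one training example.

    Each distinct n-gram and subgraph is counted once per example, and every
    (n-gram, subgraph) pair seen together has its co-occurrence count incremented.
    """
    ngram_set, subgraph_set = set(ngrams), set(subgraphs)
    for w in ngram_set:
        ngram_count[w] += 1
    for g in subgraph_set:
        subgraph_count[g] += 1
    for w in ngram_set:
        for g in subgraph_set:
            cooc[(w, g)] += 1


# Start from the co-occurrence counts shown on the slide for the n-gram "turn".
ngram_count, subgraph_count = Counter(), Counter()
cooc = Counter({("turn", "Turn"): 48, ("turn", "Turn(LEFT)"): 22, ("turn", "Turn(RIGHT)"): 26})

# New example: the sentence contains "turn", the plan contains Turn and Turn(LEFT).
update_counts(ngram_count, subgraph_count, cooc, ["turn"], ["Turn", "Turn(LEFT)"])
```

Because the tables are keyed by hashed values, each example can be processed as it arrives without revisiting earlier data.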

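Subgraph Generation Online Lexicon Learning (SGOLL)

3. Rank the entries by the scoring function

Ranked entries for the n-gram "turn":

| Connected subgraph | Score |
|---|---|
| Turn | 0.56 |
| Turn ( RIGHT ) | 0.31 |
| Turn ( LEFT ) | 0.27 |
| … | |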

47
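The slides do not spell out the scoring function. One form consistent with the count tables is the difference between how often a subgraph g appears with an n-gram w and how often it appears without it, p(g|w) − p(g|¬w). The sketch below assumes that form; the counts in the example are hypothetical and are not the ones behind the scores on the slide.

```python
def score(cooc_wg: int, count_w: int, count_g: int, total: int) -> float:
    """Assumed scoring function: p(g | w) - p(g | not w).

    cooc_wg : examples containing both n-gram w and subgraph g
    count_w : examples containing w
    count_g : examples containing g
    total   : total number of examples (must exceed count_w, and count_w > 0)
    """
    p_g_given_w = cooc_wg / count_w
    p_g_given_not_w = (count_g - cooc_wg) / (total - count_w)
    return p_g_given_w - p_g_given_not_w


# Hypothetical counts: w seen 50 times, g seen 60 times, together 40 times, 250 examples.
example_score = score(cooc_wg=40, count_w=50, count_g=60, total=250)
```

A subgraph that appears in almost every example regardless of the words used gets a score near zero under this form, which keeps generic plan fragments from dominating the lexicon.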

Refining Plans Using A Lexicon

Instruction: Walk to the couch and turn left

Landmarks plan: Travel ( steps: 1 ), Verify ( at: SOFA ), Turn ( LEFT ), Verify ( front: BLUE HALL )

1. Look for the highest-scoring lexical entry that matches both the words in the instruction and the nodes in the graph.

2. Lexicon entry: "turn left" → Turn ( LEFT ) (score: 0.84). Remaining words: "Walk to the couch and"

3. Lexicon entry: "walk to the couch" → Travel ( ), Verify ( at: SOFA ) (score: 0.75). Remaining words: "and"

4. Lexicon exhausted

5. Remove all unmarked nodes

Refined plan for "Walk to the couch and turn left": Travel ( ), Verify ( at: SOFA ), Turn ( LEFT )

53
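The greedy refinement walked through above can be sketched as a short loop. This is a simplified illustration under stated assumptions: plan nodes are plain strings in a set (the `Verify@1`/`Verify@2` labels are hypothetical ways to tell the two Verify nodes apart), lexicon entries are `(phrase, nodes, score)` tuples, and matching only checks that an entry's nodes are present in the plan rather than checking graph structure.

```python
from typing import List, Optional, Set, Tuple

LexEntry = Tuple[str, Set[str], float]


def find_phrase(words: List[Optional[str]], phrase: List[str]) -> Optional[int]:
    """Index of the first contiguous occurrence of `phrase` in `words`, else None."""
    n = len(phrase)
    for i in range(len(words) - n + 1):
        if words[i:i + n] == phrase:
            return i
    return None


def refine_plan(instruction: str, plan_nodes: Set[str], lexicon: List[LexEntry]) -> Set[str]:
    """Keep only plan nodes covered by the best-scoring matching lexicon entries."""
    words: List[Optional[str]] = instruction.lower().split()
    marked: Set[str] = set()
    while True:
        matches = [(s, p, n) for p, n, s in lexicon
                   if p.split() and n <= plan_nodes
                   and find_phrase(words, p.split()) is not None]
        if not matches:          # lexicon exhausted
            break
        _, phrase, nodes = max(matches, key=lambda m: m[0])
        marked |= nodes          # mark the matched nodes
        i = find_phrase(words, phrase.split())
        # Replace the consumed words with a gap so later phrases cannot span it.
        words[i:i + len(phrase.split())] = [None]
    return marked                # unmarked nodes are dropped


plan = {"Travel", "steps: 1", "Verify@1", "at: SOFA",
        "Turn", "LEFT", "Verify@2", "front: BLUE HALL"}
lexicon = [
    ("turn left", {"Turn", "LEFT"}, 0.84),
    ("walk to the couch", {"Travel", "Verify@1", "at: SOFA"}, 0.75),
]
refined = refine_plan("Walk to the couch and turn left", plan, lexicon)
```

On the slide's example this keeps the Travel, Verify ( at: SOFA ) and Turn ( LEFT ) nodes and drops the step count and the blue-hall verification, which the instruction never mentions.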

Evaluation Data Statistics

• 3 maps, 6 instructors, 1-15 followers/direction

• Hand-segmented into single sentence steps

Paragraph

Take the wood path towards the easel. At the easel, go left and then take a right

on the the blue path at the corner. Follow the blue path towards the chair and at

the chair, take a right towards the stool. When you reach the stool, you are at 7.

Turn, Forward, Turn left, Forward, Turn right, Forward x 3, Turn right, Forward

Single sentence

Take the wood path towards the easel.

Turn

At the easel, go left and then take a right on the the blue path at the corner.

Forward, Turn left, Forward, Turn right

…

54

Evaluation Data Statistics

| | Paragraph | Single-Sentence |
|---|---|---|
| # Instructions | 706 | 3236 |
| Avg. # sentences | 5.0 (±2.8) | 1.0 (±0) |
| Avg. # words | 37.6 (±21.1) | 7.8 (±5.1) |
| Avg. # actions | 10.4 (±5.7) | 2.1 (±2.4) |

55

Plan Construction

• Test how well the system infers the correct

navigation plans

• Gold-standard plans annotated manually

• Use partial parse accuracy as metric

– Credit for the correct action type (e.g. Turn)

– Additional credit for correct arguments (e.g. LEFT)

• Lexicon learned and tested on the same data

from two maps out of three

56

Plan Construction

| | Precision | Recall | F1 |
|---|---|---|---|
| Basic Plans | 81.46 | 55.88 | 66.27 |
| Landmarks Plans | 45.42 | 85.46 | 59.29 |
| Refined Landmarks Plans | 87.32 | 72.96 | 79.49 |

57
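As a quick sanity check on the table, F1 is the harmonic mean of precision and recall. The snippet below recomputes it from the reported precision and recall; the small differences from the reported F1 values are assumed to come from rounding or from averaging over folds, which the slides do not specify.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for the three plan types in the table.
rows = {
    "Basic Plans": (81.46, 55.88, 66.27),
    "Landmarks Plans": (45.42, 85.46, 59.29),
    "Refined Landmarks Plans": (87.32, 72.96, 79.49),
}
recomputed = {name: f1(p, r) for name, (p, r, _) in rows.items()}
```

The recomputed values agree with the reported ones to within a few hundredths of a point.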

Parse Accuracy

• Examine how well the learned semantic

parsers can parse novel sentences

• Train semantic parsers on the inferred plans

using KRISP [Kate and Mooney, 2006]

• Leave-one-map-out approach

• Use partial parse accuracy as metric

• Upper baseline: train on human annotated

plans
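The leave-one-map-out protocol can be sketched as a simple generator: train on the examples from all maps but one and test on the held-out map, rotating through every map. The map names and example identifiers below are placeholders, not the names used in the corpus.

```python
from typing import Dict, Iterator, List, Tuple


def leave_one_map_out(examples_by_map: Dict[str, List[str]]
                      ) -> Iterator[Tuple[str, List[str], List[str]]]:
    """Yield (held_out_map, training_examples, test_examples) for each map."""
    for held_out in examples_by_map:
        train = [ex for name, exs in examples_by_map.items()
                 if name != held_out for ex in exs]
        test = list(examples_by_map[held_out])
        yield held_out, train, test


# Placeholder data: three maps with a few example identifiers each.
data = {"map_a": ["a1", "a2"], "map_b": ["b1"], "map_c": ["c1", "c2", "c3"]}
folds = list(leave_one_map_out(data))
```

With three maps this produces three folds, so every instruction is tested in an environment the parser never saw during training.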

58

Parse Accuracy

| | Precision | Recall | F1 |
|---|---|---|---|
| Basic Plans | 85.39 | 50.95 | 63.76 |
| Landmarks Plans | 52.20 | 50.48 | 51.11 |
| Refined Landmarks Plans | 88.36 | 57.03 | 69.31 |
| Human Annotated Plans | 90.09 | 76.29 | 82.62 |

59

End-to-end Execution

• Test how well the system can perform the

overall navigation task

• Leave-one-map-out approach

• Strict metric: Only successful if the final

position matches exactly
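The strict metric can be illustrated on a toy grid. The sketch below is a hypothetical stand-in for the real simulator: it assumes an open grid with no walls, unit-length Forward moves and 90-degree turns, and it compares only final positions.

```python
from typing import List, Tuple

# Headings in clockwise order: north, east, south, west.
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def execute(actions: List[str], start: Tuple[int, int] = (0, 0), heading: int = 0) -> Tuple[int, int]:
    """Run primitive actions on an open grid and return the final position."""
    x, y = start
    for act in actions:
        if act == "Forward":
            dx, dy = HEADINGS[heading]
            x, y = x + dx, y + dy
        elif act == "Left":
            heading = (heading - 1) % 4
        elif act == "Right":
            heading = (heading + 1) % 4
        else:
            raise ValueError(f"unknown primitive action: {act}")
    return (x, y)


def strict_success(predicted: List[str], reference: List[str]) -> bool:
    """Success only if the predicted trace ends exactly where the reference ends."""
    return execute(predicted) == execute(reference)


final_position = execute(["Forward", "Left", "Forward", "Forward"])
```

Under this metric a trace that is off by a single step counts as a failure, which is part of why paragraph-level accuracy is much lower than single-sentence accuracy.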

60

End-to-end Execution

• Lower baseline

– A simple generative model based on the

frequency of actions alone

• Upper baselines

– Training with human annotated gold plans

– Complete MARCO system [MacMahon, 2006]

– Humans

61

End-to-end Execution

| | Single Sentences | Paragraphs |
|---|---|---|
| Simple Generative Model | 11.08% | 2.15% |
| Basic Plans | 58.72% | 16.21% |
| Landmarks Plans | 18.67% | 2.66% |
| Refined Landmarks Plans | 57.28% | 19.18% |
| Human Annotated Plans | 62.67% | 29.59% |
| MARCO | 77.87% | 55.69% |
| Human Followers | N/A | 69.64% |

62

Example Parse

Instruction:

“Place your back against the wall of the ‘T’ intersection. Turn left. Go forward along the pink-flowered carpet hall two segments to the intersection with the brick hall. This intersection contains a hatrack. Turn left. Go forward three segments to an intersection with a bare concrete hall, passing a lamp. This is Position 5.”

Parse:

Turn ( ),
Verify ( back: WALL ),
Turn ( LEFT ),
Travel ( ),
Verify ( side: BRICK HALLWAY ),
Turn ( LEFT ),
Travel ( steps: 3 ),
Verify ( side: CONCRETE HALLWAY )

63

Mandarin Chinese Experiment

• Translated all the instructions from English to

Chinese

• Train and test in the same way

• Chinese does not include word boundaries

(spaces)

• Naively segment each character

• Use a trained Chinese word segmenter

[Chang, Galley & Manning, 2008]
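The naive option, treating every character as its own token, needs no linguistic resources at all. A minimal sketch is shown below; the trained word segmenter is a separate tool and is not reproduced here.

```python
from typing import List


def segment_by_character(text: str) -> List[str]:
    """Split text into single-character tokens, dropping any whitespace."""
    return [ch for ch in text if not ch.isspace()]


# "Turn right at the restaurant", the instruction from the earlier navigation example.
tokens = segment_by_character("在餐廳的地方右轉")
```

With character tokens, multi-character words such as 餐廳 ("restaurant") can still be learned as 2-grams by the lexicon learner, which may explain why the naive scheme stays competitive on single sentences.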

64

Mandarin Chinese Experiment

| | Single Sentences | Paragraphs |
|---|---|---|
| Refined Landmarks Plans (segment by character) | 58.54% | 16.11% |
| Refined Landmarks Plans (segment by Stanford segmenter) | 58.70% | 20.13% |

65

Collect Additional Training Data Using

Mechanical Turk

• Needs 2 types of data

– Navigation instructions

– Action traces corresponding to those instructions

• Design 2 Human Intelligence Tasks (HITs) to

collect them from Amazon’s Mechanical Turk

66

Instructor Task

• Shown a randomly generated simulation that

contains 1 to 4 actions

• Workers asked to write an instruction that

would lead someone to perform those actions

– Intended to be single sentences, but not enforced

67

Follower Task

• Given an instruction and placed at the starting

position

• Worker asked to follow the instruction as best

as they can

68

Data Statistics

• Two-month period, 653 workers, $277.92

• Over 2,400 instructions and 14,000 follower traces

• Filter so that instructions need to have > 5 followers

and majority agreement on a single path
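The filtering rule can be sketched as follows. This is an interpretation, not the original script: "majority agreement" is read here as strictly more than half of the followers producing the identical action sequence, and the function name is hypothetical.

```python
from collections import Counter
from typing import List, Optional


def agreed_path(traces: List[List[str]], min_followers_exclusive: int = 5) -> Optional[List[str]]:
    """Return the majority path if the instruction passes the filter, else None.

    An instruction is kept only if it has more than `min_followers_exclusive`
    follower traces and more than half of them are identical (assumed meaning
    of "majority agreement on a single path").
    """
    if len(traces) <= min_followers_exclusive:
        return None
    path, votes = Counter(tuple(t) for t in traces).most_common(1)[0]
    return list(path) if votes > len(traces) / 2 else None


# Six followers, four of whom took the same path: the instruction is kept.
kept = agreed_path([["Forward", "Left"]] * 4 + [["Forward"], ["Left"]])
```

The majority path then serves as the action trace paired with that instruction in the additional training data.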

| | MTurk | Original Data |
|---|---|---|
| # Instructions | 1011 | 3236 |
| Vocabulary size | 590 | 629 |
| Avg. # words | 7.69 (±7.12) | 7.8 (±5.1) |
| Avg. # actions | 1.84 (±1.24) | 2.1 (±2.4) |

69

Mechanical Turk Training Data Experiment

| | Single Sentences | Paragraphs |
|---|---|---|
| Refined Landmarks Plans | 57.28% | 19.18% |
| Refined Landmarks Plans (with additional training data from Mechanical Turk) | 57.62% | 20.64% |

70

System Improvements

• Tighter integration of the components

• Learning higher-order concepts

• Incorporating syntactic information

• Scaling the system

• Sportscasting task

– More detailed meaning representation

– Entertainment (variability, emotion, etc.)

• Navigation task

– Generating instructions

– Dialogue system for more interactions

72

Long-term Directions

• Data acquisition framework

– Let users of the system also provide training data

• Real perceptions

– Use computer vision to recognize objects and

events

• Machine Translation

– Learn translation models from comparable

corpora

73

Conclusion

• Traditional language learning approach relies on

expensive, annotated training data.

• We have developed language learning systems that

can learn by observing language used in a relevant,

perceptual environment.

• We have devised two test applications that address

two different scenarios:

– Learning to sportscast (limited ambiguity)

– Learning to follow navigation instructions (exponential

ambiguity)

74