Grounded Language Learning Models for Ambiguous Supervision Joohyun Kim

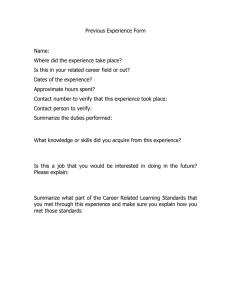

Supervising Professor: Raymond J. Mooney
Ph.D. Thesis Defense Talk
August 23, 2013

Outline
• Introduction/Motivation
• Grounded Language Learning in Limited Ambiguity (Kim and Mooney, COLING 2010)
  – Learning to sportscast
• Grounded Language Learning in High Ambiguity (Kim and Mooney, EMNLP 2012)
  – Learn to follow navigational instructions
• Discriminative Reranking for Grounded Language Learning (Kim and Mooney, ACL 2013)
• Future Directions
• Conclusion

Language Grounding
• The process of acquiring the semantics of natural language with respect to relevant perceptual contexts
• Human children ground language in perceptual contexts through repeated exposure, in a statistical way (Saffran et al. 1999, Saffran 2003)
• Ideally, we want a computational system to learn in a similar way

Language Grounding: Machine
[Figure: a machine with two labeled inputs. Language Learning: the sentence "Iran’s goalkeeper blocks the ball". Computer Vision: the event Block(IranGoalKeeper)]

Natural Language and Meaning Representation
• Natural Language (NL): "Iran’s goalkeeper blocks the ball"
  – A language that arises naturally from the innate nature of human intellect, such as English, German, French, or Korean
• Meaning Representation Language (MRL): Block(IranGoalKeeper)
  – A formal language that a machine can understand, such as logic or any computer-executable code

Semantic Parsing and Surface Realization
• NL: "Iran’s goalkeeper blocks the ball"
• MRL: Block(IranGoalKeeper)
• Semantic Parsing (NL → MRL): maps a natural-language sentence to a full, detailed semantic representation
  → Machine understands natural language
• Surface Realization (MRL → NL): generates a natural-language sentence from a meaning representation.
  → Machine communicates with natural language

Conventional Language Learning Systems
• Require manually annotated corpora
• Annotation is time-consuming, hard to acquire, and not scalable
[Figure: Manually Annotated Training Corpora (NL/MRL pairs) → Semantic Parser Learner → Semantic Parser, which maps NL to MRL]

Learning from Perceptual Environment
• Motivated by how children learn language in a rich, ambiguous perceptual environment with linguistic input
• Advantages
  – Naturally obtainable corpora
  – Relatively easy to annotate
  – Motivated by the natural process of human language learning

Navigation Example (slides from David Chen)
• Alice gives Bob an instruction in Korean: 식당에서 우회전 하세요 ("Turn right at the restaurant")
• Alice gives Bob another instruction: 병원에서 우회전 하세요 ("Turn right at the hospital")
• Across Scenario 1 and Scenario 2, both instructions share the same action: make a right turn
• What differs is the landmark in the scene: 식당 ("restaurant") in Scenario 1 and 병원 ("hospital") in Scenario 2

Thesis Contributions
• Generative models for grounded language learning from an ambiguous perceptual environment
  – Unified probabilistic model incorporating linguistic cues and MR structures (vs. previous approaches)
  – General framework of probabilistic approaches that learn NL-MR correspondences from ambiguous supervision
• Adapting discriminative reranking to grounded language learning
  – Standard reranking cannot be applied directly
  – No single gold-standard reference for training data
  – Weak response from the perceptual environment can train a discriminative reranker

Navigation Task (Chen and Mooney, 2011)
• Learn to interpret and follow navigation instructions
  – e.g., "Go down this hall and make a right when you see an elevator to your left"
• Use virtual worlds and instructor/follower data from MacMahon et al. (2006)
• No prior linguistic knowledge
• Infer language semantics by observing how humans follow instructions

Sample Environment (MacMahon et al., 2006)
[Figure: map of a virtual environment. Legend: H = Hat Rack, L = Lamp, E = Easel, S = Sofa, B = Barstool, C = Chair]

Executing Test Instruction
[Figure]

Task Objective
• Learn the underlying meanings of instructions by observing human actions for the instructions
  – Learn to map instructions (NL) into the correct formal plan of actions (MR)
• Learn from high ambiguity
  – Training input: pairs of NL instruction and landmarks plan (Chen and Mooney, 2011)
  – Landmarks plan
    • Describes actions in the environment along with notable objects encountered on the way
    • Overestimates the meaning of the instruction, including unnecessary details
    • Only a subset of the plan is relevant for the instruction

Challenges
• Instruction: "at the easel, go left and then take a right onto the blue path at the corner"
• Landmarks plan: Travel(steps: 1), Verify(at: EASEL, side: CONCRETE HALLWAY), Turn(LEFT), Verify(front: CONCRETE HALLWAY), Travel(steps: 1), Verify(side: BLUE HALLWAY, front: WALL), Turn(RIGHT), Verify(back: WALL, front: BLUE HALLWAY, front: CHAIR, front: HATRACK, left: WALL, right: EASEL)
• Correct plan: some subset of these same components (the slide marks the relevant ones)
• Exponential Number of Possibilities!
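
To give a feel for the "exponential" claim, the following rough Python sketch counts candidate sub-plans of the landmarks plan shown above. It rests on a simplifying assumption made only for illustration (not necessarily how the thesis or Chen and Mooney (2011) enumerate refinements): each action is either dropped or kept with a non-empty subset of its arguments. The names `LANDMARKS_PLAN` and `count_subplans` are hypothetical.

```python
# Landmarks plan from the example instruction, as (action, arguments) pairs.
LANDMARKS_PLAN = [
    ("Travel", ["steps: 1"]),
    ("Verify", ["at: EASEL", "side: CONCRETE HALLWAY"]),
    ("Turn", ["LEFT"]),
    ("Verify", ["front: CONCRETE HALLWAY"]),
    ("Travel", ["steps: 1"]),
    ("Verify", ["side: BLUE HALLWAY", "front: WALL"]),
    ("Turn", ["RIGHT"]),
    ("Verify", ["back: WALL", "front: BLUE HALLWAY", "front: CHAIR",
                "front: HATRACK", "left: WALL", "right: EASEL"]),
]


def count_subplans(plan):
    """Count candidate sub-plans, assuming each action is either dropped
    or kept with a non-empty subset of its arguments (illustrative only)."""
    total = 1
    for _action, args in plan:
        keep_options = 2 ** len(args) - 1  # non-empty argument subsets
        total *= 1 + keep_options          # plus one option: drop the action
    return total


if __name__ == "__main__":
    # 15 arguments in total, so 2**15 = 32768 (the empty plan is included).
    print(count_subplans(LANDMARKS_PLAN))
```

Under this counting, even one short instruction paired with an eight-action landmarks plan yields tens of thousands of candidate plans, and the count doubles with every additional argument.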
Combinatorial matching problem between instruction and landmarks plan

Previous Work (Chen and Mooney, 2011)
• Circumvents the combinatorial NL-MR correspondence problem
  – Constructs supervised NL-MR training data by refining the landmarks plan with a learned semantic lexicon
    • Greedily selects high-score lexemes to choose probable MR components out of the landmarks plan
  – Trains a supervised semantic parser to map a novel instruction (NL) to the correct formal plan (MR)
  – Loses information during refinement
    • Deterministically selects high-score lexemes
    • Ignores possibly useful low-score lexemes
    • Some relevant MR components are not considered at all

Proposed Solution (Kim and Mooney, 2012)
• Learn a probabilistic semantic parser directly from ambiguous training data
  – Disambiguate the input and learn to map NL instructions to formal MR plans
  – Semantic lexicon (Chen and Mooney, 2011) as the basic unit for building NL-MR correspondences
  – Transforms into a standard PCFG (Probabilistic Context-Free Grammar) induction problem with semantic lexemes as nonterminals and NL words as terminals

System Diagram (Chen and Mooney, 2011)
[Figure: learning system for parsing navigation instructions (Learning/Inference). Training: Observation (Instruction, World State, Action Trace) → Navigation Plan Constructor → Landmarks Plan → Plan Refinement (possible information loss) → Supervised Refined Plan → (Supervised) Semantic Parser Learner. Testing: Instruction, World State → Semantic Parser → Execution Module (MARCO) → Action Trace]

System Diagram of Proposed Solution
[Figure: learning system for parsing navigation instructions. Training: Observation (Instruction, World State, Action Trace) → Navigation Plan Constructor → Landmarks Plan; Landmarks Plan and Instruction → Probabilistic Semantic Parser Learner (from ambiguous supervision). Testing: Instruction, World State → Semantic Parser → Execution Module (MARCO) → Action Trace]

PCFG Induction Model for Grounded Language Learning (Borschinger et al. 2011)
• PCFG rules describe the generative process from MR components to the corresponding NL words

Hierarchy Generation PCFG Model (Kim and Mooney, 2012)
• Limitations of Borschinger et al. 2011
  – Only works in low-ambiguity settings
    • 1 NL sentence paired with a handful of MRs (on the order of 10s at most)
  – Only outputs MRs included in the PCFG constructed from the training data
• Proposed model
  – Use semantic lexemes as units of semantic concepts
  – Disambiguate NL-MR correspondences at the semantic concept (lexeme) level
  – Disambiguate a much higher level of ambiguous supervision
  – Output novel MRs not appearing in the PCFG by composing the MR parse from semantic lexeme MRs

Semantic Lexicon (Chen and Mooney, 2011)
• Pair of NL phrase w and MR subgraph g
• Based on correlations between NL instructions and context MRs (landmarks plans)
  – How probable graph g is given that phrase w is seen: co-occurrence of g and w, compared against the general occurrence of g without w
• Examples
  – "to the stool": Travel(), Verify(at: BARSTOOL)
  – "black easel": Verify(at: EASEL)
  – "turn left and walk": Turn(), Travel()

Lexeme Hierarchy Graph (LHG)
• Hierarchy of semantic lexemes by subgraph relationship, constructed for each training example
  – Lexeme MRs = semantic concepts
  – Lexeme hierarchy = semantic concept hierarchy
  – Shows how complicated semantic concepts hierarchically generate smaller concepts, which are further connected to NL word groundings
[Figure: example LHG whose nodes are lexeme MRs built from Turn(RIGHT), Verify(side: HATRACK, front: SOFA), Travel(steps: 3), and Verify(at: EASEL), with smaller subgraphs placed below the larger ones that contain them]

PCFG Construction
• Add rules for each node in the LHG
  – Each complex concept chooses which subconcepts to describe; these are finally connected to the NL instruction
    • Each node generates all k-permutations of its children nodes, since we do not know which subset is correct
  – NL words are generated by lexeme nodes through a unigram Markov process (Borschinger et al. 2011)
  – PCFG rule weights are optimized by EM
    • The most probable MR components out of all possible combinations are estimated

PCFG Construction (rules)
• $\mathit{Root} \rightarrow S_c, \quad \forall c \in \mathit{contexts}$
• For every non-leaf node and its MR $m$:
  – $S_m \rightarrow \{S_{m_1}, \ldots, S_{m_n}\}$, where $m_1, \ldots, m_n$ are the children lexeme MRs of $m$ and $\{\cdot\}$ denotes all $k$-permutations for $k = 1, \ldots, n$
• For every lexeme MR $m$:
  – $S_m \rightarrow \mathit{Phrase}_m$
  – $\mathit{Phrase}_m \rightarrow \mathit{Word}_m \mid \mathit{PhX}_m\ \mathit{Word}_m \mid \mathit{Ph}_m\ \mathit{Word}_\emptyset$
  – $\mathit{Ph}_m \rightarrow \mathit{PhX}_m\ \mathit{Word}_m \mid \mathit{Ph}_m\ \mathit{Word}_\emptyset \mid \mathit{Word}_m$
  – $\mathit{PhX}_m \rightarrow \mathit{PhX}_m\ \mathit{Word}_m \mid \mathit{PhX}_m\ \mathit{Word}_\emptyset \mid \mathit{Word}_m \mid \mathit{Word}_\emptyset$
  – $\mathit{Word}_m \rightarrow s, \quad \forall s \text{ s.t. } (s, m) \in \text{lexicon } L$
  – $\mathit{Word}_m \rightarrow w, \quad \forall \text{ word } w \in s \text{ s.t. } (s, m) \in \text{lexicon } L$
  – $\mathit{Word}_\emptyset \rightarrow w, \quad \forall \text{ word } w \in \mathit{NLs}$
• Child concepts are generated from parent concepts selectively
• All semantic concepts generate relevant NL words
• Each semantic concept generates at least one NL word

Parsing New NL Sentences
• PCFG rule weights are optimized by the Inside-Outside algorithm on the training data
• Obtain the most probable parse tree for each test NL sentence from the learned weights using the CKY algorithm
• Compose the final MR parse from the lexeme MRs that appear in the parse tree
  – Consider only the lexeme MRs responsible for generating NL words
  – From the bottom of the tree, mark only the responsible MR components that propagate to the top level
  – Able to compose novel MRs never seen in the training data

Most probable parse tree for a test NL instruction
[Figure: parse tree for the NL instruction "Turn left and find the sofa then turn around the corner". The top lexeme MR is Turn(LEFT), Verify(front: BLUE HALL, front: SOFA), Travel(steps: 2), Verify(at: SOFA), Turn(RIGHT); lower nodes hold smaller lexeme MRs such as Turn(LEFT), Verify(front: SOFA) and Travel(steps: 2), Verify(at: SOFA), Turn(RIGHT). The MR components responsible for generating NL words are marked bottom-up and composed into the final MR parse]

Unigram Generation PCFG Model
• Limitations of the Hierarchy Generation PCFG Model
  – Complexities caused by the Lexeme Hierarchy Graph and k-permutations
  – Tends to over-fit to the training data
• Proposed solution: a simpler model
  – Generate relevant semantic lexemes one by one
  – No extra PCFG rules for k-permutations
  – Maintains a simpler PCFG rule set, faster to train

PCFG Construction
• Unigram Markov generation of relevant lexemes
  – Each context MR generates relevant lexemes one by one
  – Permutations of the order in which relevant lexemes appear are already considered

PCFG Construction (rules)
• $\mathit{Root} \rightarrow S_c, \quad \forall c \in \mathit{contexts}$
• For every lexeme MR $m$:
  – $S_c \rightarrow L_m\ S_c \mid L_m$
  – $L_m \rightarrow \mathit{Phrase}_m$
  – $\mathit{Phrase}_m \rightarrow \mathit{Word}_m \mid \mathit{PhX}_m\ \mathit{Word}_m \mid \mathit{Ph}_m\ \mathit{Word}_\emptyset$
  – $\mathit{Ph}_m \rightarrow \mathit{PhX}_m\ \mathit{Word}_m \mid \mathit{Ph}_m\ \mathit{Word}_\emptyset \mid \mathit{Word}_m$
  – $\mathit{PhX}_m \rightarrow \mathit{PhX}_m\ \mathit{Word}_m \mid \mathit{PhX}_m\ \mathit{Word}_\emptyset \mid \mathit{Word}_m \mid \mathit{Word}_\emptyset$
  – $\mathit{Word}_m \rightarrow s, \quad \forall s \text{ s.t. } (s, m) \in \text{lexicon } L$
  – $\mathit{Word}_m \rightarrow w, \quad \forall \text{ word } w \in s \text{ s.t. } (s, m) \in \text{lexicon } L$
• $S_\emptyset \rightarrow \mathit{Phrase}_\emptyset$
• $\mathit{Phrase}_\emptyset \rightarrow \mathit{Phrase}_\emptyset\ \mathit{Word}_\emptyset$
• $\mathit{Word}_\emptyset \rightarrow w, \quad \forall \text{ word } w \in \mathit{NLs}$
• Each semantic concept is generated by a unigram Markov process
• All semantic concepts generate relevant NL words

Parsing New NL Sentences
• Follows a scheme similar to that of the Hierarchy Generation PCFG model
• Compose the final MR parse from the lexeme MRs that appear in the parse tree
  – Consider only the lexeme MRs responsible for generating NL words
  – Mark the relevant lexeme MR components in the context MR appearing in the top nonterminal

Most probable parse tree for a test NL instruction
[Figure: parse tree for the NL instruction "Turn left and find the sofa then turn around the corner". The top nonterminal carries the context MR Turn(LEFT), Verify(front: BLUE HALL, front: SOFA), Travel(steps: 2), Verify(at: SOFA), Turn(RIGHT); below it, relevant lexemes such as Turn(LEFT), Travel, Verify(at: SOFA), and Turn generate the NL words, and the corresponding components are marked in the context MR]

Data
• 3 maps, 6 instructors, 1-15 followers/direction
• Hand-segmented into single-sentence steps to make learning easier (Chen & Mooney, 2011)
• Mandarin Chinese translation of each sentence (Chen, 2012)
• Word-segmented version by the Stanford Chinese Word Segmenter
• Character-segmented version
• Example
  – Paragraph: "Take the wood path towards the easel. At the easel, go left and then take a right on the the blue path at the corner. Follow the blue path towards the chair and at the chair, take a right towards the stool. When you reach the stool, you are at 7."
    • Actions: Turn, Forward, Turn left, Forward, Turn right, Forward x 3, Turn right, Forward
  – Single sentence: "Take the wood path towards the easel."
    • Actions: Turn
  – Single sentence: "At the easel, go left and then take a right on the the blue path at the corner."
    • Actions: Forward, Turn left, Forward, Turn right

Data Statistics

| | Paragraph | Single-Sentence |
|---|---|---|
| # Instructions | 706 | 3236 |
| Avg. # sentences | 5.0 (±2.8) | 1.0 (±0) |
| Avg. # actions | 10.4 (±5.7) | 2.1 (±2.4) |
| Avg. # words / sent. (English) | 37.6 (±21.1) | 7.8 (±5.1) |
| Avg. # words / sent. (Chinese-Word) | 31.6 (±18.1) | 6.9 (±4.9) |
| Avg. # words / sent. (Chinese-Character) | 48.9 (±28.3) | 10.6 (±7.3) |
| Vocabulary (English) | 660 | 629 |
| Vocabulary (Chinese-Word) | 661 | 508 |
| Vocabulary (Chinese-Character) | 448 | 328 |

Evaluations
• Leave-one-map-out approach
  – 2 maps for training and 1 map for testing
  – Parse accuracy and plan execution accuracy
• Compared with Chen and Mooney, 2011 and Chen, 2012
  – Ambiguous context (landmarks plan) is refined by greedy selection of high-score lexemes with two different lexicon learning algorithms
    • Chen and Mooney, 2011: Graph Intersection Lexicon Learning (GILL)
    • Chen, 2012: Subgraph Generation Online Lexicon Learning (SGOLL)
  – Semantic parser KRISP (Kate and Mooney, 2006) trained on the resulting supervised data

Parse Accuracy
• Evaluates how well the learned semantic parsers can parse novel sentences in the test data
• Metric: partial parse accuracy

Parse Accuracy (English)

| System | Precision | Recall | F1 |
|---|---|---|---|
| Chen & Mooney (2011) | 90.16 | 55.41 | 68.59 |
| Chen (2012) | 88.36 | 57.03 | 69.31 |
| Hierarchy Generation PCFG Model | 87.58 | 65.41 | 74.81 |
| Unigram Generation PCFG Model | 86.1 | 68.79 | 76.44 |

Parse Accuracy (Chinese-Word)

| System | Precision | Recall | F1 |
|---|---|---|---|
| Chen (2012) | 88.87 | 58.76 | 70.74 |
| Hierarchy Generation PCFG Model | 80.56 | 71.14 | 75.53 |
| Unigram Generation PCFG Model | 79.45 | 73.66 | 76.41 |

Parse Accuracy (Chinese-Character)

| System | Precision | Recall | F1 |
|---|---|---|---|
| Chen (2012) | 92.48 | 56.47 | 70.01 |
| Hierarchy Generation PCFG Model | 79.77 | 67.38 | 73.05 |
| Unigram Generation PCFG Model | 79.73 | 75.52 | 77.55 |

End-to-End Execution Evaluations
• Test how well the formal plan output by the semantic parser reaches the destination
• Strict metric: successful only if the final position matches exactly
  – Also considers facing direction in single-sentence execution
  – Paragraph execution is affected by the failure of even one single-sentence execution

End-to-End Execution Evaluations (English)

| System | Single-sentence | Paragraph |
|---|---|---|
| Chen & Mooney (2011) | 54.4 | 16.18 |
| Chen (2012) | 57.28 | 19.18 |
| Hierarchy Generation PCFG Model | 57.22 | 20.17 |
| Unigram Generation PCFG Model | 67.14 | 28.12 |

End-to-End Execution Evaluations (Chinese-Word)

| System | Single-sentence | Paragraph |
|---|---|---|
| Chen (2012) | 58.7 | 20.13 |
| Hierarchy Generation PCFG Model | 61.03 | 19.08 |
| Unigram Generation PCFG Model | 63.4 | 23.12 |

End-to-End Execution Evaluations (Chinese-Character)

| System | Single-sentence | Paragraph |
|---|---|---|
| Chen (2012) | 57.27 | 16.73 |
| Hierarchy Generation PCFG Model | 55.61 | 12.74 |
| Unigram Generation PCFG Model | 62.85 | 23.33 |

Discussion
• Better recall in parse accuracy
  – Our probabilistic model also uses useful but low-score lexemes → more coverage
  – Unified models are not vulnerable to intermediate information loss
• Hierarchy Generation PCFG model over-fits to training data
  – Complexities: LHG and k-permutation rules
    • Particularly weak on the Chinese-character corpus
    • Longer avg. sentence length: hard to estimate PCFG weights
• Unigram Generation PCFG model is better
  – Less complexity, avoids over-fitting, better generalization
• Better than Borschinger et al. 2011
  – Overcomes intractability in complex MRL
  – Learns from more general, complex ambiguity
  – Produces novel MR parses never seen during training

Comparison of Grammar Size and EM Training Time

| Data | Hierarchy: grammar size | Hierarchy: time (hrs) | Unigram: grammar size | Unigram: time (hrs) |
|---|---|---|---|---|
| English | 20451 | 17.26 | 16357 | 8.78 |
| Chinese (Word) | 21636 | 15.99 | 15459 | 8.05 |
| Chinese (Character) | 19792 | 18.64 | 13514 | 12.58 |

Discriminative Reranking
• Effective approach to improve the performance of generative models with a secondary discriminative model
• Applied to various NLP tasks
  – Syntactic parsing (Collins, ICML 2000; Collins, ACL 2002; Charniak & Johnson, ACL 2005)
  – Semantic parsing (Lu et al., EMNLP 2008; Ge and Mooney, ACL 2006)
  – Part-of-speech tagging (Collins, EMNLP 2002)
  – Semantic role labeling (Toutanova et al., ACL 2005)
  – Named entity recognition (Collins, ACL 2002)
  – Machine translation (Shen et al., NAACL 2004; Fraser and Marcu, ACL 2006)
  – Surface realization in language generation (White & Rajkumar, EMNLP 2009; Konstas & Lapata, ACL 2012)
• Goal
  – Adapt discriminative reranking to grounded language learning

Discriminative Reranking
• Generative model
  – The trained model outputs the single best result, the one with maximum probability
[Figure: Testing Example → Trained Generative Model → Candidate 1 (1-best candidate with maximum probability)]

Discriminative Reranking
• Can we do better?
  – A secondary discriminative model picks the best out of the n-best candidates from the baseline model
[Figure: Testing Example → Trained Baseline Generative Model (GEN) → n-best candidates (Candidate 1, ..., Candidate n) → Trained Secondary Discriminative Model → Best prediction (Output)]

How can we apply discriminative reranking?
• Impossible to apply standard discriminative reranking to grounded language learning
  – Lack of a single gold-standard reference for each training example
  – Instead, the data provide weak supervision from the surrounding perceptual context (landmarks plan)
• Use response feedback from the perceptual world
  – Evaluate candidate formal MRs by executing them in simulated worlds
    • Also used in evaluating the final end-task, plan execution
  – Weak indication of whether a candidate is good or bad
  – Multiple candidate parses for parameter update
    • Response signal is weak and distributed over all candidates

Reranking Model: Averaged Perceptron (Collins, 2000)
• The parameter weight vector is updated when the trained model predicts a wrong candidate
[Figure: Training Example → Trained Baseline Generative Model (GEN, our generative models) → n-best candidates with feature vectors $a_1, \ldots, a_n$ and perceptron scores $W \cdot a$. The best prediction is Candidate 4 (score 1.46), and the perceptron $W$ would be updated with $a_g - a_4$, where $a_g$ is the feature vector of the gold-standard reference. The gold-standard reference is not available in our setting]

Response-based Weight Update
• Pick a pseudo-gold parse out of all candidates
  – The most preferred one in terms of plan execution
  – Evaluate the composed MR plans from candidate parses
  – The MARCO (MacMahon et al., AAAI 2006) execution module runs and evaluates each candidate MR in the world
    • Also used for evaluating the end goal, plan execution performance
  – Record the execution success rate
    • Whether each candidate MR reaches the intended destination
    • MARCO is nondeterministic, so average over 10 trials
  – Prefer the candidate with the best execution success rate during training

Response-based Update
• Select the pseudo-gold reference based on MARCO execution results

| Candidate | Derived MR | Execution success rate (MARCO) | Perceptron score ($W \cdot a$) | Role |
|---|---|---|---|---|
| 1 | $MR_1$ | 0.6 | 1.79 | Best prediction |
| 2 | $MR_2$ | 0.4 | 0.21 | |
| 3 | $MR_3$ | 0.0 | -1.09 | |
| 4 | $MR_4$ | 0.9 | 1.46 | Pseudo-gold reference |
| n | $MR_n$ | 0.2 | 0.59 | |

• Update: the perceptron $W$ is updated with the feature vector difference between the pseudo-gold reference and the best prediction

Weight Update with Multiple Parses
• Candidates other than the pseudo-gold could be useful
  – Multiple parses may have the same maximum execution success rate
  – "Lower" execution success rates could still mean a correct plan, given the indirect supervision of human follower actions
    • MR plans are underspecified or have ignorable details attached
    • Sometimes inaccurate, but contain the correct MR components to reach the desired goal
• Weight update with multiple candidate parses
  – Use candidates with higher execution success rates than the currently best-predicted candidate
  – Update with the feature vector difference weighted by the difference between execution success rates

Weight Update with Multiple Parses
• Weight update with multiple candidates that have a higher execution success rate than the currently predicted parse

| Candidate | Derived MR | Execution success rate (MARCO) | Perceptron score ($W \cdot a$) | Role |
|---|---|---|---|---|
| 1 | $MR_1$ | 0.6 | 1.24 | Update (2): feature vector difference × (0.6 − 0.4) |
| 2 | $MR_2$ | 0.4 | 1.83 | Best prediction |
| 3 | $MR_3$ | 0.0 | -1.09 | |
| 4 | $MR_4$ | 0.9 | 1.46 | Update (1): feature vector difference × (0.9 − 0.4) |
| n | $MR_n$ | 0.2 | 0.59 | |

Features
• Binary indicator of whether a certain composition of nonterminals/terminals appears in the parse tree (Collins, EMNLP 2002; Lu et al., EMNLP 2008; Ge & Mooney, ACL 2006)
• Example lexemes for the instruction "Turn left and find the sofa then turn around the corner"
  – L1: Turn(LEFT), Verify(front:SOFA, back:EASEL), Travel(steps:2), Verify(at:SOFA), Turn(RIGHT)
  – L2: Turn(LEFT), Verify(front:SOFA)
  – L3: Travel(steps:2), Verify(at:SOFA), Turn(RIGHT)
  – L4: Turn(LEFT)
  – L5: Travel(), Verify(at:SOFA)
  – L6: Turn()
[Figure: example indicator features, each with value 1, defined over lexeme nonterminals (L1, L3, L5, L6) and the word "find" in the parse tree]

Evaluations
• Leave-one-map-out approach
  – 2 maps for training and 1 map for testing
  – Parse accuracy
  – Plan execution accuracy (end goal)
• Compared with two baseline models
  – Hierarchy and Unigram Generation PCFG models
  – All reranking results use 50-best parses
  – Try to get the 50-best distinct composed MR plans and the corresponding parses out of the 1,000,000-best parses
    • Many parse trees differ insignificantly, leading to the same derived MR plans
    • Generate a sufficiently large set of 1,000,000-best parse trees from the baseline model

Response-based Update vs. Baseline (English)
[Chart: three panels (Parse F1, Single-sentence, Paragraph), each comparing Baseline and Response-based for the Hierarchy and Unigram models. Baseline (Hierarchy / Unigram): Parse F1 74.81 / 76.44; Single-sentence 57.22 / 67.14; Paragraph 20.17 / 28.12. Response-based bar labels: Parse F1 73.32, 77.24; Single-sentence 59.65, 68.27; Paragraph 22.62, 29.2]

Response-based Update vs. Baseline (Chinese-Word)
[Chart: same layout. Baseline (Hierarchy / Unigram): Parse F1 75.53 / 76.41; Single-sentence 61.03 / 63.4; Paragraph 19.08 / 23.12. Response-based bar labels: Parse F1 77.26, 77.74; Single-sentence 64.12, 65.64; Paragraph 21.29, 23.74]

Response-based Update vs. Baseline (Chinese-Character)
[Chart: same layout. Baseline (Hierarchy / Unigram): Parse F1 73.05 / 77.55; Single-sentence 55.61 / 62.85; Paragraph 12.74 / 23.33. Response-based bar labels: Parse F1 76.26, 79.76; Single-sentence 64.08, 65.5; Paragraph 22.25, 25.35]

Response-based Update vs. Baseline
• vs. Baseline
  – The response-based approach performs better in the final end-task, plan execution.
  – It optimizes the model for plan execution

Response-based Update with Multiple vs. Single Parses (English)
[Chart: three panels (Parse F1, Single-sentence, Paragraph), each comparing Single and Multi updates for the Hierarchy and Unigram models. Single bar labels: Parse F1 73.32, 77.24; Single-sentence 59.65, 68.27; Paragraph 22.62, 29.2. Multi bar labels: Parse F1 73.43, 77.81; Single-sentence 62.81, 68.93; Paragraph 26.57, 29.1]

Response-based Update with Multiple vs. Single Parses (Chinese-Word)
[Chart: same layout. Single bar labels: Parse F1 77.26, 77.74; Single-sentence 64.12, 65.64; Paragraph 21.29, 23.74. Multi bar labels: Parse F1 78.11, 78.8; Single-sentence 64.15, 66.27; Paragraph 21.55, 25.95]

Response-based Update with Multiple vs. Single Parses (Chinese-Character)
[Chart: same layout. Single bar labels: Parse F1 76.26, 79.76; Single-sentence 64.08, 65.5; Paragraph 22.25, 25.35. Multi bar labels: Parse F1 79.44, 79.94; Single-sentence 64.08, 66.84; Paragraph 22.58, 27.16]

Response-based Update with Multiple vs. Single Parses
• Using multiple parses improves performance in general
  – The single-best pseudo-gold parse provides only weak feedback
  – Candidates with low execution success rates produce underspecified plans or plans with ignorable details, but still capture the gist of the preferred actions
  – A variety of preferable parses helps improve the amount and the quality of weak feedback

Future Directions
• Integrating syntactic components
  – Learn a joint model of syntactic and semantic structure
• Large-scale data
  – Data collection, model adaptation to large scale
• Machine translation
  – Application to summarized translation
• Real perceptual data
  – Learn with raw features (sensory and vision data)

Conclusion
• Conventional language learning is expensive and not scalable because it requires annotated training data
• Grounded language learning from relevant perceptual context is promising, and the training corpus is easy to obtain
• Our proposed models provide a general framework of full probabilistic models for learning NL-MR correspondences with ambiguous supervision
• Discriminative reranking is possible and effective with weak feedback from the perceptual environment

Thank You!
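
Supplementary sketch: response-based weight update

The response-based update described in the reranking slides can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the thesis implementation: the function names `dot` and `response_based_update` are hypothetical, the weight averaging of the averaged perceptron is omitted, and the toy example uses one-hot feature vectors purely so that the initial scores W · a reproduce the numbers on the "Weight Update with Multiple Parses" slide.

```python
def dot(w, a):
    """Perceptron score W . a for one candidate feature vector."""
    return sum(wi * ai for wi, ai in zip(w, a))


def response_based_update(w, features, exec_rates, multi=True):
    """Return updated weights after one training example (no averaging).

    features[i]   : feature vector of candidate parse i
    exec_rates[i] : execution success rate of candidate i's MR plan
    """
    candidates = range(len(features))
    # Candidate the current model would pick.
    pred = max(candidates, key=lambda i: dot(w, features[i]))
    if multi:
        # Every candidate that executes better than the current prediction.
        targets = [i for i in candidates if exec_rates[i] > exec_rates[pred]]
    else:
        # Single pseudo-gold: the candidate with the best execution success rate.
        gold = max(candidates, key=lambda i: exec_rates[i])
        targets = [gold] if gold != pred else []
    new_w = list(w)
    for i in targets:
        # Multi-parse updates are weighted by the gap in execution success rate.
        scale = exec_rates[i] - exec_rates[pred] if multi else 1.0
        for k in range(len(new_w)):
            new_w[k] += scale * (features[i][k] - features[pred][k])
    return new_w


if __name__ == "__main__":
    # Toy setup (assumption): one-hot features, so W . a_i equals w[i].
    features = [[1.0 if j == i else 0.0 for j in range(5)] for i in range(5)]
    w = [1.24, 1.83, -1.09, 1.46, 0.59]      # perceptron scores from the slide
    exec_rates = [0.6, 0.4, 0.0, 0.9, 0.2]   # execution success rates from the slide
    print(response_based_update(w, features, exec_rates, multi=True))
    print(response_based_update(w, features, exec_rates, multi=False))
```

In the toy run, the predicted candidate is the second one (score 1.83, success rate 0.4), so the multi-parse variant applies two updates weighted by (0.6 − 0.4) and (0.9 − 0.4), while the single-parse variant applies one unweighted update toward the candidate with success rate 0.9.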