Bayesian analysis of mapped data Lecture #4: Spatial statistics in practice

advertisement

Lecture #4:

Bayesian analysis

of mapped data

Spatial statistics in practice

Center for Tropical Ecology and Biodiversity,

Tunghai University & Fushan Botanical Garden

Topics for today’s lecture

• Frequentist versus Bayesian perspectives.

• Implementing random effects models in

GeoBUGS.

• Spatially structured and unstructured

random effects: the CAR, the ICAR, and

the spatial filter specifications

LMM & GLMM move in the direction

of Bayesian modeling

• Fixed effects are in keeping with a

frequentist viewpoint―individual

unknown parameters

• Random effects are distributions of

parameters, and are in keeping with a

Bayesian viewpoint

• The model specifications tend to be

the same, with estimation methods

tending to differ

Sampling distribution-based

inference: the reported p values

• Frequentism: assigns probabilities to random

events only according to their relative frequencies

of occurrence, rejecting degree-of-belief

interpretations of mathematical probability theory.

• The frequentist approach considers to be an

unknown constant. Allowing for the possibility that

can take on a range of values, frequentist inference

is based on a hypothetical repeated sampling

principle that obtains desirable and (often)

physically interpretable properties (e.g., CIs).

• Frequentist statistics typically is limited to posing

questions in terms of a null hypothesis (i.e., H0) that

a parameter takes on a single value.

What is Bayesian analysis?

• Bayesianism: contends that mathematical

probability theory pertains to degree of

plausibility/belief; when used with Bayes theorem

(which casts analysis in conditional terms), it

becomes Bayesian inference.

• The Bayesian approach considers as the realized

value of a random variable Θ with a probability

density (mass) function called the prior distribution

(a marginal probability distribution that is interpreted

as a description of what is known about a variable

in the absence of empirical/theoretical evidence).

• Bayesian statistics furnishes a generic tool for

inferring model parameter probability distribution

functions from data: a parameter has a distribution,

not a single value.

• The Bayesian interpretation of probability allows

(proper prior) probabilities to be assigned

subjectively to random events, in accordance with

a researcher’s beliefs.

• Proper prior: a probability distribution that is

normalized to one, and asymptotically

dominated by the likelihood function

– improper prior: a handy analytic form (such as

a constant or logarithmic distribution) that,

when integrated over a parameter space,

tends to 1 and so is not normalizable.

• Noninformative prior: because no prior information

is available, the selected prior contains

vague/general information about a parameter,

having minimal influence on inferences.

• conjugate prior: a prior probability distribution

naturally connected with the likelihood function that,

when combined with the likelihood and normalized,

produces a posterior probability distribution that is of

the same type as the prior, making the analytical

mathematics of integration easy. (NOTE: MCMC

techniques relaxes the need for this type of

specification)

• The hierarchical Bayes model is called “hierarchical”

because it has two levels. At the higher level, hyperparameter distributions are described by multivariate

priors. Such distributions are characterized by

vectors of means and covariance matrices; spatial

autocorrelation is captured here. At the lower

level, individuals’ behavior is described by

probabilities of achieving some outcome that are

governed by a particular model specification.

• posterior distribution: a conditional probability

distribution for a parameter taking empirical evidence

into account computed with Bayes’ theorem as a

normalized product of the likelihood function and the

prior distributions that supports Bayesian inference

about the parameter

• Bayesian statistics assumes that the probability

distribution in question is known, and hence involves

integration to get the normalizing constant. This

integration might be tricky, and in many cases there is no

analytical solution. With the advent of computers and

various integration techniques, this problem can partially

be overcome. In many Bayesian statistics applications a

prior is tabulated and then sophisticated numerical

integration techniques (e.g., MCMC) are used to derive

posterior distributions.

The controversy

• A frequentist generally has no objection to

the use of the Bayes formula, but when prior

probabilities are lacking s/he deplores the

Bayesians’ tendency to make them up out of

thin air.

• Does a parameter have one or many

values?

• For many (but not all) of the simpler

problems where frequentist methodology

seems to give satisfying answers, the

Bayesian approach yields basically the

same answers, provided noninformative

proper priors are used.

Frequentist

Bayesian

Definition

of

probability

Long-run expected

Relative degree of

frequency in repeated

belief in the state of the

(actual or hypothetical) world

experiments (Law of LN)

Point

estimate

Maximum likelihood

estimate

Mean, mode or median

of the posterior

probability distribution

Confidence

intervals

for

parameters

Based on the

Likelihood Ratio Test

(LRT)

i.e., the

expected probability

distribution of the

maximum likelihood

estimate over many

experiments

“credible intervals”

based on the posterior

probability distribution

Confidence

intervals of

nonparameters

Based on likelihood

profile/LRT, or by

resampling from the

sampling distribution of

the parameter

Calculated directly from

the distribution of

parameters

Model

selection

Discard terms that are not

significantly different from

a nested (null) model at a

previously set confidence

level

Retain terms in models,

on the argument that

processes are not absent

simply because they are

not statistically significant

Difficulties

Confidence intervals are

Subjectivity; need to

confusing (range that will specify priors

contain the true value in a

proportion α of repeated

experiments); rejection of

model terms for “nonsignificance”

Impact of sample size

prior

distribution

As the sample size

increases, a prior distribution has less and less

impact on results; BUT

likelihood

distribution

effective

sample size

for spatially

autocorrelated

data

What is BUGS?

Bayesian inference Using Gibbs Sampling

• is a piece of computer software for the

Bayesian analysis of complex statistical

models using Markov chain Monte Carlo

(MCMC) methods.

• It grew from a statistical research project at

the MRC BIOSTATISTICAL UNIT in

Cambridge, but now is developed jointly

with the Imperial College School of

Medicine at St Mary’s, London.

Classic BUGS

BUGS

WinBUGS (Windows Version)

GeoBUGS (spatial models)

PKBUGS (pharmokinetic modelling)

• The Classic BUGS program uses textbased model description and a commandline interface, and versions are available

for major computer platforms (e.g., Sparc,

Dos). However, it is not being further

developed.

What is WinBUGS?

• WinBUGS, a windows program with an option of

a graphical user interface, the standard ‘pointand-click’ windows interface, and on-line

monitoring and convergence diagnostics. It also

supports Batch-mode running (version 1.4).

• GeoBUGS, an add-on to WinBUGS that fits

spatial models and produces a range of maps

as output.

• PKBUGS, an efficient and user-friendly interface

for specifying complex population

pharmacokinetic and pharmacodynamic

(PK/PD) models within the WinBUGS software.

What is GeoBUGS?

• Available via

http://www.mrc-bsu.cam.ac.uk/

bugs/winbugs/geobugs.shtml

• Bayesian inference is used to spatially

smooth the standardized incidence ratios

using Markov chain Monte Carlo (MCMC)

methods. GeoBUGS implements models for

data that are collected within discrete regions

(not at the individual level), and smoothing is

done based on Markov random field models

for the neighborhood structure of the regions

relative to each other.

Choice of Priors

• Can effect

– model convergence

– execution speed

– computational overflow errors

• Consider

– Conjugate prior

– Sensible prior for the range of a parameter

• e.g. Poisson mean must be positive; hence, a normal

distribution is not a good prior.

– Truncated Prior

• useful if it is known that sensible or useful parameter

values are within a particular range

Parameter Initialization

• Initialize important parameters.

– Parameters distributed according to a noninformative prior must be initialized (otherwise

an overflow error is very likely).

GeoBUGs

•

•

•

•

Regions are numbered sequentially from 1 to n

Each region is defined as a polygon in a map file

Each region is associated with a unique index

BUGs can import map files from Arcinfo,

Epimap, SPLUS.

Review: What is MCMC?

MCMC is used to simulate from some

distribution p known only up to a constant

factor, C:

pi = Cqi

where qi is known but C is unknown and too

horrible to calculate.

MCMC begins with conditional (marginal)

distributions, and MCMC sampling outputs a

sample of parameters drawn from their joint

(posterior) distribution.

GeoBugs: spatial Poisson with a map

model;

{

for (i in 1:M){

for (j in 1:N){

#Poisson with a constant but unknown mean

y[i,j] ~ dpois(l)

#GeoBUGS numbers regions in a sequence

#This statement turns a 2-D array into a 1-D array for GeoBUGS

mapy[j + (i-1)N] <- y[I,j]

}

}

#priors

#Noninformative conjugate priors for Poisson mean.

l~dgamma(0.001,0.001)

}

Displaying a map with GeoBugs

• Compile model, load data, load initial

conditions

• Set sample monitor on desired variables

• Set trace

• Set sample monitor on map variable OR

set summary monitor on map variable

• Run chain

• Activate map tool, load appropriate map

• Set cut points, colour spectrum as desired.

Poisson mixture on a spatial

lattice

model;

Poisson mean varies

{

for (i in 1:M){

for (j in 1:N){

y[i,j] ~ dpois(l[i,j])

x[i,j] in (0,1)

l[i,j] <- mu1*x[i,j]+ (1-x[i,j])*mu2

x[i,j] ~ dbin(p,1)

x[i,j] independent binomials

#These statements are for the benefit of GeoBugs

mapx[j + (i-1)*N] <- x[i,j]

mapy[j + (i-1)*N] <- y[i,j]

mapl[j+(i-1)*N] <-l[i,j]

Map the hidden lattice

}

and the Poisson means

}

#priors

p~dunif(0,1)

#Noninformative conjugate priors for Poisson means.

mu1~dgamma( 0. 001, 0. 001)

mu2~dgamma( 0. 001, 0. 001)

}

a

b

E(mu) = a/b; var(mu) = a/b2

Introducing a covariate

model;

{

Poisson mean varies with location

for (i in 1:M){

for (j in 1:N){

y[i,j] ~ dpois(l[i,j])

l[i,j] <- mu1*x[i,j]+ (1-x[i,j])*mu2[i,j]

l og( mu2[ i , j ] ) <- cov[ i , j ] * bet a2

x[i,j] ~ dbin(p,1)

Log link function

#These statements are for the benefit of GeoBugs

mapx[j + (i-1)*N] <- x[i,j]

mapy[j + (i-1)*N] <- y[i,j]

mapl[j+(i-1)*N] <-l[i,j]

}

}

#priors

p~dunif(0,1)

mu1~dgamma( 0. 001, 0. 001)

bet a2~dnor m( 0, 0. 001)

}

Normal prior for covariate parameter

Adding a random effect

model;

{

Random effect

for (i in 1:M){

for (j in 1:N){

y[i,j] ~ dpois(l[i,j])

l[i,j] <- mu1*x[i,j]+ (1-x[i,j])*mu2[i,j]

log(mu2[i,j])<- cov[i,j]*beta2 + alpha[i,j]

alpha[i,j]~dnorm(0, tau_alpha)

x[i,j] ~ dbin(p,1)

#These statements are for the benefit of GeoBugs

mapx[j + (i-1)*N] <- x[i,j]

mapy[j + (i-1)*N] <- y[i,j]

mapl[j+(i-1)*N] <-l[i,j]

}

}

}

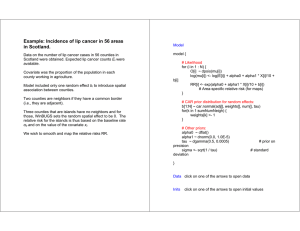

GeoBUGS Example

NOTE: Possible mappings for the

Scottish lip cancer data include

b – area-specific random effects term

mu – area-specific means

RR – area-specific relative risks

O – observed values

E – expected values

The Scottish lip cancer example

Open a window in WinBUGS and insert the following items:

- model

- data

- initial

values

#MODEL

model

{

for (i in 1 : N) {

O[i] ~ dpois(mu[i])

log(mu[i]) <- log(E[i]) + alpha0 + alpha1 * X[i]/10 + b[i]

# Area-specific relative risk (for maps)

RR[i] <- exp(alpha0 + alpha1 * X[i]/10 + b[i])}

# CAR prior distribution for random effects:

b[1:N] ~ car.normal(adj[], weights[], num[], tau)

for(k in 1:sumNumNeigh) {

weights[k] <- 1

}

# Other priors:

alpha0 ~ dflat()

alpha1 ~ dnorm(0.0, 1.0E-5)

tau ~ dgamma(0.5, 0.0005) # prior on precision

sigma <- sqrt(1 / tau)

# standard deviation

}

#DATA

list(N = 56,

O = c( 9, 39, 11, 9, 15, 8, 26, 7, 6, 20,

13, 5, 3, 8, 17, 9, 2, 7, 9, 7,

16, 31, 11, 7, 19, 15, 7, 10, 16, 11,

5, 3, 7, 8, 11, 9, 11, 8, 6, 4,

10, 8, 2, 6, 19, 3, 2, 3, 28, 6,

1, 1, 1, 1, 0, 0),

E = c( 1.4, 8.7, 3.0, 2.5, 4.3, 2.4, 8.1, 2.3, 2.0, 6.6,

4.4, 1.8, 1.1, 3.3, 7.8, 4.6, 1.1, 4.2, 5.5, 4.4,

10.5, 22.7, 8.8, 5.6, 15.5, 12.5, 6.0, 9.0, 14.4, 10.2,

4.8, 2.9, 7.0, 8.5, 12.3, 10.1, 12.7, 9.4, 7.2, 5.3,

18.8, 15.8, 4.3, 14.6, 50.7, 8.2, 5.6, 9.3, 88.7, 19.6,

3.4, 3.6, 5.7, 7.0, 4.2, 1.8),

X = c(16, 16, 10, 24, 10, 24, 10, 7, 7, 16,

7, 16, 10, 24, 7, 16, 10, 7, 7, 10,

7, 16, 10, 7, 1, 1, 7, 7, 10, 10,

7, 24, 10, 7, 7, 0, 10, 1, 16, 0,

1, 16, 16, 0, 1, 7, 1, 1, 0, 1,

1, 0, 1, 1, 16, 10),

num = c(3, 2, 1, 3, 3, 0, 5, 0, 5,

4,

0, 2, 3, 3, 2, 6, 6, 6, 5, 3,

3, 2, 4, 8, 3, 3, 4, 4, 11, 6,

7, 3, 4, 9, 4, 2, 4, 6, 3, 4,

5, 5, 4, 5, 4, 6, 6, 4, 9, 2,

4, 4, 4, 5, 6, 5

),

adj = c(

19, 9, 5,

10, 7,

12,

28, 20, 18,

19, 12, 1,

17, 16, 13, 10, 2,

29, 23, 19, 17, 1,

22, 16, 7, 2,

5, 3,

19, 17,

35, 32,

29, 25,

29, 22,

29, 19,

56, 55,

17, 13,

56, 18,

50, 29,

16, 10,

39, 34,

56, 55,

29, 26,

7,

31,

21, 17, 10, 7,

16, 13, 9, 7,

33, 28, 20, 4,

9, 5, 1,

4,

16,

29, 9,

48, 47, 44, 31, 30, 27,

15,

43, 29, 25,

56, 32, 31,

45, 33, 18,

50, 43, 34,

55, 45, 44,

47, 46, 35,

31, 27, 14,

55, 45, 28,

54, 52, 51,

46, 37, 31,

41, 37,

46, 41, 36,

54, 51, 49,

40, 34, 23,

52, 49, 39,

53, 49, 46,

51, 43, 38,

42, 34, 29,

49, 48, 38,

55, 33, 30,

53, 47, 41,

53, 49, 48,

49, 47, 44,

54, 53, 52,

29, 21,

54, 42, 38,

54, 49, 40,

49, 47, 46,

52, 51, 49,

56, 45, 33,

55, 27, 24,

),

sumNumNeigh

24,

4,

26, 25, 23, 21, 17, 16, 15, 9,

42, 38, 24,

32, 27, 24, 14,

18,

43, 42, 40, 39, 29, 23,

14,

35,

44, 42, 30,

34,

37,

34,

26,

30,

28,

37,

46,

24,

48,

36,

30,

24,

35, 31,

31, 24,

47, 44, 41, 40, 38,

34,

34,

41,

38, 34,

30, 24, 18,

20, 18

= 234)

#INITIAL VALUES

list(tau = 1, alpha0 = 0, alpha1 = 0,

b=c(0,0,0,0,0,NA,0,NA,0,0,

NA,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0))

GeoBUGS Execution Instructions

Step 1: open a new window in WinBUGS (this will be

referred to as the user window)

Step 2: enter the model syntax, data, and initial values,

using the WinBUGS format, in the user window

Step 3: select “Specification” in the “Model” pull down

window

Step 4: highlight “model” in the user window, appearing at

the beginning of the model syntax, and click once

on the “check model” button in the “Specification

Tool” window

NOTE: feedback from the program appears in the lower

left-hand corner of the WinBUGS program window,

and should be monitored

Step 5: highlight “list” in the user window,

appearing at the beginning of the data,

and click once on the “load data” button

in the “Specification Tool” window

Step 6: insert the number of chains to be run (the

default number is 1) in the “Specification

Tool” window

Step 7: click once on the “compile” button in the

“Specification Tool” window

Step 8: highlight “list” in the user window,

appearing at the beginning of the initial

values, and click once on the “load inits”

button in the “Specification Tool” window

(one set is needed for each chain to be

run; clicking the “gen inits” button can be

dangerous for sound analysis)

Step 9: close the “Specification Tool” window

Step 10: select “Samples” in the “Inference” pull

down menu

Step 11: in the “Sample Monitor Tool” window:

type in the name (as it appears in the

model syntax) of the 1st parameter to be

monitored, and click once on the “set”

button; type in the name of the 2nd

parameter to be monitored, and click

once on the “set” button; …; type in the

name of the pth parameter to be

monitored, and click once on the “set”

button

Step 12: close the “Sample Monitor Tool” window

Step 13: select “Update” in the “Model” pull down

menu

Step 14: the default value in the “updates” box is

1000 this value most likely needs to be

substantially increased (say, to 50,000;

once any desired changes are made,

click once on the “updates” button

Step 15: once the number appearing in the

“iteration” box equals the number in the

“updates” box, close the “Update Tool”

window

Step 16: select “Samples” in the “Inference” pull

down menu

Step 17: click on the down arrow to the right of

the “node” box, and select the

parameter to be monitored

Step 18: click once of the “history” button to view the timeseries plot for all iterations; click once on the

“trace” plot to view the time-series plot for the last

iterations; click once on the “auto cor” button to

view the time-series correlogram (you may wish to

enlarge the graphic in this window); click once on

the “stats” button to view a parameter estimate’s

statistics

Step 19: close the “Sample Monitor Tool” window

Step 20: select “Mapping Tool” from the “Map” pull down

menu

Step 21: select the appropriate map from the list appearing

for the “Map” box when the down arrow to its right

is clicked once (hint: your map that you imported

from an Arc shapefile should appear here)

Step 22: type the name of the variable (exactly as it

appears in the model syntax) to be mapped in the

“variable” box, and click once on the “plot” box

Puerto Rico Binomial with

Spatial Autocorrelation Example

#MODEL

model

{

for (i in 1 : N) {

U[i] ~ dbin(p[i], T[i])

p[i] <- exp(alpha0 + b[i])/(1+exp(alpha0

}

# CAR prior distribution for random effects:

b[1:N] ~ car.normal(adj[], weights[], num[],

for(k in 1:sumNumNeigh) {

weights[k] <- 1

}

# Other priors:

alpha0 ~ dflat()

tau ~ dgamma(0.5, 0.0005)

# prior on

sigma <- sqrt(1 / tau)

# standard

}

+ b[i]))

tau)

precision

deviation

#DATA

list(N = 76,

U = c(42527,

25584,

41997,

27605,

23829,

23848,

38583,

23852,

40919,

23077,

27850,

42467,

14262,

33421,

21087,

58848

),

T = c(44444,

34415,

45409,

29965,

26719,

25935,

44204,

35336,

46384,

26493,

28909,

43335,

19811,

34017,

23072,

59035

),

11048, 46236, 35859, 21330,

3792, 32126, 32389, 18664,

2839, 11787, 95880, 37713,

21499, 29709, 17200, 14688,

178792, 24196, 14767, 50242,

28462, 34650, 434374, 35130,

17412, 63929, 94085, 75728,

36971, 59572, 23364, 37238,

11062, 42042, 64685, 27305,

25387, 91593, 18346, 22105,

224044, 40875, 139445, 30886,

185703, 30071, 43707, 16671,

40457, 29802, 16800, 35270,

39958, 10176, 20682, 40395,

99850, 35476, 36201, 16472,

17318, 50531, 36452, 26261,

11061, 34485, 32537, 19817,

6449, 12741, 98434, 39697,

23753, 29709, 23844, 20152,

186475, 25450, 14767, 52362,

31113, 37105, 434374, 40997,

21665, 63929, 94085, 75728,

37910, 61929, 27913, 39246,

19143, 42042, 64685, 29032,

28348, 100131, 19117, 22322,

224044, 46911, 140502, 35244,

186076, 30071, 47370, 18004,

42753, 37597, 20002, 36867,

40712, 12367, 21888, 44301,

100053, 36743, 38925, 16614,

num = c(4,

3,

4,

7,

4,

6,

7,

5,

),

3,

4,

5,

6,

4,

7,

4,

4,

5,

5,

4,

8,

8,

6,

3,

2,

3,

5,

6,

7,

5,

3,

6,

3,

4,

3,

7,

5,

5,

5,

5,

2,

4,

3,

3,

6,

7,

3,

3,

3

3,

6,

2,

7,

6,

7,

3,

3,

4,

4,

5,

5,

6,

5,

5,

7,

6,

6,

6,

5,

6,

5,

6,

2,

5,

7,

6,

4,

adj = c(

2, 7, 14, 25,

1, 14, 21,

5, 8, 23, 31, 34,

9, 10, 29,

3, 6, 33, 34,

5, 7, 25, 33,

1, 6, 25,

3, 10, 23,

4, 11, 24, 29, 32,

4, 8, 23, 29, 31,

9, 12, 24,

11, 16, 19, 24,

17, 18, 28, 37, 39,

1, 2, 21, 25, 36,

17, 20, 22,

12, 18, 19,

13, 15, 22, 28, 38, 48,

13, 16, 19, 39,

12, 16, 18, 24, 35, 39, 45,

15, 22, 26, 41, 42, 46,

2, 14, 30, 36,

15, 17, 20, 46, 48,

3, 8, 10, 31,

9, 11, 12, 19, 32, 35,

1, 6, 7, 14, 33, 36, 44,

20, 27, 41,

26, 41,

13, 17, 37, 38,

4, 9, 10, 31, 32, 43,

21, 36,

3, 10, 23, 29, 34, 40, 43,

9, 24, 29, 35, 43, 50,

5, 6, 25, 34, 44, 49, 53, 60,

3, 5, 31, 33, 40, 49, 59,

19, 24, 32, 45, 50,

14, 21, 25, 30, 44, 47,

13, 28, 38, 39, 51, 52, 61,

17, 28, 37, 48, 52,

13, 18, 19, 37, 45, 51,

31, 34, 43, 59, 64,

20, 26, 27, 42,

20, 41, 46, 54,

29, 31, 32, 40, 50, 56, 57, 64,

25, 33, 36, 47, 53,

19, 35, 39, 50, 51,

20, 22, 42, 48, 52, 54, 68,

36, 44, 53, 58, 62, 63,

17, 22, 38, 46, 52,

33, 34, 59, 60, 66, 67,

32, 35, 43, 45, 51, 55, 57,

37, 39, 45, 50, 55, 61,

37, 38, 46, 48, 61, 68, 69,

33, 44, 47, 58, 60, 65,

42, 46, 68,

50, 51, 57, 61, 71,

43, 57, 64,

43, 50, 55, 56, 64, 71, 74,

47, 53, 62, 63, 65, 72,

34, 40, 49, 64, 67,

33, 49, 53, 65, 66, 76,

37, 51, 52, 55, 69, 70, 71,

47, 58, 63, 72,

47, 58, 62,

40, 43, 56, 57, 59, 74,

53, 58, 60, 72, 76,

49, 60, 67,

49, 59, 66,

46, 52, 54, 69, 73,

52, 61, 68, 70, 73, 75,

61, 69, 71, 75,

55, 57, 61, 70, 74,

58, 62, 65, 76,

68, 69,

57, 64, 71,

69, 70,

60, 65, 72, 2, 7, 14, 25,

1, 14, 21,

5, 8, 23, 31, 34,

9, 10, 29,

3, 6, 33, 34,

5, 7, 25, 33,

1, 6, 25,

3, 10, 23,

4, 11, 24, 29, 32,

4, 8, 23, 29, 31,

9, 12, 24,

11, 16, 19, 24,

17, 18, 28, 37, 39,

1, 2, 21, 25, 36,

17, 20, 22,

12, 18, 19,

13, 15, 22, 28, 38, 48,

13, 16, 19, 39,

12, 16, 18, 24, 35, 39, 45,

15, 22, 26, 41, 42, 46,

2, 14, 30, 36,

15, 17, 20, 46, 48,

3, 8, 10, 31,

queen’s

connectivity

definition

9, 11, 12, 19, 32, 35,

1, 6, 7, 14, 33, 36, 44,

20, 27, 41,

26, 41,

13, 17, 37, 38,

4, 9, 10, 31, 32, 43,

21, 36,

3, 10, 23, 29, 34, 40, 43,

9, 24, 29, 35, 43, 50,

5, 6, 25, 34, 44, 49, 53, 60,

3, 5, 31, 33, 40, 49, 59,

19, 24, 32, 45, 50,

14, 21, 25, 30, 44, 47,

13, 28, 38, 39, 51, 52, 61,

17, 28, 37, 48, 52,

13, 18, 19, 37, 45, 51,

31, 34, 43, 59, 64,

20, 26, 27, 42,

20, 41, 46, 54,

29, 31, 32, 40, 50, 56, 57, 64,

25, 33, 36, 47, 53,

19, 35, 39, 50, 51,

20, 22, 42, 48, 52, 54, 68,

36, 44, 53, 58, 62, 63,

17, 22, 38, 46, 52,

33, 34, 59, 60, 66, 67,

32, 35, 43, 45, 51, 55, 57,

37, 39, 45, 50, 55, 61,

37, 38, 46, 48, 61, 68, 69,

33, 44, 47, 58, 60, 65,

42, 46, 68,

50, 51, 57, 61, 71,

43, 57, 64,

43, 50, 55, 56, 64, 71, 74,

47, 53, 62, 63, 65, 72,

34, 40, 49, 64, 67,

33, 49, 53, 65, 66, 76,

37, 51, 52, 55, 69, 70, 71,

47, 58, 63, 72,

47, 58, 62,

40, 43, 56, 57, 59, 74,

53, 58, 60, 72, 76,

49, 60, 67,

49, 59, 66,

46, 52, 54, 69, 73,

52, 61, 68, 70, 73, 75,

61, 69, 71, 75,

55, 57, 61, 70, 74,

58, 62, 65, 76,

68, 69,

57, 64, 71,

69, 70,

60, 65, 72

),

sumNumNeigh = 366)

#INITIAL VALUES

list(tau = 1, alpha0 = 0,

b=c(0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0)

)

Model {

for (i in 1:N) {m[i] <- 1/num[i]}

cumsum[1] <- 0

for(i in 2:(N+1)) {cumsum[i] <- sum(num[1:(i-1)])}

for(k in 1 : sumNumNeigh)

{for(i in 1:N){pick[k,i] <- step(k - cumsum[i] - epsilon)*step(cumsum[i+1] - k)

# pick[k,i] = 1 if cumsum[i] < k <= cumsum[i=1];

otherwise, pick[k,i] = 0}

C[k] <- sqrt(num[adj[k]]/inprod(num[], pick[k,]))

# weight for each pair of

neighbours}

epsilon <- 0.0001

for (i in 1 : N) {

U[i] ~ dbin(p[i], T[i])

p[i] <- exp(S[i])/(1+exp(S[i]))

theta[i] <- alpha}

# proper CAR prior distribution for random effects:

S[1:N] ~ car.proper(theta[],C[],adj[],num[],m[],prec,rho)

for(k in 1:sumNumNeigh) {weights[k] <- 1}

# Other priors:

alpha ~ dnorm(0,0.0001)

prec ~ dgamma(0.5,0.0005)

# prior on precision

sigma <- sqrt(1/prec)

# standard deviation

rho.min <- min.bound(C[],adj[],num[],m[])

rho.max <- max.bound(C[],adj[],num[],m[])

rho ~ dunif(rho.min,rho.max)

}

node

rhoCAR

mean

0.9378

sd

MC error2.5%

0.05255 5.995E-4 0.8047

MCMC iteration time series plot

MCMC iteration correlogram

median

0.9514

97.5%

0.9973

start

50001

sample

10000

GeoBUGS demonstration: % urban

population in Puerto Rico – no

random effect

#MODEL

model

{

for (i in 1 : N) {

U[i] ~ dbin(p[i], T[i])

p[i] <- exp(alpha)/(1+exp(alpha ))

}

# priors:

alpha ~ dflat()

}

#DATA

list(N = 73,

U = c(11062, 42042, 64685, 27305, 23077, 25387, 91593, 18346, 32281,

27850, 254115,

40875, 139445, 30886, 185703, 43707, 16671, 14262, 40457, 29802, 16800, 35270,

33421, 39958, 20682, 40395, 21087, 99850, 35476, 36201, 16472, 58848, 42527, 11048,

46236, 35859, 21330, 25584, 3792, 32126, 74856, 18664, 41997, 2839, 11787, 95880,

37713, 27605, 21499, 29709, 17200, 14688, 23829, 178792, 24196, 14767, 50242,

23848, 28462, 34650, 434374, 35130, 38583, 17412, 63929, 94085, 75728, 23852,

36971, 59572, 23364, 37238, 40919

),

T = c(19143,

42042, 64685, 29032, 26493, 28348, 100131, 19117, 34689,

28909, 254115,

46911, 140502, 35244, 186076, 47370, 18004, 19811, 42753, 37597, 20002, 36867,

34017, 40712, 21888, 44301, 23072, 100053, 36743, 38925, 16614, 59035, 44444,

17318, 50531, 36452, 26261, 34415, 11061, 34485, 75872, 19817, 45409, 6449, 12741,

98434, 39697, 29965, 23753, 29709, 23844, 20152, 26719, 186475, 25450, 14767,

52362, 25935, 31113, 37105, 434374, 40997, 44204, 21665, 63929, 94085, 75728,

35336, 37910, 61929, 27913, 39246, 46384

),

)

#INITIAL VALUES

list(alpha=-3)

The German labor market

revisited:

frequentist and Bayesian

random effects models

Frequentist random intercept term

The spatial filter contains 27 (of 98) eigenvectors,

with R2 = 0.4542, P(S-Wresiduals) < 0.0001.

measure

No

covariates

Spatial

filter

exponential

semivariogram

-2log(L)

1445.1

1179.3

1349.8

Intercept

variance

0.9631

0.2538

0.5873

Residual

variance

0.6116

0.6011

0.9154

Intercept

estimate

5.2827

(0.0599)

5.2827

(0.0443)

5.0076

(0.1835)

MCMC results from GeoBUGS

1000 weeded

25,000

burn-in

the

proper

CAR

model

Bayesian model estimation

measure

No

covariates

Spatial

filter

-2log(L): post mean

1477.8

1213.4

Spatial structure

parameter

***

Residual

variance

1.69

1.028

(0.0517)

0.9277

Intercept

estimate

5.255

(0.0640)

5.258

(0.0473)

CAR

computer

execution

time

required

to

estimate

is

prohibitive

The ICAR alternative

• #MODEL

• model

• {for (i in 1 : N) {

offset

•

W[i] ~ dpois(mu[i])

•

log(mu[i]) <- alpha + S[i] + U[i] + log(A[i])

• U[i] ~ dnorm(0,tau.U) }

• # improper CAR prior distribution for random effects:

• S[1:N] ~ car.normal(adj[],weights[],num[],tau.S)

• for(k in 1:sumNumNeigh) {weights[k] <- 1}

•

•

•

•

•

•

# Other priors:

alpha ~ dflat()

tau.S ~ dgamma(0.5, 0.0005)

sigma2.S <- 1/tau.S

tau.U ~ dgamma(0.5, 0.0005)

sigma2.U <- 1/tau.U }

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

#DATA

list(N = 439,

W = c(

25335, 74162, 64273, 25080, 39848, 59407, 49685, 60880, 101563, 39206, 83512,

…

32999, 26534, 45267, 35232, 35788, 41961, 36129

attribute

),

variables

A = c(

23.51, 43.81, 87.14, 27.53, 558.57, 512.17, 855.22, 578.86, 261.45, 447.18, 877.26,

…

369.50, 382.46, 452.02, 350.84, 219.97

),

num = c(1, 2, 4, 3, 4, 7, 2, 4, 4, 5,

…

number of neighbors

8, 7, 8, 4, 7, 7, 6, 8, 6

),

adj = c(

This can be

12,

generated by

11, 10,

GeoBUGS; the

lists of neighbors

359, 15, 8, 6,

queen’s definition

…

of adjacency is used

438, 400, 390, 375, 372, 368

),

sumNumNeigh = 2314)

• #INITIAL VALUES

• list(tau.U=1, tau.S=1, alpha=0,

• S=c(0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

•

…

•

0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

,0,0,0,0,0,0,0,0,0,0,0,0,0),

• U=c(0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,

•

…

•

0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

,0,0,0,0,0,0,0,0,0,0,0,0,0)

• )

intercept

Convergence has

not been

achieved (10,000

burn in; +

25,000/100)

Random effects: spatially

structured and unstructured

SA:

2.67/(30.39+2.67)

= 0.08

(8% of variance)

Variance diagnostics

bugs.R: functions for running

WinBUGS from R

The bugs() function takes data and

starting values as input, calls a Bugs

model, summarizes inferences and

convergence in a table and graph, and

saves the simulations in arrays for easy

access in R.

1st: Download WinBugs1.4

2nd: Download OpenBUGS and extract

the zip file into "c:/Program Files/“

3rd: Go into R and type

install.packages("R2WinBUGS")

Some prediction comparisons

n = 56;

effective sample

size = 24