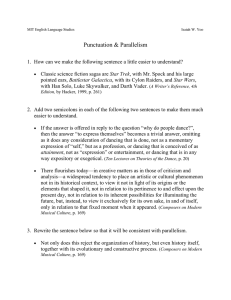

Where do We Go from Here? Thoughts on Computer Architecture Jack Dennis

advertisement

Where do We Go from Here? Thoughts on Computer Architecture Jack Dennis MIT Computer Science and Artificial Intelligence Laboratory Thesis • The purpose of a computer is to execute programs that implement applications. • Programs are expressed in programming languages. • It makes no sense to design computers without taking into account the principles of software methodology and programming language design. Principles • Modularity: It should be possible to use a program module in the construction of other programs without knowledge of its internal details. • Generality: It should be possible to pass any data object between program modules. • Determinacy: Unexpected asynchronous hazards should not be possible. These principles apply to parallel as well as sequential programs. Lessons from Multics • Address Space: Many segments per process. • Why such a large address space? • “To permit a degree of programming generality not previously practical. This includes the ability of one procedure to use [any] other procedure knowing only its name.” • “to permit sharing of procedures and data among users subject only to proper authorization.” Lessons from MULTICS • In Multics, segments have independently assigned numbers in each process, and unlinking segments leaves gaps that may never get reused. • This makes it difficult for processes to collaborate in a modular fashion. • In IBM System/38 and AS/400, “handles” are universal, but the system software does not support the generality achieved per process in Multics. Data Flow: Motivation • Question: How to express concurrency? • Answer: Use implicit parallelism! • Forms of implicit parallelism: – – – – . Expressions Functions Data Parallel Producer/Consumer Expressions In an expression such as (u + v) * (x + y) the subexpressions (u + v) and (x + y) may be evaluated in parallel. Functions If neither of two functions depends on the results of the other, as in z = f (x, u) + g (x) the two function applications may be evaluated concurrently. Data Parallelism A new array Z may be specified in Sisal by Z := for i in [1..n] z = X[i - 1] + Y[i +1] * 2.0; returns array of z end for; where the body code may be executed independently for each value of index i. Producer/Consumer In a computation such as: Producer: Consumer: While true do x = f(x); send g(x) at port; While true do y = receive at port; z= h(z,y); the producer and consumer processes can operate concurrently. Data Flow: Influence Data Flow ideas were presented at: • The Second IEEE Symposium on Computer Architecture, 1975: “A Preliminary Architecture for a Basic Data-Flow Processor.” • The 1975 Sagamore Conference on Parallel Processing: “Packet Communication Architecture.” where I presented an impromptu seminar on the work of my MIT group. Data Flow: Projects Experimental machines were designed or prototyped in laboratories worldwide, including: Texas Instruments ESL Hughes NEC (two products) Manchester University (England) ETL,Tskuba (Japan) Tokyo, Keio, and Osaka Universities (Japan) Data Flow: A Conclusion • It is possible to build efficient processors that exploit implicit parallelism. • Related ideas are embodied in contemporary superscalar processor designs. Data Flow: Monsoon • An MIT group led by Professor Arvind built the Monsoon data flow multiprocessor in cooperation with the Motorola Corp. • Monsoon demonstrated that: Significant scientific applications can be written in a functional programming language to fully exploit their implicit parallelism on a data flow multiprocessor. The Sisal Programming Language • Developed at Lawrence Livermore National Laboratory (LLNL) based on work at the MIT Computation Structures Group. (1985) • Compiler developed by LLNL competitive with Fortran for kernels of important scientific codes. • Should be extended to support streams and transactions. Whereas: • Single Processor Computers are reaching their limit; computers with multiple processors will be the rule in the future. • Programming for multiple processors is a disaster area due to code explosion and unwanted asynchronous hazards. Whereas: • A global address space for all activities simplifies process multiplexing and storage management. • A global address space supports effective data protection and security. Whereas: • Functional programming permits expression of programs with implicit parallelism. • Functional programming languages support a general form of modular programming. • It can be shown how to implement efficient transaction processing within a functional programming framework. Whereas • With only a small sacrifice in single-thread performance, it is possible to build superscalar simultaneous multithread processors that are much simpler than today’s superscalar processors. • Exploitation of parallelism eliminates most of the benefits of speculative execution. Therefore: Therefore future computer architecture should: • Employ simultaneous multithread processors • Implement a global shared name space • Support modular program construction through functional programming. Some Radical Ideas • Fixed-size “Chunks” of Memory • No Update of Chunks • Built-in Garbage Collection • Cycle-Free Heap A Fresh Breeze System Interprocessor Network MPC MPC MPC SMS Shared Memory System MPC Memory Chunks and Pointers • Chunk: A fixed-size unit of memory allocation. 1024 bits of data; for example, 32 x 32-bit words or 16 x 64-bit longs. • Each chunk has a unique 64-bit unique identifier; this is the pointer or unique identifier of the chunk. Representation of Arrays • An array of arbitrary size may be represented by a tree of chunks. Eight levels suffices to represent an array of 16 ** 8 = ~ 4 * (10**9) 64-bit elements. Multiprocessor Chip Instr Association CAM MTP MTP Instruction Access Net IAU IAU Data Association CAM MTP MTP Data Access Net DAU Shared Memory System Interface DAU Handouts • Towards the Computer Utility: A Career in Computer System Architecture. An essay written for the 50th reunion of the MIT class of 1953. • A Parallel Program Execution Model Supporting Modular Software Construction. A paper presented at the 1997 Massively Parallel Programming Models symposium, London.