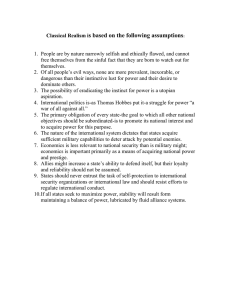

Lightweight Logging For Lazy Release Consistent DSM, Costa et al.


CS 717 - 11/01/01

Definition of an SDSM

In a software distributed shared memory (SDSM) system, each node runs its own operating system and has its own local physical memory

Each node runs a local process; together, these processes form the parallel application

The union of the local memories of these processes forms the global memory of the application

The global memory appears as one virtual address space: a process accesses all memory locations in the same manner, using standard loads and stores

Basic Implementation of an SDSM

The virtual address space is divided into memory pages, which are distributed among the local memories of the different processes

Each node has a copy of the page-to-node assignments

We use the hardware’s virtual memory support (page tables and page faults) to provide the appearance of shared memory (SM)

The SDSM system is implemented as page-fault handler routines

Such a system is also called a shared virtual memory (SVM) system

Illustration

(Figure: N1 holds page P1; N2 holds pages P3 and P5; N3 holds pages P4, P5, and P2)

The same virtual page might appear in multiple physical pages, on multiple nodes

SDSM Operation

If N2 attempts to write x on P2:

P2 is marked as invalid in N2’s page table, so the access causes a fault

The fault handler checks the page-to-node map and then requests that N3 send it P2

N3 sends the page and notifies all nodes of the change

N3 sets its page access to “invalid”

N2 sets its page access to “read/write”

The handler returns

Multiple Ns can have the same P in their physical address spaces if P is “read-only” for all of them, but only one N can have a copy of P if it is “read/write”
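The fault-handling steps above can be sketched as a toy Python simulation. Everything here is hypothetical (the `ToySDSM` class, the `Access` enum, the method names): page faults are modeled as ordinary method calls, messages as direct updates to shared dictionaries, and invalidation acknowledgments are implicit. The sketch enforces the rule that many nodes may hold a page read-only, but a read/write copy is exclusive.

```python
from enum import Enum


class Access(Enum):
    INVALID = "invalid"
    READ_ONLY = "read-only"
    READ_WRITE = "read/write"


class ToySDSM:
    """Hypothetical single-writer / multiple-reader page protocol.

    page_table[node][page] holds the node's access right to a page, and
    owner[page] is the page-to-node map that every node keeps a copy of.
    Page faults are modeled as method calls; messages as direct updates.
    """

    def __init__(self, nodes, owner):
        self.owner = dict(owner)
        self.page_table = {n: {} for n in nodes}
        for page, node in self.owner.items():
            self.page_table[node][page] = Access.READ_WRITE

    def access(self, node, page):
        # Pages a node has never mapped count as invalid.
        return self.page_table[node].get(page, Access.INVALID)

    def write(self, node, page):
        if self.access(node, page) is not Access.READ_WRITE:
            self._write_fault(node, page)

    def read(self, node, page):
        if self.access(node, page) is Access.INVALID:
            self._read_fault(node, page)

    def _write_fault(self, node, page):
        # Every other copy becomes invalid (acks are implicit here),
        # ownership moves, and the faulting node gets read/write access.
        for other, table in self.page_table.items():
            if other != node:
                table[page] = Access.INVALID
        self.owner[page] = node
        self.page_table[node][page] = Access.READ_WRITE

    def _read_fault(self, node, page):
        # The owner downgrades to read-only and ships a copy of the page.
        self.page_table[self.owner[page]][page] = Access.READ_ONLY
        self.page_table[node][page] = Access.READ_ONLY


sdsm = ToySDSM(["N1", "N2", "N3"], {"P1": "N1", "P2": "N3"})
sdsm.write("N2", "P2")  # N2 faults; N3 hands over P2
print(sdsm.owner["P2"], sdsm.access("N3", "P2").value)  # N2 invalid
```

A later read by N1 would downgrade N2 to read-only, leaving both with read-only copies, which is the only state in which a page is shared.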

Page Size Granularity

Memory access is managed at the granularity of an OS page

Easy to implement

Can be very inefficient

If N exhibits poor spatial locality, there is a lot of unnecessary data transfer

If x and y are both on the same page, P, and N1 is repeatedly writing to x while N2 is writing to y, P will be continually sent back and forth between N1 and N2: false sharing
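A small sketch makes the false-sharing cost concrete. It assumes a 4096-byte page, hypothetical addresses for x and y, and a simplified protocol in which every write by a non-owner moves the whole page; real systems differ in the details.

```python
PAGE_SIZE = 4096  # assumed OS page size in bytes


def page_of(addr: int) -> int:
    """Return the number of the page containing a virtual address."""
    return addr // PAGE_SIZE


def count_transfers(trace):
    """Count page transfers for a trace of (node, address) writes.

    Assumes one read/write owner per page: the first writer of a page owns it,
    and every later write by a different node moves the whole page.
    """
    owner = {}
    transfers = 0
    for node, addr in trace:
        page = page_of(addr)
        if owner.setdefault(page, node) != node:
            transfers += 1
            owner[page] = node
    return transfers


x, y = 0x1000, 0x1008  # hypothetical addresses of x and y, same page
print(count_transfers([("N1", x), ("N2", y)] * 50))       # 99
print(count_transfers([("N1", x), ("N2", 0x2000)] * 50))  # 0
```

Moving y to a different page removes every transfer, even though the two nodes never touch the same variable in either trace.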

Sequential Consistency

Defined by Lamport as:

A multiprocessor is sequentially consistent if the result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operations of each individual processor appear in this sequence in the order specified by its program

Is this SDSM Sequentially Consistent?

Assume a and b are on P1 and P2, respectively, and are initially 0

N1: a=1; b=1

N2: print b; print a

If N2 does not invalidate its copy of P1 but does invalidate P2, the output can be <1,0> (b printed as 1, a as 0), which is invalid under SC
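The claim that <1,0> is impossible under SC can be checked by brute force: enumerate every interleaving of the four operations that preserves each node's program order. This is a minimal Python sketch, assuming a and b both start at 0; the variable and function names are illustrative.

```python
from itertools import combinations

N1_WRITES = ["a", "b"]  # N1: a = 1; b = 1
N2_READS = ["b", "a"]   # N2: print b; print a


def sc_outputs():
    """Return every (b, a) pair N2 can print under sequential consistency."""
    outputs = set()
    # An SC execution is one interleaving of the 4 operations that keeps
    # each node's program order; choose which global slots belong to N1.
    for n1_slots in combinations(range(4), 2):
        mem = {"a": 0, "b": 0}  # both variables start at 0
        writes, reads, printed = iter(N1_WRITES), iter(N2_READS), []
        for slot in range(4):
            if slot in n1_slots:
                mem[next(writes)] = 1
            else:
                printed.append(mem[next(reads)])
        outputs.add(tuple(printed))
    return outputs


print(sorted(sc_outputs()))  # [(0, 0), (0, 1), (1, 1)]
```

Seeing b = 1 means N1's write to a already happened, so every SC interleaving that prints 1 for b also prints 1 for a.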

Ensuring Sequential Consistency

For the system to be SC, N1 must ensure that N2 has invalidated its copy of a page before N1 can write to that page

Before a write, N1 must tell N2 to invalidate its copy of the page, and then wait for N2 to acknowledge that it has done so

Of course, if we know that N2’s copy is already invalidated, we don’t need to do this

N2 could not have re-obtained access without N1’s copy being invalidated

Ping-Pong Effect

SC, combined with the large sharing granularity (OS page), can lead to the ping-pong effect

Substantial, expensive communication cost due to false sharing

A Problem With SC

N1 is continually writing to x while N2 is continually reading from y, both on the same P

N2 has P in “read-only”, N1 has P in “r-o”

N1 attempts to write to x, faults, and tells N2 to go to “invalid”

N1 waits for N2 to go to “invalid”, then N1 goes to “r/w” and does the write

N2 tries to read, faults, and tells N1 to go to “r-o” and send its current copy of P; N2 goes to “r-o”

N2 gets P and does the read

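Ping-Pong Effect

(Figure: timelines for N1 and N2 sharing page P. N1’s copy alternates between R/O and R/W while N2’s copy alternates between R/O and invalid; each switch costs an inval/ack exchange or a req/reply exchange, and the pattern repeats)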

Relaxing the Consistency Model

The memory consistency model specifies constraints on the order in which memory operations appear to execute with respect to each other

Can we relax the consistency model to improve performance?

Release Consistency

Certain operations are designated as ‘acquire’ and ‘release’ operations

Code below an acquire can never be moved above the acquire

Code above a release can never be moved below the release

As long as there are no race conditions, the behavior of a program is the same under RC as under SC

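RC Illustration

(Figure: code blocks I, II, and III around an acq/rel pair, before and after reordering: I, acq, II, rel, III becomes acq, I, II, rel, III, i.e., I has moved below the acquire, which RC permits)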

Lazy Release Consistency (LRC)

In order for a system to be RC, it must ensure that all memory writes above a release become visible before that release is visible

i.e., before issuing a release, it must invalidate all other copies of the pages it wrote

Can we relax this further?

LRC

LRC is a further relaxation:

Let’s not invalidate pages until absolutely necessary

N1: I, acquire, II, release

N2: III, acquire, IV, release

Only when N2 is about to issue an acquire does N1 ensure that all changes it made before its release are visible

N1 invalidates N2’s copy of the pages before N2 does its acquire

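Illustration

(Figure: N1 and N2 timelines under RC and under LRC, with acquires (A), releases (R), and code blocks I and II. Under RC, each release by N1 is followed by an inval/ack exchange with N2; under LRC, N1’s releases send no invalidations, and the inval/ack exchange happens only when N2 performs its acquire)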

TreadMarks

A high-performance SDSM

Implements LRC

Keleher, Cox, Dwarkadas, Zwaenepoel 1994

Intervals

The execution of each process is divided into intervals, each beginning at a synchronization access (acquire or release)

These form a partial order:

intervals on the same process are totally ordered

interval x precedes interval y if the release that ended x corresponds to the acquire that began y

When a process begins a new interval, it creates a new IntervalRecord

Vector Clocks

Each process also keeps a current vector clock, VC = <…,L,M,N,O,…>, with one entry per process

If VC_N is process N’s vector clock, VC_N(M) is the most recent interval of process M that process N knows about

VC_N(N) is therefore the current interval of process N
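A vector clock can be sketched as a tuple with one entry per process. The helper names (`merge`, `new_interval`, `has_seen`) and the 0-based process indices are assumptions of this sketch. The usage replays the clocks from the three-node TreadMarks example in these slides, where each node merges the clock it learns at an acquire and then starts a new interval.

```python
from typing import Tuple

VC = Tuple[int, ...]


def merge(mine: VC, theirs: VC) -> VC:
    """Componentwise max: what a process knows after learning what another knew."""
    return tuple(max(a, b) for a, b in zip(mine, theirs))


def new_interval(vc: VC, pid: int) -> VC:
    """Start a new interval on process `pid` (0-based) by bumping VC(pid)."""
    return vc[:pid] + (vc[pid] + 1,) + vc[pid + 1:]


def has_seen(vc: VC, pid: int, interval: int) -> bool:
    """True if a process with clock `vc` knows about that interval of `pid`."""
    return vc[pid] >= interval


n1 = new_interval((0, 0, 0), 0)             # N1 acquires first
n2 = new_interval(merge((0, 0, 0), n1), 1)  # N2 learns N1's interval
n3 = new_interval(merge((0, 0, 0), n2), 2)  # N3 learns both
print(n1, n2, n3)  # (1, 0, 0) (1, 1, 0) (1, 1, 1)
```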

Interval Records

An IntervalRecord is a structure containing:

The pid of the process that created this record

The vector-clock timestamp of when this interval was created

A list of WriteNotices

Write Notices

A WriteNotice is a record containing:

The number of the page written to

A diff showing the changes made to this page

A pointer to the corresponding IntervalRecord
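The two record types can be sketched as Python dataclasses. The field names follow the slides; the diff encoding (byte offset to new byte value) and the `add_write` helper are assumptions for illustration, not TreadMarks' actual layout.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical diff encoding: byte offset within the page -> new byte value.
Diff = Dict[int, int]


@dataclass
class WriteNotice:
    page: int  # number of the page written to
    diff: Diff  # changes made to this page
    # Back-pointer to the owning record; excluded from repr/eq to avoid cycles.
    interval: Optional["IntervalRecord"] = field(
        default=None, repr=False, compare=False)


@dataclass
class IntervalRecord:
    pid: int  # process that created this record
    timestamp: Tuple[int, ...]  # vector-clock time the interval was created
    write_notices: List[WriteNotice] = field(default_factory=list)

    def add_write(self, page: int, diff: Diff) -> WriteNotice:
        """Record that this interval wrote `page`, linking the notice back here."""
        wn = WriteNotice(page, diff, interval=self)
        self.write_notices.append(wn)
        return wn


ir = IntervalRecord(pid=0, timestamp=(1, 0, 0))
ir.add_write(page=7, diff={16: 0x2A})
print(ir)
```

Excluding the back-pointer from `repr` and equality keeps the record/notice cycle from recursing when the objects are printed or compared.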

Acquiring A Lock

When N1 wants to acquire a lock, it sends its current vector clock to the Lock Manager

The Lock Manager forwards this message to the last process that acquired this lock (assume N2)

N2 replies (to N1) with all the IntervalRecords that have a timestamp between the VC sent by N1 and the VC of N2’s interval that ended with its most recent release of that lock
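The reply rule can be sketched as a filter over the releaser's log, assuming each record is reduced to its creator's pid and its vector timestamp (diffs omitted). A record is sent if the requester has not seen it but the releaser had seen it at its most recent release of the lock. The function name `records_to_send` is hypothetical; the usages replay the Example and Example (cont.) slides.

```python
from typing import List, Tuple

VC = Tuple[int, ...]
Record = Tuple[int, VC]  # (creator pid, timestamp); diffs omitted


def records_to_send(log: List[Record], requester_vc: VC,
                    release_vc: VC) -> List[Record]:
    """IntervalRecords the acquirer has not seen that precede the release.

    Record (pid, ts) is interval ts[pid] of process pid. The acquirer has seen
    it if requester_vc[pid] >= ts[pid]; it precedes the releaser's most recent
    release of the lock if release_vc[pid] >= ts[pid].
    """
    return [(pid, ts) for pid, ts in log
            if requester_vc[pid] < ts[pid] <= release_vc[pid]]


# N2's log when N3 asks with VC <0,0,0>; N2 released at <1,1,0>.
n2_log = [(0, (1, 0, 0)), (1, (1, 1, 0))]
print(records_to_send(n2_log, (0, 0, 0), (1, 1, 0)))
# [(0, (1, 0, 0)), (1, (1, 1, 0))]

# N1 acquires again with VC <1,0,0>; N3 released at <1,1,1>.
n3_log = [(0, (1, 0, 0)), (1, (1, 1, 0)), (2, (1, 1, 1))]
print(records_to_send(n3_log, (1, 0, 0), (1, 1, 1)))
# [(1, (1, 1, 0)), (2, (1, 1, 1))]
```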

N1 receives IntervalRecords from N2

N1 stores these IntervalRecords in volatile memory

N1 invalidates all pages for which it received a WriteNotice (in the IRs)

On a page fault, N1 obtains a copy of the page and then applies all the diffs for that page in interval order

If N1 is about to write to that page, it first makes a copy of it (a twin) so that it can later compute the diff of its changes
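The twin-and-diff step can be sketched over a `bytearray` standing in for a page. The function names and the 8-byte page are hypothetical, and the diff is a per-byte dictionary, a simplification chosen for clarity.

```python
def make_twin(page: bytearray) -> bytes:
    """Copy the page before the first write so changes can be found later."""
    return bytes(page)


def compute_diff(twin: bytes, page: bytearray) -> dict:
    """Map each byte offset that changed since the twin to its new value."""
    return {i: page[i] for i in range(len(page)) if page[i] != twin[i]}


def apply_diffs(page: bytearray, diffs) -> None:
    """Apply diffs in interval order; later intervals overwrite earlier ones."""
    for diff in diffs:
        for offset, value in diff.items():
            page[offset] = value


page = bytearray(8)  # hypothetical 8-byte page
twin = make_twin(page)
page[2], page[6] = 5, 9  # writes during the interval
d1 = compute_diff(twin, page)
print(d1)  # {2: 5, 6: 9}

stale = bytearray(8)  # another node's out-of-date copy
apply_diffs(stale, [d1, {2: 7}])  # a later interval rewrote offset 2
print(list(stale))  # [0, 0, 7, 0, 0, 0, 9, 0]
```

Applying the same two diffs in the opposite order would leave 5 at offset 2, which is why the slide insists on interval order.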

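Example

(Figure: N1, N2, and N3 acquire the same lock in turn, each starting at VC <0,0,0>)

N1: acq, VC becomes <1,0,0>; write P; rel

N2: acq (sends Request <0,0,0>); receives IR/DIFF <1,0,0>; applies diff; VC becomes <1,1,0>; write P; rel

N3: acq (sends Request <0,0,0>); receives IR/DIFF <1,0,0> and IR/DIFF <1,1,0>; applies diffs; VC becomes <1,1,1>; write P; rel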

Example (cont.)

If N1 were to issue another acquire, it would only have to apply the diffs in the IRs with timestamps <1,1,0> and <1,1,1>, because its current VC was <1,0,0>

Improvement: Garbage Collection

Each N keeps a log of all the shared memory writes it made, along with all the writes it needed to know about

At a barrier, the Ns can synchronize so that each N has the most up-to-date copy of its pages; the logs can then be discarded

Improvement: Sending Diffs

You might notice that if N1 writes to pages P1, P2, and P3 during an interval, and N2 acquires the lock next, N1 needs to send the three diffs to N2, regardless of whether N2 will actually need those pages

In truth, N1 does not send the diffs; it sends a pointer to the location of each diff in its local memory

If N2 needs to apply that diff, it requests the diff from N1 using that pointer
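Lazy diff transfer can be sketched with a per-node store that hands out handles instead of diffs. The `DiffStore` class is hypothetical, and an integer handle stands in for the pointer into N1's local memory.

```python
class DiffStore:
    """Hypothetical per-node diff store for lazy diff transfer.

    The creating node keeps each diff locally and hands out only a handle
    (standing in for the pointer into its local memory); other nodes fetch
    the diff through the handle only when they actually fault on the page.
    """

    def __init__(self):
        self._diffs = {}
        self.fetches = 0  # how many diffs were actually requested

    def publish(self, diff) -> int:
        handle = len(self._diffs)
        self._diffs[handle] = diff
        return handle

    def fetch(self, handle: int):
        self.fetches += 1
        return self._diffs[handle]


n1_store = DiffStore()
# N1 writes P1, P2, P3 in one interval; N2 receives only page -> handle pairs.
handles = {p: n1_store.publish({0: i}) for i, p in enumerate(["P1", "P2", "P3"])}
# N2 later faults only on P2, so only that diff is requested.
diff_p2 = n1_store.fetch(handles["P2"])
print(n1_store.fetches, diff_p2)  # 1 {0: 1}
```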

Adding Fault Tolerance

Assume we would like the ability to survive single-node failures (only one node fails at a time, but multiple failures may occur during the run of the application)

What information would we need to log, and where?

Remember, we already log IntervalRecords and WriteNotices as part of the usual operation of TreadMarks

Ni fails and then restarts

If it acquires a lock, it must see the same version of the page that it saw during the original run

Therefore Nj must send it the same WriteNotices (diffs) as before, even though Nj’s current version of the page might be very different, and Nj’s vector clock has also changed

Example

(Figure: the lock passes N1 → N2 → N3 → N2, after which N3 fails, marked X)

N1: <0,0,0> ACQ/WRI/REL <1,0,0>; sends IR <1,0,0> to N2

N2: <0,0,0> ACQ/WRI/REL <1,1,0>; sends IR <1,0,0> and IR <1,1,0> to N3

N3: <0,0,0> ACQ/WRI/REL <1,1,1>; sends IR <1,1,1> to N2

N2: <1,1,0> ACQ/WRI/REL <1,2,1>

If N3 is restarted, when it reissues the acquire, it must receive the same set of WriteNotices as it had during its original run.

If we run the algorithm unmodified, N3 would receive <1,0,0><1,1,0><1,1,1><1,2,1>, and the application would be incorrect, since <1,1,1> and <1,2,1> were not part of what N3 received originally

Send Log

Therefore, N2 needs some way of logging which IntervalRecords it sent to N3

It does this by storing the VC of N3 when it issued the acquire (this was sent to N2 with the request) and the VC of N2 when it received the request

This is stored in N2’s send-log

From these two VCs, N2 can determine which IntervalRecords it had sent to N3
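The send-log can be sketched as a list of (requester, requester VC, own VC) entries. When a restarted node repeats a request, the logged VC replaces the releaser's current VC as the upper bound, so the same IntervalRecords are selected. The class and function names are hypothetical, and the log contents come from the failure example in these slides (N2's log after its interval <1,2,1>).

```python
from typing import List, Optional, Tuple

VC = Tuple[int, ...]
Record = Tuple[int, VC]  # (creator pid, timestamp)


class SendLog:
    """Hypothetical send-log: one entry per acquire request this node answered."""

    def __init__(self):
        self.entries: List[Tuple[str, VC, VC]] = []

    def record(self, requester: str, requester_vc: VC, my_vc: VC) -> None:
        # Requester's VC (sent with the request) and ours when we received it.
        self.entries.append((requester, requester_vc, my_vc))

    def lookup(self, requester: str, requester_vc: VC) -> Optional[VC]:
        for who, req_vc, my_vc in self.entries:
            if who == requester and req_vc == requester_vc:
                return my_vc
        return None


def records_between(log: List[Record], low: VC, high: VC) -> List[Record]:
    """Records the requester had not seen (vs. low) that we had (vs. high)."""
    return [(pid, ts) for pid, ts in log if low[pid] < ts[pid] <= high[pid]]


n2_log = [(0, (1, 0, 0)), (1, (1, 1, 0)), (2, (1, 1, 1)), (1, (1, 2, 1))]
n2_send_log = SendLog()
n2_send_log.record("N3", (0, 0, 0), (1, 1, 0))  # the original request

# N3 restarts and re-sends its acquire with VC <0,0,0>.
replay_vc = n2_send_log.lookup("N3", (0, 0, 0))
print(records_between(n2_log, (0, 0, 0), replay_vc))
# [(0, (1, 0, 0)), (1, (1, 1, 0))]
```

Using N2's current VC <1,2,1> as the upper bound instead would select all four records, which is the incorrect unmodified behavior.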

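Example

(Figure: lock transfers N1 → N2 → N3 with the resulting send-logs; N3 then fails, marked X)

N1: sends IR <1,0,0> to N2; Send-Log: {N2, <0,0,0><1,0,0>}

N2: <0,0,0> ACQ, WRI, REL <1,1,0>; sends IR <1,0,0> and IR <1,1,0> to N3; Send-Log: {N3, <0,0,0><1,1,0>}

N3: <0,0,0> ACQ, WRI, REL <1,1,1>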

Restart

When N3 restarts, it will issue its acquire request at time <0,0,0>

N2 will look in its send-log and see that when it received an acquire request from N3 at <0,0,0>, it was at time <1,1,0>, so it will send the IRs of all the intervening intervals

Therefore, N3 receives the same diffs as it did before

Logging, cont.

Is the send-log sufficient to provide the level of fault tolerance that we wanted?

Imagine N2 had failed and then restarted; could we then survive the failure of N3?

Logging

No, we could not survive the subsequent failure of N3, because N2 no longer has its send-log

We also need a way to recreate N2’s send-log

Receive-Log

On every acquire, N records in its receive-log its vector time before the acquire and its new vector time after seeing the IntervalRecords sent to it by M

If M fails, M’s send-log can be recreated from N’s receive-log
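Rebuilding a send-log from receive-logs can be sketched as a filter over the survivors' entries. The function name and log layout are hypothetical, and the sketch assumes that a receiver's (before, after) pair delimits the same range of IntervalRecords as the sender's original send-log entry, as it does in the slides' example where both read <0,0,0><1,1,0>.

```python
from typing import Dict, List, Tuple

VC = Tuple[int, ...]
# Receive-log entry: (sender M, VC before the acquire, VC after M's IRs)
RecvEntry = Tuple[str, VC, VC]


def rebuild_send_log(failed: str,
                     receive_logs: Dict[str, List[RecvEntry]]
                     ) -> List[Tuple[str, VC, VC]]:
    """Recreate `failed`'s send-log entries from the survivors' receive-logs.

    Each entry returned is (receiver, receiver's VC before, VC after), matching
    the (requester, requester VC, sender VC) layout of a send-log entry.
    """
    return [(receiver, before, after)
            for receiver, entries in receive_logs.items()
            for sender, before, after in entries
            if sender == failed]


receive_logs = {"N2": [("N1", (0, 0, 0), (1, 0, 0))],
                "N3": [("N2", (0, 0, 0), (1, 1, 0))]}
print(rebuild_send_log("N2", receive_logs))
# [('N3', (0, 0, 0), (1, 1, 0))]
```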

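Example

(Figure: lock transfers N1 → N2 → N3 with the resulting send-logs and receive-logs; an X marks a failure)

N1: sends IR <1,0,0> to N2; Send-Log: {N2, <0,0,0><1,0,0>}

N2: sends IR <1,0,0> and IR <1,1,0> to N3; Send-Log: {N3, <0,0,0><1,1,0>}; Recv-Log: {N1, <0,0,0><1,0,0>}

N3: Recv-Log: {N2, <0,0,0><1,1,0>}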

If N2 were to fail, it would be restarted

N1’s send-log ensures that N2 sees the same page as it did originally

When, in the future, N3 sees a VC time later than that in its receive-log (wrt. N2), it forwards the information in its receive-log to N2

N2 recreates its send-log

We could now survive future failures

Checkpointing

When we arrive at a garbage collection point, we could checkpoint all processes, which would:

Minimize rollback

Allow us to survive concurrent failures

Empty the logs

Results

Results 2

| Appl. | Log Size (MB) | Avg. Ckpt. Size (MB) |
|---|---|---|
| Water | 3.10 | 3.05 |
| SOR | 0.33 | 7.84 |
| TSP | 0.05 | 2.49 |

Results 3