A Special-Purpose Processor System with Software-Defined Connectivity

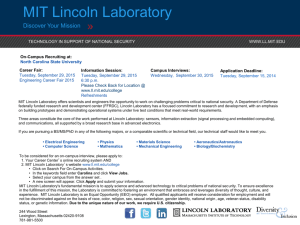

advertisement

A Special-Purpose Processor

System with Software-Defined

Connectivity

Benjamin Miller, Sara Siegal, James Haupt, Huy Nguyen and Michael Vai

MIT Lincoln Laboratory

22 September 2009

This work is sponsored by the Navy under Air Force Contract FA8721-05-0002. Opinions, interpretations, conclusions and recommendations are those of the authors and are not necessarily endorsed by the United States Government.

SPP-HPEC09-1

BAM 7/12/2016


Outline

• Introduction

• System Architecture

• Software Architecture

• Initial Results and Demonstration

• Ongoing Work/Summary


Why Software-Defined Connectivity?

• Modern ISR, COMM, EW systems need to be flexible
– Change hardware and software in theatre as conditions change
– Technological upgrade
– Various form factors
• Example: Reactive electronic warfare (EW) system
– Re-task components as environmental conditions change
– Easily add and replace components as needed before and during mission
• Want the system to be open
– Underlying architecture specific enough to reduce redundant software development
– General enough to be applied to a wide range of system components (e.g., different vendors)


Special Purpose Processor (SPP) System

[Diagram: Processors 1 to N, FPGAs 1 to M, receivers Rx 1 to R and transmitters Tx 1 to T connected through a switch fabric, with RF distribution, HPA, LNA and antenna interface blocks on the RF side.]

• System representative of advanced EW architectures
– RF and programmable hardware, processors all connected through a switch fabric

Enabling technology: bare-bones, low-latency pub/sub middleware

[Diagram: software view of the SPP system. The Config. Mgr. (configures backplane connections), the Res. Mgr. (manages inter-process connections) and Processes 1 to P (data processing algorithms) sit on the Thin Communications Layer (TCL) middleware, which runs over the OS on Proc. 1 to N. The processors, FPGA 1 to M, Rx 1 to R and Tx 1 to T attach to the switch fabric.]

Mode 1: Hardwired

[Diagram: Config. Mgr., Res. Mgr. and Processes 1 to P on the TCL middleware, over the OS on Proc. 1 to N, above the switch fabric. Connections to the FPGA, Rx and Tx hardware are fixed.]

• Hardware components physically connected
• Connections through backplane are fixed (no configuration management)
• No added latency but inflexible

Mode 2: Pub-Sub

[Diagram: application processes, a proxy process and the Res. Mgr. on the TCL middleware. Each node on the switch fabric has its own processor and OS, and the Rx, Tx and FPGA hardware sits behind those processors.]

• Everything communicates through the middleware
– Hardware components have on-board processors running proxy processes for data transfer
• Most flexible, but there will be overhead due to the middleware

Mode 3: Circuit Switching

[Diagram: Config. Mgr., Res. Mgr. and Processes 1 to P on the TCL middleware, over the OS on Proc. 1 to N. The processors, FPGA 1 to M, Rx 1 to R and Tx 1 to T attach to the switch fabric.]

• Configuration manager sets up all connections across the switch fabric
• May still be some co-located hardware, or some hardware that communicates via a processor through the middleware
• Overhead only incurred during configuration

Today’s Presentation

[Diagram: Config. Mgr., Res. Mgr. and Processes 1 to P on the TCL middleware, over the OS on Proc. 1 to N. The processors, FPGA 1 to M, Rx 1 to R and Tx 1 to T attach to the switch fabric.]

• TCL middleware developed to support the SPP system
– Essential foundation
• Resource Manager sets up (virtual) connections between processes


System Configuration

• 3 COTS boards connected through VPX backplane
– 1 single-board computer, dual-core PowerPC 8641
– 1 board with 2 Xilinx Virtex-5 FPGAs and a dual-core 8641
– 1 board with 4 dual-core 8641s
– Processors run VxWorks
• Boards come from same vendor, but have different board support packages (BSPs)
• Data transfer technology of choice: Serial RapidIO (sRIO)
– Low latency important for our application
• Implement middleware in C++

System Model

[Diagram: layered system model. Layers shown: application components and system control components; signal processing library; vendor-specific BSP1, BSP2 and HW (Rx/Tx, ADC, etc.); operating system (real-time: VxWorks; standard: Linux); physical interface (VPX + Serial RapidIO).]

System Model

[Diagram: layered system model with the TCL middleware added. Layers shown: application components and system control components; signal processing library; TCL middleware; vendor-specific BSP1, BSP2 and HW (Rx/Tx, ADC, etc.); operating system (real-time: VxWorks; standard: Linux); physical interface (VPX + Serial RapidIO).]

System Model

[Diagram: layered system model with new SW components (additional application components) and new HW components added. Layers shown: application components and system control components; signal processing library; TCL middleware; vendor-specific BSP1, BSP2 and HW (Rx/Tx, ADC, etc.); operating system (real-time: VxWorks; standard: Linux); physical interface (VPX + Serial RapidIO).]

New components can easily be added by complying with the middleware API


Publish/Subscribe Middleware

[Diagram: Process k on Proc. k publishes on Topic T. The TCL middleware holds the subscriber list for Topic T (l1, l2), sends the data across the switch fabric to l1 and l2, and notifies the subscribing applications, Process l1 on Proc. l1 and Process l2 on Proc. l2.]

Publishing application doesn't need to know where the data is going . . . and the subscribers are unconcerned about where their data comes from

Middleware acts as interface to both application and hardware/OS
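The topic lookup described on this slide can be sketched in a few lines of C++. This is only an illustration of the publish/subscribe idea, not the TCL API: `TopicTable`, `Message` and `Callback` are hypothetical names, and delivery here is an in-process function call rather than a transfer across the switch fabric.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical message type: an opaque byte buffer.
using Message = std::vector<std::uint8_t>;
// Hypothetical subscriber callback, invoked when data arrives on a topic.
using Callback = std::function<void(const Message&)>;

// Illustrative stand-in for the middleware's topic table (not the TCL API).
class TopicTable {
public:
    // Register a subscriber callback under a topic name.
    void subscribe(const std::string& topic, Callback cb) {
        subscribers_[topic].push_back(std::move(cb));
    }

    // Deliver the message to every subscriber of the topic.
    // Returns the number of subscribers notified (0 for an unknown topic).
    std::size_t publish(const std::string& topic, const Message& msg) const {
        const auto it = subscribers_.find(topic);
        if (it == subscribers_.end()) return 0;
        for (const auto& cb : it->second) cb(msg);
        return it->second.size();
    }

private:
    std::map<std::string, std::vector<Callback>> subscribers_;
};
```

The publisher names only the topic, and each subscriber registers only a callback, so neither side refers to the other directly.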


Abstract Interfaces to Middleware

[Diagram: the TCL middleware interfaces with the application on one side. On the other side it must answer two questions: "What data transfer technology am I using?" and "How (exactly) do I execute a data transfer?"]

• Middleware must be abstract to be effective

– Middleware developers are unaware of hardware-specific libraries

– Users have to implement functions that are specific to BSPs


XML Parser

[Diagram: a Parser reading setup.xml, shown with the Config. Mgr., Res. Mgr. and Processes 1 to P on the TCL middleware, over the OS on Proc. 1 to N. The processors, FPGA 1 to M, Rx 1 to R and Tx 1 to T attach to the switch fabric.]

• Resource manager is currently in the form of an XML parser
– XML file defines topics, publishers, and subscribers
– Parser sets up the middleware and defines virtual network topology

Middleware Interfaces

[Class diagram. Interface to application: DataWriter, DataReader and DataReaderListener. Interface to BSP: Builder. SrioDataWriter, SrioDataReader and SrioDataReaderListener are derived from the application-facing base classes and change with the communication technology. CustomBSPBuilder is derived from Builder and changes with the hardware. "has a" links connect the classes.]

• Base classes
– DataReader, DataReaderListener and DataWriter interface with the application
– Builder interfaces with BSPs
• Derive board- and communication-specific classes

Builder

Builder (interface to BSP):

#include <math.h>
...
// member functions
STATUS Builder::performDmaTransfer(...){}   // empty in the base class
...

CustomBSPBuilder (derived from Builder):

#include <math.h>
#include "vendorPath/bspDma.h"   // BSP-specific header
...
// member functions
STATUS CustomBSPBuilder::performDmaTransfer(...){
    return bspDmaTransfer(...);   // delegate to the BSP library
}
...

• Follows the Builder pattern in Design Patterns*
• Provides interface for sRIO-specific tasks
– e.g., establish sRIO connections, execute data transfer
• Certain functions are empty (not necessarily virtual) in the base class, then implemented in the derived class with BSP-specific libraries
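A minimal compilable sketch of the base/derived split follows, under stated assumptions. `STATUS`, `kOk` and `kError` stand in for VxWorks-style status codes, `fakeBspDmaTransfer` is a hypothetical replacement for a vendor call (here just a `memcpy`), and the argument list is invented because the slide elides it. The slide notes that base functions are not necessarily virtual; this sketch chooses `virtual` so the derived version is picked at run time.

```cpp
#include <cstddef>
#include <cstring>

// Assumption: VxWorks-style status codes, defined locally so this compiles alone.
typedef int STATUS;
const STATUS kOk = 0;
const STATUS kError = -1;

// Hypothetical stand-in for a vendor BSP DMA routine; a real BSP supplies its
// own function with its own signature.
inline STATUS fakeBspDmaTransfer(void* dst, const void* src, std::size_t n) {
    if (dst == nullptr || src == nullptr) return kError;
    std::memcpy(dst, src, n);
    return kOk;
}

// Base class: the hardware-independent interface the middleware calls.
class Builder {
public:
    virtual ~Builder() = default;
    // The base version moves no data and reports failure.
    virtual STATUS performDmaTransfer(void*, const void*, std::size_t) {
        return kError;
    }
};

// Derived class: the only place that touches the (stand-in) BSP library.
class CustomBSPBuilder : public Builder {
public:
    STATUS performDmaTransfer(void* dst, const void* src, std::size_t n) override {
        return fakeBspDmaTransfer(dst, src, n);
    }
};
```

Code that holds a `Builder&` can then call `performDmaTransfer` without including any vendor header.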


*E. Gamma et al., Design Patterns: Elements of Reusable Object-Oriented Software. Reading, Mass.: Addison-Wesley, 1995.

Publishers and Subscribers

[Class diagram, interface to application: SrioDataWriter is derived from DataWriter, SrioDataReader from DataReader, and SrioDataReaderListener from DataReaderListener. A "has a" link is also shown.]

// member functions
virtual STATUS DataWriter::write(message) = 0;

virtual STATUS SrioDataWriter::write(message)
{
    ...
    myBuilder->performDmaTransfer(...);
    ...
}

Derived Builder type determined dynamically

• DataReaders, DataWriters and DataReaderListeners act as "Directors" of the Builder
– Tell the Builder what to do, Builder determines how to do it
• DataWriter used for publishing, DataReader and DataReaderListener used by subscribers
• Derived classes implement communication (sRIO)-specific, but not BSP-specific, functionality
– e.g., ring a recipient's doorbell after transferring data
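The Director role can be illustrated with a short C++ sketch. The writer fixes the order of steps (transfer, then doorbell) and the Builder decides how each step is done. Everything beyond the class names `DataWriter`, `SrioDataWriter` and `Builder` is an assumption made for illustration: the method signatures, the `ringDoorbell` name, the status codes, and `RecordingBuilder`, which records calls in place of real hardware access.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumption: VxWorks-style status codes, defined locally.
typedef int STATUS;
const STATUS kOk = 0;
const STATUS kError = -1;

using Message = std::vector<std::uint8_t>;

// Hardware-facing interface (hypothetical signatures).
class Builder {
public:
    virtual ~Builder() = default;
    virtual STATUS performDmaTransfer(const Message& msg) = 0;
    virtual STATUS ringDoorbell() = 0;
};

// Application-facing publisher interface.
class DataWriter {
public:
    virtual ~DataWriter() = default;
    virtual STATUS write(const Message& msg) = 0;
};

// Communication-specific writer: knows that a doorbell follows a transfer,
// but leaves the board-specific details to the Builder it was given.
class SrioDataWriter : public DataWriter {
public:
    explicit SrioDataWriter(Builder* builder) : myBuilder_(builder) {}

    STATUS write(const Message& msg) override {
        if (myBuilder_ == nullptr) return kError;
        if (myBuilder_->performDmaTransfer(msg) != kOk) return kError;
        return myBuilder_->ringDoorbell();
    }

private:
    Builder* myBuilder_;  // not owned; concrete type chosen at run time
};

// Hypothetical Builder that records calls instead of touching hardware.
class RecordingBuilder : public Builder {
public:
    STATUS performDmaTransfer(const Message& msg) override {
        bytesMoved += msg.size();
        return kOk;
    }
    STATUS ringDoorbell() override {
        ++doorbells;
        return kOk;
    }
    std::size_t bytesMoved = 0;
    int doorbells = 0;
};
```

Swapping `RecordingBuilder` for a board-specific Builder would leave `SrioDataWriter` unchanged.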


Software-Defined Connectivity: Initial Implementation

• Experiment: Process-to-process data transfer latency
– Set up two topics
– Processes use TCL to send data back and forth
– Measure round trip time with and without middleware in place
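A round-trip measurement of this kind might be structured as in the sketch below. It is a generic timing harness, not the authors' test code. `RoundTrip` is a hypothetical hook that sends a payload and returns once the reply has arrived, and `std::chrono::steady_clock` is an assumption, since the target may rely on a hardware timer. Running it once with a middleware-based exchange and once with a direct transfer gives the two figures to compare.

```cpp
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

using Message = std::vector<std::uint8_t>;
// Hypothetical hook: send a payload and block until the reply comes back.
using RoundTrip = std::function<void(const Message&)>;

// Average round-trip time in microseconds over `iterations` exchanges of a
// payload of `payloadBytes` bytes. Returns 0.0 when no iterations are requested.
inline double averageRoundTripMicros(const RoundTrip& exchange,
                                     std::size_t payloadBytes,
                                     std::size_t iterations) {
    if (iterations == 0) return 0.0;
    const Message payload(payloadBytes);
    const auto start = std::chrono::steady_clock::now();
    for (std::size_t i = 0; i < iterations; ++i) {
        exchange(payload);
    }
    const auto stop = std::chrono::steady_clock::now();
    const std::chrono::duration<double, std::micro> elapsed = stop - start;
    return elapsed.count() / static_cast<double>(iterations);
}
```

Halving the result gives a one-way estimate only if the two directions are symmetric.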

[Diagram: Process 1 and Process 2 exchange data through the TCL middleware, over the OS on Proc. 1 to N. The processors, FPGA 1 to M, Rx 1 to R and Tx 1 to T attach to the switch fabric.]

<Topic>
  <Name>Send</Name>
  <ID>0</ID>
  <Sources>
    <Source>
      <SourceID>8</SourceID>
    </Source>
  </Sources>
  <Destinations>
    <Destination>
      <DSTID>0</DSTID>
    </Destination>
  </Destinations>
</Topic>
<Topic>
  <Name>SendBack</Name>
  <ID>1</ID>
  <Sources>
    <Source>
      <SourceID>0</SourceID>
    </Source>
  </Sources>
  <Destinations>
    <Destination>
      <DSTID>8</DSTID>
    </Destination>
  </Destinations>
</Topic>
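To show what the two topic definitions amount to once loaded, here is a hedged C++ sketch of an in-memory table. The slides do not show the parser's internals, so `TopicConfig`, `roundTripTopics` and `destinationsFor` are hypothetical names, and the table is written out by hand rather than parsed from XML.

```cpp
#include <string>
#include <vector>

// Hypothetical in-memory form of one <Topic> element.
struct TopicConfig {
    std::string name;                 // <Name>
    int id;                           // <ID>
    std::vector<int> sourceIds;       // each <SourceID>
    std::vector<int> destinationIds;  // each <DSTID>
};

// The two topics from the listing, written out by hand (no XML parsing here).
inline std::vector<TopicConfig> roundTripTopics() {
    return {
        {"Send", 0, {8}, {0}},
        {"SendBack", 1, {0}, {8}},
    };
}

// Destinations a node must send to when it publishes on a topic.
// Returns an empty list if the topic is unknown or the node is not a source.
inline std::vector<int> destinationsFor(const std::vector<TopicConfig>& topics,
                                        const std::string& topicName,
                                        int nodeId) {
    for (const auto& t : topics) {
        if (t.name != topicName) continue;
        for (int src : t.sourceIds) {
            if (src == nodeId) return t.destinationIds;
        }
    }
    return {};
}
```

In this table, node 8 publishes on Send to node 0, and node 0 publishes on SendBack to node 8, which is the ping-pong path the latency experiment needs.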


Software-Defined Connectivity: Communication Latency

[Figure: latency measurement between P1 and P2.]

• One-way latency ~23 µs for small packet sizes
• Reach 95% efficiency at 64 KB
• Latency grows proportionally to packet size for large packets
• Overhead is negligible for large packets, despite increasing size

Demo 1: System Reconfiguration

[Diagram: Processors #1, #2 and #3 on the TCL middleware. Configuration 1 (XML1) and Configuration 2 (XML2) each lay out the Detect, Process and Transmit functions across the processors.]

Objective: Demonstrate connectivity reconfiguration by simply replacing the configuration XML file

Demo 2: Resource Management

[Diagram: Control, Receive, Transmit, Proc #1 and Proc #2 connected through the TCL middleware. Callouts: "I've detected signals!" and "I will process this new information!"]

Low-latency predefined connections allow quick response

[Diagram, next step: Control, Receive, Transmit, Proc #1 and Proc #2 on the TCL middleware. Callouts: "I need more help to analyze the signals!", "I will determine what to transmit in response!" and "Working…"]

Resource manager sets up new connections on demand to efficiently utilize available computing power

[Diagram, next step: Control, Receive, Transmit, Proc #1 and Proc #2 on the TCL middleware. Callouts: "I have determined an appropriate response!", "I will transmit the response!" and "Working…"]

Proc #1/Transmit are publisher/subscriber on topic TransmitWaveform

[Diagram, final step: Control, Receive, Transmit, Proc #1 and Proc #2 on the TCL middleware. Callouts: "I have determined an appropriate response!", "I will transmit the response!" and "Done!"]

After finishing, components may be re-assigned

Ongoing Work

[Diagram: software modules (image formation, interference mitigation, timing, detection) and FPGA modules built from IP cores (ADC, FFT, DIQ, DAC) sit on the Thin Communications Layer (pub/sub) together with the Config. Mgr. and Res. Mgr. Control and data paths run over the physical layer (e.g., switch fabric).]

• Develop the middleware (configuration manager) to set up fixed connections
– Mode 3: Objective system

[Diagram: Processes 1 to P over the OS on Proc. 1 to N. The processors, FPGA 1 to M, Rx 1 to R and Tx 1 to T attach to the switch fabric.]

• Automate resource management
– Dynamically reconfigure system as needs change
– Enable more efficient use of resources (load balancing)

Summary

• Developing software-defined connectivity of hardware and software components
• Enabling technology: low-latency pub/sub middleware
– Abstract base classes manage connections between nodes
– Application developer implements only system-specific send and receive code
• Encouraging initial results
– At full sRIO data rate, overhead is negligible
• Working toward automated resource management for efficient allocation of processing capability, as well as automated setup of low-latency hardware connections