Leveraging Multicomputer Frameworks for Use in Multi-Core Processors

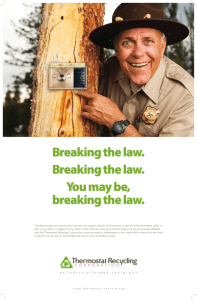

advertisement

Yael Steinsaltz, ysteinsa@mc.com
Scott Geaghan, sgeaghan@mc.com
Myra Jean Prelle, mjp@mc.com
Brian Bouzas, bbouzas@mc.com
Michael Pepe, mpepe@mc.com
High Performance Embedded Computing Workshop, September 21, 2006
© 2005 Mercury Computer Systems, Inc.

Outline
• Introduction
• Channelizer Problem
• Preliminary Results
• Summary

Multi-Core Processors
• Multi-core processors range in architecture from two to four identical cores (Intel Xeon, Freescale 8641) to a single Manager and several Workers on one die (the IBM Cell Broadband Engine™ (BE) processor).
• Focusing on the IBM Cell BE processor, and following the standard published at www.data-re.org, we implemented an API called the Multi-Core Framework (MCF).
• MCF applies to any architecture in which one process acts as the Manager; more established APIs would work as well.

Multi-Core Framework
• MCF is based on Mercury's earlier implementation of the www.data-re.org standard, a product named Parallel Acceleration System (PAS).
• Data flows are distributed in a Manager-Worker fashion, enabling concurrent I/O and parallel processing.
• MCF uses a function-offload model in which the user programs both the Manager and the Workers; MCF simplifies this development.
• Local store (LS) memory is used efficiently: the MCF kernel occupies less than 5% of it.
• Tasks run on the Synergistic Processor Elements (SPEs) without Linux® overhead (thread creation is bypassed).

Data Movement
• Multi-buffered strip mining of N-dimensional data sets between a large main memory (XDR) and the small worker memories.
• Supports overlap and duplication when distributing data, as well as different partitionings.
• Data reorganization makes it easy to transfer data between local stores.
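To make the strip-mining idea concrete, here is a minimal, hypothetical sketch in plain Python; it is not MCF's API, which the slides do not show. The names `plan_strips` and `double_buffered_schedule` are invented for illustration. It assumes a 1-D data set in main memory, cuts it into overlapping strips, deals the strips round-robin to workers, and orders each worker's transfers so the next strip is fetched into a second local buffer while the current one is processed.

```python
from typing import List, Tuple

# Hypothetical types/names for illustration only; not MCF's actual API.
Strip = Tuple[int, int]  # half-open [start, stop) element range in main memory


def plan_strips(total: int, strip_len: int, overlap: int,
                n_workers: int) -> List[List[Strip]]:
    """Cut [0, total) into strips of strip_len elements that share `overlap`
    elements with their neighbour, then deal them round-robin to workers."""
    if not 0 <= overlap < strip_len:
        raise ValueError("overlap must satisfy 0 <= overlap < strip_len")
    step = strip_len - overlap            # distance between strip starts
    strips: List[Strip] = []
    start = 0
    while True:
        stop = min(start + strip_len, total)
        strips.append((start, stop))
        if stop >= total:                 # last (possibly short) strip
            break
        start += step
    plan: List[List[Strip]] = [[] for _ in range(n_workers)]
    for i, strip in enumerate(strips):
        plan[i % n_workers].append(strip)
    return plan


def double_buffered_schedule(strips: List[Strip]) -> List[str]:
    """Order of transfers and computes for one worker with two local
    buffers: strip i+1 is requested before strip i is processed, so the
    transfer can overlap the computation (output transfers omitted)."""
    steps: List[str] = []
    if not strips:
        return steps
    steps.append(f"get {strips[0]} -> buf0")
    for i, strip in enumerate(strips):
        if i + 1 < len(strips):
            steps.append(f"get {strips[i + 1]} -> buf{(i + 1) % 2}")
        steps.append(f"compute {strip} in buf{i % 2}")
    return steps
```

For example, `plan_strips(100, 40, 10, 2)` returns `[[(0, 40), (60, 100)], [(30, 70)]]`: worker 0 gets the first and third strips, and every strip repeats the last 10 elements of its predecessor. A real implementation would issue asynchronous DMA transfers and wait on them before computing; the string schedule only shows the ordering.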
Objective and Motivation
• Objective: develop a Cell BE-based real-time signal acquisition system, composed of frequency channelizers and signal detectors, in a single ~6U slot.
• Motivation: benchmark computational density across PPCs, FPGAs, and the Cell BE for a typical streaming application.

The Channelizer Problem
• FM3TR signal (hopping, multi-waveform, multiband).
• Channelization uses a 16K-point real FFT with 75% overlap of the input (the computation is signal independent).
• A simple threshold detects the active channels (the computation is data dependent).

Channelizer Problem
• The signal acquisition system separates a wide radio frequency band into a set of narrow frequency bands.
• Implementation specifications:
  • 4:1 overlap buffer: 16K-sample buffer -> 8K-point complex FFT.
  • Blackman window (embedded multipliers).
  • Log-magnitude threshold: an adjustable register and comparator determine detections.

Data Flow and Work Distribution
[Figure: Manager and worker threads of execution. Input data flows to the Channelizer workers; the Channelizer output flows to the High Speed Alarm (HSA) workers, which produce the HSA output. Teams perform data-parallel math; some processing elements are unused.]

Data Flow – Re-org Channels
[Figure sequence: channels re-organized between XDR, the Channelizer team's local stores, and the HSA team's local stores (LS).]
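The channelizer chain from the Channelizer Problem slides (4:1 overlap buffer, Blackman window, FFT, log-magnitude threshold) can be sketched in plain Python. This is an illustration under stated assumptions, not the Cell BE or SAL code: it assumes the "16K sample buffer -> 8K complex FFT" step uses the standard trick of packing N real samples into N/2 complex values (even samples as real parts, odd samples as imaginary parts), it uses a simple recursive radix-2 FFT, and `channelize` and `threshold_db` are hypothetical names. Small sizes keep it fast; the slides' N is 16K.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 complex FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def real_fft_via_half_complex(x):
    """Bins 0..N/2 of the DFT of real x (length N) from one N/2-point
    complex FFT, packing even samples as real and odd as imaginary."""
    n = len(x)
    m = n // 2
    z = [complex(x[2 * i], x[2 * i + 1]) for i in range(m)]
    zf = fft(z)
    out = []
    for k in range(m + 1):
        a = zf[k % m]
        b = zf[(m - k) % m].conjugate()
        e = (a + b) / 2                   # DFT of the even-indexed samples
        o = (a - b) / 2j                  # DFT of the odd-indexed samples
        out.append(e + cmath.exp(-2j * math.pi * k / n) * o)
    return out


def blackman(n):
    """Symmetric Blackman window of length n."""
    return [0.42 - 0.5 * math.cos(2 * math.pi * i / (n - 1))
            + 0.08 * math.cos(4 * math.pi * i / (n - 1)) for i in range(n)]


def channelize(samples, n, threshold_db):
    """For each overlapped frame, return the channel (bin) indices whose
    log magnitude exceeds threshold_db."""
    hop = n // 4                          # 4:1 overlap buffer = 75% overlap
    window = blackman(n)
    detections = []
    for start in range(0, len(samples) - n + 1, hop):
        frame = [s * w for s, w in zip(samples[start:start + n], window)]
        spectrum = real_fft_via_half_complex(frame)
        # Small offset avoids log10(0) for empty bins.
        log_mag = [20 * math.log10(abs(c) + 1e-12) for c in spectrum]
        detections.append([k for k, v in enumerate(log_mag) if v > threshold_db])
    return detections
```

With N = 64, 256 input samples of a cosine centred on channel 8, and a 10 dB threshold, `channelize` produces 13 overlapped frames, and each reports channels 7, 8, and 9, because the Blackman main lobe spreads the tone into its immediate neighbours. The packing step is what lets a 16K-sample real buffer be transformed with an 8K-point complex FFT.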
Development Time and Hardware Use
• PPC: 22 PPCs were needed for the channelizer and 7 PPCs for the HSA; development took about 2 man-months.
• FPGA: one half of a Virtex-II Pro P70 FPGA (a quarter board); development took about 8 man-months, and all of the math had to be developed, using some Xilinx cores.
• Cell BE: a single processor (half a board); development took about 4 man-weeks, using the same math and SAL (Mercury's Scientific Algorithm Library) calls as the PPC code.

Data Rates Tested
• The PPC implementation accepted data at 70, 80, and 105 MS/s (and scales easily).
• The FPGA implementation met data rates of 70 and 80 MS/s.
• The Cell BE implementation met data rates of 70, 80, and 105 MS/s.
  • Windowing was not implemented on the Cell BE because the local store could not hold the window weights. Adding it would take an estimated 2 to 3 more weeks of design changes to the data organization and channels. Timings were measured with a multiply-by-constant in place of the window, so they remain representative of the full processing load.
  • Computation began to limit the data rate only when fewer than 4 SPEs were used for the FFT; adding more SPEs did not increase speed.
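The observation that four SPEs were enough for the FFT can be put in perspective with a little arithmetic on the tested data rates. This sketch rests on assumptions not stated in the slides: K = 1024, a 4:1 overlap means each FFT consumes frame_len / 4 new samples, whole frames are dealt round-robin to the SPEs, and the FFT cost uses the conventional 5 N log2 N flop estimate for an N-point complex FFT. The function name `fft_budget` is hypothetical.

```python
import math


def fft_budget(sample_rate_sps, frame_len=16 * 1024, overlap_factor=4, n_spes=4):
    """Throughput requirements for an overlapped real-FFT channelizer.

    Assumptions: K = 1024; a 4:1 overlap means a hop of
    frame_len / overlap_factor new samples per FFT; frames are dealt
    round-robin to n_spes SPEs; 5 N log2 N flops per complex FFT.
    """
    hop = frame_len // overlap_factor           # new samples consumed per FFT
    frames_per_sec = sample_rate_sps / hop      # FFTs that must finish each second
    budget_us = n_spes / frames_per_sec * 1e6   # time each SPE has per frame
    n_complex = frame_len // 2                  # 16K real -> 8K complex FFT
    flops_per_fft = 5 * n_complex * int(math.log2(n_complex))
    return hop, frames_per_sec, budget_us, flops_per_fft * frames_per_sec


for rate in (70e6, 80e6, 105e6):
    hop, fps, budget, flops = fft_budget(rate)
    print(f"{rate / 1e6:.0f} MS/s: {fps:.0f} FFTs/s, "
          f"{budget:.1f} us per frame per SPE, {flops / 1e9:.2f} GFLOP/s")
```

Under these assumptions, 105 MS/s requires about 25,635 FFTs per second, which gives each of four SPEs roughly 156 µs per 8K-point complex FFT (about 234 µs at 70 MS/s and 205 µs at 80 MS/s). The 5 N log2 N estimate puts the FFT load alone at about 13.65 GFLOP/s at 105 MS/s. These figures exclude windowing, the real-FFT post-processing, and the threshold step.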
Summary
• Adapting a library with a similar API to a new architecture makes porting applications efficient.
• The hardware footprint (in 6U slots) is comparable to that of the FPGA implementation.
• The small size of the SPE local store is a major factor in whether an application ports easily or requires additional work.
• Mercury is fully aware of the architecture and works to reduce code size while benefiting from the Cell BE's large I/O bandwidth and fast processing.