Application Framework for Computer Vision
The PALLAS Group

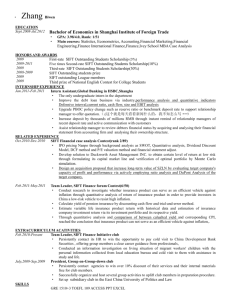


The reason we are here

We want a programming environment that simultaneously enables highly efficient implementation on parallel processors and allows natural expression of computer vision algorithms. What do these programs look like? Hypothesis: they are mostly composed from a collection of pre-existing algorithms.

Hypothesis: Assembly of Parts

Computer vision systems are assembled from a set of pre-existing components, even when part of a system is entirely novel. The vision and learning communities have produced a large toolbox, along with standard mathematical tools. Nothing stands in complete isolation. How often is an algorithm developed, deemed useful, and then never used again? When it is used again, how similar is the implementation?

Example: Recognition via Poselets

[Figure: pipeline diagram starting from an Image. Stages shown: HOG Feature Extraction, Linear SVM Classifiers, Mean-Shift Clustering, Agglomerative Clustering. Sub-steps shown: Gradients, Histogram, SGEMM, Threshold, MS-Vector, Distances, Reduction, Update, Merge Clusters.]

Every component existed previously. The novelty is in the composition.

Example: gPb Contour Detector

[Figure: pipeline diagram from an Image to Contours. Components shown: Convert Colorspace, Filter bank Convolution, Integral Images, Gradients, Histogram, K-means, SGEMM, Intervening Contour, Generalized Eigensolver, SpMV, BLAS1, Form Eigenvectors, Combine, Normalize, Relabel, Skeletonize, Non-max suppression.]

Bag-of-Words Object Recognition

[Figure: pipeline diagram. Inputs: Training Set and Test Image. Components shown: Harris-Affine Regions, SIFT Descriptors, K-Means Clustering, Codebook, Per-Class Histograms, Nearest Neighbor, Naive Bayes. Output: Class Label.]

What Components?

Feature Detection / Extraction. Must have: SIFT, SURF, HOG, Pb, MSER. Nice to have: Geometric Blur, Shape Context, Harris-Laplace, Harris-Affine, ...

Clustering: Spectral, Mean-Shift, Agglomerative, K-means, GMM.

Classification. Must have: Nearest Neighbor, Naive Bayes, Logistic Regression, SVM, HMM. Nice to have: Gaussian Process, Decision Trees, RVMs, AdaBoost, ...

Distance Functions / Kernels: Manhattan, Euclidean, Mahalanobis, L-infinity; Polynomial, RBF, Chi^2.

Definitions

A pattern is a solution to a recurring problem, described in such a way that the solution may be uniquely applied to a wide variety of related problems.

A software architecture is a hierarchical composition of patterns describing the implementation of a piece of software.

A framework is a software environment in which user customization may only be in harmony with the underlying architecture.

More Definitions

Library: a software implementation of a computational pattern (e.g., BLAS) or of a particular sub-problem (e.g., matrix multiply).

Domain-specific language: a programming language (e.g., Matlab) that provides constructs specifically supporting a particular application domain. The language may also supply library support for common computations in that domain (e.g., BLAS). If the language is restricted to maintain fidelity to a structure and provides library support for common computations, then it encompasses a framework (e.g., NPClick). The language is necessarily Turing complete, i.e., it can compute the partial recursive functions.

What is the environment?

Proposal 1: A Mex library of fast, parallelized components.

Proposal 2: Fixed-architecture frameworks with pre-fab parallelized components.

Proposal 1: Mex Functions

Status quo: we parallelize functions a la carte. The result is very difficult to modify (X,000 lines of CUDA code). It is very fast, but only as flexible as a C++ library. It can still be integrated into Matlab scripts, but only the pre-parallelized functionality is fast.
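The distance functions and kernels listed under "What Components?" are typical of the small, reusable components such a library would expose. The following is a minimal sketch in plain Python for illustration only, not the group's CUDA implementation. The function names, the parameters `inv_cov`, `gamma`, `c` and `degree`, and the halved chi-squared convention (other normalizations are also in use) are assumptions.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def manhattan(x: Vector, y: Vector) -> float:
    """L1 distance: sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(x, y))


def euclidean(x: Vector, y: Vector) -> float:
    """L2 distance."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def l_infinity(x: Vector, y: Vector) -> float:
    """Largest absolute coordinate difference."""
    return max(abs(a - b) for a, b in zip(x, y))


def mahalanobis(x: Vector, y: Vector, inv_cov: Sequence[Vector]) -> float:
    """sqrt(d^T S^-1 d) with d = x - y; the inverse covariance S^-1 is supplied by the caller."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    quad = sum(d[i] * inv_cov[i][j] * d[j] for i in range(n) for j in range(n))
    return math.sqrt(quad)


def polynomial_kernel(x: Vector, y: Vector, c: float = 1.0, degree: int = 2) -> float:
    """(x . y + c)^degree."""
    return (sum(a * b for a, b in zip(x, y)) + c) ** degree


def rbf_kernel(x: Vector, y: Vector, gamma: float = 1.0) -> float:
    """exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def chi2_distance(x: Vector, y: Vector) -> float:
    """0.5 * sum (x_i - y_i)^2 / (x_i + y_i) for histograms with non-negative bins.

    Bins with a zero denominator (both entries zero) are skipped.
    """
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(x, y) if a + b > 0)


if __name__ == "__main__":
    print(euclidean([0, 0], [3, 4]))  # prints 5.0
```

Every function takes `(x, y)` first and gives its extra parameters defaults, so a component such as a nearest-neighbor classifier could accept any of them interchangeably as an argument.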
Fixed-Architecture Frameworks

Example: an extract-classify framework. It is customizable only via checkboxes and by filling in callback functions, and it includes a library of fast, parallel features and classifiers. It is useful primarily for application development and for building systems to test the algorithms you develop. There is potential for inter-block parallelization.

[Figure: extract-classify framework diagram. Inputs: Training Set and Test Image. Feature Extraction options shown: Diff. of Gaussian, Det. of Hessian, MSER, SURF, SIFT, Geometric Blur; also Training Features and Quantize. Classification options shown: Logistic Regression, Naive Bayes, Linear SVM. Output: Object Label.]

The Challenge

You want to implement fundamentally new approaches, and we can't provide a library that implements your next algorithm. Neither the "Framework" nor the "Scripting Language" approach to composition allows a vision researcher to produce a parallel implementation of a new component. For example, the Max-Margin Hough Transform is brand new: with us in the loop, there is a multi-month delay from the idea's inception to a parallel implementation.

Domain-Specific Language

Fine-grained components are integrated as intrinsics, accessed via function-call syntax. Some intrinsics (e.g., conjugate gradient) are library calls; others guide implementation and optimization. The particular nesting and computation of components determine the decisions made by a code generator.

Scripts vs Framework vs DSL

Framework: enables assembling an application from pre-implemented parts; provides access to a fast library of commonly used vision and learning algorithms; potentially "easy" inter-block parallelization.

Scripting language: also allows assembly from parts; provides no ease of inter-block parallelization; still provides access to fast libraries.

DSL: potentially allows implementation of new functionality by composing fine-grained components; a code generator / JIT produces a parallel implementation; enables extensibility of the framework; the framework can provide guarantees about execution safety.

The End

What is the environment?

Proposal 1: A Mex library of fast, parallelized components.

Proposal 2: Fixed-architecture frameworks with pre-fab parallelized components.

Proposal 3: A domain-specific language with intrinsic-level integration of components.
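The extract-classify framework fixes the structure of the pipeline and leaves users only callbacks to fill in. A minimal sketch of that fixed-architecture idea in Python follows, under stated assumptions: `ExtractClassify`, `intensity_histogram` (a toy stand-in for descriptors such as SIFT or SURF) and `train_nearest_neighbor` are hypothetical names, not part of any PALLAS API, and nothing here is actually parallelized.

```python
from typing import Callable, List, Optional, Tuple

Image = List[List[float]]  # assumption: grayscale image, pixel values in [0, 1]
Feature = List[float]
Extractor = Callable[[Image], Feature]
Classifier = Callable[[Feature], str]
Trainer = Callable[[List[Tuple[Feature, str]]], Classifier]


def intensity_histogram(image: Image, bins: int = 4) -> Feature:
    """Hypothetical feature callback: normalized intensity histogram."""
    counts = [0.0] * bins
    pixels = [p for row in image for p in row]
    for p in pixels:
        counts[min(int(p * bins), bins - 1)] += 1  # clamp p == 1.0 into the last bin
    return [c / len(pixels) for c in counts]


def train_nearest_neighbor(examples: List[Tuple[Feature, str]]) -> Classifier:
    """Hypothetical classifier callback: label of the closest example (squared L2)."""
    def classify(f: Feature) -> str:
        def dist(g: Feature) -> float:
            return sum((a - b) ** 2 for a, b in zip(f, g))
        return min(examples, key=lambda ex: dist(ex[0]))[1]
    return classify


class ExtractClassify:
    """Fixed architecture: extract features, train, classify.

    Users swap in the two callbacks but cannot change the structure.
    """

    def __init__(self, extract: Extractor, train: Trainer) -> None:
        self.extract = extract
        self.train = train
        self.classifier: Optional[Classifier] = None

    def fit(self, images: List[Image], labels: List[str]) -> "ExtractClassify":
        # Each image is processed independently, so this loop is a
        # natural candidate for data-parallel execution.
        feats = [self.extract(img) for img in images]
        self.classifier = self.train(list(zip(feats, labels)))
        return self

    def predict(self, image: Image) -> str:
        if self.classifier is None:
            raise RuntimeError("call fit() before predict()")
        return self.classifier(self.extract(image))


if __name__ == "__main__":
    dark = [[0.1, 0.2], [0.1, 0.0]]
    bright = [[0.9, 0.8], [0.95, 0.7]]
    pipe = ExtractClassify(intensity_histogram, train_nearest_neighbor)
    pipe.fit([dark, bright], ["dark", "bright"])
    print(pipe.predict([[0.05, 0.15], [0.2, 0.1]]))  # prints "dark"
```

Because the pipeline shape is fixed, a framework built this way knows in advance which stages exist and how data flows between them. That is what makes pre-parallelized components and inter-block scheduling possible, and also why a genuinely new component cannot be expressed without stepping outside the framework.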