Name: Problem:

advertisement

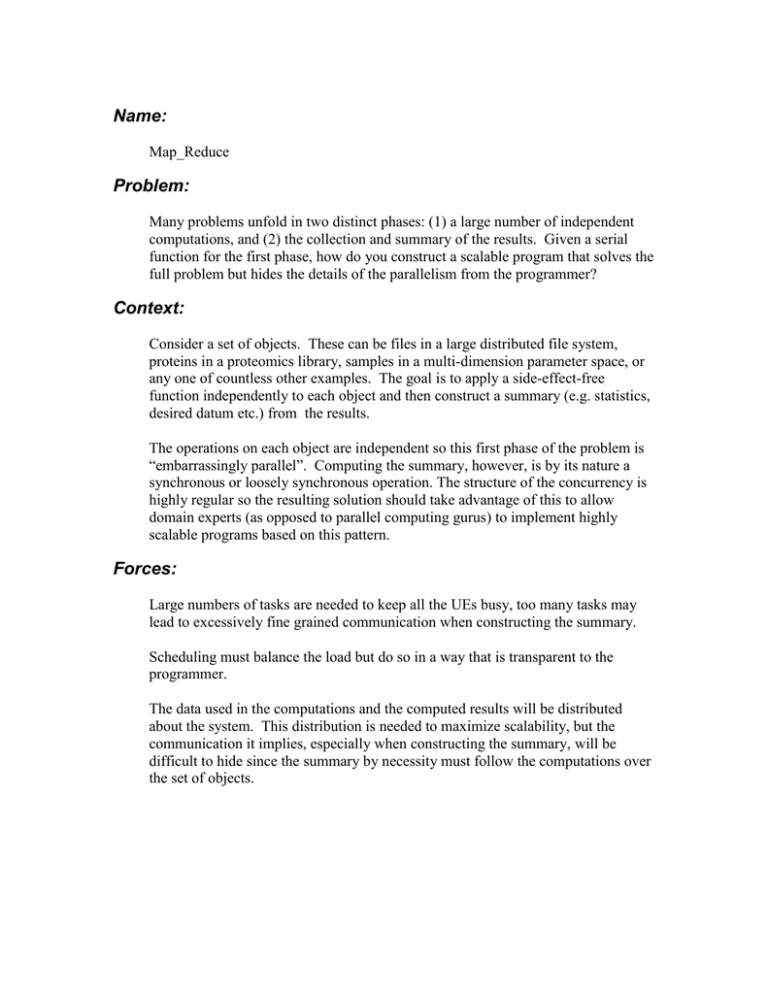

Name: Map_Reduce Problem: Many problems unfold in two distinct phases: (1) a large number of independent computations, and (2) the collection and summary of the results. Given a serial function for the first phase, how do you construct a scalable program that solves the full problem but hides the details of the parallelism from the programmer? Context: Consider a set of objects. These can be files in a large distributed file system, proteins in a proteomics library, samples in a multi-dimension parameter space, or any one of countless other examples. The goal is to apply a side-effect-free function independently to each object and then construct a summary (e.g. statistics, desired datum etc.) from the results. The operations on each object are independent so this first phase of the problem is “embarrassingly parallel”. Computing the summary, however, is by its nature a synchronous or loosely synchronous operation. The structure of the concurrency is highly regular so the resulting solution should take advantage of this to allow domain experts (as opposed to parallel computing gurus) to implement highly scalable programs based on this pattern. Forces: Large numbers of tasks are needed to keep all the UEs busy, too many tasks may lead to excessively fine grained communication when constructing the summary. Scheduling must balance the load but do so in a way that is transparent to the programmer. The data used in the computations and the computed results will be distributed about the system. This distribution is needed to maximize scalability, but the communication it implies, especially when constructing the summary, will be difficult to hide since the summary by necessity must follow the computations over the set of objects. Solution: General Structure: The map reduce problem solves this problem in two distinct phases. The first is a classic instance of the embarrassingly parallel problem (see the PLPP pattern, Task_parallelism) where the provided function is applied independently to each object. The second phase uses a collective communication pattern to collect and summarize the results (the PLPP pattern, reduction). The software architecture is logically based on the master-worker pattern. It is defined in terms of the following components: A manager to generate and manage a set of tasks Workers to apply the “map function” to each object. A repository to hold the results of each application of the map function Workers to carry out the reductions across the contents of the repository. The domain expert, with limited knowledge of parallel computing, just has to define the map function and a reduction function. If the reduction is a simple associative operation, the potential scalability can be much higher. The parallel programming expert implementing this solution must carefully balance the forces exposed by this solution. We will consider these for each of the mapreduce components: Implementation Details The master must issue tasks using a strategy that transparently supports good load balancing. This can be done through a task queue with dynamic access by each worker as they need work. In the general case, this can create large scheduling overhead. If the cost of computing the tasks are similar across the full set of if they can be ordered from large time to small time, a static round-robin schedule may be adequate. The workers are the simplest to implement. They fetch tasks from the master, compute the results which are placed in the central repository. The programmer must assure that they terminate correctly once the set of tasks are complete, but otherwise a simple event loop is sufficient. The repository will be written by many workers concurrently. A concurrent data structure that allows race-free access by multiple writers should be used. Alternatively, results can be stored locally by each worker. In this case, the workers can, upon receipt of a termination message, carry out a local reduction and then cooperatively complete the global reduction. The reduction is fundamentally a concurrent operation with communication costs that are difficult to hide. Scalable reduction algorithms (see the PLPP pattern, reduction) are crucial to use. Note that this problem has a highly regular structure. The manger, workers and reductions can all be incorporated into a framework. In this case, the domain expert programmer only needs provide the map and reduction functions. Invariant: Pre-condition: A set of objects, a side-effect free map function, and an associative reduction function Invariant: map functions are computed correctly. Reduce functions produce the same result independent of the order of reduction, i.e., they obey a sequential semantics. Post-condition: object set unchanged Examples: The biological sciences have become a data centric field. Data bases listing an organism’s full compliment of genes or proteins exist for many interesting systems. One use of these data systems is to find new drug targets. A relevant metric of the bimolecular system is computed for each combination of members from a ligand library (ligands are small molecules that bind with proteins) with each member of a protein. The results are combined to summarize trends and find the subset of results with the desired physical characteristics. This is a classic map_reduce problem. A manager generates every possible combination from two data bases and placed them into a logical task queue. A collection of workers processes the queue and returns results to a central repository. This continues until the desired targets are found. This solution has been used on clusters and large NUMA machines. A particularly interesting application occurred with the “cure@home” program where drug targets for Alzheimer’s disease were studied using this basic approach. Known Uses: The most famous uses of this pattern are the applications of the map-reduce framework used at Google (Dean and Ghemawat, “Map-Reduce: Simplified Data Processing on large clusters” OSDI’04). This pattern, however, is used in a wide range of “parameter sweep” problems in computational biology, financial analytics, operations research, and applications too numerous to list. Related Patterns: The following PLPP patterns are related to Map_Reduce Task parallelism Master worker Reduction Shared Queue Author Tim Mattson