DATA ANALYSIS Module Code: CA660 Lecture Block 7:Non-parametrics

WHAT ABOUT NON-PARAMETRICS?

How Useful are ‘Small Data’?

• General points

- No clear theoretical probability distribution, so empirical distributions needed

- So, less knowledge of form of data* e.g. ranks instead of values

- Quick and dirty

- Need not focus on parameter estimation or testing; when this is done, it is frequently based on “less-good” parameters/estimators, e.g. medians; otherwise test “properties”, e.g. randomness, symmetry, quality etc.

- Weaker assumptions, implicit in *

- Smaller sample sizes ‘typical’

- Different data, implicit from other points. Levels of Measurement: Nominal, Ordinal typical for non-parametric/distribution-free methods


ADVANTAGES/DISADVANTAGES

Advantages

- Power may be better using N-P, if assumptions weaker

- Smaller samples and less work etc. – as stated

Disadvantages - also implicit from earlier points, specifically:

- Loss of information/power etc. when we do know more about the data, i.e. when assumptions do apply

- Separate tables for each test

General bases/principles:

- Binomial: cumulative tables; Ordinal data

- Normal: large samples

- Kolmogorov-Smirnov for Empirical Distributions: shift in Median/Shape

- Confidence Intervals: more work to establish. Use Confidence Regions and Tolerance Intervals

- Errors: Type I, Type II. Power as usual.

- Relative Efficiency: asymptotic, e.g. look at ratio of sample sizes needed to achieve same power


STARTING SIMPLY: - THE ‘SIGN TEST’

• Example. Suppose want to test if weights of a certain item likely to be

more or less than 220 g.

From 12 measurements, selected at random, count how many above,

how many below. Obtain 9(+), 3(-)

• Null Hypothesis : H0: Median = 220. “Test” on basis of counts of

signs.

• Binomial situation, n=12, p=0.5.

For this distribution

P{3 ≤ X ≤ 9} = 0.962 while P{X ≤ 2 or X ≥ 10} = 1 - 0.962 = 0.038

The observed count of 9 lies outside the critical region {X ≤ 2 or X ≥ 10}, so the result is not strongly significant.

• Notes: Need not be Median as “Location of test”

(Describe distributions by Location, dispersion, shape). Location =

median, “quartile” or other percentile.

Many variants of Sign Test - including e.g. runs of + and - signs for

“randomness”
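The binomial calculation behind the sign test can be checked with a short sketch. It assumes the slide's counts (12 measurements, 9 above 220 g) and a two-sided test; the helper name `binom_pmf` is illustrative only.

```python
from math import comb

def binom_pmf(k, n, p=0.5):
    """P{X = k} for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n = 12       # number of measurements in the example
n_plus = 9   # measurements above the hypothesised median of 220 g

# Size of the two-sided critical region {X <= 2 or X >= 10}
region = list(range(0, 3)) + list(range(10, n + 1))
critical_size = sum(binom_pmf(k, n) for k in region)

# Two-sided p-value for the observed split: double the upper tail
k_extreme = max(n_plus, n - n_plus)
p_value = min(1.0, 2 * sum(binom_pmf(k, n) for k in range(k_extreme, n + 1)))

print(round(critical_size, 4), round(p_value, 4))
```

The critical region has size about 0.039, and the observed 9(+), 3(-) split gives a two-sided p-value of about 0.146, in line with "not strongly significant".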


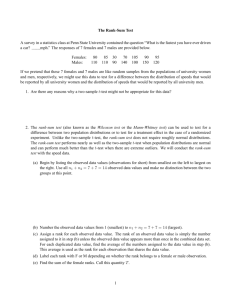

PERMUTATION/RANDOMIZATION TESTS

• Example: Suppose have 8 subjects, 4 to be selected at random for new training. All 8 ranked in order of level of ability after a given period, ranking from 1 (best) to 8 (worst).

P{subjects ranked 1,2,3,4 took new training} = ??

• Clearly any 4 subjects could be chosen. Select r = 4 units from n = 8: $\binom{n}{r} = \binom{8}{4} = 70$ ways

• If new scheme ineffective, sets of ranks equally likely: P{1,2,3,4} = 1/70

• More formally, Sum ranks in each grouping. Low sums indicate that the

training is effective, High sums that it is not.

Sums 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26

No. 1 1 2 3 5 5 7 7 8 7 7 5 5 3 2 1 1

• Critical Region size 2/70 given by rank sums 10 and 11 while

size 4/70 from rank sums 10, 11, 12 (both “Nominal” 5%)

• Testing H0: new training scheme no improvement vs H1: some improvement
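The rank-sum distribution for this permutation test is small enough to enumerate directly. The following sketch lists all ways of choosing 4 ranks from 8 and tallies the sums; the names `sums` and `lower_tail` are illustrative.

```python
from collections import Counter
from itertools import combinations

# Every possible set of 4 ranks out of 1..8 is equally likely under H0
sums = Counter(sum(group) for group in combinations(range(1, 9), 4))
total = sum(sums.values())

def lower_tail(threshold):
    """P{rank sum <= threshold} under H0 (low sums favour the new training)."""
    return sum(count for s, count in sums.items() if s <= threshold) / total

for s in sorted(sums):
    print(s, sums[s])
print(total, lower_tail(11), lower_tail(12))
```

The counts are symmetric about 18 and add to 70, and the lower tails at 11 and 12 reproduce the critical region sizes 2/70 and 4/70.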


MORE INFORMATION

WILCOXON ‘SIGNED RANK’

• Direction and Magnitude: H0: Median = 220? Assumes symmetry

• Arrange all sample deviations from median in order of magnitude and replace by ranks (1 = smallest deviation, n = largest). A high sum for positive (or negative) ranks, relative to the other, makes H0 unlikely.

Weights 126 142 156 228 245 246 370 419 433 454 478 503

Diffs. -94 -78 -64 8 25 26 150 199 213 234 258 283

Rearrange 8 25 26 -64 -78 -94 150 199 213 234 258 283

Signed ranks 1 2 3 -4 -5 -6 7 8 9 10 11 12

Clearly $S_{negative}$ = 15 and < $S_{positive}$ (= 63)

Tables of form: Reject H0 if lower of $S_{negative}$, $S_{positive}$ ≤ tabled value

e.g. here, n=12 at α = 5% level, tabled value = 13, so do not reject H0
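The signed-rank sums for the weights example can be reproduced with a minimal sketch. The `midranks` helper is an illustrative name; it assigns average ranks to ties, although this data set has none.

```python
weights = [126, 142, 156, 228, 245, 246, 370, 419, 433, 454, 478, 503]
h0_median = 220

def midranks(values):
    """Ranks 1..n in ascending order; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

# Deviations from the hypothesised median (zero deviations would be dropped)
diffs = [w - h0_median for w in weights if w != h0_median]
ranks = midranks([abs(d) for d in diffs])

s_positive = sum(r for d, r in zip(diffs, ranks) if d > 0)
s_negative = sum(r for d, r in zip(diffs, ranks) if d < 0)
print(s_negative, s_positive)
```

The smaller sum (15) is then compared with the tabled value for n = 12.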


LARGE SAMPLES and C.I.

• Normal Approximation for S, the smaller in magnitude of the rank sums:

$$Z \ (\text{or } U,\ \text{S.N.D.}) = \frac{\text{Obs} - \text{Exp}}{SE} = \frac{S + \tfrac{1}{2} - \tfrac{1}{4}n(n+1)}{\sqrt{n(n+1)(2n+1)/24}} \sim N(0,1)$$

so C.I. as usual
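As a rough illustration of the Normal approximation, the sketch below applies it to the earlier example (S = 15, n = 12). It assumes a continuity correction of +1/2 on the smaller rank sum; n = 12 is hardly a large sample, so the result is indicative only. The function name `signed_rank_z` is illustrative.

```python
from math import sqrt
from statistics import NormalDist

def signed_rank_z(s, n):
    """Standardised deviate for the smaller signed-rank sum s from n pairs."""
    expected = n * (n + 1) / 4
    se = sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (s + 0.5 - expected) / se   # +0.5 is the assumed continuity correction

z = signed_rank_z(15, 12)
# s is the smaller sum, so z is normally negative; double the lower tail
p_two_sided = min(1.0, 2 * NormalDist().cdf(z))
print(round(z, 3), round(p_two_sided, 3))
```

The approximate two-sided p-value is a little above 5%, which agrees with the table-based decision not to reject H0.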

• General for C.I. Basic idea is to take pairs of observations, calculate

mean and omit largest / smallest of (1/2)(n)(n+1) pairs. Usually,

computer-based - re-sampling or graphical techniques.

• Alternative Forms - common for non-parametrics

e.g. for Wilcoxon Signed Ranks. Use $W = |S_p - S_n|$ = magnitude of difference between positive/negative rank sums. Different Table

Ties - complicate distributions and significance. Assign mid-ranks
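The pairwise-mean idea for a confidence interval can be sketched as follows. This assumes the usual convention that, with tabled value k, the k smallest and k largest of the n(n+1)/2 pairwise means are omitted; k = 13 is the tabled value quoted earlier for n = 12 at the 5% level. Variable names are illustrative.

```python
from itertools import combinations_with_replacement
from statistics import median

weights = [126, 142, 156, 228, 245, 246, 370, 419, 433, 454, 478, 503]

# All n(n+1)/2 pairwise means, each observation also paired with itself
pair_means = sorted((a + b) / 2
                    for a, b in combinations_with_replacement(weights, 2))

k = 13                                    # tabled value (assumed cut-off count)
point_estimate = median(pair_means)       # median of the pairwise means
interval = (pair_means[k], pair_means[-(k + 1)])  # (k+1)th smallest and largest

print(len(pair_means), point_estimate, interval)
```

For larger samples this enumeration grows quadratically, which is one reason the slide points to computer-based or graphical methods.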


KOLMOGOROV-SMIRNOV and EMPIRICAL

DISTRIBUTIONS

• Purpose - to compare set of measurements (two groups with each other) or

one group with expected - to analyse differences.

• Cannot assume Normality of underlying distribution (usual shape), so need enough sample values to base comparison on (e.g. ≥ 4, 2 groups)

• Major features - sensitivity to differences in both shape and location of

Medians: (does not distinguish which is different)

• Empirical c.d.f. not p.d.f. - looks for consistency by comparing popn. curve

(expected case) with empirical curve (sample values)

E.D.F. is a step fn., i.e. value at each step from data:

$$S(x) = \frac{\text{No. sample values} \le x}{n}$$

S(x) should never be too far from F(x) = “expected” form

• Test Basis is

$$\text{Max. Diff.} = \max_i \left| F(x_i) - S(x_i) \right|$$
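A one-sample version of the maximum-difference statistic can be sketched as below. Because S(x) is a step function, the sketch checks the gap on both sides of each step, which is the usual refinement of evaluating only at the sample points. The uniform "expected" distribution on (0, 600) is purely a hypothetical choice for illustration, not something taken from the lecture.

```python
def ks_statistic(sample, cdf):
    """Largest vertical gap between the E.D.F. of sample and the expected cdf."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = cdf(x)
        # E.D.F. jumps from (i-1)/n to i/n at x, so check both sides of the step
        d = max(d, abs(i / n - f), abs(f - (i - 1) / n))
    return d

def uniform_cdf(x, lo=0.0, hi=600.0):
    """Hypothetical expected distribution: uniform on (lo, hi)."""
    return min(1.0, max(0.0, (x - lo) / (hi - lo)))

weights = [126, 142, 156, 228, 245, 246, 370, 419, 433, 454, 478, 503]
d_max = ks_statistic(weights, uniform_cdf)
print(round(d_max, 4))
```

The resulting value would then be compared with the tabled Kolmogorov-Smirnov critical value for n = 12.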


Criticisms/Comparison K-S with other ($\chi^2$) Goodness of Fit Tests for distributions

Main Criticism of Kolmogorov-Smirnov:

- wastes information in using only differences of greatest magnitude; (in

cumulative form)

General Advantages/Disadvantages K-S

- easy to apply

- relatively easy to obtain C.I.

- generally deals well with continuous data. Discrete data also possible,

but test criteria not exact, so can be inefficient.

- For two groups, need same number of observations

- distinction between location/shape differences not established

Note: $\chi^2$ applies to both discrete and continuous data, and to grouped data, but “arbitrary” grouping can be a problem. Affects sensitivity of H0 rejection.


COMPARISON 2 INDEPENDENT SAMPLES:

WILCOXON-MANN-WHITNEY

• Parallel with parametric (classical) again. H0 : Samples from same

population (Medians same) vs H1 : Medians not the same

• For two samples, size m, n, calculate joint ranking and Sum for each

sample, giving Sm and Sn . Should be similar if populations sampled are

also similar.

• $S_m + S_n$ = sum of all ranks = $\tfrac{1}{2}(m+n)(m+n+1)$ and result tabulated for

$$U_m = S_m - \tfrac{1}{2}m(m+1), \qquad U_n = S_n - \tfrac{1}{2}n(n+1)$$

• Clearly, $U_m = mn - U_n$ so need only calculate one from 1st principles

• Tables typically give, for various m, n, the value that the smallest U must not exceed in order to reject H0. 1-tailed/2-tailed.

• Easier : use the sum of smaller ranks or fewer values.

• Example in brief: If sum of ranks =12 say, probability based on no.

possible ways of obtaining a 12 out of Total no. of possible sums


Example - W-M-W

• For example on weights earlier. Assume now have 2nd sample set also:

29 39 60 78 82 112 125 170 192 224 263 275 276 286 369 756

• Combined ranks for the two samples are:

Value 29 39 60 78 82 112 125 126 142 156 170 192 224 228 245

Rank 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

Value 246 263 275 276 286 369 370 419 433 454 478 503 756

Rank 16 17 18 19 20 21 22 23 24 25 26 27 28

Here m = 16, n=12 and Sm= 1+ 2+ 3 + ….+21+ 28 = 187

So Um=51, and Un=141. (Clearly, can check by calculating Un directly also)

For a 2-tailed test at 5% level, the tabled value of U is 53 and our value (Um = 51) is less, i.e. more extreme, so reject H0. Medians are different here
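The rank sums and U values in this example can be recomputed with a short sketch. It relies on the fact that the two samples contain no tied values, so a plain joint ranking suffices; variable names are illustrative.

```python
sample_n = [126, 142, 156, 228, 245, 246, 370, 419, 433, 454, 478, 503]
sample_m = [29, 39, 60, 78, 82, 112, 125, 170, 192, 224,
            263, 275, 276, 286, 369, 756]

# Joint ranking of all m + n values (no ties in this data set)
combined = sorted(sample_m + sample_n)
rank_of = {value: i + 1 for i, value in enumerate(combined)}

m, n = len(sample_m), len(sample_n)
s_m = sum(rank_of[v] for v in sample_m)
s_n = sum(rank_of[v] for v in sample_n)

u_m = s_m - m * (m + 1) // 2
u_n = s_n - n * (n + 1) // 2
print(s_m, s_n, u_m, u_n, u_m + u_n == m * n)
```

The smaller of the two U values is the one compared with the tabled value.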


MANY SAMPLES - Kruskal-Wallis

• Direct extension of W-M-W. Tests: H0: Medians are the same.

• Rank total number of observations for all samples from smallest (rank 1)

to highest (rank N) for N values. Ties given mid-rank.

• rij is rank of observation xij and si = sum of ranks in ith sample (group)

• Compute treatment and total SSQ ranks - uncorrected given as

$$S_t^2 = \sum_i \frac{s_i^2}{n_i}, \qquad S_r^2 = \sum_{i,j} r_{ij}^2$$

• For no ties, this simplifies: $S_r^2 = N(N+1)(2N+1)/6$

• Subtract off correction for average for each, given by $C = \tfrac{1}{4}N(N+1)^2$

• Test Statistic

$$T = \frac{(N-1)\,[S_t^2 - C]}{S_r^2 - C} \sim \chi^2_{t-1}$$

i.e. approx. $\chi^2$ for moderate/large N. Simplifies further if no ties.
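The Kruskal-Wallis statistic in the rank sum-of-squares form can be sketched as follows. The three small groups are hypothetical data chosen only to exercise the function; `midranks` and `kruskal_wallis` are illustrative names.

```python
def midranks(values):
    """Ranks 1..n in ascending order; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def kruskal_wallis(groups):
    """T = (N-1)(St2 - C)/(Sr2 - C), computed from joint mid-ranks."""
    pooled = [x for g in groups for x in g]
    ranks = midranks(pooled)
    big_n = len(pooled)
    s_t2, start = 0.0, 0
    for g in groups:
        s_i = sum(ranks[start:start + len(g)])   # rank sum for this group
        s_t2 += s_i ** 2 / len(g)
        start += len(g)
    s_r2 = sum(r * r for r in ranks)
    c = big_n * (big_n + 1) ** 2 / 4
    return (big_n - 1) * (s_t2 - c) / (s_r2 - c)

groups = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]       # hypothetical data
print(round(kruskal_wallis(groups), 3))
```

With no ties this agrees with the familiar form 12/(N(N+1)) Σ s_i²/n_i − 3(N+1); the value is referred to χ² with (number of groups − 1) degrees of freedom.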


PAIRING/RANDOMIZED BLOCKS - Friedman

• Blocks of units, so e.g. two treatments allocated at random within block =

matched pairs; can use a variant of sign test (on differences)

• Many samples or units = Friedman (simplest case of R.B. design)

• Recall comparisons within pairs/blocks more precise than between, so

including Blocks term, “removes” block effect as source of variation.

• Friedman’s test- replaces observations by ranks (within blocks) to achieve

this. (Thus, ranked data can also be used directly).

Have $x_{ij}$ = response. Treatment i (i=1,2..t) in each block j (j=1,2...b)

Ranked within blocks

Sum of ranks obtained for each treatment: $s_i$, i=1,…t

For rank $r_{ij}$ (or mid-rank if tied), raw (uncorrected) rank SSQ is $S_r^2 = \sum_{i,j} r_{ij}^2$


Friedman contd.

• With no ties, the analysis simplifies

• Need also SSQ for treatments (all treatments appear in blocks)

$$S_t^2 = \sum_i \frac{s_i^2}{b}$$

• Again, the correction factor analogous to that for Kruskal-Wallis

$$C = \tfrac{1}{4}\,b\,t\,(t+1)^2$$

• and common form of Friedman Test Statistic

$$T = \frac{b(t-1)\,(S_t^2 - C)}{S_r^2 - C} \sim \chi^2_{t-1}$$

for t, b not very small, otherwise need exact tables.
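A minimal sketch of the Friedman statistic, ranking within blocks and using the same sum-of-squares form. The block data are hypothetical, and `midranks` and `friedman` are illustrative names.

```python
def midranks(values):
    """Ranks 1..n in ascending order; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def friedman(blocks):
    """blocks[j][i] is the response to treatment i in block j."""
    b, t = len(blocks), len(blocks[0])
    ranked = [midranks(row) for row in blocks]           # rank within each block
    s = [sum(row[i] for row in ranked) for i in range(t)]
    s_t2 = sum(s_i ** 2 for s_i in s) / b
    s_r2 = sum(r * r for row in ranked for r in row)
    c = b * t * (t + 1) ** 2 / 4
    return b * (t - 1) * (s_t2 - c) / (s_r2 - c)

blocks = [[1, 2, 3], [10, 20, 30], [5, 6, 7]]            # hypothetical data
print(friedman(blocks))
```

Here every block orders the three treatments identically, so the statistic takes its largest possible value for b = 3, t = 3. The denominator is zero if every block is completely tied, a case this sketch does not handle.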


Other Parallels with Parametric cases

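• Correlation - Spearman’s Rho (Pearson’s P-M calculated using ranks or mid-ranks)

$$\rho = \frac{\sum_i r_i s_i - C}{\sqrt{\left(\sum_i r_i^2 - C\right)\left(\sum_i s_i^2 - C\right)}}, \qquad \text{where } C = \tfrac{1}{4}\,n(n+1)^2$$

used to compare e.g. ranks on two assessments/tests.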

• Regression – LSE robust in general. Some use of “median

methods”, such as Theil’s (not dealt with here, so assume usual

least squares form).
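Spearman's rho in the rank form, with C = n(n+1)²/4 as the correction term, can be sketched as below. The scores are hypothetical, and `midranks` and `spearman_rho` are illustrative names.

```python
from math import sqrt

def midranks(values):
    """Ranks 1..n in ascending order; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Pearson product-moment correlation applied to (mid-)ranks."""
    r, s = midranks(x), midranks(y)
    n = len(x)
    c = n * (n + 1) ** 2 / 4
    numerator = sum(a * b for a, b in zip(r, s)) - c
    denominator = sqrt((sum(a * a for a in r) - c) * (sum(b * b for b in s) - c))
    return numerator / denominator

test_a = [1, 2, 3, 4, 5]      # hypothetical ranks on a first assessment
test_b = [2, 1, 4, 3, 5]      # hypothetical ranks on a second assessment
print(spearman_rho(test_a, test_b))
```

With no ties this matches the shortcut 1 − 6Σd²/(n(n²−1)); here Σd² = 4 and n = 5 give 0.8.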


NON-PARAMETRIC C.I. in Informatics:

BOOTSTRAP

• Bootstrapping = re-sampling technique used to obtain Empirical

distribution for estimator in construction of non-parametric C.I.

- Effective when distribution unknown or complex

- More computation than parametric approaches and may fail when

sample size of original experiment is small

- Re-sampling implies sampling from a sample, usually to estimate empirical properties (such as variance, distribution, C.I. of an estimator) and to obtain EDF of a test statistic. Common methods are Bootstrap, Jackknife, shuffling

- Aim = approximate numerical solutions (like confidence regions). Can handle bias in this way, e.g. to find MLE of variance $\sigma^2$, mean unknown

- Both Bootstrap and Jackknife used, Bootstrap more often for C.I.


Bootstrap/Non-parametric C.I. contd.

Basis - both Bootstrap and others rely on fact that sample cumulative distn

fn. (CDF or just DF) = MLE of a population Distribution Fn. F(x)

Define Bootstrap sample as a random sample, size n, drawn with

replacement from a sample of n objects

For S the original sample, $S = (x_1, x_2, \ldots, x_n)$

P{drawing each item, object or group} = 1/n

Bootstrap sample $S^B$ obtained from original, s.t. sampling n times with replacement gives

$$S^B = (x_1^B, x_2^B, \ldots, x_n^B)$$

Power relies on the fact that a large number of resampling samples can be obtained from a single original sample, so if the process is repeated b times, obtain $S_j^B$, j=1,2,…,b, with each of these being a bootstrap replication


Contd.

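• Estimator - obtained from each sample. If $\hat\theta_j^B = F(S_j^B)$ is the estimate for the jth replication, then bootstrap mean and variance are

$$\bar\theta^B = \frac{1}{b}\sum_{j=1}^{b}\hat\theta_j^B, \qquad \hat V^B = \frac{1}{b-1}\sum_{j=1}^{b}\left(\hat\theta_j^B - \bar\theta^B\right)^2$$

while $\text{Bias}^B = \bar\theta^B - \hat\theta$

• CDF of Estimator = $P\{\hat\theta_b \le x\}$ for b replications

so C.I. with confidence coefficient $\alpha$ for some percentile is then

$$\left\{\text{CDF}^{-1}[0.5(1-\alpha)],\ \text{CDF}^{-1}[0.5(1+\alpha)]\right\}$$

• Normal Approx. for mean: Large b

$$\bar\theta^B \pm U_{(1-\alpha)/2}\sqrt{\hat V^B}, \qquad U \sim N(0,1) \ \text{(Standardised Normal Deviate)}$$

($t_{b-1}$ distribution if No. bootstrap replications small).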
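The bootstrap quantities above can be sketched in a few lines. This is a minimal illustration, assuming the median of the earlier weights sample as the estimator, b = 1000 replications, a fixed seed for repeatability, and a simple order-statistic rule for the percentiles; all function and variable names are illustrative.

```python
import random
from statistics import NormalDist, median

def bootstrap_replications(sample, estimator, b=1000, seed=0):
    """Estimator evaluated on b samples of size n drawn with replacement."""
    rng = random.Random(seed)
    n = len(sample)
    return [estimator([rng.choice(sample) for _ in range(n)]) for _ in range(b)]

def bootstrap_summary(reps, theta_hat, alpha=0.95):
    """Bootstrap mean, variance, bias, percentile C.I. and Normal C.I."""
    b = len(reps)
    mean_b = sum(reps) / b
    var_b = sum((r - mean_b) ** 2 for r in reps) / (b - 1)
    bias_b = mean_b - theta_hat
    ordered = sorted(reps)
    # Empirical inverse CDF at 0.5(1 - alpha) and 0.5(1 + alpha)
    lower = ordered[int(round(0.5 * (1 - alpha) * (b - 1)))]
    upper = ordered[int(round(0.5 * (1 + alpha) * (b - 1)))]
    u = NormalDist().inv_cdf(0.5 * (1 + alpha))
    half_width = u * var_b ** 0.5
    normal_ci = (mean_b - half_width, mean_b + half_width)
    return mean_b, var_b, bias_b, (lower, upper), normal_ci

weights = [126, 142, 156, 228, 245, 246, 370, 419, 433, 454, 478, 503]
reps = bootstrap_replications(weights, median)
mean_b, var_b, bias_b, percentile_ci, normal_ci = bootstrap_summary(
    reps, median(weights))
print(mean_b, var_b, bias_b, percentile_ci, normal_ci)
```

The percentile interval comes straight from the sorted replications, while the Normal interval uses the bootstrap mean and variance; the two need not agree closely when the sample is as small as this one.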


Example

• Recall (gene and marker) or (sex and purchasing) example

• MLE, 1000 bootstrapping replications might give results:

                             (estimator 1)     (estimator 2)
Parametric Variance          0.0001357         0.00099
95% C.I.                     (0, 0.0455)       (0.162, 0.286)
95% Interval (Likelihood)    (0.06, 0.056)     (0.17, 0.288)
Bootstrap Variance           0.0001666         0.0009025
Bias                         0.0000800         0.0020600
95% C.I. Normal              (0, 0.048)        (0.1675, 0.2853)
95% C.I. (Percentile)        (0, 0.054)        (0.1815, 0.2826)


SUMMARY: Non-Parametric Use

Simple property: Sign Tests – large number of variants. Simple basis

Paired data: Wilcoxon Signed Rank - Compare medians

Conditions/Assumptions - No. pairs ≥ 6; Distributions - Same shape

Independent data: Mann-Whitney U - Compare medians - 2 independent groups

Conditions/Assumptions - (N ≥ 4); Distributions same shape

Correlation – as before. Parallels parametric case.

Distributions: Kolmogorov-Smirnov - Compare either location, shape

Conditions/Assumptions - (N ≥ 4), but the two are not separately distinguished. If 2 groups compared, need equal no. observations

Many Group/Sample Comparisons:

Friedman – compare Medians. Conditions: Data in Randomised Block design. Distributions same shape.

Kruskal-Wallis – Independent groups. Conditions: Completely randomised. Groups can be unequal nos. Distributions same shape.

Regression – robust, as noted, so use parametric form.
