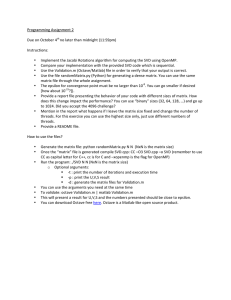

Structure from Motion (With a digression on SVD)


Structure from Motion

• For now, static scene and moving camera
  – Equivalently, rigidly moving scene and static camera
• Limiting case of stereo with many cameras
• Limiting case of multiview camera calibration with unknown target
• Given $n$ points and $N$ camera positions, have $2nN$ equations and $3n + 6N$ unknowns

Approaches

• Obtaining point correspondences
  – Optical flow
  – Stereo methods: correlation, feature matching
• Solving for points and camera motion
  – Nonlinear minimization (bundle adjustment)
  – Various approximations…

Orthographic Approximation

• Simplest SFM case: camera approximated by orthographic projection

[Figure: perspective vs. orthographic projection]

Weak Perspective

• An orthographic assumption is sometimes well approximated by a telephoto lens

[Figure: weak perspective projection]

Consequences of Orthographic Projection

• Scene can be recovered up to scale
• Translation perpendicular to image plane can never be recovered

Orthographic Structure from Motion

• Method due to Tomasi & Kanade, 1992
• Assume $n$ points in space $p_1 \ldots p_n$
• Observed at $N$ points in time at image coordinates $(x_{ij}, y_{ij})$
  – Feature tracking, optical flow, etc.
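
As a minimal sketch of this setup (not part of the original slides), the snippet below synthesizes tracks $(x_{ij}, y_{ij})$ by projecting $n$ random 3D points orthographically into $N$ frames, and counts equations against unknowns for the general problem. The helper names `random_rotation` and `orthographic_tracks`, the use of NumPy, and the random cameras are assumptions made for illustration; real tracks would come from feature tracking or optical flow.

```python
import numpy as np

def random_rotation(rng):
    """Random 3x3 rotation built from the QR decomposition of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))      # fix the column signs of the factorization
    if np.linalg.det(q) < 0:         # flip one axis so that det = +1
        q[:, 0] = -q[:, 0]
    return q

def orthographic_tracks(points, rotations, translations):
    """Project 3D points (3 x n) into N frames under orthographic projection.

    Rows 0 and 1 of each rotation play the role of the image axes i-hat and j-hat.
    Returns x, y of shape (N, n): x[i, j] is point j as seen in frame i.
    """
    x = np.array([R[0] @ points + t[0] for R, t in zip(rotations, translations)])
    y = np.array([R[1] @ points + t[1] for R, t in zip(rotations, translations)])
    return x, y

rng = np.random.default_rng(0)
n, N = 20, 8                                  # hypothetical problem size
points = rng.standard_normal((3, n))
rotations = [random_rotation(rng) for _ in range(N)]
translations = rng.standard_normal((N, 2))    # in-plane translation per frame
x, y = orthographic_tracks(points, rotations, translations)

num_equations = 2 * n * N                     # two image coordinates per observation
num_unknowns = 3 * n + 6 * N                  # 3D points plus 6-DOF camera poses
```

With these sizes there are 320 equations for 108 unknowns, so the problem is heavily overdetermined once enough points are tracked across enough frames.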

Orthographic Structure from Motion

• Write down matrix of data (one row per frame and coordinate, one column per point)

$$
D = \begin{bmatrix}
x_{11} & \cdots & x_{1n} \\
\vdots & & \vdots \\
x_{N1} & \cdots & x_{Nn} \\
y_{11} & \cdots & y_{1n} \\
\vdots & & \vdots \\
y_{N1} & \cdots & y_{Nn}
\end{bmatrix}
$$

• Step 1: find translation
• Translation parallel to viewing direction cannot be obtained
• Translation perpendicular to viewing direction equals motion of average position of all points
• Subtract average of each row

$$
\tilde{D} = \begin{bmatrix}
x_{11} - \bar{x}_1 & \cdots & x_{1n} - \bar{x}_1 \\
\vdots & & \vdots \\
x_{N1} - \bar{x}_N & \cdots & x_{Nn} - \bar{x}_N \\
y_{11} - \bar{y}_1 & \cdots & y_{1n} - \bar{y}_1 \\
\vdots & & \vdots \\
y_{N1} - \bar{y}_N & \cdots & y_{Nn} - \bar{y}_N
\end{bmatrix}
$$

• Step 2: try to find rotation
• Rotation at each frame defines local coordinate axes $\hat{i}$, $\hat{j}$, and $\hat{k}$
• Then

$$
\tilde{x}_{ij} = \hat{i}_i \cdot \tilde{p}_j, \qquad \tilde{y}_{ij} = \hat{j}_i \cdot \tilde{p}_j
$$

• So, can write $\tilde{D} = RS$ where $R$ is a “rotation” matrix and $S$ is a “shape” matrix

$$
R = \begin{bmatrix}
\hat{i}_1^T \\ \vdots \\ \hat{i}_N^T \\ \hat{j}_1^T \\ \vdots \\ \hat{j}_N^T
\end{bmatrix},
\qquad
S = \begin{bmatrix} \tilde{p}_1 & \cdots & \tilde{p}_n \end{bmatrix}
$$

• Goal is to factor $\tilde{D}$
• Before we do, observe that $\operatorname{rank}(\tilde{D}) = 3$ (in ideal case with no noise)
• Proof:
  – Rank of $R$ is 3 unless no rotation
  – Rank of $S$ is 3 iff have noncoplanar points
  – Product of 2 matrices of rank 3 has rank 3
• With noise, $\operatorname{rank}(\tilde{D})$ might be > 3

Digression: Singular Value Decomposition (SVD)

• Handy mathematical technique that has application to many problems
• Given any $m \times n$ matrix $A$, algorithm to find matrices $U$, $V$, and $W$ such that $A = U W V^T$
  – $U$ is $m \times n$ and orthonormal
  – $V$ is $n \times n$ and orthonormal
  – $W$ is $n \times n$ and diagonal

SVD

$$
A = U \begin{bmatrix}
w_1 & 0 & 0 \\
0 & \ddots & 0 \\
0 & 0 & w_n
\end{bmatrix} V^T
$$

• Treat as black box: code widely available (svd(A,0) in Matlab)
• The $w_i$ are called the singular values of $A$
• If $A$ is singular, some of the $w_i$ will be 0
• In general $\operatorname{rank}(A)$ = number of nonzero $w_i$
• SVD is mostly unique (up to permutation of singular values, or if some $w_i$ are equal)

SVD and Inverses

• Why is SVD so useful?
• Application #1: inverses
• $A^{-1} = (V^T)^{-1} W^{-1} U^{-1} = V W^{-1} U^T$
• This fails when some $w_i$ are 0
  – It’s supposed to fail: singular matrix
• Pseudoinverse: if $w_i = 0$, set $1/w_i$ to 0 (!)
  – “Closest” matrix to inverse
  – Defined for all (even non-square) matrices

SVD and Least Squares

• Solving $Ax = b$ by least squares
• $x$ = pseudoinverse($A$) times $b$
• Compute pseudoinverse using SVD
  – Lets you see if data is singular
  – Even if not singular, ratio of max to min singular values (condition number) tells you how stable the solution will be
  – Set $1/w_i$ to 0 if $w_i$ is small (even if not exactly 0)

SVD and Eigenvectors

• Let $A = U W V^T$, and let $x_i$ be the $i$th column of $V$
• Consider $A^T A x_i$:

$$
A^T A x_i = V W^T U^T U W V^T x_i = V W^2 V^T x_i
= V W^2 \begin{bmatrix} 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{bmatrix}
= V \begin{bmatrix} 0 \\ \vdots \\ w_i^2 \\ \vdots \\ 0 \end{bmatrix}
= w_i^2 x_i
$$

• So the squared elements of $W$ are the eigenvalues, and the columns of $V$ are the eigenvectors, of $A^T A$
• Eigenvectors of $A^T A$ turned up in finding least-squares solution to $Ax = 0$
• Solution is eigenvector corresponding to smallest eigenvalue

SVD and Matrix Similarity

• One common definition for the norm of a matrix is the Frobenius norm:

$$
\|A\|_F = \sqrt{\sum_i \sum_j a_{ij}^2}
$$

• Frobenius norm can be computed from SVD:

$$
\|A\|_F = \sqrt{\sum_i w_i^2}
$$

• So changes to a matrix can be evaluated by looking at changes to singular values
• Suppose you want to find best rank-$k$ approximation to $A$
• Answer: set all but the largest $k$ singular values to zero
• Can form compact representation by eliminating columns of $U$ and $V$ corresponding to zeroed $w_i$

SVD and PCA

• Principal Components Analysis (PCA): approximating a high-dimensional data set with a lower-dimensional subspace

[Figure: data points plotted on the original axes, with the first and second principal components overlaid]

• Data matrix with points as rows (mean subtracted), take SVD
• Columns of $V_k$ are principal components
• Value of $w_i$ gives importance of each component

SVD and Orthogonalization

• The matrix $U V^T$ is the “closest” orthonormal matrix to $A$
• Yet another useful application of the matrix-approximation properties of SVD
• Much more stable numerically than Gram-Schmidt orthogonalization
• Find rotation given general affine matrix

End of Digression

• Goal is to factor $\tilde{D}$ into $R$ and $S$
• Let’s apply SVD! $\tilde{D} = U W V^T$
• But $\tilde{D}$ should have rank 3 $\Rightarrow$ all but 3 of the $w_i$ should be 0
• Extract the top 3 $w_i$, together with the corresponding columns of $U$ and $V$

Factoring for Orthographic Structure from Motion

• After extracting columns, $U_3$ has dimensions $2N \times 3$ (just what we wanted for $R$)
• $W_3 V_3^T$ has dimensions $3 \times n$ (just what we wanted for $S$)
• So, let $R^* = U_3$, $S^* = W_3 V_3^T$

Affine Structure from Motion

• The $\hat{i}$ and $\hat{j}$ entries of $R^*$ are not, in general, unit length and perpendicular
• We have found motion (and therefore shape) up to an affine transformation
• This is the best we could do if we didn’t assume orthographic camera

Ensuring Orthogonality

• Since $\tilde{D}$ can be factored as $R^* S^*$, it can also be factored as $(R^* Q)(Q^{-1} S^*)$, for any invertible $3 \times 3$ matrix $Q$
• So, search for $Q$ such that $R = R^* Q$ has the properties we want
• Want $(\hat{i}_i^{*T} Q)(\hat{i}_i^{*T} Q)^T = 1$, or

$$
\hat{i}_i^{*T} Q Q^T \hat{i}_i^{*} = 1, \qquad
\hat{j}_i^{*T} Q Q^T \hat{j}_i^{*} = 1, \qquad
\hat{i}_i^{*T} Q Q^T \hat{j}_i^{*} = 0
$$

• Let $T = Q Q^T$
• Equations for elements of $T$: solve by least squares
• Ambiguity: add constraints

$$
Q^T \hat{i}_1^{*} = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \qquad
Q^T \hat{j}_1^{*} = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}
$$

• Have found $T = Q Q^T$
• Find $Q$ by taking “square root” of $T$
  – Cholesky decomposition if $T$ is positive definite
  – General algorithms (e.g. sqrtm in Matlab)

Orthographic Structure from Motion

• Let’s recap:
  – Write down matrix of observations
  – Find translation from avg. position
  – Subtract translation
  – Factor matrix using SVD
  – Write down equations for orthogonalization
  – Solve using least squares, square root
• At end, get matrix $R = R^* Q$ of camera positions and matrix $S = Q^{-1} S^*$ of 3D points

Results

• Image sequence [Tomasi & Kanade]
• Tracked features [Tomasi & Kanade]
• Reconstructed shape: top view and front view [Tomasi & Kanade]
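
The recap above can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' implementation: the function name `factor_orthographic` and the synthetic test data are hypothetical, and instead of the first-frame constraints from the slides it takes $Q$ as the Cholesky factor of $T$, which leaves the result defined only up to a global rotation or reflection.

```python
import numpy as np

def factor_orthographic(x, y):
    """Factorization sketch. x, y: (N, n) arrays, x[i, j] = point j in frame i.

    Returns R (2N x 3), S (3 x n), and the per-row translations (length 2N).
    """
    N = x.shape[0]
    D = np.vstack([x, y])                       # 2N x n matrix of observations
    t = D.mean(axis=1, keepdims=True)           # translation = average of each row
    D_tilde = D - t                             # subtract translation

    # Factor using SVD and keep the top 3 singular values.
    U, w, Vt = np.linalg.svd(D_tilde, full_matrices=False)
    R_star = U[:, :3]                           # 2N x 3
    S_star = w[:3, None] * Vt[:3]               # 3 x n, i.e. W3 V3^T

    # Orthogonalization: linear equations in the 6 unique entries of T = Q Q^T.
    I, J = R_star[:N], R_star[N:]
    idx = [(0, 0), (0, 1), (0, 2), (1, 1), (1, 2), (2, 2)]

    def coeffs(a, b):
        # Coefficients of the unique entries of symmetric T in a^T T b.
        return [a[p] * b[q] if p == q else a[p] * b[q] + a[q] * b[p]
                for p, q in idx]

    A = np.array([coeffs(i, i) for i in I]      # i^T T i = 1
                 + [coeffs(j, j) for j in J]    # j^T T j = 1
                 + [coeffs(i, j) for i, j in zip(I, J)])  # i^T T j = 0
    b = np.concatenate([np.ones(2 * N), np.zeros(N)])
    t_vec = np.linalg.lstsq(A, b, rcond=None)[0]

    T = np.zeros((3, 3))
    for (p, q), v in zip(idx, t_vec):
        T[p, q] = T[q, p] = v

    Q = np.linalg.cholesky(T)                   # Q Q^T = T; needs T positive definite
    R = R_star @ Q
    S = np.linalg.solve(Q, S_star)              # Q^{-1} S*
    return R, S, t.ravel()

# Noise-free synthetic check (hypothetical data): random points and cameras.
rng = np.random.default_rng(0)
N, n = 8, 20
P = rng.standard_normal((3, n))
# Orthonormal frames; rows 0 and 1 serve as the image axes of each camera.
frames = [np.linalg.qr(rng.standard_normal((3, 3)))[0] for _ in range(N)]
x = np.array([F[0] @ P for F in frames]) + rng.standard_normal((N, 1))
y = np.array([F[1] @ P for F in frames]) + rng.standard_normal((N, 1))
R, S, t = factor_orthographic(x, y)
```

On noise-free tracks like these, `R @ S` reproduces the centered measurements and the rows of `R` come out unit length and pairwise perpendicular within each frame. With noisy tracks the least-squares $T$ may fail to be positive definite, in which case `np.linalg.cholesky` raises `LinAlgError` and a more general square root would be needed.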