
COS 109 Wednesday October 14

• Housekeeping

– Questions about assignments

• Continuing our discussion of algorithms

– Review of linear time and log time algorithms

– Sorting algorithms

– More complex algorithms

– NP completeness

• Programming languages

– Differences between algorithms and programs

– How programming languages developed over time

Linear time algorithms

• lots of algorithms have this same basic form:

look at each item in turn

do the same simple computation on each item:

does it match something (looking up a name in a list of names)

count it (how many items are in the list)

count it if it meets some criterion (how many of some kind in the list)

remember some property of items found (largest, smallest, …)

transform it in some way (limit size, convert case of letters, …)

• amount of work (running time) is proportional to amount of data

– twice as many items will take twice as long to process

– computation time is linearly proportional to length of input
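
A minimal sketch of this basic form, assuming the items are held in a Python list. The names `largest` and `count_matching` are illustrative and not part of the course material. Each function looks at every item exactly once, so doubling the list doubles the work.

```python
def largest(items):
    """Return the largest item by looking at each item exactly once."""
    best = items[0]
    for item in items[1:]:
        if item > best:          # remember a property of the items seen so far
            best = item
    return best


def count_matching(items, criterion):
    """Count the items for which criterion(item) is true."""
    count = 0
    for item in items:
        if criterion(item):      # the same simple computation on each item
            count += 1
    return count


numbers = [27, 13, 25, 46, 17, 29, 57, 16]
print(largest(numbers))                            # 57
print(count_matching(numbers, lambda x: x > 20))   # 5
```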

Binary search

• Examples

– Guessing a number

I’m thinking of a number between 1 and N (N = 2^n)

– Searching for a word in a dictionary

• Every query reduces the problem size by half

• Doubling the problem size adds 1 more step to the running time.
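
One way to sketch binary search, assuming the data is a sorted Python list. The function name `binary_search` and the step counter are illustrative additions, included only to make the "one more step per doubling" behavior visible.

```python
def binary_search(sorted_items, target):
    """Return (index, steps); index is -1 if target is not present."""
    low, high = 0, len(sorted_items) - 1
    steps = 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            low = mid + 1        # discard the lower half
        else:
            high = mid - 1       # discard the upper half
    return -1, steps


# Each doubling of the list should cost about one extra step.
for n in (1000, 2000, 4000):
    index, steps = binary_search(list(range(n)), n - 1)
    print(n, steps)
```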

Logarithms for COS 109

• all logs in 109 are base 2

• all logs in 109 are integers

• if N is a power of 2 like 2^m, log2 of N is m

• if N is not a power of 2, log2 of N is

the number of bits needed to represent N

the power of 2 that's bigger than N

the number of times you can divide N by 2 before it becomes 0

• you don't need a calculator for these!

– just figure out how many bits or what's the right power of 2

• logs are related to exponentials: log2 (2^N) is N ; 2^(log2 N) is N

• it's the same as decimal, but with 2 instead of 10
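
A small sketch of the "what's the right power of 2" rule, assuming N is at least 1. The name `log2_109` is made up for illustration. It returns the smallest m with 2^m >= N, which gives m for an exact power of 2 and the next power up otherwise.

```python
def log2_109(n):
    """Smallest m such that 2**m >= n (assumes n >= 1)."""
    m = 0
    power = 1
    while power < n:
        power *= 2
        m += 1
    return m


print(log2_109(1024))   # 10, because 1024 is 2**10
print(log2_109(1000))   # 10, because 2**10 is the power of 2 just above 1000
print(log2_109(100))    # 7, because 2**7 = 128
```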

Algorithms for sorting

• binary search needs sorted data

• how do we sort names into alphabetical order?

• how do we sort numbers into increasing or decreasing order?

• how do we sort a deck of cards?

• how many comparison operations does sorting take?

• "selection sort":

– find the smallest

using a variant of "find the largest" algorithm

– repeat on the remaining names

– this is what bridge players typically do when organizing a hand

• what other algorithms might work?

Selection sort

• sorting n items takes time proportional to n^2

– twice as many items takes 4 times as long to sort

• there are much faster sorting algorithms

– time proportional to n log n
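
A possible sketch of selection sort, assuming the items are in a Python list. The comparison counter is an illustrative addition so the n^2 growth can be observed: n items need n(n-1)/2 comparisons.

```python
def selection_sort(items):
    """Sort a copy of items; return (sorted_list, number_of_comparisons)."""
    result = list(items)
    comparisons = 0
    for start in range(len(result)):
        smallest = start
        # find the smallest of the remaining items
        for i in range(start + 1, len(result)):
            comparisons += 1
            if result[i] < result[smallest]:
                smallest = i
        # move it to the front of the unsorted part
        result[start], result[smallest] = result[smallest], result[start]
    return result, comparisons


print(selection_sort([27, 13, 25, 46, 17, 29, 57, 16]))
print(selection_sort(list(range(100)))[1])   # 4950
print(selection_sort(list(range(200)))[1])   # 19900, about 4 times as many
```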

Why does running time matter?

n      log n    n log n    n^2
100    7        700        10,000
200    8        1600       40,000
400    9        3600       160,000
800    10       8000       640,000
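
The figures in this table can be recomputed with a short sketch, assuming the course convention that log n is the base-2 log rounded up to an integer. The function name `growth_rows` is hypothetical.

```python
def growth_rows(sizes):
    """Return (n, log n, n log n, n^2) for each n, with log n rounded up."""
    rows = []
    for n in sizes:
        log_n = (n - 1).bit_length()   # smallest m with 2**m >= n
        rows.append((n, log_n, n * log_n, n * n))
    return rows


for row in growth_rows([100, 200, 400, 800]):
    print(row)
```

Going from 100 to 800 items multiplies the n log n column by a little over 11 but the n^2 column by 64.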

Quicksort: an n log n sorting algorithm

• make one pass through data, putting all small items in one pile

and all large items on another pile

– there are now two piles, each with about 1/2 of the items

– and each item in the first pile is smaller than any item in the second

• make a second pass; for each pile, put all small items in one

pile and all larger items in another pile

– there are now four piles, each with about 1/4 of the items

– and each item in a pile is smaller than any item in later piles

• repeat until there are n piles

– each item is now smaller than any item in a later pile

• each pass looks at n items

• each pass divides each pile about in half, stops when size is 1

– number of divisions is log n

• n log n operations
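
A minimal sketch of the pile idea, written recursively rather than pass by pass. It assumes the first item of each pile is used as the dividing value, which is a choice made here for illustration; it gives two piles of about half the size only when the data is in no particular order.

```python
def quicksort(items):
    """Return a sorted copy of items using small and large piles."""
    if len(items) <= 1:
        return list(items)                 # a pile of size 1 is already sorted
    pivot = items[0]
    small = [x for x in items[1:] if x < pivot]
    large = [x for x in items[1:] if x >= pivot]
    # every item in small comes before the pivot, every item in large after it
    return quicksort(small) + [pivot] + quicksort(large)


print(quicksort([27, 13, 25, 46, 17, 29, 57, 16]))
```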

Quicksort: an n log n sorting algorithm

• make one pass through data, putting all small items in one pile

and all large items on another pile

• to make the pass,

– start at one end and move forwards until you reach a number larger

than the first number, remember where you stopped

– then, start at the other end and move backwards until you reach a

number smaller than the first number, remember where you stopped

– swap the 2 numbers and repeat until your two motions meet

– swap the first element with the last number smaller than it (or the

first number larger than it)

27  13  25  46  17  29  57  16
            ^^              ^^
    First number larger than 27 is 46; first number smaller (from the other end) is 16. Swap.

27  13  25  16  17  29  57  46
                ^^  ^^
    First number larger is 29; first number smaller is 17. Arrows crossed, so swap 17 with the first number (27).

17  13  25  16  27  29  57  46

27 is in the right place

Now, sort (17,13,25,16) and sort (29,57,46)
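
The pass described on this slide can be sketched in place as follows. This is one possible reading of the steps, assuming the first number of the range is the one everything is compared against; the names `partition` and `quicksort_in_place` are illustrative.

```python
def partition(a, lo, hi):
    """Rearrange a[lo..hi] around a[lo]; return the final index of that number."""
    pivot = a[lo]
    left, right = lo + 1, hi
    while True:
        # move forwards until a number larger than the first number is found
        while left <= right and a[left] <= pivot:
            left += 1
        # move backwards until a number smaller than the first number is found
        while left <= right and a[right] >= pivot:
            right -= 1
        if left > right:                 # the two motions have met
            break
        a[left], a[right] = a[right], a[left]
    # swap the first number with the last number smaller than it
    a[lo], a[right] = a[right], a[lo]
    return right


def quicksort_in_place(a, lo=0, hi=None):
    """Sort the list a in place by partitioning and recursing on each side."""
    if hi is None:
        hi = len(a) - 1
    if lo < hi:
        p = partition(a, lo, hi)
        quicksort_in_place(a, lo, p - 1)
        quicksort_in_place(a, p + 1, hi)


data = [27, 13, 25, 46, 17, 29, 57, 16]
print(partition(data, 0, len(data) - 1), data)
# 4 [17, 13, 25, 16, 27, 29, 57, 46]
quicksort_in_place(data)
print(data)
```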

Sort/merge

• Divide the input into 2 sets (first half and second half)

– Sort each half (by Sort/merge)

– Merge the 2 halves

What happens in practice when sorting 8 items

Divide into first 4 and second 4
    Sort first 4
        Divide into first 2 and second 2
        Sort first 2
        Sort second 2
        Merge sets of 2
    Sort second 4
        Divide into first 2 and second 2
        Sort first 2
        Sort second 2
        Merge sets of 2
    Merge sets of 4

(27 13 25 46) (17 29 57 16)          Split 8 -> 4 and 4
(27 13) (25 46) (17 29) (57 16)      Split 4 -> 2 and 2
(13 27) (25 46) (17 29) (16 57)      Sort 2’s
(13 25 27 46) (16 17 29 57)          Merge 2’s
(13 16 17 25 27 29 46 57)            Merge 4’s

Input is (27,13,25,46,17,29,57,16)

sort (27,13,25,46) and sort (17,29,57,16)

To sort (27,13,25,46) sort (27,13) and sort (25,46)

then merge the 2 lists

To sort (17,29,57,16) sort (17,29) and sort (57,16)

then merge the 2 lists
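
A short sketch of Sort/merge on a Python list. The function names `merge` and `sort_merge` are chosen here for illustration. Merging two sorted lists takes one pass, always taking the smaller of the two front items.

```python
def merge(left, right):
    """Merge two sorted lists into one sorted list in a single pass."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])      # at most one of these two is non-empty
    merged.extend(right[j:])
    return merged


def sort_merge(items):
    """Sort by splitting in half, sorting each half, and merging."""
    if len(items) <= 1:
        return list(items)
    middle = len(items) // 2
    return merge(sort_merge(items[:middle]), sort_merge(items[middle:]))


print(merge([13, 25, 27, 46], [16, 17, 29, 57]))
print(sort_merge([27, 13, 25, 46, 17, 29, 57, 16]))
```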

Recursion (Divide and conquer)

• Both Quicksort and Sort/Merge are examples of recursion

• I don’t know how to solve your problem but I can solve 2 problems of half the size and then combine the answers
  • I don’t know how to solve problems of the new size but I can solve 2 problems of half that size and then combine the answers
    • I don’t know how to solve problems of the new size but I can solve 2 problems of half that size and then combine the answers
      • I don’t know how to solve problems of the new size but I can solve 2 problems of half that size and then combine the answers
        • I don’t know how to solve problems of the new size but I can solve 2 problems of half that size and then combine the answers
          • I don’t know how to solve problems of the new size but I can solve 2 problems of half that size and then combine the answers
            • I don’t know how to solve problems of the new size but I can solve 2 problems of half that size and then combine the answers
• The hope is that the problem size gets so small that I can solve it

Recap of algorithms

• Algorithms are recipes

– Some algorithms make one (or a few) passes across data and so run

in linear time

– Some algorithms divide the problem size in half at each step and so

run in logarithmic time

– Other algorithms divide the problem into 2 halves each of which has

to be solved and then the results are combined (often run in n log n

time)

• Key algorithms considered

– Finding the maximum (or some property)

– Searching

– Sorting

• Are there algorithms that take significantly more time?

– How much time might they take?


Towers of Hanoi: an exponential algorithm

• Solving for 7 disks
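
A minimal recursive sketch of the Towers of Hanoi, assuming three pegs labelled "A", "B" and "C" (labels chosen here for illustration). Moving n disks means moving n-1 disks out of the way, moving the largest disk, then moving the n-1 disks back on top, so the number of moves doubles (plus one) with each extra disk.

```python
def hanoi(disks, source, target, spare, moves):
    """Append to moves the (from, to) steps that move a stack of disks."""
    if disks == 0:
        return
    hanoi(disks - 1, source, spare, target, moves)   # clear the way
    moves.append((source, target))                   # move the largest disk
    hanoi(disks - 1, spare, target, source, moves)   # put the rest back on top


moves = []
hanoi(7, "A", "C", "B", moves)
print(len(moves))   # 127, which is 2**7 - 1
```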

Travelling Salesman problem

Would like to visit all state capitals with the smallest travel distance

There are 1.29 x 10^59 possible tours.

A good travelling salesman tour

Can we do better?

The knapsack problem

I have items of weights

  17   27   44   48   53   54   59   61   78   80   81   90   97   99
 104  175  289  356  444  459  657  682  756  888  931  944  948  987
1347 1897 2134 2198 2525 2610 2751 3563 3620 7433 8094 8211 8247 9045

and a knapsack of capacity 20615

can I exactly pack the knapsack with items from my list?

There are 2^42 (= 4.398 x 10^12) possible packings

Investigating 10^6 per second, it would take 50 days to try all possibilities

The knapsack problem

I have items of weights (the same 42 weights as on the previous slide) and a knapsack of capacity 20615

can I exactly pack the knapsack with items from my list?

… it would take 50 days to try all possibilities

But,

17 + 27 + 44 + 48 + 53 + 54 = 243

657 + 682 + 756 + 888 + 931 = 3914

8211 + 8247 = 16458

243 + 3914 + 16458 = 20615

And it just takes a few seconds to check the answer
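
The contrast between finding and checking an answer can be sketched as below. The weights, capacity and proposed packing are taken from the slides; the function names `is_exact_packing` and `brute_force` are illustrative. The brute-force search tries subsets one by one and is only run here on a tiny list, since trying all 2^42 subsets of the full list is the 50-day computation.

```python
from itertools import combinations

weights = [
    17, 27, 44, 48, 53, 54, 59, 61, 78, 80, 81, 90, 97, 99,
    104, 175, 289, 356, 444, 459, 657, 682, 756, 888, 931, 944, 948, 987,
    1347, 1897, 2134, 2198, 2525, 2610, 2751, 3563, 3620, 7433, 8094, 8211,
    8247, 9045,
]
capacity = 20615
proposed = [17, 27, 44, 48, 53, 54, 657, 682, 756, 888, 931, 8211, 8247]


def is_exact_packing(chosen, weights, capacity):
    """Checking is fast: each item must come from the list and the sum must match."""
    available = list(weights)
    for item in chosen:
        if item not in available:
            return False
        available.remove(item)       # each item may be used only once
    return sum(chosen) == capacity


def brute_force(weights, capacity):
    """Finding is slow: try every subset until one fits exactly."""
    for size in range(len(weights) + 1):
        for subset in combinations(weights, size):
            if sum(subset) == capacity:
                return list(subset)
    return None


print(is_exact_packing(proposed, weights, capacity))   # True
print(brute_force(weights[:4], 92))                    # [44, 48]
```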

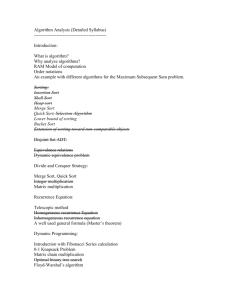

Further on algorithm complexity

log n      each step divides problem size in half
n          make one (or several) passes over the input data
n log n    divide (into 2 halves) and conquer (merge answers)
..
n^2        each pair of inputs is used together
n^3        each triple of inputs is used together
..
2^n        all subsets of the input need to be considered

Complexity hierarchy (or part of it)

polynomial
    log n      logarithmic
    n          linear
    n log n
    ..
    n^2        quadratic
    ..
    n^3        cubic
    ..
*******************************************************
not polynomial
    2^n        exponential

Problems that take exponential time are deemed to not be practically solvable even as computers get faster
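
A rough sketch of why faster computers do not rescue exponential algorithms, assuming the rate of 10^6 steps per second used in the knapsack example. The names `days_needed` and `largest_n_within` are hypothetical.

```python
STEPS_PER_SECOND = 10**6          # assumed rate, as in the knapsack example
SECONDS_PER_DAY = 24 * 60 * 60


def days_needed(steps, steps_per_second=STEPS_PER_SECOND):
    """Days required to carry out the given number of steps."""
    return steps / steps_per_second / SECONDS_PER_DAY


def largest_n_within(days, steps_for, steps_per_second=STEPS_PER_SECOND):
    """Largest problem size n whose step count fits in the given number of days."""
    n = 1
    while days_needed(steps_for(n + 1), steps_per_second) <= days:
        n += 1
    return n


def exponential(n):
    return 2 ** n


def cubic(n):
    return n ** 3


print(days_needed(exponential(42)))                    # about 50.9 days
print(largest_n_within(1, exponential))                # 36
print(largest_n_within(1, exponential, 10**9))         # 46
print(largest_n_within(1, cubic), largest_n_within(1, cubic, 10**9))
```

Under these assumptions a machine 1000 times faster raises the largest exponential problem solvable in a day only from n = 36 to n = 46, while the largest cubic problem grows by a factor of about 10.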

NP complete problems

• There is a class of problems such that

– No method is known to solve each problem in polynomial time

– It is easy to verify if a given solution is correct for each problem

– If one of the problems can be solved in polynomial time, then all can

• These problems are said to be NP-complete

– And the underlying problem is the P vs NP (or P=NP?) problem

P=NP?

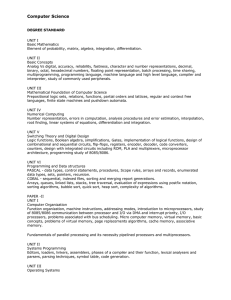

Algorithms in Computer Science

• study and analysis of algorithms is a major component of CS courses

– what can be done (and what can't)

– how to do it efficiently (fast, compact memory)

– finding fundamentally new and better ways to do things

– basic algorithms like searching and sorting

– plus lots of applications with specific needs

• big programs are usually a lot of simple, straightforward parts, often intricate, occasionally clever, very rarely with a new basic algorithm, sometimes with a new algorithm for a specific task

Website (actually YouTube) of the day

A projection to the future from 2005

Algorithms versus Programs

• An algorithm is the computer science version of a really

careful, precise, unambiguous recipe

– defined operations (primitives) whose meaning is completely known

– defined sequence of steps, with all possible situations covered

– defined condition for stopping

– an idealized recipe

• A program is an algorithm converted into a form that a

computer can process directly

– like the difference between a blueprint and a building

– has to worry about practical issues like finite memory, limited speed,

erroneous data, etc.

– a guaranteed recipe for a cooking robot

Characteristics of a programming language

• Has to allow assignment to variables

– We did this in Toy with STORE MEM and LOAD MEM

• Has to allow arithmetic operations

– +,-,*,/;

– often more sophisticated like exponentiation, logarithm, sine, cosine

• Has to have constructs that allow for looping

– IFZERO and GOTO in Toy

– Typically IF … THEN … [ELSE] … for branching

    IF (condition is true)
    THEN do these operations
    ELSE do these other operations

– Typically WHILE or FOR or DO for looping

    WHILE (this condition is true) DO these operations

    FOR (these values) DO these operations

• May have sophisticated ways to structure data
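
As an illustration only, here is how these ingredients look in one modern language (Python, which is not a language used on these slides). The function names are made up; the first function mirrors the Toy idea of adding numbers until a zero is read.

```python
def sum_until_zero(numbers):
    """Add up numbers until a zero is seen."""
    total = 0                          # assignment to a variable
    for number in numbers:             # FOR (these values) DO these operations
        if number == 0:                # IF (condition is true) THEN ...
            break
        else:                          # ELSE do these other operations
            total = total + number     # arithmetic
    return total


def count_halvings(n):
    """Count how many times n can be halved before reaching 1."""
    steps = 0
    while n > 1:                       # WHILE (this condition is true) DO ...
        n = n // 2
        steps = steps + 1
    return steps


print(sum_until_zero([3, 4, 5, 0, 9]))   # 12
print(count_halvings(8))                 # 3
```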

What happens to a program in a higher level language

• A compiler is written that converts the program text into

assembly language

– The goal is to have the program work on many machines and the

compiler translate it to assembler for a specific machine

• The compiler itself has various steps

– Parsing (think middle school English class)

– Optimizing (using registers/accumulator wisely)

– Code generation (creating assembler or machine code)

• A language is specified by a grammar which is defined by the

standard defining the language

• The compiler further defines the language by how it processes programs

Evolution of programming languages

• 1940's: machine level

– use binary or equivalent notations for actual numeric values

• 1950's: "assembly language"

– names for instructions: ADD instead of 0110101, etc.

– names for locations: assembler keeps track of where things are in memory;

translates this more humane language into machine language

– this is the level used in the "toy" machine

– needs total rewrite if moved to a different kind of CPU

loop   get            # read a number
       ifzero done    # no more input if number is zero
       add    sum     # add in accumulated sum
       store  sum     # store new value back in sum
       goto   loop    # read another number
done   load   sum     # print sum
       print
       stop
sum    0              # sum will be 0 when program starts

assembly lang program -> assembler -> binary instrs

Evolution of programming languages, 1950's

• "high level" languages: Fortran, Cobol

– write in a more natural notation, e.g., mathematical formulas

Fortran (formula translation) for math

Cobol (Common business oriented language) for business

– a program ("compiler", "translator") converts into assembler

– potential disadvantage: lower efficiency in use of machine

– enormous advantages:

accessible to much wider population of users

portable: same program can be translated for different machines

more efficient in programmer time

John Backus created Fortran compiler at IBM

Grace Murray Hopper created Cobol compiler for DoD

Samples of Fortran and Cobol

Evolution of programming languages, 1950's to 1960’s

• Algol

– Developed by an international committee in the late 50’s

– Designed to be universal

– Sabotaged by IBM

• BASIC (Beginner's All-purpose Symbolic Instruction Code)

– Developed at Dartmouth in 1964 by Kemeny and Kurtz

– designed to be simple so that everyone could program

• LISP

– Designed as a practical notation for computer programs in the late

50’s

– Evolved into the language of choice for artificial intelligence

programming

Evolution of programming languages, 1970's

• "system programming" languages: C

– efficient and expressive enough to take on any programming task

writing assemblers, compilers, operating systems

– a program ("compiler", "translator") converts into assembler

– enormous advantages:

accessible to much wider population of programmers

portable: same program can be translated for different machines

faster, cheaper hardware helps make this happen

– Evolved from B

• UNIX was rewritten in C in the early 1970's (its first versions were in assembly language)

A sample C program to do GET/PRINT/STOP

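#include <stdio.h>

int main(void) {
    int num, sum = 0;

    /* read numbers until end of input, bad input, or a zero */
    while (scanf("%d", &num) == 1 && num != 0)
        sum += num;
    printf("%d\n", sum);    /* print the accumulated sum */
    return 0;
}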

Dennis Ritchie (creator of C)