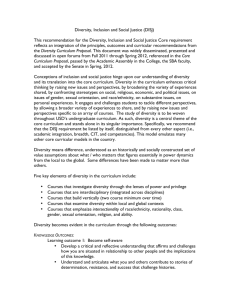

Applications of Information Complexity II David Woodruff

IBM Almaden

Outline

New Types of Direct Sum Theorems

1. Direct Sum with Aborts

2. Direct Sum of Compositions

Distributional Communication Complexity

[Figure: Alice and Bob]

• 2 players with private randomness: Alice and Bob

• Joint function f

• Distribution μ on inputs (x, y)

• Correctness: $\Pr_{(X,Y) \sim \mu,\ \text{private randomness}}[\Pi(X,Y) = f(X,Y)] \ge 1-\delta$

• Communication: $\max_{x,y,\ \text{private randomness}} |\Pi(x,y)|$

• $D_{\mu,\delta}(f)$ = minimum communication over all correct protocols Π

Can’t we fix the private randomness?

Distributional Communication Complexity vs. Information Complexity

• By averaging, there is a good fixing of the randomness:

$D_{\mu,\delta}(f) = \min_{\text{correct deterministic } \Pi} \max_{x,y} |\Pi(x,y)|$

• However, we’ll use the notion of information complexity:

$IC^{ext}_{\mu,\delta}(f) = \min_{\text{correct } \Pi} I(X,Y;\Pi)$, where $I(X,Y;\Pi) = H(X,Y) - H(X,Y \mid \Pi)$

• Can’t fix private randomness of Π and preserve I(X, Y ; Π)

[Figure: input X, randomness R, message $X \oplus R$]
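The X ⊕ R picture can be checked numerically. The sketch below is an illustration only, under assumptions the slide does not state: X and R are independent uniform bits, and the helper name `mutual_information` is hypothetical. It compares the information a one-message transcript reveals about X when R stays private against the case where R is fixed to 0.

```python
from collections import defaultdict
from itertools import product
from math import log2


def mutual_information(joint):
    """Return I(A; B) in bits for a joint distribution {(a, b): probability}."""
    pa, pb = defaultdict(float), defaultdict(float)
    for (a, b), p in joint.items():
        pa[a] += p
        pb[b] += p
    return sum(p * log2(p / (pa[a] * pb[b]))
               for (a, b), p in joint.items() if p > 0)


# Private randomness: X and R are independent uniform bits (an assumption),
# and the transcript is the single message M = X xor R.
private = defaultdict(float)
for x, r in product((0, 1), repeat=2):
    private[(x, x ^ r)] += 0.25

# Randomness fixed to R = 0: the message is X itself.
fixed = {(x, x ^ 0): 0.5 for x in (0, 1)}

info_private = mutual_information(private)  # message is independent of X
info_fixed = mutual_information(fixed)      # message determines X
```

Under these assumptions the private-randomness transcript carries 0 bits about X, while the fixed-randomness transcript carries 1 bit, which is why fixing the randomness does not preserve I(X, Y ; Π).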

Multiple Copies of a Communication Problem

[Figure: Alice and Bob]

• Distribution $\mu^k$ on inputs $(x,y) = (x_1, y_1), \ldots, (x_k, y_k)$

• Correctness: $\Pr_{(X,Y) \sim \mu^k,\ \text{private randomness}}[\Pi(X,Y) = f^k(X,Y)] \ge 1-\delta$

• $D_{\mu^k,\delta}(f^k) = \min_{\text{correct } \Pi} \max_{x,y,\ \text{private randomness}} |\Pi(x,y)|$

Require that the protocol solves all k instances

How Hard is Solving all k Copies?

?

Jain et al (bounded rounds)

None attains above bound!

Impossible for general problems [FKNN95]

However, a general but relaxed statement (with aborts) is true for information complexity

Protocols with Aborts [MWY]

Definition: A randomized protocol Π (μ, α, β, δ)-computes f if, with probability 1-α over its private randomness:

• (Abort) $\Pr_{(X,Y) \sim \mu}[\Pi \text{ aborts}] \le \beta$

• (Error) $\Pr_{(X,Y) \sim \mu}[\Pi(X,Y) \ne f(X,Y) \mid \Pi \text{ does not abort}] \le \delta$

[Figure: possible outcomes are abort, error, right]

• When protocol aborts, it “knows it is wrong”

• $IC^{ext}_{\mu,\alpha,\beta,\delta}(f) = \min I(X,Y;\Pi)$, over Π that (μ, α, β, δ)-compute f

We fix α = 1/20 and write $IC^{ext}_{\mu,1/20,\beta,\delta}(f) = IC^{ext}_{\mu,\beta,\delta}(f)$
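The abort and error conditions can be made concrete for one fixed string of private randomness. The following is a minimal sketch, assuming a finite input distribution given as a dictionary; the names `ABORT`, `abort_and_error_rates`, and `computes` are hypothetical and not from the slides. The full definition additionally asks that both conditions hold with probability 1-α over the private randomness, which this sketch does not model.

```python
ABORT = object()  # sentinel meaning "the protocol aborted"


def abort_and_error_rates(protocol, f, mu):
    """Abort probability and conditional error of a protocol under mu.

    mu maps input pairs (x, y) to probabilities; protocol(x, y) returns
    an output value or the ABORT sentinel.
    """
    abort = 0.0
    wrong = 0.0
    for (x, y), p in mu.items():
        out = protocol(x, y)
        if out is ABORT:
            abort += p
        elif out != f(x, y):
            wrong += p
    # Error is measured conditioned on not aborting.
    error = wrong / (1.0 - abort) if abort < 1.0 else 0.0
    return abort, error


def computes(protocol, f, mu, beta, delta):
    """Check the (Abort) and (Error) conditions for this fixed randomness."""
    abort, error = abort_and_error_rates(protocol, f, mu)
    return abort <= beta and error <= delta
```

For example, with f = AND on uniform bit pairs, a protocol that aborts only on (1, 1) and otherwise outputs 0 has abort probability 1/4 and conditional error 0.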

Direct Sum with Aborts

• Formally, $IC^{ext}_{\mu^k,\delta}(f^k) = \Omega(k) \cdot IC^{ext}_{\mu,1/10,\delta/k}(f)$

• Number r of communication rounds is preserved:

$IC^{ext,r}_{\mu^k,\delta}(f^k) = \Omega(k) \cdot IC^{ext,r}_{\mu,1/10,\delta/k}(f)$

Proof Idea

• $I(X_1, Y_1, \ldots, X_k, Y_k ; \Pi) = \sum_i I(X_i, Y_i ; \Pi \mid X_{<i}, Y_{<i})$

$= \sum_i \sum_{x,y} I(X_i, Y_i ; \Pi \mid X_{<i} = x, Y_{<i} = y) \cdot \Pr[X_{<i} = x, Y_{<i} = y]$

• Bound $I(X_i, Y_i ; \Pi \mid X_{<i} = x, Y_{<i} = y)$ by $IC^{ext}_{\mu,1/10,\delta/k}(f)$

• Create protocol $\Pi_{x,y}(A,B)$ for f:

• Run Π with inputs $(X_{<i}, Y_{<i}) = (x,y)$ and $(X_i, Y_i) = (A,B)$, and use private randomness to sample $X_{>i}, Y_{>i}$

• Check if Π’s output is correct on first i-1 instances

• If correct, then $\Pi_{x,y}(A,B)$ outputs the i-th output of Π

• Else, abort
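The construction of the single-copy protocol from the k-copy protocol can be sketched as a wrapper. This is a centralized simulation under stated assumptions, not a two-party implementation: `pi` is a hypothetical callable taking a list of k input pairs and returning k outputs, `sample_mu` is a hypothetical sampler for μ, and `prefix` holds the fixed pairs for the first i-1 instances.

```python
import random

ABORT = "abort"


def make_single_copy_protocol(pi, f, sample_mu, i, prefix, k):
    """Build the single-copy protocol for instance i (1-indexed).

    prefix is the list of i-1 fixed input pairs (X_<i, Y_<i) = (x, y).
    """
    def pi_xy(a, b, rng=random):
        # Private randomness samples the remaining instances X_>i, Y_>i.
        suffix = [sample_mu(rng) for _ in range(k - i)]
        inputs = prefix + [(a, b)] + suffix
        outputs = pi(inputs)
        # The prefix is fixed, so correctness on it can be checked.
        if all(outputs[j] == f(*prefix[j]) for j in range(i - 1)):
            return outputs[i - 1]
        return ABORT

    return pi_xy
```

If the k-copy protocol errs on one of the first i-1 instances, the wrapper aborts rather than outputting; otherwise it outputs the k-copy protocol's answer on instance i.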

Application: Direct Sum for Equality

• For strings x and y, EQ(x,y) = 1 if x = y, else EQ(x,y) = 0

• $x_1, \ldots, x_k \in \{0,1\}^n$ and $y_1, \ldots, y_k \in \{0,1\}^n$

• $EQ^k = (EQ(x_1, y_1), EQ(x_2, y_2), \ldots, EQ(x_k, y_k))$

• Standard direct sum:

$IC^{ext,1}_{\mu^k,1/3}(EQ^k) = \Omega(k) \cdot IC^{ext,1}_{\mu,1/3}(EQ)$

For any μ, $IC^{ext,1}_{\mu,1/3}(EQ) = O(1)$, so the lower bound is Ω(k)

• Direct sum with aborts:

$IC^{ext,1}_{\mu^k,1/3}(EQ^k) = \Omega(k) \cdot IC^{ext,1}_{\mu,1/10,1/(3k)}(EQ) = \Omega(k \log k)$
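The arithmetic behind the last equality can be written out. This is a reading of the slide, not an independent proof: for the two sides to match, the single-copy term with error 1/(3k) must be Ω(log k) for a suitable hard distribution μ, and that single-copy bound is taken here as an assumption inferred from the slide.

```latex
\begin{align*}
IC^{ext,1}_{\mu^k,1/3}(EQ^k)
  &= \Omega(k) \cdot IC^{ext,1}_{\mu,1/10,1/(3k)}(EQ)
     && \text{(direct sum with aborts, } \delta = 1/3\text{)} \\
  &= \Omega(k) \cdot \Omega(\log k)
     && \text{(assumed single-copy bound at error } 1/(3k)\text{)} \\
  &= \Omega(k \log k).
\end{align*}
```

The gain over the standard direct sum comes from the error parameter: the standard theorem keeps error 1/3 per copy, where EQ costs only O(1), while the version with aborts drives the per-copy error down to δ/k.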

Sketching Applications

Optimal lower bounds (improve by a log k factor)

- Sketching a sequence $u_1, \ldots, u_k$ of vectors and a sequence $v_1, \ldots, v_k$ of vectors in a stream to (1+ε)-approximate all distances $|u_i - v_j|_p$

- Sketching matrices A and B in a stream so that for all i, j, $(A \cdot B)_{i,j}$ can be approximated with additive error $\varepsilon \, |A_i| \cdot |B_j|$

Set Intersection Application

$S \subseteq [n]$, $|S| = k$ and $T \subseteq [n]$, $|T| = k$

Each party should locally output $S \cap T$

• Randomized protocol with O(k) bits of communication.

• In O(r) rounds, obtain $O(k \, \mathrm{ilog}^{(r)} k)$ communication [WY]

• $\mathrm{ilog}^{(1)} k = \log k$, $\mathrm{ilog}^{(2)} k = \log \log k$, etc.

• Combining [BCK] and direct sum with aborts, any r-round protocol with success probability ≥ 2/3 requires $\Omega(k \, \mathrm{ilog}^{(r)} k)$ communication
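The iterated logarithm in these bounds is simple to compute. A minimal sketch, assuming base-2 logarithms (the slide does not fix a base) and using the hypothetical name `ilog`:

```python
from math import log2


def ilog(r, k):
    """Iterated logarithm: apply log2 to k a total of r times."""
    value = float(k)
    for _ in range(r):
        # log2 raises ValueError once value drops to 0 or below.
        value = log2(value)
    return value


# Communication scale k * ilog^(r) k for a few round counts r.
k = 2 ** 16
bounds = {r: k * ilog(r, k) for r in (1, 2, 3)}
```

For k = 2^16 this gives per-element factors of 16, 4, and 2 for r = 1, 2, 3, showing how quickly extra rounds shrink the communication bound.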

Outline

New Types of Direct Sum Theorems

1. Direct Sum with Aborts

2. Direct Sum of Compositions

Composing Functions

• 2-Party Communication Complexity

• Alice has input x. Bob has input y

• Consider $x = (x_1, \ldots, x_r)$ and $y = (y_1, \ldots, y_r) \in (\{0,1\}^n)^r$

• $f(x,y) = h(g(x_1, y_1), \ldots, g(x_r, y_r))$ for Boolean function g

[Figure: outer function h applied to the inner function values $g(x_1, y_1), g(x_2, y_2), \ldots, g(x_{r-1}, y_{r-1}), g(x_r, y_r)$]

Given information complexity lower bounds for h and g, when is there an information complexity lower bound for f?

Composing Functions

• ALL-COPIES = $(g(x_1, y_1), \ldots, g(x_r, y_r))$

• $\mathrm{DISJ}(x,y) = \bigvee_{i=1}^{r} (x_i \wedge y_i)$ (OR function, sensitive to individual coordinates)

• $\mathrm{TRIBES}(x,y) = \bigwedge_{i=1}^{r} \mathrm{DISJ}(x_i, y_i)$ (AND function, sensitive to individual instances of DISJ)

• Key to information complexity lower bounds for these functions is an embedding step

• Lower bound $I(X, Y ; \Pi) = \sum_i I(X_i, Y_i ; \Pi \mid X_{<i}, Y_{<i})$ by creating a protocol for each i to solve the primitive problem g

• Lower bound $I(X_i, Y_i ; \Pi \mid X_{<i}, Y_{<i})$ by information complexity of g

• Combining function h needs to be sensitive to individual coordinates (under appropriate distribution)

Composing Functions

• What if outer function h is not sensitive to individual coordinates?

• Gap-Thresh$(z_1, \ldots, z_r)$ for bits $z_1, \ldots, z_r$

= 1 if $\sum_{i=1}^{r} z_i > r/4 + r^{1/2}$

= 0 if $\sum_{i=1}^{r} z_i < r/4 - r^{1/2}$

Otherwise output is undefined (promise problem)

• No obvious embedding step for Gap-Thresh!

• For specific choices of inner function [BGPW, CR]:

$IC^{ext}_{\mu,\delta}(\text{Gap-Thresh}(a_1 \wedge b_1, \ldots, a_r \wedge b_r)) = \Omega(r)$ for μ a product uniform distribution on $a = (a_1, \ldots, a_r)$, $b = (b_1, \ldots, b_r)$

Can we use $IC^{ext}(\text{Gap-Thresh}(\mathrm{AND}))$ to bound $IC^{ext}(\text{Gap-Thresh}(g))$ for other functions g?
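The Gap-Thresh promise problem and its composition with an inner function can be sketched directly from the definition above. The function names are hypothetical, and returning `None` when the promise is violated is a choice made here for illustration.

```python
from math import sqrt


def gap_thresh(bits):
    """Gap-Thresh on a list of bits; None when the promise is violated."""
    r, total = len(bits), sum(bits)
    if total > r / 4 + sqrt(r):
        return 1
    if total < r / 4 - sqrt(r):
        return 0
    return None  # output undefined inside the gap


def compose(h, g, xs, ys):
    """f(x, y) = h(g(x_1, y_1), ..., g(x_r, y_r))."""
    return h([g(x, y) for x, y in zip(xs, ys)])


def bit_and(a, b):
    """Inner function AND on single bits."""
    return a & b
```

With r = 100 the thresholds are 35 and 15, so Gap-Thresh(AND) is 1 when more than 35 coordinate pairs are both 1 and 0 when fewer than 15 are. No single coordinate decides the output, which is the sense in which the outer function is not sensitive to individual coordinates.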

Embedding AND [WZ]

• Want to bound $IC^{ext}(\text{Gap-Thresh}(g(x_1, y_1), \ldots, g(x_r, y_r)))$

• Know $IC^{ext}_{\mu,\delta}(\text{Gap-Thresh}(\mathrm{AND})) = \Omega(r)$ for uniform distribution

• What if we could embed the uniform bits $a_i, b_i$ into $x_i, y_i$ so that inner function $g(x_i, y_i) = a_i \wedge b_i$?

• Example embedding for g = DISJ:

- Embed $a_i, b_i$ into a random special coordinate $S_i$

- On other coordinates j, choose a random player $P_{i,j} \in \{\text{Alice}, \text{Bob}\}$

- If $P_{i,j}$ = Alice, $X_{i,j} \in_R \{0,1\}$, $Y_{i,j} = 0$

- If $P_{i,j}$ = Bob, $X_{i,j} = 0$, $Y_{i,j} \in_R \{0,1\}$

Call this distribution μ. Say μ is collapsing.

[Figure: example with the special coordinate in position 4]

x_i: 0 1 0 a_i 0 0 0 0 0 1

y_i: 0 0 0 b_i 1 1 0 0 1 0
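The embedding can be simulated to confirm the collapsing property. The sketch below is an illustration under assumptions: the sampler name `sample_collapsing` is hypothetical, and DISJ follows the slides' convention of an OR over coordinates of $x_j \wedge y_j$. Since every non-special coordinate has at least one zero, the inner function reduces to the AND of the embedded bits.

```python
import random


def sample_collapsing(n, a, b, rng):
    """Sample (x, y, s, players) with bits a, b embedded at coordinate s."""
    s = rng.randrange(n)  # random special coordinate S
    x, y = [0] * n, [0] * n
    players = [None] * n
    for j in range(n):
        if j == s:
            x[j], y[j] = a, b
            continue
        players[j] = rng.choice(("Alice", "Bob"))
        if players[j] == "Alice":
            x[j] = rng.randint(0, 1)  # Alice's bit is random, Bob's is 0
        else:
            y[j] = rng.randint(0, 1)  # Bob's bit is random, Alice's is 0
    return x, y, s, players


def disj(x, y):
    """OR over coordinates of (x_j AND y_j), as on the slides."""
    return int(any(xj & yj for xj, yj in zip(x, y)))
```

For any sample drawn this way, `disj(x, y)` equals `a & b`, so a protocol for Gap-Thresh(DISJ) on such inputs also solves Gap-Thresh(AND) on the embedded bits.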

Analyzing the Information

• Let Π be protocol for $\text{Gap-Thresh}(\mathrm{DISJ}(x_1, y_1), \ldots, \mathrm{DISJ}(x_r, y_r))$

• Embedding AND into DISJ: $I(\Pi ; A_1, \ldots, A_r, B_1, \ldots, B_r \mid P, S) \ge IC^{ext}_{\mu,\delta}(\text{Gap-Thresh}(\mathrm{AND})) = \Omega(r)$

• By chain rule, for most i, $I(\Pi ; A_i, B_i \mid A_{<i}, B_{<i}, P, S) = \Omega(1)$

• Maximum likelihood estimation: from Π, $A_{<i}, B_{<i}, P, S$, there is a predictor θ which guesses $A_i, B_i$ with pr. ¼ + Ω(1)

• Holds for Ω(1) fraction of fixings of $A_{<i}, B_{<i}, P_{-i}, S_{-i}$

Normally we would look at information cost. Here we look at an intermediate measure.

Now let’s look at the information cost:

• $I(\Pi ; X_1, \ldots, X_r, Y_1, \ldots, Y_r \mid P, S) = \sum_{i=1}^{r} I(\Pi ; X_i, Y_i \mid X_{<i}, Y_{<i}, P, S)$ (chain rule)

$= \sum_{i=1}^{r} I(\Pi ; X_i, Y_i \mid X_{<i}, Y_{<i}, A_{<i}, B_{<i}, P, S)$ ($X_{<i}, Y_{<i}$ determines $A_{<i}, B_{<i}$ given P, S)

$\ge \sum_{i=1}^{r} I(\Pi ; X_i, Y_i \mid A_{<i}, B_{<i}, P, S)$ (independence of $X_i, Y_i$ from $X_{<i}, Y_{<i}$; conditioning reduces entropy)

Guessing Game

• $I(\Pi ; X_1, \ldots, X_r, Y_1, \ldots, Y_r \mid P, S) \ge \sum_{i=1}^{r} I(\Pi ; X_i, Y_i \mid A_{<i}, B_{<i}, P, S)$

$= \sum_{i=1}^{r} \sum_{a,b,p,s} I(\Pi ; X_i, Y_i \mid P_i, S_i, A_{<i} = a, B_{<i} = b, P_{-i} = p, S_{-i} = s) \cdot \Pr[(A_{<i}, B_{<i}, P_{-i}, S_{-i}) = (a,b,p,s)]$

• Embedding Step

• Consider a protocol Ψ for the following “Guessing Game” on inputs $u \in \{0,1\}^n$, $v \in \{0,1\}^n$

• U and V are chosen from collapsing distribution μ:

- Choose a random coordinate S

- Embed random bits A, B on S

- On other coordinates j, choose a random player $P_j \in \{\text{Alice}, \text{Bob}\}$

- If $P_j$ = Alice, $U_j \in_R \{0,1\}$, $V_j = 0$

- If $P_j$ = Bob, $U_j = 0$, $V_j \in_R \{0,1\}$

[Figure: example with the special coordinate in position 4]

U: 0 1 0 A 0 0 0 0 0 1

V: 0 0 0 B 1 1 0 0 1 0

• Protocol Ψ is correct if one can guess (A,B) w. pr. 1/4 + Ω(1) given Ψ(U,V), S, P

• $CIC^{ext}(\text{Guessing Game}) = \min_{\text{correct } \Psi} I(\Psi ; U, V \mid S, P)$

Lower bounds for this problem imply lower bound for DISJ

Embedding the Guessing Game

• $I(\Pi ; X_1, \ldots, X_r, Y_1, \ldots, Y_r \mid P, S) \ge \sum_{i=1}^{r} I(\Pi ; X_i, Y_i \mid A_{<i}, B_{<i}, P, S)$

$= \sum_{i=1}^{r} \sum_{a,b,p,s} I(\Pi ; X_i, Y_i \mid P_i, S_i, A_{<i} = a, B_{<i} = b, P_{-i} = p, S_{-i} = s) \cdot \Pr[(A_{<i}, B_{<i}, P_{-i}, S_{-i}) = (a,b,p,s)]$

$= \Omega(r) \cdot CIC^{ext}(\text{Guessing Game})$

• Embedding Step

• Create a protocol $\Pi_{i,a,b,p,s}$ for Guessing Game on inputs (U,V)

• Use private randomness, a, b, p, s to sample $X_j, Y_j$ for $j \ne i$

• Set $(X_i, Y_i) = (U,V)$

• Let the transcript of $\Pi_{i,a,b,p,s}$ equal the transcript of Π

• Use predictor θ, given a, b, p, s, $P_i$, $S_i$, and the transcript Π, to guess $A_i, B_i$ w. pr. ¼ + Ω(1), so solve Guessing Game

Show $CIC^{ext}(\text{Guessing Game}) = \Omega(n)$. Proof related to DISJ lower bound.

Distributed Streaming Model

[Figure: coordinator C; players $P_1, P_2, P_3, \ldots, P_k$ with inputs $x_1, x_2, x_3, \ldots, x_k$]

• Each $x_i \in \{-M, -M+1, \ldots, M\}^n$

• Problems on $x = x_1 + x_2 + \ldots + x_k$: sampling, p-norms, heavy hitters, compressed sensing, quantiles, entropy

• Direct Sum of Compositions (generalized to k players): tight bounds for approximating $|x|_2$ and additive ε approximation to entropy

Open Questions

• Direct Sum with Aborts:

$D_{\mu^k,\delta}(f^k) = \Omega(k) \cdot IC^{ext}_{\mu,1/10,\delta/k}(f)$

Instead of $f^k$, when can we improve the standard direct sum theorem for combining operators such as MAJ, OR, etc.?

• Direct Sum of Compositions: for which functions g is

$IC^{ext}(\text{Gap-Thresh}(g(x_1, y_1), \ldots, g(x_r, y_r))) = \Omega(r \cdot n)$?

- See Section 4 of http://arxiv.org/abs/1112.5153 for work on a related Gap-Thresh(XOR) problem (a bit different from Gap-Thresh(DISJ))

- Gap-Thresh(DISJ) problem in follow-up work [WZ]