Teaching Parallelism Panel, SPAA11 Uzi Vishkin,

advertisement

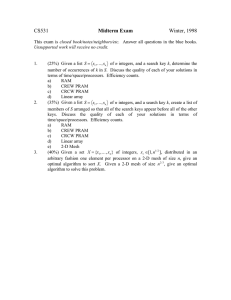

University of Maryland

Dream opportunity
Limited interest in parallel computing evolved into a quest for general-purpose parallel computing in mainstream computers. Alas:
- Only heroic programmers can exploit the vast parallelism in today's mainstream computers.
- Rejection of their parallel programming by most programmers: all but certain.
- Widespread working assumption: programming models for larger-scale and mainstream systems are similar. Not so in serial days.
- Parallel computing has been plagued with programming difficulties. ['Build-first, figure-out-how-to-program-later' → fitting parallel languages to these arbitrary architectures → standardization of language fits → doomed later parallel architectures]
- Working assumption → import parallel computing ills to the mainstream

Shock and awe example
1st parallel programming trauma ASAP: start the parallel programming course with a tile-based parallel algorithm for matrix multiplication. How many tiles are needed to fit 1000X1000 matrices in the cache of a modern PC?
Teach later: OK

Missing Many-Core Understanding
Comparison of many-core platforms for: ease-of-programming & achieving hard speedups. (The Economist)

Summary of my thoughts
1. In class: parallel PRAM algorithmic theory
   - 2nd in magnitude only to serial algorithmic theory
   - Won the "battle of ideas" in the 1980s. Repeatedly challenged without success → no real alternative!
   Is this another case of "the older we get, the better we were"?
2. Parallel programming experience, for concreteness:
   • In homework: extensive programming assignments [the XMT HW/SW/Alg solution; programming for locality is a 2nd-order consideration]
   • Must be trauma-free, providing hard speedups relative to the best serial code
3. Tread carefully
   • Consider non-parallel-computing colleague instructors. Limited line of credit.
   • Future change is certain. Pushing may backfire when cooperation is needed in the future.

Parallel Random-Access Machine/Model
PRAM: n synchronous processors, all having unit-time access to a shared memory.
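To make the PRAM definition concrete, here is a minimal Python sketch (an illustration, not material from the talk) that sequentially simulates the textbook balanced-tree summation on a synchronous PRAM. Each loop iteration stands for one synchronous step in which up to n/2 processors add disjoint pairs concurrently; the function also counts work (total additions, n-1) and depth (number of steps, ceil(log2 n)), the two measures used by the work-depth framework. The name `pram_tree_sum` is hypothetical.

```python
from typing import List, Tuple


def pram_tree_sum(values: List[int]) -> Tuple[int, int, int]:
    """Return (total, work, depth) for balanced-tree summation.

    Each iteration of the while-loop models one synchronous PRAM step:
    every active "processor" i reads two cells written in the previous
    step, and all results go into a fresh array, so no processor sees
    another processor's write within the same step.
    """
    if not values:
        return 0, 0, 0
    current = list(values)
    work = 0   # total number of additions over all steps
    depth = 0  # number of synchronous steps
    while len(current) > 1:
        half = len(current) // 2
        # These `half` additions are independent: one concurrent step.
        nxt = [current[2 * i] + current[2 * i + 1] for i in range(half)]
        if len(current) % 2 == 1:
            nxt.append(current[-1])  # odd leftover element passes through unchanged
        work += half
        depth += 1
        current = nxt
    return current[0], work, depth
```

For example, `pram_tree_sum(list(range(1, 9)))` returns `(36, 7, 3)`: 8 numbers are summed with 7 additions in 3 synchronous steps. Note that the code never says which processor does what; it only lists the operations that may run concurrently in each step.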
Reactions [Important to convey plurality, plus coherent approach(es)]
You've got to be kidding, this is way:
- Too easy
- Too difficult: Why even mention processors? What to do with n processors? How to allocate processors to instructions?

Immediate Concurrent Execution (ICE)
'Work-Depth framework' [SV82], adopted in parallel algorithms texts [J92, KKT01].
ICE is the basis for architecture specs: V., Using simple abstraction to reinvent computing for parallelism, CACM 1/2011 [similar to the role of stored-program & program-counter in architecture specs for serial computing]

Algorithms-aware many-core is feasible
[Figure panels: Algorithms; PRAM-On-Chip HW prototypes; Programming]
- PRAM-On-Chip HW prototypes:
  • 64-core, 75 MHz FPGA of XMT [SPAA98..CF08]
  • 128-core interconnection network, IBM 90nm: 9mm X 5mm, 400 MHz [HotI07]
  • FPGA design → ASIC: IBM 90nm: 10mm X 10mm, 150 MHz
- Toolchain: compiler + simulator [HIPS'11]; rudimentary yet stable compiler
- Programmer's workflow
- Architecture scales to 1000+ cores on-chip

XMT homepage: www.umiacs.umd.edu/users/vishkin/XMT/index.shtml, or search for 'XMT'. Considerable material/suggestions for teaching: class notes, toolchain, lecture videos, programming assignments.

Elements in my education platform
• Identify 'thinking in parallel' with the basic abstraction behind the SV82b work-depth framework. Note: this is the presentation framework in the PRAM texts J92 and KKT01.
• Teach as many PRAM algorithms as the timing and developmental stage of the students permit; extensive 'dry' theory homework: required from graduate students, little from high-school students.
• Students self-study programming in XMTC (standard C plus 2 commands, spawn and prefix-sum) and do demanding programming assignments.
• Provide a programmer's workflow that links the simple PRAM abstraction with XMTC (even tuned) programming. The synchronous PRAM provides ease of algorithm design and of reasoning about correctness and complexity. Multi-threaded programming relaxes this synchrony for implementation.
  Since reasoning directly about the soundness and performance of multi-threaded code is known to be error-prone, the workflow only tasks the programmer with establishing that the code's behavior matches the PRAM-like algorithm.
• Unlike PRAM, XMTC incorporates locality, but only as a 2nd-order consideration. Unlike many approaches, XMTC preempts the harm of locality to programmer productivity.
• If the XMT architecture is presented, do so only at the end of the course; parallel programming is more difficult than serial programming, which… does not require architecture.

Anecdotal Validation (?)
• Breadth-first search (BFS) example, 42 students, joint UIUC/UMD course:
  - <1X speedups using OpenMP on an 8-processor SMP
  - 7X-25X speedups on the 64-processor XMT FPGA prototype [built at UMD]
  What's the big deal of 64 processors beating 8? The silicon area of 64 XMT processors ~= that of 1-2 SMP processors.
• Questionnaire: rank approaches for achieving (hard) speedups. All students but one: XMTC ahead of OpenMP.
• Order-of-magnitude: teachability/learnability (MS, HS & up, SIGCSE'10)
• SPAA'11: >100X speedup on max-flow, relative to 2.5X on GPU (IPDPS'10)
• Fleck/Kuhn: research too esoteric to be reliable → exoteric validation!
Reward alert: try to publish a paper boasting easy-to-obtain results.
EoP (ease of programming): 1. Badly needed. Yet, 2. A lose-lose proposition.

Where to find a machine that effectively supports such parallel algorithms?
• Parallel algorithms researchers realized decades ago that the main reason parallel machines are difficult to program is that bandwidth between processors/memories is limited. Lower bounds [VW85, MNV94].
• [BMM94]:
  1. HW vendors see the cost benefit of lowering the performance of interconnects, but grossly underestimate the programming difficulties and the high software development costs implied.
  2. Their exclusive focus on runtime benchmarks misses critical costs, including: (i) the time to write the code, and (ii) the time to port the code to a different distribution of data or to different machines that require a different distribution of data.
  G. Blelloch, B. Maggs & G. Miller. The hidden cost of low bandwidth communication. In Developing a CS Agenda for HPC (Ed. U. Vishkin). ACM Press, 1994
• Patterson, CACM04: Latency Lags Bandwidth. HP12: as latency improved by 30-80X, bandwidth improved by 10-25KX.
  Isn't this great news: the cost benefit of low bandwidth is drastically decreasing.
• Not so fast. X86Gen Senior Eng, 1/2011: Okay, you do have a 'convenient' way to do parallel programming; so what's the big deal?!
• Commodity HW → decomposition-first programming doctrine → heroic programmers → sigh …
Has the 'bw ease-of-programming opportunity' got lost?

Sociologists of science
• Debates between adherents of different thought styles consist almost entirely of misunderstandings. Members of both parties are talking of different things (though they are usually under an illusion that they are talking about the same thing). They are applying different methods and criteria of correctness (although they are usually under an illusion that their arguments are universally valid and if their opponents do not want to accept them, then they are either stupid or malicious).

Comment on need for breadth of knowledge
Where are your specs? One example: what is your parallel algorithms abstraction?
• 'First-specs then-build' is "not uncommon"... for engineering
• 2 options for architects WRT the example:
  A. 1. Learn parallel algorithms 2. Develop an abstraction that meets EoP 3. Develop specs 4. Build
  B. Start from an abstraction with proven EoP
It is similarly important for algorithms people to learn architecture and applications.
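The BFS assignment cited in the anecdotal validation and the two XMTC commands named in the education-platform list (spawn and prefix-sum) can be illustrated together. The following is a minimal Python sketch, not XMTC code and not taken from the course materials: `spawn`, `ps`, `Cell` and `bfs_levels` are hypothetical names that sequentially emulate, under our assumptions, the XMTC semantics. Here `spawn` runs one virtual thread per index in no fixed order and returns after all finish, and `ps` atomically returns the old value of a shared base and adds an increment to it. Each BFS level is one spawn over the current frontier; a per-vertex prefix-sum "gatekeeper" lets exactly one thread claim each newly discovered vertex, and a second prefix-sum on a shared counter gives that thread a unique slot in the next frontier.

```python
import random
from typing import Callable, Dict, List


class Cell:
    """Hypothetical shared counter standing in for a prefix-sum base."""

    def __init__(self, value: int = 0) -> None:
        self.value = value


def ps(increment: int, base: Cell) -> int:
    """Emulated prefix-sum: return the old base value, then add `increment`.
    In this sequential emulation the read-and-add is trivially atomic."""
    old = base.value
    base.value += increment
    return old


def spawn(low: int, high: int, body: Callable[[int], None], rng: random.Random) -> None:
    """Emulated spawn(low, high): run body(i) once per index in an arbitrary
    (here: shuffled) order; returning models the implicit join."""
    ids = list(range(low, high + 1))
    rng.shuffle(ids)
    for tid in ids:
        body(tid)


def bfs_levels(adj: Dict[int, List[int]], source: int, n: int, seed: int = 0) -> List[int]:
    """Level-synchronous BFS over vertices 0..n-1; returns level[v], or -1 if unreachable."""
    rng = random.Random(seed)
    level = [-1] * n
    gate = [Cell(0) for _ in range(n)]  # one gatekeeper per vertex
    level[source] = 0
    gate[source].value = 1  # the source is already claimed
    frontier = [source]
    depth = 0
    while frontier:
        next_frontier = [0] * n  # each vertex is claimed at most once overall
        size = Cell(0)

        def visit(i: int) -> None:
            u = frontier[i]
            for v in adj.get(u, []):
                # Exactly one thread reads 0 from v's gatekeeper and claims v.
                if ps(1, gate[v]) == 0:
                    level[v] = depth + 1
                    # A second prefix-sum hands out a unique slot in the next frontier.
                    next_frontier[ps(1, size)] = v

        spawn(0, len(frontier) - 1, visit, rng)
        frontier = next_frontier[:size.value]
        depth += 1
    return level
```

Because the thread order is shuffled, the order of vertices inside each frontier can differ between seeds, while the computed levels do not; that order-independence is the kind of property the programmer's workflow asks the programmer to establish against the PRAM-like algorithm. In real XMTC, concurrent prefix-sums to the same base are combined by the hardware; here they simply run one after another.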