Download talk (ppt, 48 slides)

advertisement

Keynote: Parallel Programming for High Schools

Uzi Vishkin, University of Maryland

Ron Tzur, Purdue University

David Ellison, University of Maryland and University of Indiana

George Caragea, University of Maryland

CS4HS Workshop, Carnegie-Mellon University, July 26, 2009

1

Why are we here?

• It’s a time of emerging update to what literacy in CS means:

Parallel Algorithmic Thinking (PAT)

2

Goals

• Nurture your sense of:

– Sense of urgency of shift to parallel within computational thinking

– Get a sense of PAT and of potential student understandings

– Confidence, competence, and enthusiasm in ability to take on the

challenge of promoting PAT in your students

• At the end we hope you’ll say:

– I understand, I want to do it, I can, and I know it will not happen

without me (“irreplaceable member of the Jury”)

3

Outline (RT)

•

•

•

•

Intro: What’s all the fuss about parallelism? (UV)

Teaching-learning activities in XMT (DE)

A teacher’s voice (1): It’s the future … & teachable (ST)

PAT Module: goals, plan, hands-on pedagogy, learning theory

(RT)

•

•

•

•

•

A teacher’s voice (2): XMT Approach/content (ST)

Hands-on: The Merge-Sort Problem (UV)

A teacher’s voice (3): To begin PAT, use XMTC (ST)

How to (start)? (GC)

Q & A (All, 12 min)

4

Intro: Commodity Computer Systems (UV)

•

Serial General-purpose computing:

–

–

•

19462003, 5KHz4GHz.

2004 Clock frequency growth turns flat

2004 Onward

–

–

–

–

Parallelism: ‘only game in town’

If you want your program to run significantly faster … you’re going

to have to parallelize it

General-purpose computing goes parallel

#Transistors/chip 19802011: 29K30B!

–

#”cores”: ~dy-2003

Intel Platform 2015, March05

5

Intro: Commodity Computer Systems

•

40 Years of Parallel Computing

–

Never a successful general-purpose parallel computer (easy to

program & good speedups)

–

Grade from NSF Blue-Ribbon Panel on Cyberinfrastructure: ‘F’ !!!

“Programming existing parallel computers is as intimidating and

time consuming as programming in assembly language”

6

Intro: Second Paradigm Shift - Within Parallel

• Existing parallel paradigm: “Decomposition-First”

– Too painful to program

• Needed Paradigm: Express only “what can be done in parallel”

– Natural (parallel) algorithm: Parallel Random-Access Model (PRAM)

– Build both machine (HW) and programming (SW) around this model

What could I do in parallel

at each step assuming

unlimited hardware

Serial Paradigm

#

ops

..

time

Time = Work

..

Natural (Parallel) Paradigm

.

# ..

.

ops

..

..

..

time

Work = total #ops

Time << Work

7

Middle School Summer Camp Class Picture,

July’09 (20 of 22 students)

8

Demonstration: Exchange Problem (DE)

How to exchange the contents of memory locations A & B

I

A

B

2

5

Let’s refer to input row I as our input state

where the values are currently A=2 and B=5

How can we direct the computer one operation at a

time and create a serial algorithm?

Let’s try! Hint: Operations include assigning values.

9

Let’s Look at our First Step

A

B

I

2

5

X

1

2

5

2

Our first step [ X:=A ]

10

Let’s Look at our Second Step

A

B

I

2

5

X

1

2

5

2

2

5

5

Our second step [B:=A ]

11

Let’s Look at our Third Step

A

B

I

2

5

X

1

2

2

5

2

5

5

3

5

2

Our third step [ B:=X ]

How many

steps? 3 and pseudo programming code:

Our first

algorithm*

1. X:=AHow many operations? 3

2. A:=B

What’s the connection between the number

Equal

3. B:=Xof steps & operations?

* Serial exchange, 3 steps, 3 operations, 1 working memory space

How much working memory space consumed? 1 Space

Hands-on Challenge: Can we exchange the contents of A (=2)

and B (=5) in fewer steps?

12

I

A

B

2

5

X

Y

What is the hint in this figure?

13

First Step in a parallel algorithm

A

B

I

2

5

X

Y

1

2

5

2

5

X:=A and simultaneously Y:=B

Can you anticipate the next step?

14

Second step in a parallel algorithm

A

B

I

2

5

X

Y

1

2

5

2

5

2

5

2

X:=A and Y:=B

A:=Y and simultaneously B:=X

How many steps? 2

How many operations? 4

How much working memory space consumed?

2

Can you make any generalizations with respect to

serial and parallel problem solving?

Parallel algorithms tend to

X:=A and Y:=B

involve fewer Steps, but may

cost more operations and may

A:=Y and B:=X

consume more working

memory.

15

Array Exchange : A and B as arrays with indices 0 – 9 and input state as shown. Using a single working

memory space X, devise an algorithm to exchange the contents of cells with the same index (e.g.,

replace A0=22 with B0=12) . Consider number of steps, operations.

Index No.

12

A

22

B

X

12

X

Step 1 X:=A[0]

12

13

Step 2 A[0]:=B[0]

0

12

22

22

12

1

13

23

2

14

24

Step 3 B[0]:=X

3

15

25

Step 4 X:=A[1]

4

16

26

5

17

27

6

18

28

7

19

29

8

20

30

12

9

For i=0 to 9

Do

X:=A[i]

A[i]:=B[i]

B[i]:=X

end

21

31

How many steps needed to complete the exchange? 30

How many operations? 30

How much working memory space? 1

Your homework asks for the general case of arrays A and B of length n

16

Array Exchange Problem: Can you parallelize it?

Index No.

A

X

B

0

12

22

12

22

12

1

13

23

13

23

13

2

14

24

15

25

14

24

14

25

15

16

5

16

26

17

27

6

28

18

18

28

7

19

29

18

19

8

20

30

31

21

20

30

20

21

31

21

3

4

9

15

17

26

16

27

17

29

19

Step 1 X[0-9]:=A[0-9]

Step 2 A[0-9]:=B[0-9]

Step 3 B[0-9]:=X[0-9]

Parallel algorithm

For i=0 to n-1

pardo

X(i):=A(i)

A(i):=B(i)

B(i):=X(i)

end

XMTC Program

spawn(0,n-1){

var x

x:=A( $ );

A( $ ):=B( $ );

B( $ ):=x

}

How many steps? 3

How many operations? 30

How much working memory space consumed? 10

Answer the above questions for the general case of arrays A and B of length n?

3 steps, 3n operations, n spaces

17

Array Exchange Algorithm: A highly parallel approach

Index No.

A

X

Y

B

0

12

22

12

22

22

12

1

13

23

14

24

13

23

14

24

23

13

24

14

15

25

16

26

15

25

16

26

25

15

26

16

2

3

4

5

17

27

18

28

17

18

27

28

27

17

28

18

19

29

8

19

29

20

30

20

30

29

19

30

20

9

21

31

21

31

31

21

6

7

Step 1 X[0-9]:=A[0-9]

And Y[0-9]:=B[0-9]

Step 2 A[0-9]:=Y[0-9]

And B[0-9]:=X[0-9]

For i=1 to n

pardo

X(i):=A(i) and B(i):=X(i)

Y(i):=B(i) and A(i):=Y(i)

end

How many steps? 2

How many operations? 40

How much working memory space consumed? 20

Answer the above questions for the general case of arrays A and B of length

n?

2 steps , 4n operations, 2n spaces

18

Intro: Second Paradigm Shift (cont.)

• Late 1970s THEORY

– Figure out how to think algorithmically in parallel

– Huge success. But

• 1997 Onward: PRAM-On-Chip @ UMD

– Derive specs for architecture; design and build

• Above premises contrasted with:

– “Build-first, figure-out-how-to-program-later” approach

J. Hennessy: “Many of the early [parallel] ideas were motivated by

observations of what was easy to implement in the hardware rather than

what was easy to use”

19

Pre Many-Core Parallelism: Three Thrusts

Improving single-task completion time for general-purpose

parallelism was not the main target of parallel machines

1. Application-specific:

–

–

–

computer graphics

Limiting origin

GPUs: great performance if you figure out how

2. Parallel machines for high-throughput (of serial programs)

–

–

Only choice for “HPC”Language standards, but many issues (F!)

HW designers (that dominate vendors): YOU figure out how to

program (their machines) for locality.

20

Pre Many-Core Parallelism: 3 Thrusts (cont.)

• Currently, how future computer will look is unknown

– SW Vendor impasse: What can a non-HW entity do without ‘betting on

the wrong horse’?

• Needed - successor to Pentium for multi-core area that:

– Is easy to program (hence, learning – hence, teaching)

– Gives good performance with any amount of parallelism

– Supports application programming (VHDL/Verilog, OpenGL, MATLAB)

AND performance programming

– Fits current chip technology and scales with it (particularly – strong

speed-ups for single-task completion time)

• Hindsight is always 20/20:

– Should have used the benchmark of Programmability

TEACHABILITY !!!

21

Pre Many-Core Parallelism: 3 Thrusts (cont.)

3. PRAM algorithmic theory

–

Started with a clean slate target:

• Programmability

• Single-task completion time for general-purpose parallel computing

–

Currently: the theory common to all parallel approaches

• necessary level of understanding parallelism;

As simple as it gets; Ahead of its time: avant-garde

–

–

1990s Common wisdom (LOGP): never implementable

UMD Built: eXplicit Multi-Threaded (XMT) parallel computer

• 100x speedups for 1000 processors on chip

• XMTC – programming language

• Linux-based simulator – download to any machine

–

Most importantly: TAUGHT IT

• Graduate seniors freshmen high school middle school

• Reality check: The human factor YOU

Teachers Students

22

One Teacher’s Voice (RT)

• Mr. Shane Torbert (could not join us - sister’s getting married!)

• Thomas Jefferson (TJ) High School

• Two years of trial

• Interview question: Why you gave Vishkin’s XMT a try?

• Observe video segment #1

http://www.umiacs.umd.edu/users/vishkin/TEACHING/SHANETORBERT-INTERVIEW7-09/01 Shane Why XMT.m4v

(It requires either some iTune installation or other m4v player)

23

Summary of Shane’s Thesis:

It’s the Future … and Teachable !!!

24

Teaching PAT with XMT-C

• Overarching goal:

– Nurture a (50-year) generation of CS enthusiasts ready to think/work

in parallel (programmers, developers, engineers, theoreticians, etc.)

•

Module goals for student learning

– Understand what are parallel algorithms

– Understand the differences, and links, between parallel and serial

algorithms (serial as a special case of parallel - single processor)

– Understand and master how to:

• Analyze a given problem into the shortest sequence of steps within which

all possible concurrent operations are performed

• Program (code, run, debug, improve, etc.) parallel algorithms

– Understand and use measures of algorithm efficiency

• Run-time

• Work – distinguish number of operations vs. number of steps

• Complexity

25

Teaching PAT with XMT-C (cont.)

• Objectives - students will be able to:

–

–

–

–

–

Program parallel algorithms (that run) in XMTC

Solve general-purpose, genuine parallel problems

Compare and choose best parallel (and serial) algorithms

Explain why an algorithm is serial/parallel

Propose and execute reasoned improvements to their own

and/or others’ parallel algorithms

– Reason about correctness of algorithms: Why an algorithm

provides a solution to a given problem?

26

Hands-0n: The Bill Gates Intro Problem

(from Baltimore Polytechnic Institute)

• Please form small groups (3-4)

• Consider Bill Gates, the richest person on earth

– Well, he can hire as many helpers for any task in his life …

• Suggest an algorithm to accomplish the following morning

tasks in the least number of steps and go out to work

Start = Mr. Gates in pajama gown

Fasten belt

Put on shirt

Put on right sock

Put on left shoe

Put on underpants

Remove pajama gown

Put on left sock

Put on right shoe

Put on undershirt

Tuck shirt into pants

Put on pants

27

10-Year Old Solves Bill Gates …

• Play tape

28

A Solution for Bill Gates

Gates in pajama gown

Remove pajama gown

Put on right sock

Put on underpants

Put on left sock

Put on pants

Put on right shoe

I

Put on undershirt

Put on shirt

Put on left shoe

Tuck shirt into pants

Fasten belt

1

2

3

4

5

Moral: Parallelism introduces both constraints and opportunities

Constraints: We can’t just assume we can accomplish everything at once!

Opportunities: Can be much faster than serial!

[5 parallel steps versus 11 serial steps]

29

Pedagogical Considerations (1)

• In your small groups discuss:

How might solving the Bill Gates problem help students in

learning PAT?

Will you use it as an intro to a PAT module? Why?

• Be ready to share your ideas with the whole group

• Whole group discussion of Bill Gates problem to initiate PAT

30

A Brain-based Learning Theory

• Understanding: anticipation and reasoning about invariant

relationship between activity and its effects (AER)

• Learning: transformation in such anticipation, commencing

with available and proceeding to intended

• Mechanism: Reflection (two types) on activity-effect

relationship (Ref*AER)

– Type-I: comparison between goal and actual effect

– Type-II: comparison across records of experiences/situations in which

AER has been used consistently

• Stages:

– Participatory (provisional, oops), Anticipatory (transfer enabling,

succeed)

• For more, see www.edci.purdue.edu/faculty_profiles/tzur/index.html

31

A Teacher’s Voice: XMT Approach/Content

• Pay attention to his emphasis on student development of

anticipation of run-time using “complexity” analysis (deep

level of understanding even for serial thinking)

• Play video segments #2 and #3 (5:30 min)

http://www.umiacs.umd.edu/users/vishkin/TEACHING/SHANETORBERT-INTERVIEW7-09/02 Shane Ease of Use.m4v

http://www.umiacs.umd.edu/users/vishkin/TEACHING/SHANETORBERT-INTERVIEW7-09/03 Shane Content Focus.m4v

• Shane’s suggested first trial with teaching this material:

- Where: your CS AP class (you most likely ask when …)

- When: Between the AP exam and the end of the school year.

32

PAT Module Plan

• Intro Tasks: Create informal algorithmic solutions for problems

students can relate to; parallelize

– Bill Gates; Way out of a maze; train a dog to fetch a ball; standing

blindfolded in line, the toddler problem, building a sand castle, etc.

• Discussion:

– What is Serial? Parallel? How do they differ? Advantages and

disadvantages of both (tradeoffs)? Steps vs. operations? Breadth-first vs.

Depth-first searches?

• Establish XMT environment:

– Installation (Linux, Simulator)

– Programming syntax (Logo? C? XMT-C?) – “Hello World” and beyond …

• Algorithms for Meaningful Problems

– For each problem: create parallel and serial algorithms that solve it;

analyze and compare them (individual, pairs, small groups, whole class

– Revisit discussion of how serial and parallel differ

33

Problem Sequence

•

•

•

•

•

Exchange problems

Ranking problems

Summation and Prefix-Sums (application – compaction)

Matrix multiplication problems

Sorting problems (including merge-sort , integer-sort and

sample-sort)

• Selection problems (finding the median)

• Minimum problems

• Nearest-one problems

• See also:

–

–

–

–

www.umiacs.umd.edu/users/vishkin/XMT/index.shtml

www.umiacs.umd.edu/users/vishkin/XMT/index.shtml#tutorial

www.umiacs.umd.edu/users/vishkin/XMT/sw-release.html

www.umiacs.umd.edu/users/vishkin/XMT/teaching-platform.html

34

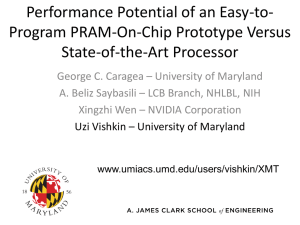

PRAM-On-Chip Silicon: 64-processor, 75MHz prototype

FPGA Prototype built n=4,

#TCUs=64, m=8, 75MHz.

The system consists of 3 FPGA chips:

2 Virtex-4 LX200 & 1 Virtex-4 FX100(Thanks

Xilinx!)

Block diagram of XMT

Some experimental results (UV)

• AMD Opteron 2.6 GHz, RedHat

Linux Enterprise 3, 64KB+64KB L1

Cache, 1MB L2 Cache (none in

XMT), memory bandwidth 6.4 GB/s

(X2.67 of XMT)

• M_Mult was 2000X2000 QSort

was 20M

• XMT enhancements: Broadcast,

prefetch + buffer, non-blocking

store, non-blocking caches.

XMT Wall clock time (in seconds)

App.

M-Mult

QSort

XMT Basic XMT Opteron

179.14

63.7

113.83

16.71

6.59

2.61

Assume (arbitrary yet conservative)

ASIC XMT: 800MHz and 6.4GHz/s

Reduced bandwidth to .6GB/s and projected back by

800X/75

XMT Projected time (in seconds)

App.

M-Mult

QSort

XMT Basic XMT Opteron

23.53

12.46 113.83

1.97

1.42

2.61

- Simulation of 1024 processors: 100X on standard benchmark suite for VHDL gatelevel simulation. for 1024 processors [Gu-V06]

-Silicon area of 64-processor XMT, same as 1 commodity processor (core)

36

Hands-On Example: Merging

•

Input:

–

–

–

•

Two arrays A[1. . n], B[1. . N]

Elements from a totally ordered domain S

Each array is monotonically non-decreasing

Merging task (Output):

–

Map each of these elements into a monotonically

non-decreasing array C[1..2n]

Serial Merging algorithm

SERIAL − RANK(A[1 . . ];B[1. .])

Starting from A(1) and B(1), in each round:

1. Compare an element from A with an element of B

2. Determine the rank of the smaller among them

Complexity: O(n) time (hence, also O(n) work...)

Hands-on: How will you parallelize this algorithm?

A

B

4

6

8

9

16

17

18

19

20

21

23

25

27

29

31

32

1

2

3

5

7

10

11

12

13

14

15

22

24

26

28

30

37

Partitioning Approach

Input size for a problem=n; Design a 2-stage parallel algorithm:

• Partition the input in each array into a large number, say p, of

independent small jobs

• Size of the largest small job is roughly n/p

• Actual work - do the small jobs concurrently, using a separate

(possibly serial) algorithm for each

“Surplus-log” parallel algorithm for Merging/Ranking

for 1 ≤ i ≤ n pardo

• Compute RANK(i,B) using standard binary search

• Compute RANK(i,A) using binary search

Complexity: W=O(n log n), T=O(log n)

38

Middle School Students Experiment with

Merge/Rank

39

Linear work parallel merging: using a single spawn

Stage 1 of algorithm: Partitioning for 1 ≤ i ≤ n/p pardo [p <= n/log and p | n]

• b(i):=RANK(p(i-1) + 1),B) using binary search

• a(i):=RANK(p(i-1) + 1),A) using binary search

Stage 2 of algorithm: Actual work

Observe Overall ranking task broken into 2p independent “slices”.

Example of a slice

Start at A(p(i-1) +1) and B(b(i)).

Using serial ranking advance till:

Termination condition

Either some A(pi+1) or some B(jp+1) loses

Parallel program 2p concurrent threads

using a single spawn-join for the whole

algorithm

Example Thread of 20: Binary search B.

Rank as 11 (index of 15 in B) + 9 (index of

20 in A). Then: compare 21 to 22 and rank

21; compare 23 to 22 to rank 22; compare 23

to 24 to rank 23; compare 24 to 25, but terminate

since the Thread of 24 will rank 24.

40

Linear work parallel merging (cont’d)

Observation 2p slices. None has more than 2n/p elements

(not too bad since average is 2n/2p=n/p elements)

Complexity Partitioning takes W=O(p log n), and T=O(log n) time, or

O(n) work and O(log n) time, for p <= n/log n

Actual work employs 2p serial algorithms, each takes O(n/p) time

Total W=O(n), and T=O(n/p), for p <= n/log n

IMPORTANT: Correctness & complexity of parallel programs

Same as for algorithm

This is a big deal. Other parallel programming approaches do not

have a simple concurrency model, and need to reason w.r.t. the

program

41

A Teacher’s Voice: Start PAT with XMT

• Observe Shane’s video segment #4

http://www.umiacs.umd.edu/users/vishkin/TEACHING/SHANETORBERT-INTERVIEW7-09/04 Shane Word to Teachers.m4v

42

How to (start)? (GC)

•

•

Contact us! ! !

Observe online teaching sessions (more to be added soon)

–

•

Download and install simulator

•

•

•

Read manual

Google XMT or www.umiacs.umd.edu/users/vishkin/XMT/index.shtml

Solve a few problems on your own

•

•

•

Contact us

Try programming a parallel algorithm in XMTC for prefix-sums …

Contact us

Follow teaching plan (slides #29-30)

– Did we already say: CONTACT US ?!?! (entire team waiting

for your call …)

43

???

44

Additional Intro Problems

• See next slides

45

The Year-Old Toddler Problem:

Initial input state: Sleeping toddler in crib

End: Toddler ready to go to daycare …

Analyze for parallelism: Steps and operations

Prepare mush

Pack toddler’s lunch

Bring toddler to kitchen

Put on coat

Put on right sock

Put on shirt

Tuck shirt into pants

Sleeping toddler in crib

Wake up toddler and take out of crib

Put on left sock

Put on left shoe

Put on pants

Put on right shoe

Remove pajamas

Put toddler in high chair and spoon mush

Take toddler to car and put into car seat

sleeping toddler in crib

Remove diaper, clean bottom, and put on

clean diaper (must be done after mushing)

46

How can we direct the

computer to search this maze

and help the cat get to the

milk a parallel algorithm

(depth first search?)

0

Back to A

A

B

C

H

Overmight

We

to E imagine

locations

Back to A in the maze that

force a decision and call

Over

F

theseto

junctions.

Back

to A say the

We might

Over

to G is forced

computer

to

make

Back

to Aa decision

at junction A

Over to H

D

E

Backprogresses

And

to A

in a left

handed fashion to B…

Until it reaches a

blockage C…

And must return to B…

G

F

And proceed to the next

junction D but that returns

to itself…

Back to B

47

Back-up slide: FPGA 64-processor, 75MHz prototype

Specs and aspirations

n=m

64

# TCUs 1024

- Multi GHz clock rate

FPGA Prototype built n=4,

#TCUs=64, m=8, 75MHz.

The system consists of 3 FPGA chips:

2 Virtex-4 LX200 & 1 Virtex-4 FX100(Thanks

Xilinx!)

- Cache coherence defined away: Local cache

only at master thread control unit (MTCU)

- Prefix-sum functional unit (F&A like) with

global register file (GRF)

- Reduced global synchrony

- Overall design idea: no-busy-wait FSMs

Block diagram of XMT