Some Data Mining Challenges Learned From Bioinformatics & Actions Taken

advertisement

Some Data Mining Challenges Learned

From Bioinformatics & Actions Taken

Limsoon Wong

National University of Singapore

Bertinoro, Nov 2005

Plan

• Bioinformatics Examples

– Treatment prognosis of DLBC lymphoma

– Prediction of translation initiation site

– Prediction of protein function from PPI data

• What have we learned from these projects?

• What have I been looking at recently?

– Statistical measures beyond frequent items

– Small changes that have large impact

– Evolution of pattern spaces

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Example #1:

Treatment

Prognosis for

DLBC Lymphoma

Ref: H. Liu et al, “Selection of

patient samples and genes for

outcome prediction”, Proc.

CSB2004, pages 382--392

Bertinoro, Nov 2005

Image credit: Rosenwald et al, 2002

Diffuse Large B-Cell Lymphoma

• DLBC lymphoma is the

most common type of

lymphoma in adults

• Can be cured by

anthracycline-based

chemotherapy in 35 to 40

percent of patients

DLBC lymphoma

comprises several

diseases that differ in

responsiveness to

chemotherapy

Bertinoro, Nov 2005

• Intl Prognostic Index (IPI)

– age, “Eastern Cooperative

Oncology Group” Performance

status, tumor stage, lactate

dehydrogenase level, sites of

extranodal disease, ...

• Not very good for

stratifying DLBC

lymphoma patients for

therapeutic trials

Use gene-expression

profiles to predict outcome

of chemotherapy?

Copyright 2005 © Limsoon Wong

Knowledge Discovery from Gene

Expression of “Extreme” Samples

240

samples

“extreme”

sample

selection:

< 1 yr vs > 8 yrs

knowledge

discovery

from gene

expression

47 shortterm survivors

26 longterm survivors

7399

genes

80

samples

84

genes

T is long-term if S(T) < 0.3

T is short-term if S(T) > 0.7

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Kaplan-Meier Plot for 80 Test Cases

Low risk

High risk

p-value of log-rank test: < 0.0001

Risk score thresholds: 0.7, 0.3

Bertinoro, Nov 2005

No clear difference on

the overall survival of the

80 samples in the

validation group of DLBCL

study, if no training

sample selection

conducted

Copyright 2005 © Limsoon Wong

Example #2: Protein Translation

Initiation Site Recognition

Ref: L. Wong et al., “Using

feature generation and feature

selection for accurate

prediction of translation

initiation sites”, GIW 13:192-200, 2002

Bertinoro, Nov 2005

A Sample cDNA

• What makes the second ATG the TIS?

299 HSU27655.1 CAT U27655 Homo sapiens

CGTGTGTGCAGCAGCCTGCAGCTGCCCCAAGCCATGGCTGAACACTGACTCCCAGCTGTG

CCCAGGGCTTCAAAGACTTCTCAGCTTCGAGCATGGCTTTTGGCTGTCAGGGCAGCTGTA

GGAGGCAGATGAGAAGAGGGAGATGGCCTTGGAGGAAGGGAAGGGGCCTGGTGCCGAGGA

CCTCTCCTGGCCAGGAGCTTCCTCCAGGACAAGACCTTCCACCCAACAAGGACTCCCCT

80

160

240

• Approach

– Training data gathering

– Signal generation

• k-grams, distance, domain know-how, ...

– Signal selection

• Entropy, 2, CFS, t-test, domain know-how...

– Signal integration

• SVM, ANN, PCL, CART, C4.5, kNN, ...

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

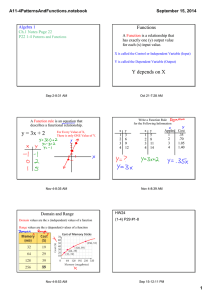

Too Many Signals Feature Selection

• For each value of k, there

are 4k * 3 * 2 k-grams

• If we use k = 1, 2, 3, 4, 5,

we have 24 + 96 + 384 +

1536 + 6144 = 8184

features!

• This is too many for most

machine learning

algorithms

Bertinoro, Nov 2005

• Choose a signal w/ low

intra-class distance

• Choose a signal w/ high

inter-class distance

• E.g.,

Copyright 2005 © Limsoon Wong

Sample k-grams Selected by CFS

Kozak consensus

Leaky scanning

• Position –3

• in-frame upstream ATG

• in-frame downstream

– TAA, TAG, TGA,

– CTG, GAC, GAG, and GCC

Stop codon

Codon bias?

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Validation Results (on Chr X and Chr 21)

Our

method

ATGpr

• Using top 100 features selected by entropy and

trained on Pedersen & Nielsen’s

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Level-1 neighbour

Example #3: Protein

Function Prediction

from Protein Interactions

Bertinoro, Nov 2005

Level-2 neighbour

An illustrative Case of

Indirect Functional Association?

SH3 Proteins

SH3-Binding

Proteins

• Is indirect functional association plausible?

• Is it found often in real interaction data?

• Can it be used to improve protein function

prediction from protein interaction data?

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Freq of Indirect Functional Association

• 59.2% proteins in dataset

share some function with

level-1 neighbours

YAL012W

|1.1.6.5

|1.1.9

YJR091C

YMR300C

YPL149W

YBR055C

YMR101C

|1.3.16.1

|16.3.3

|1.3.1

|14.4

|20.9.13

|42.25

|14.7.11

|11.4.3.1

|42.1

YPL088W

YBR293W

|2.16

|1.1.9

|16.19.3

|42.25

|1.1.3

|1.1.9

YDR158W

|1.1.6.5

|1.1.9

YBL072C

|12.1.1

YBR023C

YLR330W

YBL061C

|10.3.3

|32.1.3

|34.11.3.7

|42.1

|43.1.3.5

|43.1.3.9

|1.5.1.3.2

|1.5.4

|34.11.3.7

|41.1.1

|43.1.3.5

|43.1.3.9

|1.5.4

|10.3.3

|18.2.1.1

|32.1.3

|42.1

|43.1.3.5

|1.5.1.3.2

YLR140W

• 27.9% share some function

with level-2 neighbours

but share no function with

level-1 neighbours

YMR047C

|11.4.2

|14.4

|16.7

|20.1.10

|20.1.21

|20.9.1

YKL006W

YOR312C

|12.1.1

|16.3.3

|12.1.1

Bertinoro, Nov 2005

YPL193W

YDL081C

YDR091C

YPL013C

|12.1.1

|12.1.1

|1.4.1

|12.1.1

|12.4.1

|16.19.3

|12.1.1

|42.16

Copyright 2005 © Limsoon Wong

Over-Rep of Functions in

L1 & L2 Neighbours

Fraction of Neighbours w ith Functional

Sim ilarity

Sensitivity vs Precision

1

0.6

0.5

Fraction

0.4

0.3

0.2

L1 - L2

0.9

L2 - L1

0.8

L1 ∩ L2

0.7

Sensitivity

L1 - L2

L2 - L1

L1 ∩ L2

L3 - (L1 U L2)

All Proteins

0.6

0.5

0.4

0.3

0.2

0.1

0.1

0

≥0.1 ≥0.2 ≥0.3 ≥0.4 ≥0.5 ≥0.6 ≥0.7 ≥0.8 ≥0.9

Similarity

Bertinoro, Nov 2005

0

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

Precision

Copyright 2005 © Limsoon Wong

Performance Evaluation

• Prediction performance improves after

incorporation of L1, L2, & interaction reliability info

Informative FCs

1

NC

Chi²

PRODISTIN

Weighted Avg

Weighted Avg R

0.9

0.8

Sensitivity

0.7

0.6

0.5

0.4

0.3

0.2

0.1

0

0

Bertinoro, Nov 2005

0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9

Precision

1

Copyright 2005 © Limsoon Wong

What Have We

Learned?

Bertinoro, Nov 2005

Some of those

“techniques”

frequently needed

in analysis of

biomedical data are

insufficiently

studied by current

data mining

researchers

Bertinoro, Nov 2005

• Recognizing what

samples are relevant

and what are not

• Recognizing what

features are relevant

and what are not &

handling missing or

incorrect values

• Recognizing trends,

changes, and their

causes

Copyright 2005 © Limsoon Wong

Action #1:

Going Beyond

Frequent Patterns to

Recognize What

Features Are

Relevant and What

Are Not

Bertinoro, Nov 2005

Going Beyond Frequent Patterns

• Statisticians use a battery

of “interestingness”

measures to decide if a

feature/factor is relevant

PD ,ed

• Odds ratio

PD , d

OR ( P, D )

PD ,e

PD ,

• Examples:

– Odds ratio

– Relative risk

– Gini index

– Yule’s Q & Y

– etc

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Challenge: Frequent Pattern Mining

Relies on Convexity for Efficiency, But …

• Proposition:

Let SkOR(ms,D) = { P

F(ms,D) | OR(P,D) k}.

Then SkOR(ms,D) is not

convex

• i.e., the space of odds ratio

patterns is not convex.

Ditto for many other types

of patterns

{A,B}:1

{A,B,C}:3

{A}:∞

OR search space

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Solution: Luckily They Become Convex

When Decomposed Into Plateaus

• Theorem:

Let Sn,kOR(ms,D) = { P

F(ms,D) | PD,ed=n, OR(P,D)

k}. Then Sn,kOR(ms,D) is

convex

The space of odds ratio

patterns becomes convex

when stratified into

plateaus based on support

levels on positive (or

negative) dataset

Bertinoro, Nov 2005

• Proposition:

Let Q ∊[P]D, then

OR(Q,D)=OR(P,D)

The plateau space can be

further divided into convex

equivalence classes on the

whole dataset

The space of equivalence

classes can be concisely

represented by generators

and closed patterns

Copyright 2005 © Limsoon Wong

Performance

• Mining odds ratio and

relative patterns depends

on GC-growth

• GC-Growth is mining both

generators and closed

patterns

• It is comparable in speed

to the fastest algorithms

that mined only closed

patterns

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Action #2: Tipping

Factors---The Small

Changes With Large

Impact

Bertinoro, Nov 2005

Tipping Events

• Given a data set, such as

those related to human

health, it is interesting to

determine impt cohorts

and impt factors causing

transition betw cohorts

Tipping events

Tipping factors are “action

items” for causing

transitions

Bertinoro, Nov 2005

• “Tipping event” is two or

more population cohorts

that are significantly

different from each other

• “Tipping factors” (TF) are

small patterns whose

presence or absence

causes significant

difference in population

cohorts

• “Tipping base” (TB) is the

pattern shared by the

cohorts in a tipping event

• “Tipping point” (TP) is the

combination of TB and a

TF

Copyright 2005 © Limsoon Wong

Impact-To-Cost-Ratio of Tipping Points

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Some Simple Results Useful

For Constructing TPs

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Action #3: Evolution of

Pattern Spaces---How

Do They Change When

the Sample Space

Changes?

Bertinoro, Nov 2005

Impact of Adding New Transactions on

Key and Closed Patterns

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Impact of

Removing

Items From All

Transactions

Bertinoro, Nov 2005

Copyright 2005 © Limsoon Wong

Acknowledgements

• DLBC Lymphoma:

– Jinyan Li, Huiqing Liu

• Translation Initiation:

– Fanfan Zeng, Roland

Yap

– Huiqing Liu

• Protein Function

Prediction:

– Kenny Chua, Ken Sung

Bertinoro, Nov 2005

• Odds Ratio & Relative Risk

– Mengling Feng, Yap-Peng

Tan,

– Haiquan Li, Jinyan Li

• Tipping Points:

– Guimei Liu, Jinyan Li

– Guozhu Dong

• Pattern Space Evolution:

– Mengling Feng, Yap-Peng

Tan

– Guozhu Dong

– Jinyan Li

Copyright 2005 © Limsoon Wong