Slides 7


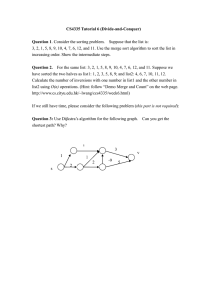

Chapter 7 (Part 2)

Sorting Algorithms

Merge Sort

Merge Sort

Basic Idea

Example

Analysis

http://www.geocities.com/SiliconValley/Program/2864/File/Merge1/mergesort.html


Idea

Two sorted arrays holding N elements in total can be merged in linear time, with at most N - 1 comparisons.

Given an array to be sorted,

consider separately

its left half and its right half,

sort them and then merge them.
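The merge step described above can be sketched in Java as follows. This is only an illustration: the class name MergeTwoSorted and the comparisons counter are assumptions added here, not part of the slides. The counter makes it easy to see that the number of comparisons never exceeds N - 1 for N elements in total.

```java
import java.util.Arrays;

// Hypothetical helper class, not part of the original slides.
final class MergeTwoSorted {
    // Number of element comparisons made by the most recent merge call.
    static int comparisons;

    // Merges two already-sorted arrays into a new sorted array.
    static int[] merge(int[] x, int[] y) {
        int[] out = new int[x.length + y.length];
        int i = 0, j = 0, k = 0;
        comparisons = 0;
        // Repeatedly copy the smaller of the two front elements.
        while (i < x.length && j < y.length) {
            comparisons++;
            out[k++] = (x[i] <= y[j]) ? x[i++] : y[j++];
        }
        // One input is exhausted: copy the rest of the other without comparing.
        while (i < x.length) out[k++] = x[i++];
        while (j < y.length) out[k++] = y[j++];
        return out;
    }

    public static void main(String[] args) {
        int[] merged = merge(new int[] {1, 4, 9}, new int[] {2, 3, 10, 12});
        System.out.println(Arrays.toString(merged) + " comparisons=" + comparisons);
    }
}
```

Each pass through the first loop places one element with one comparison, and the last element is always placed without a comparison, which is where the N - 1 bound comes from.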


Characteristics

Recursive algorithm.

Runs in O(NlogN) worst-case running time.

Where is the recursion?

Each half is an array that can be sorted

using the same algorithm - divide into two,

sort separately the left and the right halves,

and then merge them.


Merge Sort Code

void merge_sort(int[] a, int left, int right)
{
    if (left < right) {
        int center = (left + right) / 2;
        merge_sort(a, left, center);         // sort the left half
        merge_sort(a, center + 1, right);    // sort the right half
        merge(a, left, center + 1, right);   // merge the two sorted halves
    }
}
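The slides do not show the merge routine that merge_sort calls. A minimal runnable sketch is given below, assuming that merge(a, left, rightStart, rightEnd) merges the sorted runs a[left..rightStart-1] and a[rightStart..rightEnd] through a temporary array. The class name MergeSortSketch, the parameter names, and the use of a fresh temporary array on every call are assumptions made for illustration.

```java
import java.util.Arrays;

// Hypothetical class: merge_sort follows the slide, merge is an assumed helper.
final class MergeSortSketch {
    static void merge_sort(int[] a, int left, int right) {
        if (left < right) {
            int center = (left + right) / 2;
            merge_sort(a, left, center);
            merge_sort(a, center + 1, right);
            merge(a, left, center + 1, right);
        }
    }

    // Merges the sorted runs a[left..rightStart-1] and a[rightStart..rightEnd].
    static void merge(int[] a, int left, int rightStart, int rightEnd) {
        int[] tmp = new int[rightEnd - left + 1];   // the extra memory merge sort needs
        int i = left, j = rightStart, k = 0;
        while (i < rightStart && j <= rightEnd) {
            tmp[k++] = (a[i] <= a[j]) ? a[i++] : a[j++];
        }
        while (i < rightStart) tmp[k++] = a[i++];   // leftover of the left run
        while (j <= rightEnd) tmp[k++] = a[j++];    // leftover of the right run
        System.arraycopy(tmp, 0, a, left, tmp.length);
    }

    public static void main(String[] args) {
        int[] data = {24, 13, 26, 1, 2, 27, 38, 15};
        merge_sort(data, 0, data.length - 1);
        System.out.println(Arrays.toString(data));
    }
}
```

A production version would typically allocate one temporary array up front and reuse it, rather than allocating inside every merge call.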


Analysis of Merge Sort

Assumption: N is a power of two.

For N = 1 time is constant (denoted by 1)

Otherwise:

time to mergesort N elements =

2 * (time to mergesort N/2 elements) +

time to merge two arrays of N/2 elements each


Recurrence Relation

Time to merge two arrays each N/2

elements is linear, i.e. O(N)

Thus we have:

(a) T(1) = 1

(b) T(N) = 2T(N/2) + N


Solving the Recurrence Relation

T(N) = 2T(N/2) + N

divide by N:

(1) T(N) / N = T(N/2) / (N/2) + 1

Telescoping: N is a power of two, so we can write

(2) T(N/2) / (N/2) = T(N/4) / (N/4) + 1

(3) T(N/4) / (N/4) = T(N/8) / (N/8) + 1

...

T(2) / 2 = T(1) / 1 + 1


Adding the Equations

The sum of the left-hand sides will be equal to

the sum of the right-hand sides:

T(N) / N + T(N/2) / (N/2) + T(N/4) / (N/4) + ... + T(2) / 2 =

T(N/2) / (N/2) + T(N/4) / (N/4) + ... + T(2) / 2 + T(1) / 1 + logN

(logN is the sum of the 1's on the right-hand sides: there are logN equations)


Crossing Equal Terms, Final Formula

After crossing the equal terms, we get

T(N)/N = T(1)/1 + LogN

T(1) is 1, hence we obtain

T(N) = N + NlogN = O(NlogN)

Hence the complexity of the Merge Sort algorithm is O(NlogN).
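As a sanity check on the derivation, the recurrence T(1) = 1, T(N) = 2T(N/2) + N can be evaluated directly and compared with the closed form N + NlogN (logarithm base 2). The sketch below is an illustration only; the class and method names are assumptions and do not come from the slides.

```java
// Hypothetical check, not from the slides: evaluates the recurrence directly.
final class MergeSortRecurrence {
    // T(1) = 1, T(N) = 2T(N/2) + N, for N a power of two.
    static long t(long n) {
        return (n == 1) ? 1 : 2 * t(n / 2) + n;
    }

    // Closed form N + N*log2(N), for N a power of two.
    static long closedForm(long n) {
        int log2 = Long.numberOfTrailingZeros(n);   // exact log2 for powers of two
        return n + n * log2;
    }

    public static void main(String[] args) {
        for (long n = 1; n <= 1024; n *= 2) {
            System.out.println(n + ": " + t(n) + " vs " + closedForm(n));
        }
    }
}
```

For every power of two the two values agree, for example T(4) = 2 * T(2) + 4 = 2 * 4 + 4 = 12 and 4 + 4 * 2 = 12.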


Quick Sort

Basic Idea

Code

Analysis

Advantages and Disadvantages

Applications

Comparison with Heap sort and Merge

sort

http://math.hws.edu/TMCM/java/xSortLab/


Basic Idea

Pick one element in the array, which will be

the pivot.

Make one pass through the array, called a

partition step, re-arranging the entries so

that:

• entries smaller than the pivot are to the left of

the pivot.

• entries larger than the pivot are to the right


Basic Idea

Recursively apply quicksort to the part of

the array that is to the left of the pivot,

and to the part on its right.

No merge step is needed: at the end all the elements are in the proper order.


Choosing the Pivot

Some fixed element: e.g. the first, the

last, the one in the middle.

Bad choice - it may turn out to be the smallest or the largest element, and then one of the partitions will be empty.

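Randomly chosen (by a random-number generator) - unlikely to be consistently bad, but still not ideal: generating random numbers is costly and an unlucky pivot remains possible.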


Choosing the Pivot

The median of the array

(if the array has N numbers, the median is the ⌈N/2⌉-th largest number).

This is expensive to compute, so it slows the sort down.


Choosing the Pivot

The median-of-three choice:

take the first, the last and the middle

element.

Choose the median of these three

elements.


Find the Pivot – Java Code

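// Assumes a helper swap(a, i, j) that exchanges a[i] and a[j];
// Java cannot swap two array elements passed by value.
int median3(int[] a, int left, int right)
{
    int center = (left + right) / 2;
    // Order the three so that a[left] <= a[center] <= a[right]
    if (a[left] > a[center]) swap(a, left, center);
    if (a[center] > a[right]) swap(a, center, right);
    if (a[left] > a[center]) swap(a, left, center);
    swap(a, center, right - 1);   // park the pivot at position right - 1
    return a[right - 1];
}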


Quick Sort – Java Code

if (left + 10 <= right) {
    // do quick sort
} else {
    insertionSort(a, left, right);   // small subarrays are handled by insertion sort
}


Quick Sort – Java Code

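{
    int pivot = median3(a, left, right);   // pivot now sits at a[right - 1]
    int i = left, j = right - 1;
    for ( ; ; ) {
        while (a[++i] < pivot) { }   // skip entries smaller than the pivot
        while (pivot < a[--j]) { }   // skip entries larger than the pivot
        if (i < j) swap(a, i, j);
        else break;
    }
    swap(a, i, right - 1);           // move the pivot to its final position
    quickSort(a, left, i - 1);
    quickSort(a, i + 1, right);
}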
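Putting the fragments from the slides together gives the following runnable sketch. The class name QuickSortSketch, the CUTOFF constant, and the bodies of swap and insertionSort are assumptions added for illustration; the slides name these helpers but do not show them.

```java
import java.util.Arrays;

// Hypothetical class assembling the slide fragments; swap and insertionSort are assumed helpers.
final class QuickSortSketch {
    static final int CUTOFF = 10;   // below this size, fall back to insertion sort

    static void swap(int[] a, int i, int j) {
        int t = a[i]; a[i] = a[j]; a[j] = t;
    }

    // Orders a[left], a[center], a[right], then parks the median at right - 1.
    static int median3(int[] a, int left, int right) {
        int center = (left + right) / 2;
        if (a[left] > a[center]) swap(a, left, center);
        if (a[center] > a[right]) swap(a, center, right);
        if (a[left] > a[center]) swap(a, left, center);
        swap(a, center, right - 1);
        return a[right - 1];
    }

    static void insertionSort(int[] a, int left, int right) {
        for (int p = left + 1; p <= right; p++) {
            int tmp = a[p];
            int j = p;
            while (j > left && a[j - 1] > tmp) {
                a[j] = a[j - 1];
                j--;
            }
            a[j] = tmp;
        }
    }

    static void quickSort(int[] a, int left, int right) {
        if (left + CUTOFF <= right) {
            int pivot = median3(a, left, right);
            int i = left, j = right - 1;
            for (;;) {
                while (a[++i] < pivot) { }   // a[right - 1] == pivot stops this scan
                while (pivot < a[--j]) { }   // a[left] <= pivot stops this scan
                if (i < j) swap(a, i, j);
                else break;
            }
            swap(a, i, right - 1);           // restore the pivot
            quickSort(a, left, i - 1);
            quickSort(a, i + 1, right);
        } else {
            insertionSort(a, left, right);
        }
    }

    public static void main(String[] args) {
        int[] data = {8, 1, 4, 9, 0, 3, 5, 2, 7, 6, 12, 11, 10, 15, 14, 13};
        quickSort(data, 0, data.length - 1);
        System.out.println(Arrays.toString(data));
    }
}
```

The median-of-three step leaves a[left] no larger than the pivot and the pivot itself at a[right - 1], so both inner scans stop without explicit bounds checks.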


Implementation Notes

Compare the two versions:

A.
    while (a[++i] < pivot) { }
    while (pivot < a[--j]) { }
    if (i < j) swap(a, i, j);
    else break;

B.
    while (a[i] < pivot) { i++; }
    while (pivot < a[j]) { j--; }
    if (i < j) swap(a, i, j);
    else break;


Implementation Notes

If all the elements of the array are equal, version B never increments i or decrements j, so it swaps forever: i and j never cross.

Version A always advances i and j before comparing, so they do cross and the loop ends.
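The difference between the two versions can be observed with a small experiment on an array of equal keys. The sketch below is an illustration, not code from the slides: the class EqualKeysDemo, the iteration cap, and the convention of returning -1 when the cap is reached are all assumptions. The whole array stands for the range from left to right - 1, with the pivot value in the last cell.

```java
// Hypothetical experiment, not from the slides: one partition pass with an iteration cap.
final class EqualKeysDemo {
    static void swap(int[] a, int i, int j) {
        int t = a[i]; a[i] = a[j]; a[j] = t;
    }

    // Version A (pre-increment/pre-decrement).
    // Returns the number of loop iterations, or -1 if the cap is reached.
    static int versionA(int[] a, int pivot, int cap) {
        int i = 0, j = a.length - 1;
        for (int steps = 1; steps <= cap; steps++) {
            while (a[++i] < pivot) { }
            while (pivot < a[--j]) { }
            if (i < j) swap(a, i, j);
            else return steps;
        }
        return -1;
    }

    // Version B (increment/decrement only inside the scan loops).
    // Returns the number of loop iterations, or -1 if the cap is reached.
    static int versionB(int[] a, int pivot, int cap) {
        int i = 1, j = a.length - 2;
        for (int steps = 1; steps <= cap; steps++) {
            while (a[i] < pivot) { i++; }
            while (pivot < a[j]) { j--; }
            if (i < j) swap(a, i, j);   // with equal keys, i and j never move
            else return steps;
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] equal = {7, 7, 7, 7, 7, 7, 7, 7};
        System.out.println("A: " + versionA(equal.clone(), 7, 1000));
        System.out.println("B: " + versionB(equal.clone(), 7, 1000));
    }
}
```

On equal keys version A finishes after a handful of iterations, while version B only stops because of the cap, which matches the infinite-swap behaviour described above.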


Complexity of Quick Sort

Average-case O(NlogN)

Worst-case O(N^2)

This happens when the pivot is the

smallest (or the largest) element.

Then one of the partitions is empty,

and we repeat recursively the

procedure for N-1 elements.


Complexity of Quick Sort

Best-case O(NlogN)

The pivot is the median of the array,

the left and the right parts have same

size.

There are logN levels of partitioning, and at each level we do about N comparisons (and no more than N/2 swaps). Hence the complexity is O(NlogN).


Analysis

T(N) = T(i) + T(N - i -1) + cN

The time to sort the file is equal to

the time to sort the left partition

with i elements, plus

the time to sort the right partition with

N-i-1 elements, plus

the time to build the partitions.


Worst-Case Analysis

The pivot is the smallest (or the largest) element

T(N) = T(N-1) + cN, N > 1

Telescoping:

T(N-1) = T(N-2) + c(N-1)

T(N-2) = T(N-3) + c(N-2)

T(N-3) = T(N-4) + c(N-3)

…………...

T(2) = T(1) + c*2


Worst-Case Analysis

T(N) + T(N-1) + T(N-2) + ... + T(2) =

T(N-1) + T(N-2) + ... + T(2) + T(1) + c*N + c*(N-1) + c*(N-2) + ... + c*2

After crossing the equal terms:

T(N) = T(1) + c * (the sum of 2 through N)

= T(1) + c * (N(N+1)/2 - 1) = O(N^2)
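The quadratic behaviour is easy to observe by counting comparisons. The sketch below is an illustration under stated assumptions: it always takes the first element as the pivot and uses a simple single-scan partition, which is not the partition code from the slides, and the class name WorstCaseCount is hypothetical. On already sorted input the pivot is always the smallest element, so each call removes only one element.

```java
// Hypothetical experiment, not from the slides: counts comparisons in a worst case.
final class WorstCaseCount {
    static long comparisons;

    static void swap(int[] a, int i, int j) {
        int t = a[i]; a[i] = a[j]; a[j] = t;
    }

    // Quicksort with the first element as pivot (a deliberately poor choice).
    static void quickSortFirstPivot(int[] a, int left, int right) {
        if (left >= right) return;
        int pivot = a[left];
        int last = left;                 // a[left+1..last] holds entries smaller than the pivot
        for (int k = left + 1; k <= right; k++) {
            comparisons++;
            if (a[k] < pivot) swap(a, ++last, k);
        }
        swap(a, left, last);             // put the pivot in its final position
        quickSortFirstPivot(a, left, last - 1);
        quickSortFirstPivot(a, last + 1, right);
    }

    public static void main(String[] args) {
        int n = 500;
        int[] sorted = new int[n];
        for (int k = 0; k < n; k++) sorted[k] = k;
        comparisons = 0;
        quickSortFirstPivot(sorted, 0, n - 1);
        System.out.println(comparisons + " comparisons, N(N-1)/2 = " + (long) n * (n - 1) / 2);
    }
}
```

With this partition scheme a call on m elements makes m - 1 comparisons, so sorted input costs (N-1) + (N-2) + ... + 1 = N(N-1)/2 comparisons, in line with the O(N^2) bound.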


Best-Case Analysis

The pivot is in the middle

T(N) = 2T(N/2) + cN

Divide by N:

T(N) / N = T(N/2) / (N/2) + c


Best-Case Analysis

Telescoping:

T(N) / N = T(N/2) / (N/2) + c

T(N/2) / (N/2) = T(N/4) / (N/4) + c

T(N/4) / (N/4) = T(N/8) / (N/8) + c

...

T(2) / 2 = T(1) / 1 + c


Best-Case Analysis

Add all equations:

T(N) / N + T(N/2) / (N/2) + T(N/4) / (N/4) + ... + T(2) / 2 =

T(N/2) / (N/2) + T(N/4) / (N/4) + ... + T(1) / 1 + c*logN

After crossing the equal terms:

T(N) / N = T(1) + c*logN

T(N) = N + c*N*logN = O(NlogN)   (taking T(1) = 1)


Advantages and Disadvantages

Advantages:

One of the fastest algorithms on average

Does not need an additional array (the sorting takes place in the array itself - this is called in-place processing)

Disadvantages:

The worst-case complexity is O(N^2)


Applications

Commercial applications

QuickSort generally runs fast

No additional memory

The above advantages compensate for the rare occasions when it runs in O(N^2) time


Applications

Warning:

Never use quick sort in applications that require a guaranteed response time, unless you assume the worst-case response time:

Life-critical (medical monitoring, life support in aircraft and spacecraft)

Mission-critical (industrial monitoring and control in handling dangerous materials, control for aircraft, defense, etc.)


Comparison with Heap Sort

Both run in O(NlogN) time on average; heap sort also guarantees it in the worst case

Quick sort usually runs faster (it does not have to maintain a heap)

The speed of quick sort is not guaranteed


Comparison with Merge Sort

Merge sort guarantees O(NlogN) time

Merge sort requires additional memory of size N

Merge sort is usually not used for main-memory sorting, only for external-memory sorting
