Conference Log

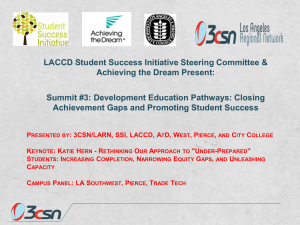
